Yep, I really don't need a managed switch as unmanaged are cheaper. But it seems the more 10Gb ports a switch has, the more likely it will be managed.

The switch market vendors are trying pretty hard to keep the "all 10GbE" switches close to the $100/port level (in the $80-110 range).

2.5 and 5 GbE are the primary "SOHO" target, where they'll let cost per port sag as volume buying increases, while keeping 10GbE port prices sticky. (The lower the volume at the bottom edge of the 10GbE switch market, the lower the pressure to come up with more affordable ones.)

By the time the 1GbE-T standard got as old as the 10GbE-T standard is now, there had been some sag in $/port costs. It just isn't happening for 10GbE. That is partially why the standards folks looped back and did the 2.5/5 GbE stopgap standards. Lots of end users have gotten price anchored on relatively super cheap 1GbE switches, and it is really hard to get lots of people to 'trade up' on the core network. (That, and Wi-Fi is soaking up more traffic and infrastructure spend: router costs are up, etc.)

Actually, I haven't yet laid the wire. My last house, I ran a pair of Cat5e's (plus a coax) to each room so I could have one for voice and one for data (most rooms in that house didn't have a phone line). My "new" home has phone lines in every room so I was just planning to run a single Cat8, but perhaps I should run a pair just to give me more options. Though with 9 identified distinct locations where I want wired network access, that adds up to 18 lines if I run pairs to every access point. I also will build a stand-alone workshop/garage about a hundred feet away from the house, which I will lay Cat6A to (don't need 10Gb speeds there but want to put in the fastest wire I can now).

If you are not fixed on the wiring yet, then a "pair" of wires to each room with a 10GbE client would reduce the switch costs.

Instead of a "phone"/"voice" segregated network, it would be a "high speed storage network". While a phone/voice line might end up in any random room in a house, a very high speed storage subnet probably would not.

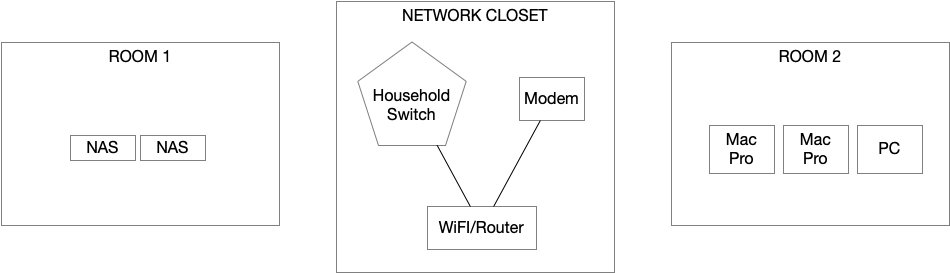

The storage network could be run on a completely different IP address subnet. For example:

192.168.2.xx for the normal, generic household LAN/Internet

192.168.4.xx no direct path to Internet and only intra-household storage to client connections
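As a rough sketch of that two-subnet split, Python's standard `ipaddress` module can sanity check an address plan before anything gets typed into a NAS admin page. The /24 masks, the host names, and the specific host addresses below are assumptions for illustration only; the plan above only fixes the 192.168.2.xx and 192.168.4.xx prefixes.

```python
import ipaddress

# Assumed /24 masks; only the two prefixes come from the plan above.
household = ipaddress.ip_network("192.168.2.0/24")  # normal LAN, routed to Internet
storage = ipaddress.ip_network("192.168.4.0/24")    # storage only, no Internet path

# Hypothetical static assignments for the few permanent storage hosts.
static_hosts = {
    "nas-1": ipaddress.ip_address("192.168.4.10"),
    "nas-2": ipaddress.ip_address("192.168.4.11"),
    "mac-pro-port1": ipaddress.ip_address("192.168.4.20"),
}


def subnet_of(addr):
    """Return which of the two networks an address belongs to."""
    if addr in storage:
        return "storage"
    if addr in household:
        return "household"
    return "unknown"


# The two networks must not overlap, or traffic could end up on the wrong wire.
assert not household.overlaps(storage)

for name, addr in static_hosts.items():
    print(f"{name}: {addr} -> {subnet_of(addr)}")
```

Since the storage side has so few permanent hosts, a small hand-maintained table like `static_hosts` is probably all the "address management" it needs.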

NAS Room: 4-5 port 10GbE switch. One connection to each NAS's 10GbE port (go to the NAS admin page and give it a static ....4.xx address). One (or two) cables out to the room with the multiple higher end computers.

Use the 1GbE (or 2.5GbE) ports on the NAS boxes to run out to the household switch.

Computer Room: 10GbE cable to Mac Pro port 1 (again, can just statically assign an address, since it is non-mobile, permanent, and on a network with a low population).

Mac Pro port 2: 1GbE cable from the room switch connected to the 1GbE wall port.

A three-drive striped RAID HDD setup is a pretty good match for 5Gb/s (625MB/s) of data over 10GbE. That gives the Mac Pro pretty quick access to HDDs in another room, from either one of the NAS boxes that can keep up.
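The arithmetic behind that match is simple enough to sketch. 5 Gb/s works out to 625 MB/s, and a three-drive stripe lands close to that if each drive sustains about 200 MB/s. That per-drive figure is an assumption for illustration (it varies by drive and by where on the platter the data sits), not a number from this thread.

```python
def gbps_to_mbytes_per_s(gbps):
    """Convert a link rate in Gb/s to MB/s (decimal units, 8 bits per byte)."""
    return gbps * 1000 / 8


PER_DRIVE_MB_S = 200  # assumed sustained sequential rate per HDD
STRIPE_WIDTH = 3      # three drives striped together

stripe_mb_s = PER_DRIVE_MB_S * STRIPE_WIDTH
half_link_mb_s = gbps_to_mbytes_per_s(5)
full_link_mb_s = gbps_to_mbytes_per_s(10)

print(f"3-drive stripe : {stripe_mb_s} MB/s")
print(f"5 Gb/s of link : {half_link_mb_s:.0f} MB/s")
print(f"10GbE raw rate : {full_link_mb_s:.0f} MB/s")
print(f"stripe uses {stripe_mb_s / full_link_mb_s:.0%} of the 10GbE link")
```

Under those assumptions the stripe fills roughly half of a 10GbE link, so the wire is not the bottleneck and there is headroom left if the drives get faster.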

If you are only doing a connection between the NAS room and the computer room, that is just one "extra" wire between rooms; nowhere near 5-9 more rooms/wires. And your switch overhead cost drops by several hundred dollars. That one wire is way, way, way cheaper than an 8 port 10GbE switch.
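A back-of-the-envelope version of that cost comparison, using the roughly $100/port figure from earlier in this post. The $30 for the one extra cable run is a made-up placeholder, and real switch prices will not be exactly linear in port count, so treat this as a sketch and not a quote.

```python
COST_PER_10GBE_PORT = 100  # middle of the rough $80-110 per-port range above
EXTRA_CABLE_RUN = 30       # hypothetical cost of one extra wire between rooms


def switch_cost(ports, per_port=COST_PER_10GBE_PORT):
    """Estimate switch cost as ports times a flat per-port price."""
    return ports * per_port


# Option A: one big 10GbE switch feeding every room.
whole_house = switch_cost(8)

# Option B: a small 10GbE switch in the NAS room plus one extra wire.
storage_only = switch_cost(5) + EXTRA_CABLE_RUN

savings = whole_house - storage_only
print(f"8-port 10GbE switch        : ${whole_house}")
print(f"5-port switch + extra wire : ${storage_only}")
print(f"difference                 : ${savings}")
```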

This way, if 4-5 folks want to stream 4K video off of the NAS servers, that has zero impact on the Mac Pro using the NAS box as a distant external drive (presuming the NAS drives can keep up with that concurrent workload).

If all the Mac Pro is using a 20Mb/s Internet connection for is email and moderate web browsing, then that would be just fine for a large number of tasks. So even if you threw both 10GbE ports at purely local storage networks, you would still have offloaded lots of bandwidth from the Wi-Fi.

Similarly, if the NAS is going to have several concurrent clients reading/writing data, then you really don't want to put all of the NAS client access onto just one port (often even if it is a 10GbE one). That is why the mid-high level NAS comes with an internal switch and multiple ports.