Well, since late 2022, AI-generated art has become sensational and revolutionary, because you can create high-quality images and paintings from just a few prompts. I have been using AUTOMATIC1111's Stable Diffusion web UI with a lot of models. Yeah, Midjourney is another good service, but so far, the web UI with Stable Diffusion is the best.

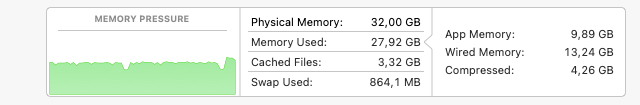

AI-generated art is extremely GPU- and RAM-intensive. Even my M1 Max reached 100 °C, had loud fan noise, and consumed a lot of power. Both GPU and RAM usage hit 100% while I generated images at 512x768 resolution. An Nvidia GPU with a lot of VRAM is highly recommended for creating images. AMD GPUs are a poor choice for this. Just nope. How about an Apple Silicon Mac? Well, it works on the M1 Max, but you really want more than 64GB of unified memory; even with 64GB, memory maxed out while making a single 512x768 image. It would be nice to have a Mac Pro with a lot of memory, but the Nvidia RTX 4090 is the better deal for its price.

Installation on Apple Silicon, AUTOMATIC1111/stable-diffusion-webui (github.com)

My M1 Max MacBook Pro (32 GPU cores, 64GB of RAM) took 10 min 40 sec, while an RTX 4090 took only 10 sec to create 4 images at once. An RTX 3090 took 16 sec with the same settings, which isn't bad.
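
Put per image, the gap is even easier to see. The quick Python sketch below only redoes the arithmetic on the timings above (4 images per run); the dictionary labels are my own shorthand, not official benchmark names.

```python
# Total wall-clock time (seconds) to generate one batch of 4 images,
# using the numbers reported in the post.
BATCH_SIZE = 4
BASELINE = "M1 Max (32-core GPU, 64GB)"
TIMINGS_SECONDS = {
    BASELINE: 10 * 60 + 40,  # 10 min 40 sec
    "RTX 4090": 10,
    "RTX 3090": 16,
}


def summarize(timings, batch_size, baseline):
    """Return {name: (seconds per image, speedup vs. baseline)}."""
    base = timings[baseline]
    return {
        name: (total / batch_size, base / total)
        for name, total in timings.items()
    }


if __name__ == "__main__":
    results = summarize(TIMINGS_SECONDS, BATCH_SIZE, BASELINE)
    for name, (per_image, speedup) in results.items():
        print(f"{name}: {per_image:.1f} s/image, {speedup:.0f}x vs. M1 Max")
```

That works out to about 160 s per image on the M1 Max versus 2.5 s on the RTX 4090 (roughly 64x faster) and 4 s on the RTX 3090 (roughly 40x faster).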

So far, the M1 Max is just fine, but I have to say it's really slow to create even one image, especially if you increase the resolution, sampling steps, upscaler, and so on. 64GB of RAM? Well, it's not that useful, because the memory bandwidth isn't fast enough. Still, in my experience 64GB of RAM is about the minimum for using Stable Diffusion comfortably; with less, it is very difficult to generate images unless you reduce memory usage with some tweaks. More GPU cores mean faster generation.
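
Why does resolution hurt so much? Here is a rough back-of-the-envelope sketch, assuming a Stable Diffusion 1.x-style model: the VAE shrinks each side of the image by 8, so a 512x768 image becomes a 64x96 latent, and self-attention at that level compares every latent position with every other one. The attention score matrix therefore grows with the square of the pixel count. The 8 attention heads, fp16 values (2 bytes each), and a naive attention that keeps the whole matrix in memory are my assumptions, not measurements; memory-saving options such as attention slicing exist precisely to avoid holding it all at once.

```python
# Rough estimate of the memory needed for ONE self-attention score matrix
# at the highest-resolution level of a Stable Diffusion 1.x-style UNet.
# Assumptions (not measured): VAE downsampling factor 8, 8 attention heads,
# fp16 values (2 bytes), and naive attention that materializes the full matrix.
VAE_DOWNSAMPLE = 8
NUM_HEADS = 8
BYTES_FP16 = 2


def attention_scores_bytes(width, height, heads=NUM_HEADS,
                           bytes_per_value=BYTES_FP16, batch=1):
    """Bytes for a (batch, heads, tokens, tokens) attention score tensor."""
    # Each latent position becomes one token in the attention layer.
    tokens = (width // VAE_DOWNSAMPLE) * (height // VAE_DOWNSAMPLE)
    return batch * heads * tokens * tokens * bytes_per_value


if __name__ == "__main__":
    for w, h in [(512, 512), (512, 768), (1024, 1024)]:
        mib = attention_scores_bytes(w, h) / 2**20
        print(f"{w}x{h}: {mib:,.0f} MiB per attention score matrix")
```

Under these assumptions, one score matrix is 256 MiB at 512x512, 576 MiB at 512x768, and 4,096 MiB at 1024x1024, and that is before counting the model weights, other activations, or an upscaler.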

You can also rent cloud compute for this, which might be a much better deal than buying a new computer. I still need to check which services fit my needs and work with a Mac and the web UI.

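Stable Diffusion with Core ML on Apple Silicon (machinelearning.apple.com): Apple's release of Core ML optimizations for Stable Diffusion in macOS 13.1 and iOS 16.2, along with code to get started.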

There is an article from Apple's machine learning team about Core ML for Stable Diffusion. I'm not sure whether AUTOMATIC1111's web UI takes advantage of these Apple Silicon optimizations, but it would be nice if Apple Silicon performed better. For now, if you're running locally, Nvidia is the best bet, unless the M1 Ultra proves to be as powerful as an RTX 3090.