At last year's I/O, Google presented Project Soli.

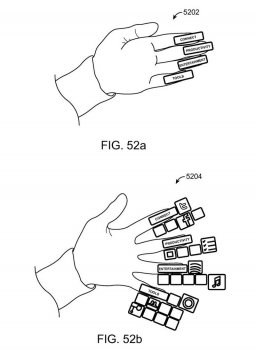

It's a miniaturized radar that fits in a smartwatch. Soli precisely detects micro gestures, whether your fingers are moving against each other or just moving in the air.

It then translates them into 1:1 manipulation of 3D virtual objects.

Although the current iteration of Apple Watch is still in its infancy, I'm a big supporter of wearables and I see them as the future of the Apple ecosystem.

Mainly, I believe in deep integration between Apple Watch and the future augmented reality Apple Glasses. (Of course, AirPods will also be important in this ecosystem.)

As a concept, Apple Watch would be your hub for analytical body tracking, wearable notifications, quick texts, calling, Apple Pay and Siri (which you talk to through your AirPods).

AW would exist as a physical object that keeps you continually connected to the other world: the impalpable, augmented world that only pops into existence when you wear your Apple Glasses, which are deeply integrated with AW.

You would take your Apple Glasses out of your shirt pocket when you want to do some real work, watch a movie, type a document (since they could project a keyboard onto any surface), play, design, or do whatever else you currently do on your iPhone/iPad and can't do on the AW.

I started by talking about Project Soli because it seems like the perfect way to create a tasteful gesture-based human interface.

At the same time, Apple Glasses could track AW precisely enough to augment it, showing information that floats around the watch and overcomes the limited real estate of its small screen. A persistent central hub could float around your wrist, letting you select different options, definitions and interactions, while the content itself is presented anchored to real surfaces, floating in the air, or projected into the real world as a virtual object.

It would be a very interesting symbiosis between the real world and the virtual one.

What do you guys think?
