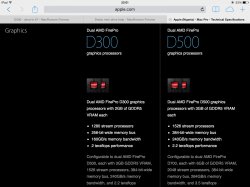

MacVidCards believes it's a 7870 XT (he references an earlier post where he demonstrates why, but I don't have the link; does anybody?)

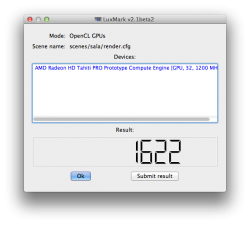

Architosh believes it's a W8000. I'm unclear on which consumer GPU that is equivalent to, but they firmly state it is Tahiti, while the 7870 above is Pitcairn, I believe.

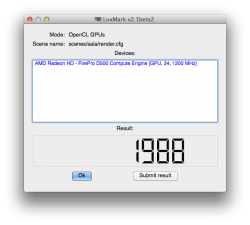

VirtualRain calls it out as a 7950 (chart link)

Which do you think it is?