So, faster machine and a rMBP or slower one by itself.

Tough one.

I have to admit: I had a little trouble deciding to post this, Dave. I've done business with you in the past and I'm quite happy with the product I've purchased. I've also recommended you and your services to other Mac Pro owners who didn't realize they had a much larger choice of GPUs for their rigs at the time.

I think you're pushing buttons and trolling here. I actually think you're smarter than you're letting on, but let's play along for a second.

Clearly, the old Mac Pro, with a pair of (now dated) X5690s, will be a CPU powerhouse. It'll be that powerhouse for applications that are properly threaded and don't spend their time constantly context switching. It'll also be a powerhouse for apps that don't need things like Intel's AVX technology; that'll never be available on the old Mac Pro, no matter how hard you try.

And yes, you can augment the power supply situation in the old beast so that you can power up two Titans or two 780 Ti GPUs. You'd do this assuming your application can take advantage of more than one GPU, and that it needs CUDA processing; note that for this discussion I'm flat-out ignoring games. I don't really care about gaming on a Mac, and I personally think that those who do are foolish.

With all of this assembled, you have a system that performs well today, with today's applications. It's a tactical decision. And potentially a good one.

I'm an architect by trade (and nature). Tactical thought is the antithesis of what I need to do for my day-to-day business. Strategic thought is more my thing, and the strategy here is: what app changes are coming in the future? Apple is clearly pushing to relegate nVidia's CUDA to an "Awww... isn't that cute!" place. OpenCL isn't there yet, today. Apps that have been CUDA-aware for a few years are going to take time to get themselves shifted to OpenCL. But several are already on their way.

Apple wants OpenCL to win, and right now, that means AMD is the choice for GPUs. Love it or hate it, nVidia just can't (or, more accurately: won't) get their OpenCL performance up to the same level. With all that time and money invested in CUDA, why would they?

Ignoring the GPUs for a second, the CPU is something else the old Mac Pro just can't update. It's stuck at the X5690 as the top chip and will never go beyond that. As more and more applications start tapping Intel's AVX to help speed things up a bit, the old Mac Pro's CPUs will seem less and less desirable. Final Cut Pro X has been using AVX since its inception. Adobe has hinted that Premiere Pro is, as well (though I have no proof of that).

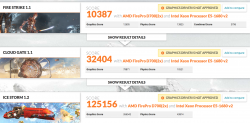

The new machine won't do as well in most benchmarks as the old Mac Pro will. And for the time being, there are a bunch of apps that might even run faster on the old Pro, assuming the owner has updated the GPU to something more modern. But it won't always be the case. Count on it.