A while back, people on various forums were asking: on the 2019 Mac Pro, does the bandwidth limitation of a Vega II Duo (two GPUs sharing a single x16 link) make any real-world difference compared with two solo Vega II cards, each with its own x16 of PCIe bandwidth?

It seemed like no one had really tested this, which is understandable given the cost, but I was curious. In theory, the Apple white paper says that two solo Vega II cards are better for video, but I could not find any real-world tests.

Obviously, a single Duo is preferable to two solo Vega II cards if you can get away with it, but only if performance is close.

I tried to test the heaviest video workloads I could. 3D rendering does not seem to have the same bandwidth limitations, so the tests are mostly on the video side.

Note that this is rendering and export only. Noise reduction or real-time color grading might reveal more, and I may try those.

I also took special care to run everything from NVMe drives, as R3D RAW and ProRes 4444 XQ can hit pretty high data rates and I did not want storage to be the bottleneck. All tests were done on a 28-core Mac Pro.

Here are some tests. I added dual W5700X too, and they are surprisingly close most of the time for less cost.

Let's start with a 20 min 6K R3D RAW clip in Resolve, going to ProRes 4444 XQ:

The Vega configs are basically within the margin of error of each other, so this is not enough to show any difference.

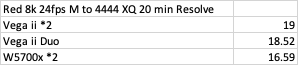

How about 8K R3D RAW to ProRes 4444 XQ? Once again very close, within the margin of error.

Maybe we need something heavier. Here is a 10 min 12K BRAW clip, to ProRes 4444 XQ. Once again, they are basically the same.

In the Resolve Candle test they are the same too, and likewise in Octane X.

Here is Final Cut Pro, 6K to ProRes 422 HQ: basically the same. Other Final Cut Pro tests lined up with the Resolve results as well, though Resolve is generally faster.

So it seems that, at least for playback and exporting/rendering, both configs are more or less equal.
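For anyone repeating these tests, "within the margin of error" can be made a bit more concrete by running each export a few times and comparing the gap between configs with the run-to-run spread. Below is a minimal Python sketch of that idea. The function name `within_margin`, the threshold `k`, and the timings in the usage lines are all made up for illustration; they are not the measurements from this post.

```python
from statistics import mean, stdev


def within_margin(runs_a, runs_b, k=2.0):
    """Return True if two sets of timings are indistinguishable.

    runs_a, runs_b: export times in seconds from repeated runs (at least
    two runs each, since stdev needs two or more samples).
    k: how many combined standard deviations count as "noise". The default
    of 2.0 is an arbitrary assumption, not a standard.
    """
    gap = abs(mean(runs_a) - mean(runs_b))
    # Combine the spread of both configs into one noise estimate.
    spread = (stdev(runs_a) ** 2 + stdev(runs_b) ** 2) ** 0.5
    return gap <= k * spread


# Placeholder timings in seconds (hypothetical, NOT results from this post).
duo_runs = [100.0, 102.0, 101.0]
dual_solo_runs = [101.0, 103.0, 100.0]
print(within_margin(duo_runs, dual_solo_runs))
```

With only one run per config there is no spread to compare against, so three or more runs per test are needed before a small gap can be called noise.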

Does anyone know what test might reveal this bandwidth difference? There must be something that does, even if it is very niche. Maybe real-time performance in color grading or noise reduction? So far everything is within the margin of error.

Or maybe nothing here can really saturate more than x8 worth of bandwidth per GPU, or something else is the bottleneck. Like I said, I used NVMe drives and kept an eye on their speeds, and the machine has 28 cores.
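To put rough numbers on the x8 versus x16 question, here is a back-of-the-envelope Python sketch. It assumes the 2019 Mac Pro slots are PCIe 3.0 (8 GT/s per lane with 128b/130b encoding) and, as above, treats the Duo as roughly x8 per GPU. The frame format in the usage lines (8K, 16 bytes per pixel, 24 fps) is a hypothetical worst case of uncompressed float frames crossing the bus, not something measured here. Real traffic is likely lower, since compressed sources can be decoded on the GPU.

```python
# Per-lane raw transfer rate in GT/s for each PCIe generation.
PCIE_GT_PER_S = {3: 8.0, 4: 16.0}
# PCIe 3.0 and 4.0 both use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def pcie_gb_per_s(lanes, gen=3):
    """Theoretical one-direction PCIe throughput in GB/s (before protocol overhead)."""
    return PCIE_GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes / 8


def frame_stream_gb_per_s(width, height, bytes_per_pixel, fps):
    """Data rate in GB/s of an uncompressed frame stream."""
    return width * height * bytes_per_pixel * fps / 1e9


# Link capacity: full x16 versus the roughly x8 share per GPU on a Duo.
print(f"x16: {pcie_gb_per_s(16):.2f} GB/s, x8: {pcie_gb_per_s(8):.2f} GB/s")

# Hypothetical worst case: 8K RGBA frames at 32-bit float per channel, 24 fps.
print(f"stream: {frame_stream_gb_per_s(8192, 4320, 16, 24):.2f} GB/s")
```

If the export times do not change between x8 and x16 per GPU, the actual bus traffic is presumably well below figures like these, which would be consistent with the results above.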

I have some 6900 XT numbers too, but you don't want to see those. They beat everything else, lol.
