@chris01b hey I was actually replying to area7's quote.

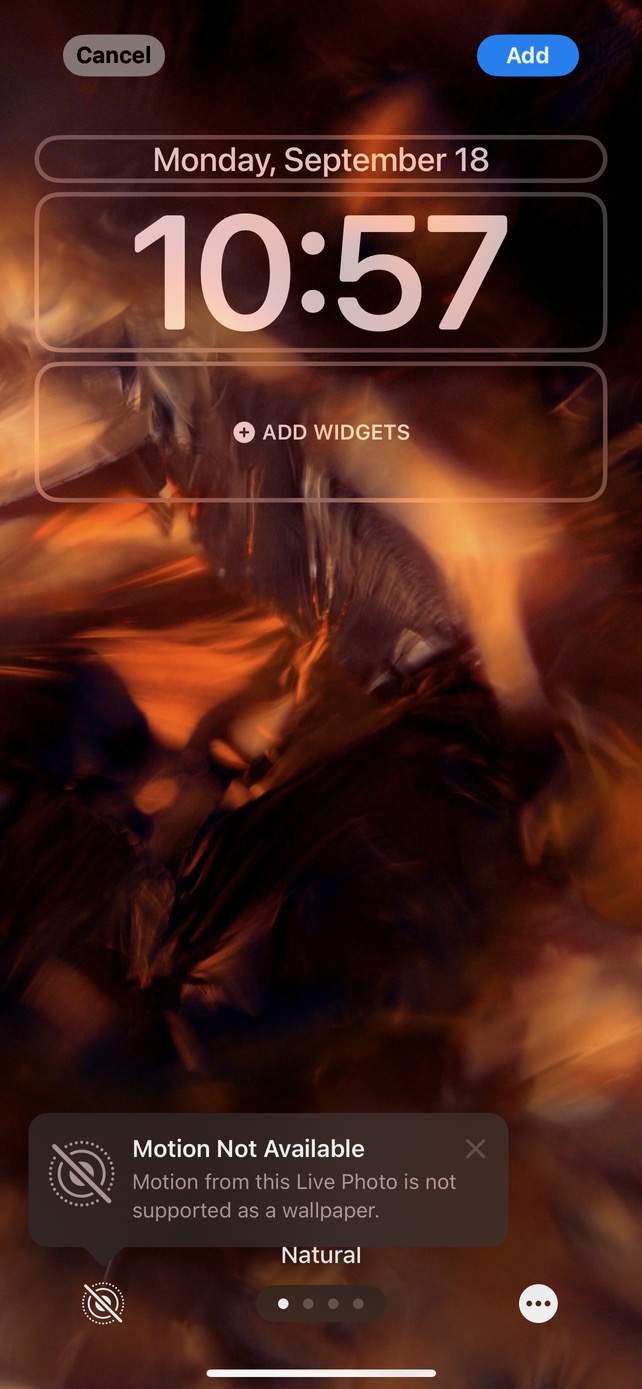

"You can hardcode them/add in metadata for your video but will not make that movie being accepted as valid motion."

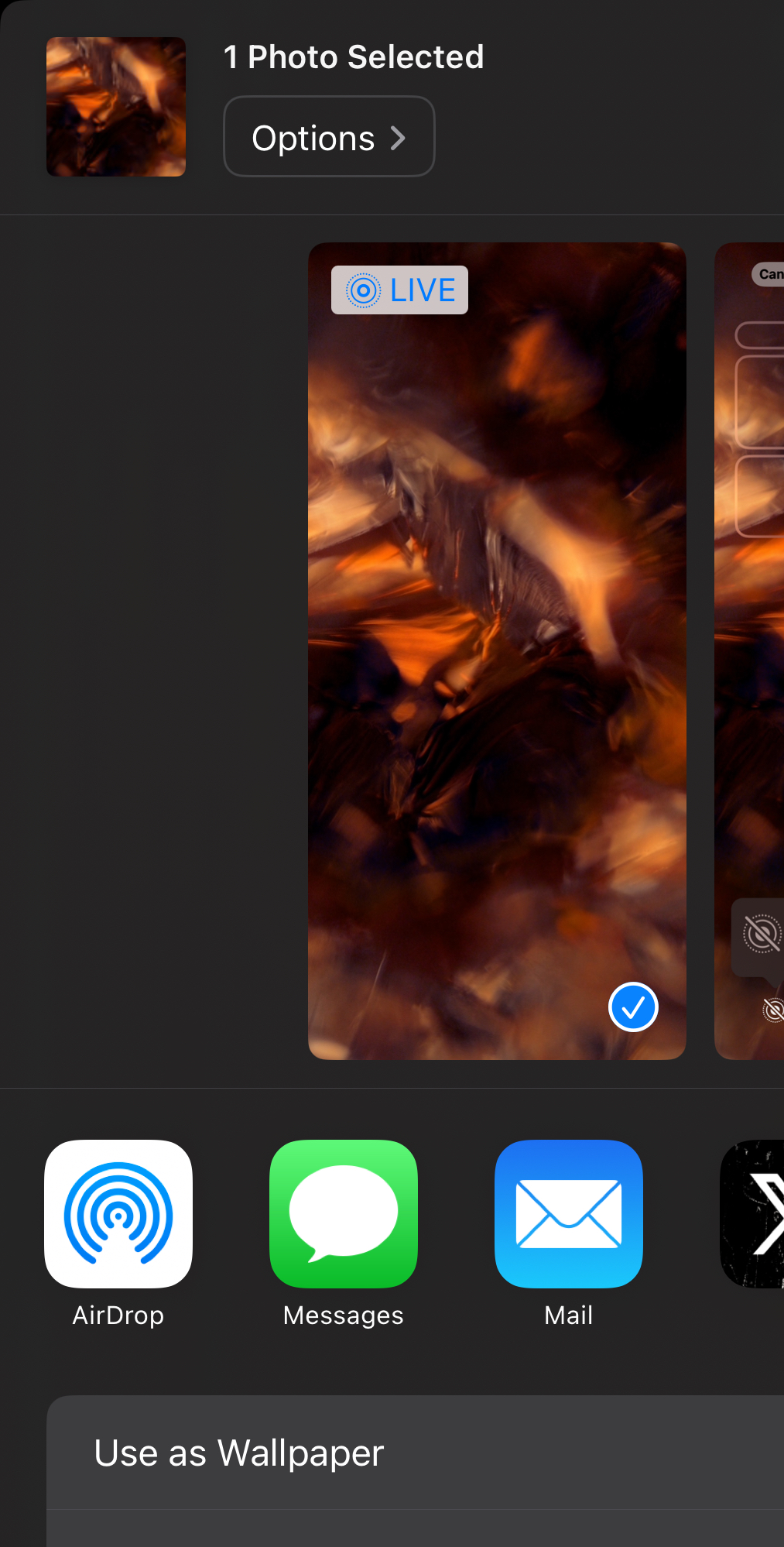

He sounded like he had already tried, so I wanted to see how he was adding the metadata. As for testing with PhotoKit: take a look at this file if you haven't already. That project uses PHAssetCreationRequest to save to the album as a Live Photo, and it still doesn't work.

That project you linked will not work as a live wallpaper on iOS 17, since it relies on the old pairing of JPG and MOV via the identifier alone. That is no longer enough, because other keys were added for the motion data.

Regarding adding hard-coded metadata, you can do it simply, e.g. by setting assetWriter?.metadata = [addone, addtwo, addthree, ... n]. You just iterate through an array of values [0, 0.02, 0.03, etc.] and add as many entries as needed to cover the video's total duration.
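To make the "iterate through an array of values" part concrete, here is a minimal sketch that only generates the `"<seconds> s"` strings for each sample. `SampleTiming`, `makeSampleTimings`, and the fixed `step` are hypothetical names and assumptions of mine, not something from the project, and whether iOS 17 accepts values generated this way is untested.

```swift
import Foundation

/// Hypothetical helper type: one (time, duration) string pair per metadata sample.
struct SampleTiming: Equatable {
    let time: String      // e.g. "0.25 s"
    let duration: String  // e.g. "0.25 s"
}

/// Splits `totalDuration` (seconds) into samples of `step` seconds each.
/// Assumption: a constant step; the last sample is shortened to fit.
func makeSampleTimings(totalDuration: Double, step: Double) -> [SampleTiming] {
    guard totalDuration > 0, step > 0 else { return [] }
    // Small tolerance so floating-point noise does not add an empty extra sample.
    let count = Int((totalDuration / step - 1e-9).rounded(.up))
    return (0..<count).map { index in
        let start = Double(index) * step
        let length = min(step, totalDuration - start)
        return SampleTiming(
            time: String(format: "%g s", start),
            duration: String(format: "%g s", length)
        )
    }
}
```

Each pair would then feed the "Sample Time" and "Sample Duration" values used in the functions that follow, instead of the hard-coded `"0 s"` and `"0.03 s"`.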

let addone = addSampleTime()

let addtwo = addSampleTime2()

let addthree = addLiveTime()

let Allmediatimed = "3 0.00823200028389692 16939217 155 5.94521753062658e-15 -6.44374300689783e-15 0.27380958199501 0.575768828392029 1.92900002002716 3.30649042129517 4 0 -1 0 0 0 0 0 0 0 0 0 9.80908925027372e-45 -0.17872442305088 3212927435 33811 49646"

private func addSampleTime() -> AVMetadataItem { // you could of course take a Float input (0.0 ... 0.99) here and format it as a String with an " s" suffix

let item = AVMutableMetadataItem()

let keyContentIdentifier = "Sample Time" // metadatakey quickTimeMetadataKeyContentIdentifier

let keySpaceQuickTimeMetadata = "mdta"

item.key = keyContentIdentifier as (NSCopying & NSObjectProtocol)?

item.keySpace = AVMetadataKeySpace(rawValue: keySpaceQuickTimeMetadata)

item.value = "0 s" as (NSCopying & NSObjectProtocol)?

item.dataType = "com.apple.metadata.datatype.UTF-8"

return item

}

private func addSampleTime2() -> AVMetadataItem { // you could of course take a Float input (0.0 ... 0.99) here and format it as a String with an " s" suffix

let item = AVMutableMetadataItem()

let keyContentIdentifier = "Sample Duration" // metadatakey quickTimeMetadataKeyContentIdentifier

let keySpaceQuickTimeMetadata = "mdta"

item.key = keyContentIdentifier as (NSCopying & NSObjectProtocol)?

item.keySpace = AVMetadataKeySpace(rawValue: keySpaceQuickTimeMetadata)

item.value = "0.03 s" as (NSCopying & NSObjectProtocol)?

item.dataType = "com.apple.metadata.datatype.UTF-8"

return item

}

private func addLiveTime() -> AVMetadataItem { // this one is repetitive: add it whenever you add the two keys above

let item = AVMutableMetadataItem()

let keyContentIdentifier = "Live Photo Info" // metadatakey quickTimeMetadataKeyContentIdentifier

let keySpaceQuickTimeMetadata = "mdta"

item.key = keyContentIdentifier as (NSCopying & NSObjectProtocol)?

item.keySpace = AVMetadataKeySpace(rawValue: keySpaceQuickTimeMetadata)

item.value = self.Allmediatimed as (NSCopying & NSObjectProtocol)?

item.dataType = "com.apple.metadata.datatype.UTF-8"

return item

}

The code can be simplified into a single function with an enum as the input type; I have limited time to work on this.
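A rough sketch of that simplification, assuming the same three keys, the same "mdta" key space, and the same UTF-8 data type as the functions above. `LivePhotoMetadataField` and `makeMetadataItem(for:)` are hypothetical names I made up for illustration, and the AVFoundation part is guarded so the enum can still be compiled on its own.

```swift
import Foundation
#if canImport(AVFoundation)
import AVFoundation
#endif

/// Hypothetical enum covering the three hard-coded keys used above.
enum LivePhotoMetadataField {
    case sampleTime(Double)      // seconds
    case sampleDuration(Double)  // seconds
    case livePhotoInfo(String)   // raw space-separated string, passed through as-is

    /// Key string, copied from the three functions above.
    var key: String {
        switch self {
        case .sampleTime: return "Sample Time"
        case .sampleDuration: return "Sample Duration"
        case .livePhotoInfo: return "Live Photo Info"
        }
    }

    /// Value string; times are formatted as "<seconds> s", like "0 s" and "0.03 s".
    var value: String {
        switch self {
        case .sampleTime(let seconds), .sampleDuration(let seconds):
            return String(format: "%g s", seconds)
        case .livePhotoInfo(let raw):
            return raw
        }
    }
}

#if canImport(AVFoundation)
/// Builds one metadata item the same way the three separate functions do.
func makeMetadataItem(for field: LivePhotoMetadataField) -> AVMetadataItem {
    let item = AVMutableMetadataItem()
    item.key = field.key as NSString
    item.keySpace = AVMetadataKeySpace(rawValue: "mdta")
    item.value = field.value as NSString
    item.dataType = "com.apple.metadata.datatype.UTF-8"
    return item
}
#endif
```

With this, `addSampleTime()` would become `makeMetadataItem(for: .sampleTime(0))`, and the other two functions follow the same pattern.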

exiftool will report them exactly as you hard-coded them, so there is no problem adding them to your asset metadata.