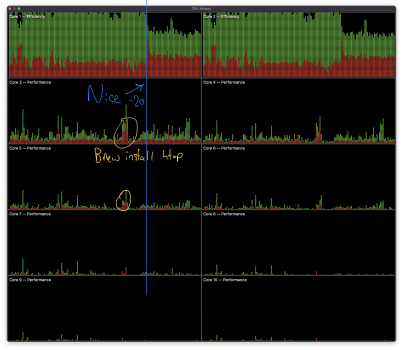

I've been super fascinated by how Apple is managing the efficiency cores. I've thrown some huge multi-core workloads at this thing and seen all 8 cores light up and happily blow through the task (very desktop-like performance). Mostly I see some feathering here and there on the first 4 (assuming these are the high-performance cores). Sometimes I'll see activity on the last 4. Interestingly, the Parallels Tech Preview runs all of the VM's activity on the efficiency cores. I'm trying to get some idea of how it decides what goes on the efficiency cores and what doesn't. I also get the sense these cores can scale up and operate at high power when needed.

Anyone with more background on what's going on behind the scenes? I'm somewhat familiar with Arm, but normally I've seen designs pick one set of cores over the other to run IoT devices. For example, Tegra using only its 4 main cores and ignoring the low-power cores.
