Thanks to a post by 666sheep who noticed this.

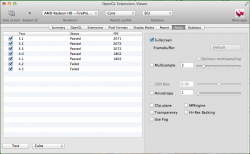

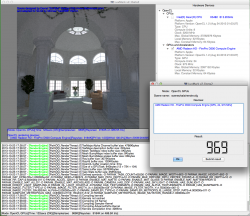

I dug out a 7870 I had put together with an EFI ROM, courtesy of Netkas' help.

As 666sheep had noted, it got ID'd as a FirePro D500.

So it's likely that the nMP (new Mac Pro) card will have the same core and a device ID very near this one.

Everyone wants to believe these workstation GPUs get special core chips made with Unicorn Hair. Sadly the Unicorn flew off, and now they are all made of the same silicon, on the same lines, with the same design as retail gamer chips. A few features are sometimes laser-snipped off, and sometimes they are just switched off by a hard-coded device ID.

I emphasize again: WORKSTATION GPU CHIPS ARE THE SAME DESIGN AS RETAIL GAMER CHIPS. The differences are created via laser cuts and device IDs, which are changed via tiny 10K or 40K resistors in various positions. I'm not saying there is anything wrong with this; it's how they pay for R&D on a chip once and sell different variants to different markets. Just don't believe that your $300 7970 is worth $3,300 because you placed a resistor differently. (Wink to Cupertino)

Especially on a Mac, where nobody is going to bother writing two different drivers.

Anyhow, the specs don't match perfectly, but there's no chance that this isn't a D500 core.

D300 is also mentioned in the drivers. Notice that D700 is right above Radeon 7950, just as in real life.

So maybe there is a corresponding list of device IDs in the driver.

But anyone with Mavericks installed can have a look.

/System/Library/Extensions/AMDRadeonX4000GLDriver.bundle

Then open it up and look inside at AMDRadeonX4000GLDriver:

- Radeon HD Tahiti XT Prototype
- Radeon HD - FirePro D700
- Radeon HD 7950
- Radeon HD - FirePro D500
- Radeon HD Aruba XT Prototype
- Radeon HD Aruba PRO Prototype
- Radeon HD Tahiti Unknown Prototype
- Radeon HD Pitcairn XT Prototype
- Radeon HD Pitcairn PRO Prototype
- Radeon HD - FirePro D300
- Radeon HD Wimbledon XT Prototype
- Radeon HD Neptune XT Prototype
- Radeon HD Pitcairn Unknown Prototype
- Radeon HD Verde XT Prototype
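If you'd rather not eyeball the binary in a hex editor, a few lines of Python can pull the model names out. This is only a rough sketch: `model_names` and `read_model_names` are helper names made up for illustration, and the search simply assumes the names sit in the file as plain ASCII text containing "Radeon HD". The path to the binary inside the bundle is up to you to supply.

```python
import re
from typing import List


def model_names(blob: bytes, marker: bytes = b"Radeon HD") -> List[str]:
    """Return the printable-ASCII runs in `blob` that contain `marker`.

    Runs are returned in the order they appear in the data, so the
    relative position of entries (e.g. D700 next to 7950) is preserved.
    """
    # A "run" here is 4 or more consecutive printable ASCII bytes.
    runs = re.findall(rb"[\x20-\x7e]{4,}", blob)
    return [run.decode("ascii") for run in runs if marker in run]


def read_model_names(path: str) -> List[str]:
    """Hypothetical convenience wrapper: read a file and scan its bytes."""
    with open(path, "rb") as handle:
        return model_names(handle.read())
```

Call `read_model_names(...)` with the path to the AMDRadeonX4000GLDriver binary and print the result, one name per line. If the names are stored back to back without separators, they will come out as one long run rather than one per entry.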

So, same kext runs them all.

Here is the list as it was in 10.8.5:

- Radeon HD Tahiti XT Prototype
- Radeon HD 7950
- Radeon HD Tahiti PRO Prototype
- Radeon HD Aruba XT Prototype
- Radeon HD Aruba PRO Prototype
- Radeon HD Tahiti Unknown Prototype
- Radeon HD Pitcairn XT Prototype
- Radeon HD Pitcairn PRO Prototype
- Radeon HD Wimbledon XT Prototype
- Radeon HD Neptune XT Prototype
- Radeon HD Pitcairn Unknown Prototype
- Radeon HD Verde XT Prototype
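The two name lists can be compared mechanically rather than by eye. A minimal Python sketch, assuming the names are pasted in as one run-together string (which is how a raw dump tends to come out) and that every entry starts with "Radeon HD ". The variable and function names are just for illustration.

```python
import re

# Name strings as found in the Mavericks (10.9) driver, run together.
MAVERICKS = (
    "Radeon HD Tahiti XT Prototype" "Radeon HD - FirePro D700"
    "Radeon HD 7950" "Radeon HD - FirePro D500"
    "Radeon HD Aruba XT Prototype" "Radeon HD Aruba PRO Prototype"
    "Radeon HD Tahiti Unknown Prototype" "Radeon HD Pitcairn XT Prototype"
    "Radeon HD Pitcairn PRO Prototype" "Radeon HD - FirePro D300"
    "Radeon HD Wimbledon XT Prototype" "Radeon HD Neptune XT Prototype"
    "Radeon HD Pitcairn Unknown Prototype" "Radeon HD Verde XT Prototype"
)

# Name strings as found in the 10.8.5 driver, run together.
ML_10_8_5 = (
    "Radeon HD Tahiti XT Prototype" "Radeon HD 7950"
    "Radeon HD Tahiti PRO Prototype" "Radeon HD Aruba XT Prototype"
    "Radeon HD Aruba PRO Prototype" "Radeon HD Tahiti Unknown Prototype"
    "Radeon HD Pitcairn XT Prototype" "Radeon HD Pitcairn PRO Prototype"
    "Radeon HD Wimbledon XT Prototype" "Radeon HD Neptune XT Prototype"
    "Radeon HD Pitcairn Unknown Prototype" "Radeon HD Verde XT Prototype"
)


def split_names(dump):
    """Split a run-together dump before each 'Radeon HD ' prefix."""
    return [name for name in re.split(r"(?=Radeon HD )", dump) if name]


new = split_names(MAVERICKS)
old = split_names(ML_10_8_5)

# Keep list order so positions in the driver stay visible.
added = [name for name in new if name not in old]
removed = [name for name in old if name not in new]

print("added:  ", added)
print("removed:", removed)
```

On these two lists it reports the three FirePro entries (D700, D500, D300) as added and "Radeon HD Tahiti PRO Prototype" as removed.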

The 7870 doesn't match the listed specs; it looks like they downclocked the RAM a bit while adding some more. Other numbers aren't an exact match either, but this is the core.

I can also tell you that the 7770 ISN'T the D300. And FWIW, it isn't the 6950 either. Anyone have a 6970 to check? Maybe a 7750. There are several other cards to test. One will be close enough to show up as D300.
