Hey everyone!

It is known that Netflix 4K support is limited to Kaby Lake processors, and that it only works in the Edge browser on Windows 10.

http://www.theverge.com/2016/11/21/13703152/netflix-4k-pc-windows-support

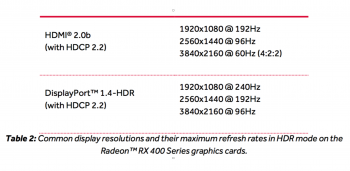

This all comes down to Kaby Lake having HDCP 2.2 DRM hardware inside the CPU, while the 2016 MacBook Pros run on Skylake, which has no HDCP 2.2.

However, the AMD GPUs in the 15" models do have HDCP 2.2, according to AMD.

Any idea if this means 15" MBPs might one day get Netflix 4K support without Kaby Lake, or will the machines never be able to because of the Skylake chips? That would make the 2016 MacBook Pro 15" obsolete quickly and not future-proof for 4K.

Has anyone tried installing Windows and playing Netflix 4K in the Edge browser on the 2016 15" MacBook Pro?

I would want to return it if it cannot do 4K with HDCP 2.2 protection.

For people saying "MacBook Pro 15" screen is not enough for 4K!", I mean plugging the MacBook into an HDCP 2.2 compliant 4K screen.

Thank you very much for answering, everyone!
