So I recall Phil mentioned that the new Mac Pro supports channel bonding on Thunderbolt 2.

I'm thinking, could Apple possibly allow users to interconnect, say, three Mac Pros over Thunderbolt so they communicate with one another and act as one coherent unit?

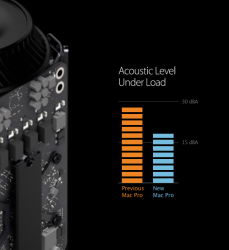

I think this would greatly increase the versatility of the new Mac Pro. Can you imagine the render performance of combining 6 powerful graphics cards, 3 processors, and terabytes of very fast solid state storage? Or maybe even more?! Even at maximum load, all these computers would still use only a fraction of the power of a regular server, with a noise level lower than the older Mac Pro's.

Perhaps I'm just dreaming, but this seems possible with the increased bandwidth of the six Thunderbolt 2 ports and the new Mac Pro's modular design.
