Just wondering what will be the real benefit of:

Amfeltec SKU-075-02

or

Amfeltec SKU-075-06

According to the manual:

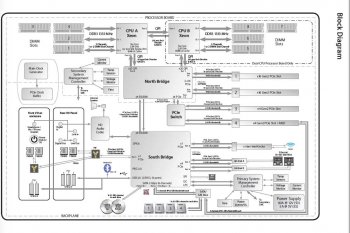

The “PCI / PCIe Expansion Backplane” (backplane) is a four 32-bit PCI and two x16 PCI express slot backplane (each x16 PCI Express connector has one PCI Express lane).

So let's think about 2 scenarios:

Amfeltec SKU-075-02

1. Using PCIe slot 2 as an expansion:

What will be the speed difference between 2 identical video cards, one installed in PCIe 1 on the backplane and the other in the PCIe x16 slot on the expansion chassis?

With 2 HighPoint 7101A cards installed in the same manner, what will be the speed and throughput difference with 4 NVMe drives installed?

Amfeltec SKU-075-06

What will be the speed and throughput difference for the video cards and NVMe drives installed as above, but connected through the mini PCIe x1 (1 lane)? The mini PCIe x1 link has the same width (one lane) as the x16 slots on the expansion board.
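To put rough numbers on the question, here is a minimal sketch of the theoretical link bandwidth for a one-lane connection versus a full x16 slot. The PCIe generation the backplane actually negotiates is not stated in the manual excerpt above, so the script simply lists generations 1 to 3 as assumptions; the function name `link_bandwidth_mb_s` is made up for this example, and the figures are raw line-rate limits before protocol overhead, not measured results.

```python
# Theoretical PCIe link bandwidth per direction, before protocol overhead.
# Assumption: the backplane's real PCIe generation is unknown, so
# generations 1 to 3 are listed for comparison.

# generation -> (transfer rate in GT/s per lane, line-encoding efficiency)
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),     # 8b/10b encoding
    2: (5.0, 8 / 10),     # 8b/10b encoding
    3: (8.0, 128 / 130),  # 128b/130b encoding
}


def link_bandwidth_mb_s(generation: int, lanes: int) -> float:
    """Return the theoretical one-direction bandwidth of a link in MB/s."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    # GT/s * efficiency = usable Gbit/s per lane; / 8 = GB/s; * 1000 = MB/s
    return rate_gt_s * efficiency / 8 * 1000 * lanes


if __name__ == "__main__":
    for gen in PCIE_GENERATIONS:
        x1 = link_bandwidth_mb_s(gen, 1)
        x16 = link_bandwidth_mb_s(gen, 16)
        print(f"Gen{gen}: x1 = {x1:.0f} MB/s, x16 = {x16:.0f} MB/s")
```

Whichever generation applies, everything plugged in behind a one-lane slot (a video card, or all 4 NVMe drives on one 7101A) would share that single lane's bandwidth.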
