potential for 2 SSDs in nMP using GPUs

- Thread starter Loa

The two GPUs are not, strictly speaking, identical, as one carries the SSD. What if one were to place two of the SSD-bearing GPUs in the same nMP? Would the system recognize the second SSD?

Almost certainly not possible. There aren't enough PCIe lanes for a second SSD. The GPUs are not the same shape, either. There's a definite left and right.

There's also an identical thread: https://forums.macrumors.com/threads/1690812/

I'm wondering if it would be possible for a third party adapter to allow for a second SSD. It seems like there is enough room for it to fit.

lol ... read the post above, there aren't enough PCIe lanes. Unless you put a controller in there, maybe, but then you'd be stealing bandwidth from the main SSD. Just put the disks out on the TB 2, people!

there are always enough PCIe lanes, but is there enough bandwidth?

There's already at least one PCIe switch in there to multiply the number of PCIe lanes for the IO ports.

There's no need to dedicate lanes from the CPU chip to every device - you can trade a little performance for connectivity.

Look at how the thermal budget is handled - there's not enough power and cooling to run everything full tilt at once. Try, and it will back off a bit.

You can budget bandwidth as well. Put a PCIe switch on the second GPU, and give the GPU 16 lanes and the PCIe SSD whatever it needs (PCIe 3.0 x2 would be fine - the MacBook PCIe SSDs are PCIe 2.0 x2).
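To put rough numbers on the link widths being compared, here is a minimal Python sketch that estimates usable one-direction bandwidth per PCIe link from the signalling rate and line encoding (PCIe 2.0: 5 GT/s with 8b/10b; PCIe 3.0: 8 GT/s with 128b/130b). It ignores packet and protocol overhead, so real drives see somewhat less; the name `link_gbytes_per_s` is just for illustration.

```python
# (GT/s per lane, line-encoding efficiency) for the two generations discussed.
PCIE_GENERATIONS = {
    "2.0": (5.0, 8 / 10),      # 8b/10b encoding
    "3.0": (8.0, 128 / 130),   # 128b/130b encoding
}


def link_gbytes_per_s(gen: str, lanes: int) -> float:
    """Approximate usable one-direction bandwidth of a PCIe link in GB/s.

    Only line-encoding overhead is subtracted; packet/protocol overhead is
    ignored, so real-world throughput is somewhat lower.
    """
    gt_per_s, efficiency = PCIE_GENERATIONS[gen]
    return gt_per_s * efficiency * lanes / 8  # Gbit/s -> GB/s


for gen, lanes in [("2.0", 2), ("3.0", 2), ("3.0", 16)]:
    print(f"PCIe {gen} x{lanes}: {link_gbytes_per_s(gen, lanes):.2f} GB/s")
```

On these assumptions a PCIe 3.0 x2 link (about 1.97 GB/s) carries roughly twice what a PCIe 2.0 x2 link (1.0 GB/s) does, which is why x2 at PCIe 3.0 would be plenty for an SSD of the MacBook class.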

Most of the time both the GPU and the SSD would run at full speed - the only time there would be "throttling" is if you're saturating the PCIe x16 link to the GPU and hitting the disk at the same time.
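The "throttling only when both are busy" argument can be illustrated with a toy model of a shared upstream link. This sketch assumes the switch divides an upstream PCIe 3.0 x16 link (taken as roughly 15.75 GB/s) between the GPU and the SSD using max-min fairness; real PCIe switch arbitration is more involved, so treat it as an approximation. `max_min_share` and the demand figures are hypothetical.

```python
def max_min_share(capacity: float, demands: dict[str, float]) -> dict[str, float]:
    """Split a shared link's capacity among flows using max-min fairness.

    Flows asking for no more than an equal share get exactly what they ask for;
    whatever is left is split equally among the flows that want more.
    """
    alloc = {name: 0.0 for name in demands}
    unsatisfied = dict(demands)
    left = capacity
    while unsatisfied:
        fair_share = left / len(unsatisfied)
        small = {n: d for n, d in unsatisfied.items() if d <= fair_share}
        if not small:
            # Every remaining flow wants more than an equal share: split what's left.
            for n in unsatisfied:
                alloc[n] = fair_share
            break
        for n, d in small.items():
            alloc[n] = d
            left -= d
            del unsatisfied[n]
    return alloc


UPSTREAM_X16 = 15.75  # GB/s, approx. PCIe 3.0 x16 after 128b/130b encoding (assumed)

print(max_min_share(UPSTREAM_X16, {"gpu": 10.0, "ssd": 1.9}))   # link not saturated
print(max_min_share(UPSTREAM_X16, {"gpu": 15.75, "ssd": 1.9}))  # GPU saturating its link
```

With a hypothetical GPU demand of 10 GB/s and SSD demand of 1.9 GB/s, the total fits under the upstream capacity and both get everything they ask for. Only when the GPU tries to use the whole x16 link does it give up the roughly 1.9 GB/s the SSD is using.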

All engineering is about compromises - in spite of what the ad copy and keynotes say. I think that it's a reasonable compromise to sacrifice some theoretical peak performance for a second SSD.

It would be very interesting to see the PCIe topology map for the system to see how the 48 PCIe lanes are distributed (and multiplied).

There's already at least one PCIe switch in there to multiply the number of PCIe lanes for the IO ports...

Wrong, there's no multiplying at all, which is why there is no second SSD connector.

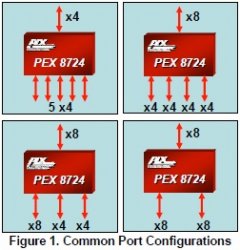

Perhaps you should look at http://www.plxtech.com/download/file/1822 on http://www.plxtech.com/products/expresslane/pex8724#technicaldocumentation .

The first image shows a PLX PCIe switch multiplying 4 PCIe lanes into 20 lanes. The other three images show multiplying 8 lanes to 16 lanes.

The new Mini Pro already has one such PLX switch; more could be added quite easily.

The other three images show multiplying 8 lanes to 16 lanes.

No, they show 8 PCIe 3.0 lanes being divided into 16 PCIe 2.0 lanes.

Anandtech explains what's going on here, using the same image you quoted. 7.8 GB/s of data goes into the PEX 8723, and is divided into three 2.5 GB/s connections to the Thunderbolt 2 controllers. There's no multiplying connections, no throttling, no trading of performance for connectivity... and no potential for 2 SSDs in the box.

Thank you for the link to the Anandtech page - I missed that PCIe topology info.

The PLX switch is PCIe 3.0 for both input and output. It is multiplying the number of lanes. Twelve is more than eight. Eight multiplied by 1.5 is twelve.

The T-Bolt 2.0 controller is a PCIe 2.0 device, so it only signals at 5.0 GT/s instead of the potential 8.0 GT/s on the switch's outputs. This means that although the number of lanes is multiplied, the slow T-Bolt 2.0 controllers don't oversubscribe the bandwidth of the PLX switch's input.
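The lane-versus-bandwidth distinction being argued here can be checked with a few lines of arithmetic. This is a sketch assuming the topology described in the thread (a PCIe 3.0 x8 link into the PLX switch, three PCIe 2.0 x4 links out to the Thunderbolt 2 controllers) and counting only encoding overhead. For reference, the 2.5 GB/s per-controller figure quoted earlier matches the raw 20 Gbit/s of a PCIe 2.0 x4 link; after 8b/10b encoding it is about 2 GB/s. `Link` and `oversubscription` are illustrative names.

```python
from dataclasses import dataclass

# (GT/s per lane, line-encoding efficiency); protocol overhead ignored.
RATES = {"2.0": (5.0, 8 / 10), "3.0": (8.0, 128 / 130)}


@dataclass
class Link:
    gen: str
    lanes: int

    def gbytes_per_s(self) -> float:
        gt_per_s, efficiency = RATES[self.gen]
        return gt_per_s * efficiency * self.lanes / 8


def oversubscription(upstream: Link, downstream: list[Link]) -> tuple[float, float]:
    """Return (lane ratio, bandwidth ratio) of the downstream links to the upstream link.

    A bandwidth ratio above 1.0 means the downstream devices could, in principle,
    ask for more than the upstream link can carry.
    """
    lane_ratio = sum(d.lanes for d in downstream) / upstream.lanes
    bw_ratio = sum(d.gbytes_per_s() for d in downstream) / upstream.gbytes_per_s()
    return lane_ratio, bw_ratio


upstream = Link("3.0", 8)                             # into the PLX switch (assumed)
tb_controllers = [Link("2.0", 4) for _ in range(3)]   # three Thunderbolt 2 controllers
lanes, bandwidth = oversubscription(upstream, tb_controllers)
print(f"lane ratio {lanes:.2f}, bandwidth ratio {bandwidth:.2f}")
```

A lane ratio above 1 combined with a bandwidth ratio below 1 is the situation described: more lanes out than in, but the slower PCIe 2.0 downstream links can't oversubscribe the PCIe 3.0 input.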

Do note that the PCH has a 2 GB/s link to the CPU, and downstream links of 4 GB/s - a bit of oversubscription.

Of course there's potential - all you need to do is to add another PLX switch. Why be so stubborn about not considering the possibility of adding another PCIe switch?

Since the 2 GB/s allocated to the SSD is more than adequate, put the PLX switch on the circular motherboard at the bottom and multiply the PCIe x4 link into two PCIe x4 links, one for each GPU card. The performance of dual SSDs wouldn't be exactly twice the performance of a single SSD - but it would be pretty blazing fast.
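A rough way to see why two SSDs behind one switched x4 link wouldn't be twice as fast: aggregate throughput is capped by whichever is smaller, the drives' combined demand or the shared upstream link. The sketch below uses the 2 GB/s link figure from the post and a hypothetical 1.2 GB/s per drive; both are assumptions for illustration, not measurements, and `combined_throughput` is a made-up helper.

```python
def combined_throughput(upstream_gbs: float, per_ssd_gbs: float, n_ssds: int) -> float:
    """Aggregate sequential throughput of n identical SSDs sharing one upstream link.

    Simplification: the drives are the only traffic on the link and the switch
    adds no overhead, so the total is capped by either the drives or the link.
    """
    return min(n_ssds * per_ssd_gbs, upstream_gbs)


UPSTREAM_GBS = 2.0  # the 2 GB/s link figure from the post
PER_SSD_GBS = 1.2   # hypothetical per-drive sequential throughput

single = combined_throughput(UPSTREAM_GBS, PER_SSD_GBS, 1)
dual = combined_throughput(UPSTREAM_GBS, PER_SSD_GBS, 2)
print(f"single {single:.1f} GB/s, dual {dual:.1f} GB/s, speedup {dual / single:.2f}x")
```

With those numbers the pair tops out at the 2 GB/s link, about 1.67x a single drive rather than 2x.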

all you need to do is to add another PLX switch. Why be so stubborn about not considering the possibility of adding another PCIe switch?

Because doing that would mean sharing bandwidth that doesn't need to be shared. If you want to put an extra SSD somewhere on Anandtech's diagram, the sensible place to put it is on the Thunderbolt ports. Given that there's a total of 7.5 GB/s of bandwidth allocated to Thunderbolt connections, it makes no sense to have a second SSD sharing bandwidth that's already allocated elsewhere, even on a system that has no external devices plugged in. It makes far more sense to put it where it will only share bandwidth in the rare cases where lots of other TB2 devices are connected.

So, you want to route the unused PCIe x4 lanes from the existing PLX switch on the IO board to an SSD on the 2nd GPU card, and borrow bandwidth from the T-Bolt array rather than the 2nd GPU.

Fine. At least you're acknowledging the "potential" for a 2nd SSD.

We'll just have to wait and see which solution the Apple engineers choose for the 2nd version of the new Mini Pro to add the second internal SSD. In the end it will be determined by compromises - whether it's easier/cheaper to route two lanes (or four) from the IO daughtercard through the bottom motherboard to the GPU daughtercard, or easier/cheaper to put a PCIe switch on the motherboard or GPU daughtercard - and where it makes the most sense to oversubscribe PCIe bandwidth.

_________

Oversubscription is not a bad thing - it's a very useful and necessary engineering compromise.

I recently retired three Cisco Catalyst 4506 switches - each cost me about $150K. I bought them early in the millennium because I needed about 700 GbE ports - and the Cat4 switches were industry leading at only a few hundred dollars per port. (How many of you have tossed a half million dollars of computer gear into the e-waste bin?)

One problem, however, was that they were oversubscribed. They had port groups of 8 GbE ports that shared 1 Gbps of bandwidth per group - 8:1 oversubscription.

It was mostly a non-issue, though, since most network traffic is bursty and it was seldom the case that adjacent ports would be busy. (When doing cluster work, though, we'd rewire the ports so that no two ports in the cluster shared bandwidth - a pain in the butt.)

The new switch (Cisco Nexus 5548 with 2248 expanders) is barely oversubscribed - 40 Gbps of uplink bandwidth per 48 GbE ports, or 1.2:1. Switch bandwidth is 960 Gbps (it has 48 ports of 10 GbE).
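The ratios in this story come down to one division: bandwidth the ports could demand over bandwidth they share. A small sketch using the figures given in the thread (8 GbE ports per 1 Gbps group on the Catalyst 4506, 48 GbE ports on 40 Gbps for the Nexus 2248 expanders, and the 4 GB/s downstream versus 2 GB/s to the CPU for the PCH mentioned earlier); the `oversubscription` helper is hypothetical.

```python
from fractions import Fraction


def oversubscription(demand: int, shared: int) -> Fraction:
    """Ratio of bandwidth the attached ports could demand to the bandwidth they share.

    Both arguments must use the same unit (Gbps or GB/s); the result is unitless.
    """
    return Fraction(demand, shared)


# Figures taken from the thread.
cat4506_group = oversubscription(demand=8 * 1, shared=1)  # 8 x GbE ports per 1 Gbps group
nexus_2248 = oversubscription(demand=48 * 1, shared=40)   # 48 x GbE ports, 40 Gbps uplink
pch = oversubscription(demand=4, shared=2)                # 4 GB/s downstream, 2 GB/s to CPU

for name, ratio in [("Catalyst 4506 port group", cat4506_group),
                    ("Nexus 2248", nexus_2248),
                    ("PCH", pch)]:
    print(f"{name}: {float(ratio):g}:1")
```

That works out to 8:1, 1.2:1 and 2:1 respectively.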

_____

Gigabit Ethernet is dying - why doesn't the new Mini Pro have 10 GbE?

Obvious answer - because Apple wants to force T-Bolt on you. Industry standard 10 GbE networking would make T-Bolt less needed.

So, you want to route the unused PCIe x4 lanes from the existing PLX switch on the IO board to an SSD on the 2nd GPU card, and borrow bandwidth from the T-Bolt array rather than the 2nd GPU.

What a load of nonsense! I'm saying if you want another SSD, plug it into a thunderbolt port, because it would be dumb to connect it anywhere else.

Fine. At least you're acknowledging the "potential" for a 2nd SSD.

No, I'm acknowledging the potential for 2nd through to 37th SSDs... externally.

We'll just have to wait and see which solution the Apple engineers choose for the 2nd version of the new Mini Pro to add the second internal SSD.

Have you understood nothing from this thread? There isn't going to be a second internal SSD, because there are not enough PCIe lanes to support it without compromising on speed. Apple engineers already chose their solution: external storage connected by Thunderbolt 2.

This isn't going to magically change because you found a bit of space inside the box where an SSD would physically fit, because there's nothing sensible to connect it to inside the box.
