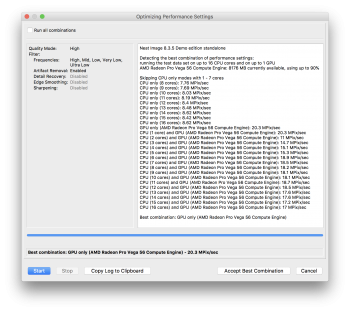

Hello, I was hoping that anyone who has the new iMac Pro (8-core preferably, but any model will do) would be able to download the demo version of Neat Image and run the performance test.

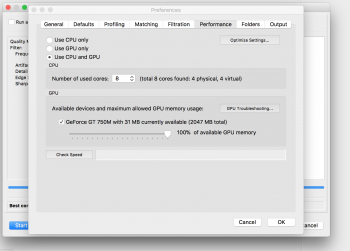

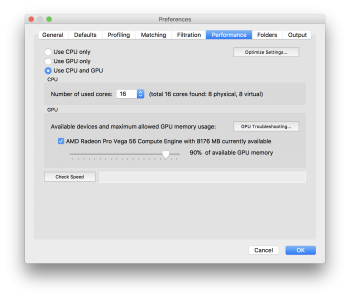

The test is in the Performance tab of the preferences.

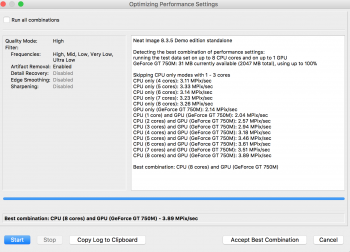

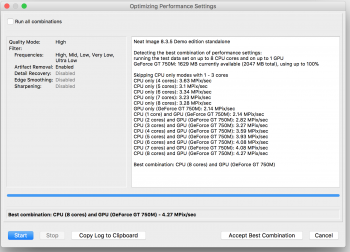

I've uploaded some screenshots of it. You have the option of running the performance test with the CPU and GPU. I use this program quite frequently and was curious as to how it would perform vs. my 2014 MacBook Pro. Thanks!
