It depends on when the product was purchased. If you were unfortunate enough to have bought it in the latter half of the cycle, just before the next release of OS X, the lifecycle has already ended. A great example is Snow Leopard: SL 10.6.8 is arguably one of the best-performing release versions of OS X thus far.

Sorry, but please enlighten me as to which Mac hardware/software is unsupported 1 year after its release and has had only a 1 year life cycle (if you can't, then I will accept your explanation of which OS X product has had only a 2 year life cycle).

Then that would suggest that Apple is incapable of writing software for older cards that can support 64-bit drivers. That is an admission that Apple can't do what others have done, including the major Linux distros and Microsoft. You're discounting the possibility that the decision to stop supporting older hardware is meant to force people to buy a newer machine.

Now it is true that Mountain Lion does require 2009 or later Mac Minis, but OTOH, it will also run on 2007 iMacs, and the reason that it will not run on older machines is that the transition to a 64-bit kernel cannot support the 32-bit graphics drivers used by older video cards.

The single biggest difference between SL and Lion is ASLR: with Lion, Apple cut support for Rosetta and implemented full ASLR. Compare that to Windows XP, launched in Oct 2001 and still supported today. That platform did not support full ASLR, and neither did the initial release of Windows Vista. Still, users could upgrade older machines that used to run XP to Vista, install SP2, and have full ASLR support. I think you're making excuses for Apple and not recognizing when they deserve harsh criticism.

Pure 64-bit kernels have been around for a very long time; Apple is simply late to the event.

This is the price of transitioning to a completely 64-bit kernel for improved memory addressing and performance, and it is not the kind of transition that is frequently made (the only previous transition that was equivalent to this one was the transition from PowerPC to Intel Core2Duo). Prior to Mountain Lion, new versions of OS X could usually run on 5 year old hardware (and in the case of the iMac and MBP, they still can), and I anticipate that this will again be the case, now that the transition is complete.

Apple isn't the first to stop support altogether for the sole purpose of generating more revenue/sales, and I'm certain they won't be the last. I didn't have to upgrade from SL to ML to get 8GB of RAM support on my MBP. A laptop's RAM limit is less about which OS it's running and more about the limitations of the hardware, unless you're using a dinosaur of a computer.

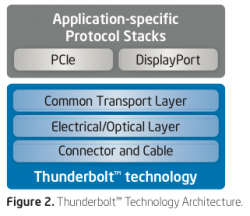

No matter what good things people have to say about Apple computers, when it comes to their laptops all I can say is adapters, adapters, adapters. When you buy an MBP you think you can simply take it home like a PC, connect it to an external monitor over VGA, DVI, or HDMI, and plug your peripherals into both USB ports. It's not quite that simple.

I do agree that Thunderbolt has had a very disappointing launch, although I'm still hoping that wider adoption will eventually drive down prices.

The majority of PCs don't require adapters for external monitors. The USB ports on the MBP are also so close together that only the slimmest of connectors will fit side by side. God forbid you have a DVI monitor at home and then go on the road for a presentation, only to find out you need an HDMI connection; you'd need 2-3 adapters in your bag to cover all the common monitor/projector connections. And if you're going to pack a Thunderbolt external HDD, how are you going to connect the external monitor and the HDD at the same time? The only 2 ways I know of are to buy that expensive Matrox/Belkin unit that acts like a MM box, or an expensive external Thunderbolt HDD with a pass-thru feature.

Even among older, cheaper PCs, many are USB 2.0 only but do include an ExpressCard slot, which can take a USB 3.0 card + dongle.
