I've just bought an M4 Mac mini and am trying to decide whether to buy a 4K or a 5K monitor. But now I'm confused about how WindowServer uses memory.

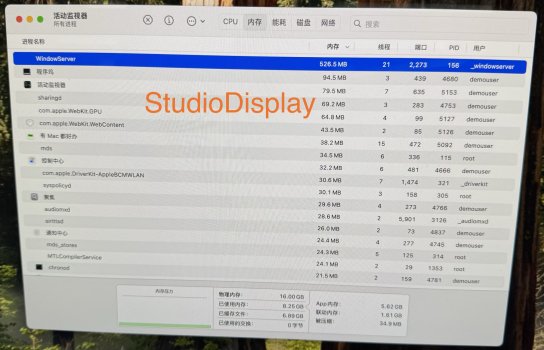

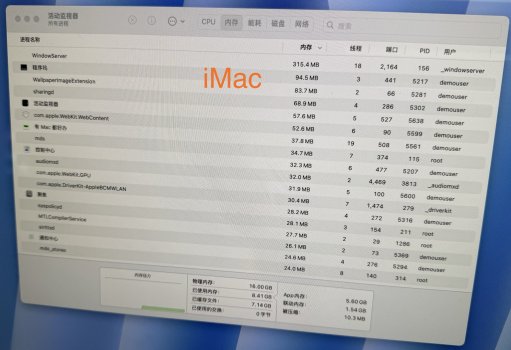

In theory, a higher resolution should make WindowServer use more RAM, so I went to an Apple Store to check how much RAM WindowServer takes on a Studio Display (5K) and on an iMac (~4K). Strangely, I couldn't find a WindowServer process at all, either on the iMac or on the Mac mini connected to the Studio Display. So if I spend more money on an Apple Studio Display, do I get more usable RAM?

But that doesn't make sense to me: no matter what display I connect, macOS still has to process the windows and pixels. Why can't I find WindowServer when macOS is driving an Apple-made display? Can the RAM used by WindowServer really be saved by using Apple's displays? Does anyone know about this?
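To put rough numbers on the "higher resolution means more RAM" idea, here is a minimal sketch that computes the size of one raw full-screen buffer at 4K UHD and 5K. It assumes 4 bytes per pixel (8-bit-per-channel RGBA), which is an assumption on my part; the real WindowServer footprint also includes window backing stores, multiple buffers, and compositing surfaces, so this only illustrates how pixel count scales, not what Activity Monitor will report. The helper name `framebuffer_mib` is hypothetical.

```python
# Rough lower-bound estimate of one raw full-screen buffer per resolution.
# Assumption: 4 bytes per pixel (8-bit RGBA). This is NOT what WindowServer
# actually reports; it only shows how memory scales with pixel count.

BYTES_PER_PIXEL = 4  # assumed 8 bits per channel, 4 channels


def framebuffer_mib(width: int, height: int, bytes_per_pixel: int = BYTES_PER_PIXEL) -> float:
    """Return the size of a single width x height buffer in MiB (hypothetical helper)."""
    return width * height * bytes_per_pixel / (1024 * 1024)


displays = {
    "4K UHD (3840x2160)": (3840, 2160),
    "5K (5120x2880)": (5120, 2880),
}

for name, (w, h) in displays.items():
    print(f"{name}: {framebuffer_mib(w, h):.2f} MiB per buffer")
```

Because 5120×2880 has 16/9 (about 1.78×) as many pixels as 3840×2160, each 5K buffer is correspondingly larger, which is why I expected WindowServer to use more RAM on the Studio Display.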
