More benchmarks, and an old discussion focused on the non-creative and therefore least interesting part of a 3D animation project. The cloud and dedicated render machines are the solutions that come to mind. If you want to contribute, find benchmarks for simulations, handling of particle systems, building large complex scenes and so on: the things that may slow the creative process.

Sure...

If the 4090 takes 15 hours to complete a short 3 minute animated rendering then how many days for M1 Ultra? A week?

3D Rendering on Apple Silicon, CPU&GPU

- Thread starter vel0city

If the 4090 takes 15 hours to complete a short 3 minute animated rendering then how many days for M1 Ultra? A week?

In the same video he points out that on another scene one frame took 40 min on a 3090 and over 23 hours on a 3080, likely due to VRAM issues, so in that case an M1 Pro would be faster than a 3080 (an M2 MacBook Air probably would be too).

Sir Wade Neistadt is more on the animation than the rendering side of things; I wouldn't really take much away from the rendering numbers (other than that the 4090 is fast), but he raises some more interesting points with regards to workflow and the benefits to the artist that having such a fast card provides. This is an area that the MacBook Pro excels at, with its fast SSD, large VRAM and Unix-based OS (Windows is terrible in terms of latency).

He also brings up one of the areas that's not really touched on elsewhere which is rig evaluation speeds, particularly in Maya where you can offload it onto the GPU for much faster evaluation (and also GPU cache for faster feedback). It would be awesome to get this on the AS GPUs as it would be really fast and you wouldn't need to swap things to GPU memory as it's already there.

Either way, kind of feel that the benchmark scores were possibly the least interesting takeaway from the video. There were some interesting points there though.

Link to video:

Guys, do you honestly not see that it is a problem that being on PC gives you 5-20x better performance in real-world use cases? An order of magnitude of performance difference matters. All of a sudden new workflows emerge. If you haven’t tried working with a high-performance computer you can of course continue with an already established workflow. But your competition won’t wait for you!

And please don’t downplay lookdev and the rendering feedback loop. Anyway, as can be seen in the Blender 3.5 data, an Ultra performs about the same as a 6800. Or 1/6 of a 4090. Or 1/12 of dual 4090s.

A next-gen M2 Ultra might, according to the most positive extrapolation, double these values, with a 4x version approaching a single 4090. I hope they reach these levels, since that would make the other benefits of a Mac Pro, and the platform as a whole, at least viable.
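To make the ratios in this post concrete, here is a rough sketch of the arithmetic. The 1/6 figure is the poster's reading of the Blender data; the 2x and 4x steps assume perfect scaling, and reading "a 4x version" as a part with four times the M1 Ultra's throughput is my interpretation. It also applies the same ratio to the 15-hour 4090 render mentioned earlier in the thread, assuming render time scales linearly with benchmark score (which it may well not).

```python
from fractions import Fraction

# Ratio quoted in the post (the poster's reading of Blender 3.5 open data).
M1_ULTRA_VS_4090 = Fraction(1, 6)

# Assumptions for illustration only: perfect scaling at each step.
scenarios = {
    "M1 Ultra": M1_ULTRA_VS_4090,
    "next-gen Ultra (2x)": M1_ULTRA_VS_4090 * 2,
    "4x version": M1_ULTRA_VS_4090 * 4,
}


def render_hours(hours_on_4090, relative_speed):
    """Scale a 4090 render time by relative throughput (assumes linear scaling)."""
    return float(hours_on_4090 / relative_speed)


for name, speed in scenarios.items():
    hours = render_hours(15, speed)
    print(f"{name}: {float(speed):.2f}x of a 4090, "
          f"15 h job -> {hours:.1f} h ({hours / 24:.2f} days)")
```

Under those assumptions the 15-hour job lands at about 90 hours on an M1 Ultra, so closer to four days than a week, and the "4x version" reaches roughly two thirds of a single 4090.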

Guys, do you honestly not see that it is a problem that being on PC allows you 5-20x better performance in real world use cases? An order of magnitude of performance difference matters.

I guess the question is what the real-world use cases are. For day-to-day work (VFX), there's little difference between my M1 Max 14" and my studio workstation; in terms of simulation speed, time to first pixel etc. the laptop punches above its weight (a fast SSD and memory work wonders; depends on the solver). I was going to post some simulation benchmarks in support of this, but it looks like SideFX got rid of the Apple Silicon forum when it went out of beta. Generally though, CPU-based results were looking pretty compelling, particularly for things like FLIP, which hit the CPU, memory and SSD pretty hard.

GPU-wise, I don't think anyone is expecting AS to rival a 4090 when it's using OptiX for renders; if the M2/3 has dedicated hardware then the gap will close significantly (although I'd assume the 4090 would keep a significant lead because Octane / Cycles / Redshift have been optimising for Nvidia for over a decade).

And please don’t downplay lookdev and the rendering feedback loop. Anyway, as can be seen in the Blender 3.5 data, an Ultra performs about the same as a 6800. Or 1/6 of a 4090. Or 1/12 of dual 4090s.

For lookdev, time to first pixel is important and, going by YouTube videos, the Max was beating the equivalent PC laptop quite nicely, in Redshift at least (tbh I found the time to first pixel in GPU renderers pretty slow, particularly for heavy scenes with volumes, on both Nvidia and AS).

An order of magnitude of performance difference matters. All of a sudden new workflows emerge. If you haven’t tried working with a high-performance computer you can of course continue with an already established workflow. But your competition won’t wait for you!

TBH finding the large amount of memory more compelling in terms of new workflows; finally it's possible to do final quality simulations on the GPU or to load an entire production environment onto it for lookdev and lighting (throw USD in the mix and it's pretty game changing). Both of these things are either prohibitively expensive or impossible on current Nvidia hardware. Still a little way off (and really needs the dedicated raytracing hardware), but find it more compelling.

I assume all your estimates are for rendering? As said above: one silent workstation for creative work, and then a rendering computer tucked well away from the workplace to avoid noise and heat.

Guys, do you honestly not see that it is a problem that being on PC allows you 5-20x better performance in real world use cases? [...] A next-gen M2 Ultra might, according to the most positive extrapolation, double these values, with a 4x version approaching a single 4090. I hope they reach these levels, since that would make the other benefits of a Mac Pro, and the platform as a whole, at least viable.

These are good and practical points. But please do consider that Apple is a newcomer to the desktop GPU market, while Nvidia has been doing it since forever. Nvidia's lead in the key areas is undisputed, but much of that lead is because they offer features that Apple still lacks (like hardware RT), have iterated the hell out of their SIMT architecture since Tesla, and overall have a much more mature implementation. And of course, the fact that they can throw more die area and power at the problem doesn't hurt either. I mean, the 4090 has 128 SMs (with an SM being more or less equivalent to an Apple GPU core) and is clocked much more aggressively to boot.

So while Nvidia's lead is, as said, undisputed at the moment, we should consider how things will develop for Apple going forward. M1 was still pretty much an iPhone GPU, just scaled up. Its register file was relatively small and there were some obvious problems scaling GPU clusters beyond a certain size. Already the next iteration was a massive step forward. M2 Max is actually faster in Blender 3.5.0 than M1 Ultra, and that's after one hardware iteration and roughly a year of software optimisations. If you look at Blender CUDA scores for Nvidia hardware (pure compute, without hardware raytracing), Apple is catching up very quickly; in fact, M2 Max performs similarly to 15-16 TFLOPS Nvidia GPUs while still being much more power efficient. If M2 Ultra solves the scaling issues, this would mean that Apple is only one generation behind in general-purpose compute.

Nvidia's trump cards of course are still hardware ray tracing (benefiting Blender specifically) and larger high-end GPUs. But these advantages won't last forever. If Apple's next-gen GPUs come with competent hardware raytracing (and there are good reasons to assume they might), Apple laptops at least could start posing some serious competition to Nvidia. And Apple still has ample opportunity to make their GPU cores larger or add more of them. My point is that while Nvidia will try to innovate, it might be more difficult for them because their architecture is already more optimised and refined. You can see this with Ada: impressive performance improvements, but almost all of that came from exploiting the process (more SMs, higher clocks) and overclocking the memory. So unless Nvidia comes up with something radically new and innovative that allows them to improve performance without increasing power consumption, they might start stagnating fairly soon, while Apple will undoubtedly have consistent GPU performance improvements for the next few generations.

... and AMD? Well, AMD actually seems to be at a bit of a dead end GPU-wise. They have their nice multi-chip tech that allows them to lower manufacturing costs, but they seem to struggle when it comes to core feature innovation. In fact, they had to bolt on some quick and dirty patches to claim feature parity with Nvidia, like their very lazy RT implementation (while they struggle to come up with a proper solution) or the limited VLIW of RDNA3, added just so that they can claim a 2x improvement in TFLOPS (which has limited practical impact for real code). I hope they have a new architecture that addresses these issues.

Respect all contributions.

However, I really can't understand why we keep discussing the fact that there are better GPU solutions. Yes, there are far better ones; that is a fact.

Yes, there are much more powerful dGPUs and complete systems. I can't deny that.

I use Mx machines every day and don't care about other hardware, OSes or GPUs.

For the sake of the forum topic, which is "3D Rendering on Apple Silicon, CPU&GPU", there should be other threads for A vs. B performance comparisons.

It would be really helpful if someone could point towards how we can reach better workflows with AS Mx machines.

By the way, I have tried the latest Arnold release for C4D on a base-spec Ultra. The results are good enough for me.

Arnold is the best CPU renderer I have ever used. It is completely workable and not far behind Redshift right now.

I would prefer Arnold for high-density/high-polycount scenes and of course for its shading system (the toon shader is very powerful).

Somehow Arnold renders just feel better to me.

I've not gone through all 68 pages of posts, but not sure if you all saw that SideFX has put out Houdini with AS support. I don't think the Karma renderer is ready just yet, but you can use Mantra. It's a good step forward!

Yes, I am aware of SideFX and Houdini. But I don't know anything about Houdini; its learning curve looks a bit painful.

The Houdini Indie option looks appealing; the other options look pricey.

What do you think about the learning curve? Can you give an estimate for new users?

I guess it is in the nature of forum discussions that we will never get our points across to everyone or reach consensus. I personally have worked mainly in Houdini for many years, but not with sims. For animations I have used Redshift before and Octane lately. I can do most work on my M1 Max MBP but render on a separate PC or farm. However, some of the work I do is lookdev, and using a PC for this part (in my case an old PC with a 3090) has been at least an order of magnitude faster. I would prefer if this task could be done as effectively on a Mac. That’s all folks, no hidden agenda or lack of understanding.

As you confirm it is safe to use Houdini on Mx Macs, what do you think about the Houdini learning curve? It looks like you have a lot of experience with it. Are there any "hidden gems" for new users, or any huge pluses compared to other software?

I started with Maya a long time ago, then switched to C4D. I use C4D/ZBrush for animations/characters, and from time to time use Blender/Eevee as a fast render option. I have a medium-grade PC with a 3070; if a deadline is too tight I use it only for rendering long scenes, so that I can keep working on the Ultra for modeling/texturing/compositing.

If I do decide to add Houdini to my workflow I would tend to use Redshift as the render engine. Is that OK for a new user? What do you suggest?

Apple still has ample opportunity to make their GPU cores larger or add more of them.

Apple may have a problem with GPU cost. The M2 Max GPU uses as many transistors as the RTX 3090, but scores less than half as much in Blender. The RTX 3090 scores about 3800 points with CUDA using 28 billion transistors, while the 38-core M2 Max scores about 1500 points using about 27 billion transistors (assuming the GPU accounts for 40% of the SoC).

https://opendata.blender.org/benchm...on&group_by=compute_type&group_by=device_name

Apple M2 Max Processor - Benchmarks and Specs

Benchmarks, information, and specifications for the Apple M2 Max
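For anyone who wants to check the transistor argument, here is a minimal sketch of the calculation. The scores, the 28 billion figure and the 40% GPU share are the poster's numbers; the 67 billion total for the M2 Max SoC is Apple's published transistor count, which the post does not state explicitly, so treat it as an assumption here.

```python
# Figures quoted in the post.
RTX_3090_SCORE = 3800          # Blender points, CUDA
RTX_3090_TRANSISTORS_B = 28    # billions of transistors
M2_MAX_SCORE = 1500            # Blender points, Metal, 38-core GPU
GPU_SHARE_OF_SOC = 0.40        # the poster's assumption

# Assumption: Apple's published total for the whole M2 Max SoC.
M2_MAX_SOC_TRANSISTORS_B = 67

m2_max_gpu_transistors_b = M2_MAX_SOC_TRANSISTORS_B * GPU_SHARE_OF_SOC


def points_per_billion(score, transistors_b):
    """Blender points per billion transistors spent on the GPU."""
    return score / transistors_b


nvidia = points_per_billion(RTX_3090_SCORE, RTX_3090_TRANSISTORS_B)
apple = points_per_billion(M2_MAX_SCORE, m2_max_gpu_transistors_b)

print(f"M2 Max GPU estimate: {m2_max_gpu_transistors_b:.1f} B transistors")
print(f"RTX 3090: {nvidia:.1f} points per B transistors")
print(f"M2 Max:   {apple:.1f} points per B transistors")
print(f"M2 Max / RTX 3090: {apple / nvidia:.2f}")
```

The result is very sensitive to the 40% share: if the GPU takes a smaller slice of the SoC, the gap narrows accordingly.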

Apple may have a problem with GPU cost. The M2 Max GPU uses as many transistors as the RTX 3090, but scores less than half as much in Blender. RTX 3090 scores about 3800 points with CUDA using 28 billion transistors, while 38-core M2 Max scores about 1500 points using about 27 billion transistors (assuming the GPU accounts for 40% of the SoC).

It is definitely true that Nvidia has a big advantage in utilizing the GPU die area. They don’t have the large caches and the SoC functionality.

At any rate, were Apple and Nvidia to compete in a PC GPU market, Apple would stand no chance. Fortunately for them, they make their own hardware and define their own targets. I’d expect that going forward Nvidia will continue to offer extreme performance for the gaming market while Apple will focus more on a reasonable energy-efficient baseline for their consumer products and more flexible hardware for the pro market. Apple's value proposition here will probably be something along the lines of “sure, we are slower than Nvidia's gaming top end, but you can use our hardware on problem sizes where Nvidia will choke”. And Apple actually has the potential to deliver good value there. Something like a 4090 does offer extreme levels of performance, but it will get in trouble on more complex tasks since there is simply not enough bandwidth to feed all those processing units. And GPUs with more RAM or more bandwidth are in an entirely different price category. Apple's $10K workstation with 128GB of GPU RAM and a usable 30 TFLOPS of compute power might actually be a good deal for many high-end professionals, especially once it gains hardware RT.

Edit: actually, scratch that. I looked at the die shots of Ada and M2 Max/Pro and tried to do some pixel counting. Both Apple's core and Nvidia's SM come to roughly 2.5-2.7 mm², so surprisingly close in size. Still a net win for Nvidia all things considered (especially since they pack the matrix unit per SM), but not the huge lead I initially suggested.

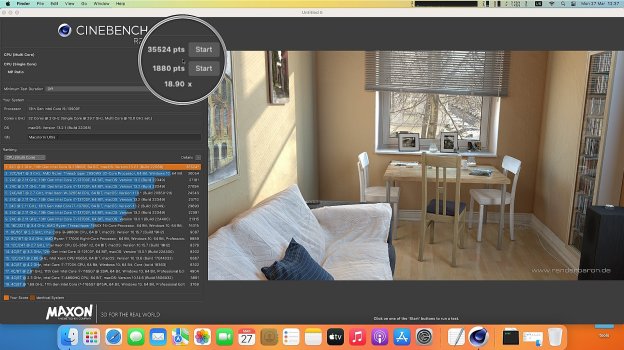

This is my Cinebench R23 rendering benchmark, done on an Intel Core i9-13900F running Ventura. We can then compare it with a Mac Pro or Mac Studio. It seems that the 3D rendering scores are much higher. Can you get similar results on a Mac?

Based on Blender CPU benchmarks, the 13900F is slightly slower than the M1 Ultra and roughly 45% faster than the M2 Max. For cost-efficient CPU rendering, the newest AMD desktop processors are the way to go.

Blender - Open Data

Blender Open Data is a platform to collect, display and query the results of hardware and software performance tests - provided by the public.

Apple's value proposition here will probably be something along the lines of “sure, we are slower than Nvidia's gaming top end, but you can use our hardware on problem sizes where Nvidia will choke”.

The Moana scene might be a good benchmark. Are there any results on how long the M1 Ultra takes to render the Moana scene in recent months? How much VRAM should a scene need for Apple's GPU to be competitive against Nvidia's GPUs?

By the way, could we start a wiki post to show the status of 3D programs in macOS with performance test results and update it only when macOS and Metal compatibility of those programs improves?

Yes, I am aware of SideFX and Houdini. But I don't know anything about Houdini; its learning curve looks a bit painful.

The Houdini Indie option looks appealing; the other options look pricey.

What do you think about the learning curve? Can you give an estimate for new users?

That's what I use, Indie. It's cheap and fun to learn. Yes, it is a bit daunting, but it's really cool once you get the hang of it.

It is definitely true that Nvidia has a big advantage in utilizing the GPU die area. They don’t have the large caches and the SoC functionality. Besides, it does seem that Nvidia SMs are more area-efficient than Apple's GPU cores. The 4090 uses roughly 4.5 mm² per SM, while Apple is somewhere over 5 mm² per core. This is probably because groups of SMs share some hardware functionality (like the rasterizer and texture units), while Apple GPU cores appear to be more self-sufficient. Not to mention that Apple's approach requires dedicating large amounts of the die to the SLC and the memory controllers. Since mass-producing humongous dies is not practical, Apple has to rely on multi-chip technology, which comes with its own challenges and additional cost.

Is an Nvidia SM equivalent to an Apple core? It seems like an Nvidia GPC is closer to an Apple GPU core, since an Apple GPU core appears to have everything needed to make graphics happen while an SM is missing things (like the texture processing side).

I use C4D/ZBrush for animations/characters, and from time to time use Blender/Eevee as a fast render option. I have a medium-grade PC with a 3070; if a deadline is too tight I use it only for rendering long scenes, so that I can keep working on the Ultra for modeling/texturing/compositing.

If I do decide to add Houdini to my workflow I would tend to use Redshift as the render engine. Is that OK for a new user? What do you suggest?

My question would be, what benefits would you be looking to get from Houdini; how do you envision Houdini supplementing your existing workflow?

The learning curve can be a bit daunting at times, but with it you get a lot of freedom and flexibility (I often find with Houdini that the things that are hard in other software are easy, but sometimes the reverse is true).

I don't think the Karma renderer is ready just yet, but you can use Mantra. It's a good step forward!

Out of curiosity, what are you missing from Karma?

Haven't used Karma in production yet, but it seems pretty solid and 19.5 brought most of the missing production features (deep, matte objects, robust Cryptomatte support etc). The main things I'm missing are texture baking and dispersion. Other than that it's an improvement on Mantra in most regards. Going back to using Mantra these days feels like a step back for most things (I find LOPs pretty nice once you get used to it).

I only use Mantra! If you use the USD pipeline then you need to use Karma. At least that is my understanding.

Short answer: the learning curve depends on your background. It is very easy if you’re an engineer or write code from time to time. The biggest hurdle might be understanding their way of thinking: the point as the primitive and how it differs from a vertex. Then there are many contexts for things like geo, sims and comp, each using its own nomenclature. Luckily these days there is a lot of good intro material and you can choose to learn just the specifics you need. If you only need it for particles and volumes, you might not need to know much about coding or proceduralism, for example (even if it is of course preferable). Redshift has one of the best integrations, so that's good.

ASi architecture seems to fit great for most of the Houdini workflows. Surprisingly fast for everything except rendering.

Edit: you will still want to do most modelling in other apps. And Maya still seems to be best for animation.

Apple may have a problem with GPU cost. The M2 Max GPU uses as many transistors as the RTX 3090, but scores less than half as much in Blender. RTX 3090 scores about 3800 points with CUDA using 28 billion transistors, while 38-core M2 Max scores about 1500 points using about 27 billion transistors (assuming the GPU accounts for 40% of the SoC).

https://opendata.blender.org/benchmarks/query/?device_name=NVIDIA GeForce RTX 3090&device_name=Apple M2 Max&compute_type=CUDA&compute_type=METAL&blender_version=3.4.0&group_by=blender_version&group_by=compute_type&group_by=device_name

Apple M2 Max Processor - Benchmarks and Specs

Benchmarks, information, and specifications for the Apple M2 Max (www.notebookcheck.net)

It should be clear that they don't target the same market or follow the same design principles. One of them needs a 350 W TDP for that performance; the other probably needs only 50 W.

Keep in mind that you are comparing software that just isn't as optimised for Apple Silicon. Obviously the numbers will be severely skewed when one side has an optimisation advantage of over two decades.
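A quick sketch of what the power argument implies, using only figures from this exchange: the Blender scores from the quoted post, the 350 W TDP, and the 50 W figure that the reply itself calls a guess ("probably"). The break-even number is just derived from those inputs, not a measurement.

```python
# Figures from the two posts; the 50 W value is the poster's own estimate.
RTX_3090_SCORE, RTX_3090_WATTS = 3800, 350
M2_MAX_SCORE, M2_MAX_WATTS_GUESS = 1500, 50


def points_per_watt(score, watts):
    """Blender points delivered per watt of rated or estimated power."""
    return score / watts


nvidia_ppw = points_per_watt(RTX_3090_SCORE, RTX_3090_WATTS)
apple_ppw = points_per_watt(M2_MAX_SCORE, M2_MAX_WATTS_GUESS)

# Power at which the M2 Max would only match the 3090's points per watt.
break_even_watts = M2_MAX_SCORE / nvidia_ppw

print(f"RTX 3090: {nvidia_ppw:.1f} points/W")
print(f"M2 Max:   {apple_ppw:.1f} points/W ({apple_ppw / nvidia_ppw:.1f}x)")
print(f"Break-even M2 Max power: {break_even_watts:.0f} W")
```

So even if the 50 W guess is off by a factor of two, the efficiency advantage would still hold on these numbers; it only disappears if the GPU were drawing close to 140 W.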

Is an Nvidia SM == to an Apple Core? Seems like a Nvidia GPC is closer to an Apple GPU core since it appears an Apple GPU core has everything needed to make graphics happen while an SM is missing things (like the texture processing side).

The hardware architectures are sufficiently different that any attempt to directly equate building blocks will be flawed. You are correct that, in terms of potentially repeatable hardware building blocks, the closest equivalent to an Apple core is an Nvidia GPC.

But I like to compare Apple's cores to Nvidia's SM, because they are the equivalent unit when it comes to shader execution and the basic compute capability. Both feature 128 ALUs organised in four partitions¹ and a block of fast local memory that can be shared among a group of related threads (threadgroup/thread block in Metal and CUDA lingo respectively). I think this comparison is more useful when talking about performance in general and the hardware to software mapping specifically.

¹Well, technically Nvidia has two 16-wide SIMD per partition with different capabilities while Apple uses 32-wide unified SIMD, but it's close enough. Nvidia also packs additional hardware resources like the matrix engine and special function units etc.
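Treating an SM and an Apple GPU core as equivalent 128-ALU units makes a back-of-the-envelope peak throughput estimate straightforward. The sketch below assumes one fused multiply-add (2 FLOPs) per ALU per clock. The core counts come from this thread; the clock speeds (about 2.52 GHz boost for the 4090, about 1.4 GHz for the M2 Max GPU) are not from the thread and are commonly reported figures used here as assumptions.

```python
ALUS_PER_CORE = 128  # per SM / per Apple GPU core, as described in the post


def peak_fp32_tflops(cores, alus_per_core, clock_ghz):
    """Theoretical peak, assuming each ALU retires one FMA (2 FLOPs) per clock."""
    return cores * alus_per_core * 2 * clock_ghz / 1000.0


# name: (SM or core count, assumed clock in GHz; clocks are NOT from the thread)
gpus = {
    "RTX 4090": (128, 2.52),
    "M2 Max (38-core)": (38, 1.398),
}

for name, (cores, clock_ghz) in gpus.items():
    tflops = peak_fp32_tflops(cores, ALUS_PER_CORE, clock_ghz)
    print(f"{name}: {cores} cores x {ALUS_PER_CORE} ALUs @ {clock_ghz} GHz "
          f"-> {tflops:.1f} TFLOPS peak")
```

With those assumed clocks the 4090 comes out at roughly six times the M2 Max on paper. Peak numbers like these ignore memory bandwidth, occupancy and hardware raytracing, so they say little about actual render times.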

It should be clear that they don't target the same market or design principles. One of them needs 350 Watt TDP for that performance, the other is probably only 50 Watt.

Keep in mind that you are comparing software that just isn't as optimised for Apple Silicon. Obviously the numbers will be severely skewed when one side has an optimisation advantage of over two decades.

Scratch that, looking at the actual core size on die shots suggests that the Ada SM and Apple's GPU core are practically equal in size.

I only use Mantra! If you use the USD pipeline then you need to use Karma. At least that is my understanding.

Fair enough; I really like Mantra (still the one to beat for volume rendering!), and would probably still be using it if I wasn't in USD land.

I get the feeling that Mantra is pretty much in maintenance mode atm (in the initial release of 18.0 they moved Mantra under the 'legacy rendering' tab until people complained).

Edit: you will still want to do most modelling in other apps. And Maya still seems to be best for animation.

Modelling is really frustrating; in other apps (Maya / Blender) I miss the proceduralism of Houdini, and in Houdini I miss the viewport feel of other apps (things like manipulator handles, selection and shortcuts etc).

Animation-wise they just need to wrap up the KineFX stuff in an animator-friendly bow and tweak the animation graph a bit. KineFX has so much potential, with being able to combine animation with physics, retargeting etc.
