> What are you talking about? We are not measuring GPU performance? Then what are you trying to say? What's the point of running the Valley benchmark and emphasizing that it's not CPU-limited (when I have already shown that it is)?

Yes, we are measuring the card's performance, BUT in a cMP.

> You said: "I believe unflashed GPUs have a limitation. A 380X should have done much better. Here's what I get with a flashed 270X. Please check the minimum FPS. I didn't overclock the card." So that is not about GPU performance?

Yes, it's purely about GPU performance in a Mac Pro. After examining Valley benches all day, I have concluded that his score is not abnormal. The 380X is hitting the ceiling too.

> You only focus on the min FPS and ignore everything else. I have already shown you that min FPS is not reliable in the Valley benchmark, because it can drop very low during scene transitions. Guess what? I can get an even lower min FPS in Valley at the lowest settings on my cMP (same OS, same W3690, same RX580).
>
> [Attachment 774659: Valley result at the lowest settings on that cMP]
>
> What does that mean? It means this particular measurement is NOT reliable.

Unless you can get a higher min FPS after benching over and over, it's a sign of a problem.
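To illustrate why a single minimum can be dominated by one stall while the average barely moves, here is a minimal Python sketch using made-up frame times (not measured data). It assumes min FPS is taken from the single slowest frame; Valley may compute it over a time window instead, so this is only a toy model.

```python
def fps_stats(frame_times_ms):
    """Return (average FPS, minimum FPS) for per-frame times in milliseconds.

    Assumption: min FPS is derived from the single slowest frame.
    """
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds
    minimum_fps = 1000.0 / max(frame_times_ms)
    return average_fps, minimum_fps


# Hypothetical run: 1000 frames at 20 ms each (50 FPS).
steady = [20.0] * 1000
# Same run, but one frame stalls for 500 ms (e.g. during a scene transition).
with_stall = [20.0] * 999 + [500.0]

print(fps_stats(steady))      # average and minimum are both 50 FPS
print(fps_stats(with_stall))  # average stays near 48.8 FPS, minimum drops to 2 FPS
```

One stalled frame out of a thousand is enough to move the minimum from 50 to 2 FPS, which is why repeated runs matter more than any single minimum.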

> Also, tell me why we need a playable frame rate to compare performance. It's not gaming, just a benchmark; I already gave you an example. 6 FPS is 100% faster than 3 FPS; what's wrong with that? You assumed that benchmarking requires a "playable" frame rate. Why? Where does that come from? What's the difference between "6 FPS vs 3 FPS" and "60 FPS vs 30 FPS"? Numbers are just numbers; 100% faster is 100% faster. Using a lower setting just makes the test more likely to become CPU-limited (not necessarily for the whole run, but if, say, 10% of the time is CPU-limited, the result can no longer accurately show the difference in GPU performance).

It's just realistic, closer to real-world use.
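The "6 FPS vs 3 FPS" arithmetic, and the claim that partial CPU limiting hides part of the GPU gap, can be sketched with a toy frame-time model in Python. Everything here is an assumption for illustration: each frame is taken to cost max(GPU time, CPU time), the scene mix (90 heavy frames, 10 light frames) and the 10 ms CPU time are invented, and the second card is defined as exactly half as fast.

```python
def percent_faster(fps_a, fps_b):
    """How much faster A is than B, in percent, from average FPS."""
    return (fps_a / fps_b - 1.0) * 100.0


def average_fps(gpu_frame_ms, cpu_frame_ms):
    """Average FPS when each frame takes max(GPU time, CPU time).

    Toy model (assumption): CPU and GPU work fully overlap, so the slower
    of the two sets the frame time.
    """
    frame_times = [max(g, cpu_frame_ms) for g in gpu_frame_ms]
    return len(frame_times) / (sum(frame_times) / 1000.0)


# The ratio is the same at any frame rate.
print(percent_faster(6, 3))    # 100.0
print(percent_faster(60, 30))  # 100.0

# Hypothetical scene mix: 90 heavy frames and 10 light frames.
gpu_a = [20.0] * 90 + [5.0] * 10  # faster card
gpu_b = [g * 2 for g in gpu_a]    # card exactly half as fast

no_cpu_limit = percent_faster(average_fps(gpu_a, 0.0), average_fps(gpu_b, 0.0))
cpu_limited = percent_faster(average_fps(gpu_a, 10.0), average_fps(gpu_b, 10.0))
print(no_cpu_limit)  # 100% (up to float rounding)
print(cpu_limited)   # about 94.7%: the CPU-bound light frames hide part of the gap
```

Only 10 of 100 frames hit the CPU limit here, yet a card that is truly twice as fast measures as roughly 95% faster.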

> I already showed you the CPU usage in my last post; please show me yours.

I will be on holiday for two weeks after Friday and will gladly do all the benches you asked for then. I don't even have a cMP hooked up to a monitor right now.

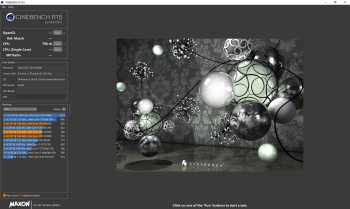

> EFI is completely irrelevant to GPU performance. The 1060 performs badly because the Nvidia web driver has much higher overhead than the AMD driver in macOS. Feel free to try it in Cinebench (you already know that is a 100% CPU-limited benchmark); you will observe the same result, again because it's CPU-limited and the Nvidia GPU has higher overhead.

This is new info to me. So the nVidia driver sucks, but the info I posted is still true. A flashed 680 will shine, because it will use Apple's driver. True?

> (BTW, what is "GTX 3GB"? Where did the R9 390 come from?)

I tested these cards on the same PC. The 3 GB GTX 1060 is faster than an R9 390, so I sold the R9 390.

> If you want to prove that the Mac EFI can significantly affect performance, please do the following:
>
> 1) Install an AMD card with its original PC VBIOS.
>
> 2) Open Activity Monitor (to make sure the CPU is not limiting during the benchmark).
>
> 3) Make sure nothing else is open and the computer is quite idle.
>
> 4) Run the Valley benchmark at the highest settings (in window mode, which lets us monitor CPU usage).
>
> 5) Re-run the Valley benchmark 2 more times to make sure the results are consistent.
>
> 6) Flash that AMD card with the Mac EFI (the EFI ROM must be created from the original PC VBIOS).
>
> 7) Redo steps 2-5 on the same cMP (with the same spec, of course).
>
> 8) Compare the results.
>
> I had an HD7950, R9 280 and R9 380 before I moved to a 1080Ti and RX580. I can tell you there is absolutely no performance difference from just flashing the card. I didn't keep all the records, so I can't show you. But please prove me wrong. I am more than happy to learn, and more than happy to be corrected. But I need reliable evidence showing that an AMD GPU's performance can be significantly improved by adding the Mac EFI.

I won't do it, not just because it's too much work, but because I do believe you.
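For anyone who does run the before/after procedure (three Valley runs per configuration, then compare), here is a minimal Python sketch of the final comparison step. The rule for calling a difference real (gap between the means larger than twice the larger run-to-run standard deviation) is an assumed rough heuristic, not something from the thread, and the scores in the usage lines are placeholders, not measurements.

```python
from statistics import mean, stdev


def compare_runs(before, after):
    """Compare Valley scores from repeated runs before and after flashing.

    before, after: scores from the same cMP at the same settings (>= 2 runs each).
    Returns (percent change of the mean score, whether it exceeds run-to-run noise).
    """
    if len(before) < 2 or len(after) < 2:
        raise ValueError("need at least two runs per configuration")
    change = (mean(after) / mean(before) - 1.0) * 100.0
    # Assumed heuristic: only treat the change as real if the gap between the
    # means is larger than twice the larger of the two sample standard deviations.
    noise = 2.0 * max(stdev(before), stdev(after))
    significant = abs(mean(after) - mean(before)) > noise
    return change, significant


# Placeholder scores only, to show the call; substitute real Valley results.
change, significant = compare_runs([2000, 2010, 1990], [2005, 1995, 2000])
print(f"{change:+.1f}% change, significant: {significant}")
```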