The problem:

Like many of you frustrated by a lack of new technology in the Mac Pro, I've been looking around for the ideal SATA3 PCIe card for the Mac. My requirements were:

Alas, such a card does not exist. There are lots of PCIe SATA3 cards with x2 bus interfaces (such as the Apricorn Velocity Solo x2), but thats going to be a bottleneck for a pair of current SSDs in RAID0. There are also very expensive RAID controllers from Areca and Atto but that's not what I want either.

However, member All Taken recently put me onto the Highpoint 2720SGL which offers an 8x interface with native OS X support. The only problem with it was that it wasn't bootable in the Mac Pro (it is on a Hackintosh though). The reason this eluded me before, was the fact that Highpoint doesn't list it among their officially supported Mac products. The fact is, it works flawlessly without issue. There are no sleep or other issues I've come across after running it for a couple of weeks.

The Solution:

So while I ultimately wanted to have both my OS/Apps and Aperture Photo Library all on a single large SSD RAID0 volume, I recently decided this may never happen, so I opted to separate them.

The solution I decided on was:

My thinking was that my OS and Apps would benefit most from top notch small random read/write performance, which the M4 excels at. And, of course, the Aperture library (storing photos of 25-100MB in size) would benefit most from sequential read/write performance where RAID0 arrays excel. While I could have just purchased a pair of 256GB SSDs for the Aperture Library, I opted for a trio of drives to have more capacity and to really try to push performance. And, as you can imagine, that spare port on the 2720 is crying out for a fourth drive.

I had purchased the Crucial M4 some time ago when the prices came down to around $1/GB and was running it as my boot drive off the SATA2 backplane. It was trivial to connect it to the Apricorn Solo x2 and drop it in the top slot.

The rest all came together on Black Friday when the the 256GB Vertex 4 was available from Newegg for $175 CAD (it was $159 on Amazon at that time for US customers) and the 2720SGL was also on sale (and still is).

Why the Vertex 4:

I've been fairly critical of the Sandforce drives in the past, and, of course, OCZ has been one of the major vendors for Sandforce drives. On top of that, their reputation for using early adopters as beta testers is widely publicized. So why would I buy an OCZ product? Well, normally, I wouldn't for those exact reasons, however, in the case of the Vertex 4, a few things are different... (1) the drive uses a proven Marvell controller (2) the drive has been on the market for several months without any wide-spread complaints of issues (3) the performance is top notch (4) the price was rock bottom.

I did a lot of reading on the Vertex 4 and there's no MLC based drive that can write faster. There's an interesting and cool reason for this. As you may know, SLC NAND is single-bit per cell technology and it's super fast to write data to although it's very expensive. MCL NAND on the other hand, is dual-bit per cell so it's much more affordable but it takes a lot longer to write data to, hence write speeds suffer. This (and the endurance benefits) is why you see SLC NAND typically used in enterprise drives. It seem OCZ took advantage of the fact that you can treat MLC NAND somewhat like SLC NAND if you're only writing one bit per cell at any given time, and thus gain some write performance. Of course, this will only work up to 50% of the drives capacity. After which, the garbage collection needs to reorganize the data from single bit per cell to double bit per cell to free up NAND. This is pretty effective if you never write more than 50% of your free capacity at any given time. You effectively get SLC write speeds using MLC NAND when writes are not more than 50% of available free space.

While this was not a factor in my decision, I think it's a novel approach to improving performance without resorting to compression.

Additional hardware:

Besides, the Highpoint 2720 card, I also needed a couple of cables, and some way to mount the 3x Vertex 4 SSDs.

Here's the other stuff I purchased:

Installation:

The M4 on the Apricorn Solo X2...

The Vertex SSDs in their mounting bracket... note, there's room for 1 more

In the Mac Pro optical bay carrier...

The Mac Pro interior with cables running from the 2720 to the optical bay... (the idea for routing the cables comes from ConcordRules thread - Thanks!)

Everything installed...

Setting up RAID on the Highpoint 2720:

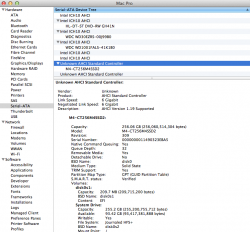

OS X recognizes the 2720 as a Parallel SCSI/SAS controller...

Drives will show up as SCSI/eSATA drives with an orange icon. You can manage them in Disk Utility, just like any other drive. And therefore you have all the software RAID options at your disposal that OS X natively offers.

If you want to take advantage of other RAID modes the 2720 offers, you can do so... you simply need to install their web RAID management interface (for the 2722) to allow you to access the card's firmware through a browser. I tried this and it works fine. However, it's RAID0 stripe size options were limited so one option (32K IIRC) so after playing around with it, I rebuilt my final RAID array using disk utility. More on performance and stripe size options coming up.

PCIe Slot:

All the recent Mac Pro's have a pair of 4x (electrical) slots at the top that connect through the ICH and a pair of 16x slots at the bottom connected to the X58 IOH.

I believe the top two x4 slots are switched... in other words, even though cards in both those slots will negotiate a x4 connection, they will be sharing that x4 (2GB/s) bandwidth. At any rate, what I found was even more damning... it appears there's something in the architecture of the Mac Pro that's limiting bandwidth of those top slots to 1GB/s or about half what I expected.

The 2720 with 3x SSDs in one of the top slots could not sustain transfers more than about 1000MB/s. In one of the x16 slots, I could get sustained transfer rates around 1370MB/s which is more in line with the limit of the three drives in RAID0.

Since I have a pair of recent Apple displays with mini display port connectors that I'm driving with a pair of GT120's, I'm currently running the Highpoint in one of the crippled x4 slots but I hope to rectify that soon once I get a single slot graphics card with dual MDP ports.

Drive benchmarking tools:

These are the drive benchmarking tools that I'm familiar with:

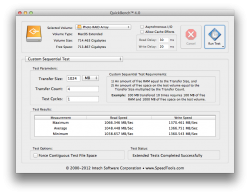

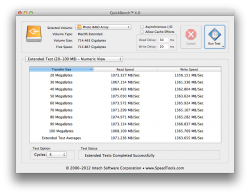

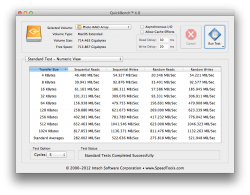

I purchased Quickbench. It seemed like a small investment compared to the hardware and allowed me to test a number of things to greater depth than any of the free tools. Having said that, the sequential speeds reported by AJA, Blackmagic, and Quickbench are all consistent which is a good sign and a solid endorsement for the free tools if that’s all you need to test.

Optimal stripe size:

I've always subscribed to the school of thought that stripe size should be a function of the type of data you're storing/accessing... a small stripe size for smaller files... and larger stripe sizes for larger files. When SSDs came along, the write amplification penalty due to large NAND page and block sizes also supported the use of larger stripe sizes. So when I started into this project, I was fully expecting to run as large a stripe size as I could.

In my research however, I found another guy that had been testing various RAID0 stripe sizes with the Vertex 4 (on a PC) and found that they performed better with smaller strip sizes... right down to 4KB. I decided to do a bunch of stripe size testing of my own before committing to one on my array.

Using the x16 slot for my testing, I tried 128K, 64K, 32K and 16K (the smallest OS X RAID0 allows) and the results were consistently better with decreasing stripe size. I forgot to take a screen shot of the benchmark tool on the larger stripes, but if I recall correctly, the differences were significant between 128K and 16K for larger sequential transfers (on the order of around 100MB/s but still less than 10%) and insignificant at lower 4k transfer sizes.

OS X vs Highpoint RAID0:

I compared RAID0 performance using the 2720 firmware vs. Disk Utility in OS X and results were identical in both scenarios.

Single vs Dual vs Triple SSDs in RAID0:

I also opted to test the Vertex 4 and 2720's ability to scale as drives were added to the array. Here are the max sequential read / write speeds in each case:

Single Drive: 489MB/s / 465MB/s

Dual Drive RAID0: 956MB/s / 927MB/s

Triple Drive RAID0: 1066 MB/s 1370 MB/s

Note that the read speeds don't scale well linearly beyond two drives. I'm not sure if this is the card or the drives. However write speeds do scale well as drive count increases (at least up to 3 drives).

Final Configuration:

For simplicity, I opted to setup the final 3x 256GB RAID0 array in disk utility with a 16K stripe.

Here’s the benchmarks: (attached)

Like many of you frustrated by a lack of new technology in the Mac Pro, I've been looking around for the ideal SATA3 PCIe card for the Mac. My requirements were:

- Bootable RAID0 (native OS X support)

- SATA3

- No bottlenecks (or PCIe x4 minimum)

- Affordable (sub $200)

Alas, such a card does not exist. There are lots of PCIe SATA3 cards with x2 bus interfaces (such as the Apricorn Velocity Solo x2), but that's going to be a bottleneck for a pair of current SSDs in RAID0. There are also very expensive RAID controllers from Areca and Atto, but that's not what I want either.

However, member All Taken recently put me onto the Highpoint 2720SGL, which offers an x8 interface with native OS X support. The only problem is that it isn't bootable in the Mac Pro (it is on a Hackintosh though). The reason it eluded me before is that Highpoint doesn't list it among their officially supported Mac products. The fact is, it works flawlessly. I haven't come across any sleep or other issues after running it for a couple of weeks.

The Solution:

So while I ultimately wanted to have both my OS/Apps and Aperture Photo Library all on a single large SSD RAID0 volume, I recently decided this may never happen, so I opted to separate them.

The solution I decided on was:

- 256GB Crucial M4 on Apricorn Velocity Solo x2 for OS/Apps

- 3x256GB OCZ Vertex4 on the Highpoint 2720SGL for Aperture Libraries

My thinking was that my OS and Apps would benefit most from top notch small random read/write performance, which the M4 excels at. And, of course, the Aperture library (storing photos of 25-100MB in size) would benefit most from sequential read/write performance where RAID0 arrays excel. While I could have just purchased a pair of 256GB SSDs for the Aperture Library, I opted for a trio of drives to have more capacity and to really try to push performance. And, as you can imagine, that spare port on the 2720 is crying out for a fourth drive.

I had purchased the Crucial M4 some time ago when the prices came down to around $1/GB and was running it as my boot drive off the SATA2 backplane. It was trivial to connect it to the Apricorn Solo x2 and drop it in the top slot.

The rest all came together on Black Friday when the 256GB Vertex 4 was available from Newegg for $175 CAD (it was $159 on Amazon at that time for US customers) and the 2720SGL was also on sale (and still is).

Why the Vertex 4:

I've been fairly critical of the Sandforce drives in the past, and, of course, OCZ has been one of the major vendors for Sandforce drives. On top of that, their reputation for using early adopters as beta testers is widely publicized. So why would I buy an OCZ product? Normally I wouldn't, for those exact reasons. In the case of the Vertex 4, however, a few things are different: (1) the drive uses a proven Marvell controller, (2) it has been on the market for several months without any widespread complaints, (3) the performance is top notch, and (4) the price was rock bottom.

I did a lot of reading on the Vertex 4 and, from what I found, there's no MLC-based drive that can write faster. There's an interesting and cool reason for this. As you may know, SLC NAND is single-bit-per-cell technology and it's super fast to write data to, although it's very expensive. MLC NAND, on the other hand, is dual-bit-per-cell, so it's much more affordable but it takes a lot longer to write data to, hence write speeds suffer. This (and the endurance benefits) is why you see SLC NAND typically used in enterprise drives. It seems OCZ took advantage of the fact that you can treat MLC NAND somewhat like SLC NAND if you're only writing one bit per cell at any given time, and thus gain some write performance. Of course, this only works for up to 50% of the drive's free capacity. After that, garbage collection needs to reorganize the data from single bit per cell to double bit per cell to free up NAND. This is pretty effective if you never write more than 50% of your free capacity at any given time. You effectively get SLC write speeds using MLC NAND when writes are not more than 50% of available free space.
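
To put a number on that 50% rule, here is a rough sketch in Python. It assumes the simplified model described above (fast single-bit writes are available for up to half of the currently free space); the function names and the 256GB/100GB example are purely illustrative and not anything published by OCZ.

```python
def fast_write_budget_gb(capacity_gb, used_gb):
    """Return how many GB can be written in one burst at the fast rate,
    under the simplified model where single-bit-per-cell writes are
    available for up to 50% of the currently free space."""
    if not 0 <= used_gb <= capacity_gb:
        raise ValueError("used_gb must be between 0 and capacity_gb")
    free_gb = capacity_gb - used_gb
    return free_gb / 2


def burst_stays_fast(capacity_gb, used_gb, burst_gb):
    """True if a single burst of burst_gb fits inside the fast budget."""
    return burst_gb <= fast_write_budget_gb(capacity_gb, used_gb)


if __name__ == "__main__":
    # Hypothetical example: a 256GB drive with 100GB already used.
    print(fast_write_budget_gb(256, 100))   # 78.0
    print(burst_stays_fast(256, 100, 50))   # True
    print(burst_stays_fast(256, 100, 90))   # False
```

In this model the budget shrinks as the drive fills, so a photo import that stays fast on an empty drive may not on a nearly full one.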

While this was not a factor in my decision, I think it's a novel approach to improving performance without resorting to compression.

Additional hardware:

Besides the Highpoint 2720 card, I also needed a couple of cables and some way to mount the 3x Vertex 4 SSDs.

Here's the other stuff I purchased:

Installation:

The M4 on the Apricorn Solo X2...

The Vertex SSDs in their mounting bracket... note, there's room for 1 more

In the Mac Pro optical bay carrier...

The Mac Pro interior with cables running from the 2720 to the optical bay... (the idea for routing the cables comes from ConcordRules' thread - Thanks!)

Everything installed...

Setting up RAID on the Highpoint 2720:

OS X recognizes the 2720 as a Parallel SCSI/SAS controller...

Drives will show up as SCSI/eSATA drives with an orange icon. You can manage them in Disk Utility, just like any other drive. And therefore you have all the software RAID options at your disposal that OS X natively offers.

If you want to take advantage of other RAID modes the 2720 offers, you can do so... you simply need to install their web RAID management interface (for the 2722) to allow you to access the card's firmware through a browser. I tried this and it works fine. However, its RAID0 stripe size options were limited to one option (32K IIRC), so after playing around with it, I rebuilt my final RAID array using Disk Utility. More on performance and stripe size options coming up.

PCIe Slot:

All the recent Mac Pros have a pair of x4 (electrical) slots at the top that connect through the ICH and a pair of x16 slots at the bottom connected to the X58 IOH.

I believe the top two x4 slots are switched... in other words, even though cards in both those slots will negotiate a x4 connection, they will be sharing that x4 (2GB/s) bandwidth. At any rate, what I found was even more damning... it appears there's something in the architecture of the Mac Pro that's limiting bandwidth of those top slots to 1GB/s or about half what I expected.

The 2720 with 3x SSDs in one of the top slots could not sustain transfers more than about 1000MB/s. In one of the x16 slots, I could get sustained transfer rates around 1370MB/s which is more in line with the limit of the three drives in RAID0.
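
The arithmetic behind these slot numbers can be sketched as follows. This is a minimal Python sketch, assuming the usual post-encoding figures of roughly 250MB/s per lane for PCIe 1.x and 500MB/s per lane for PCIe 2.0 (per direction); the function names are made up for illustration, and real-world throughput will land somewhat below these ceilings.

```python
# Approximate usable bandwidth per lane, per direction, in MB/s.
# Assumed standard figures: PCIe 1.x = 250, PCIe 2.0 = 500.
MB_PER_LANE = {1: 250, 2: 500}


def link_ceiling_mb(gen, lanes):
    """Theoretical one-direction ceiling of a PCIe link in MB/s."""
    return MB_PER_LANE[gen] * lanes


def is_bottleneck(gen, lanes, array_mb):
    """True if the array can move more data than the link can carry."""
    return array_mb > link_ceiling_mb(gen, lanes)


if __name__ == "__main__":
    array_mb = 1370  # best 3-drive RAID0 figure measured in the x16 slot
    for gen, lanes in [(2, 2), (2, 4), (2, 8), (1, 4)]:
        print(f"gen{gen} x{lanes}: {link_ceiling_mb(gen, lanes)}MB/s, "
              f"bottleneck={is_bottleneck(gen, lanes, array_mb)}")
```

On paper a PCIe 2.0 x4 link (2000MB/s) should not hold back a 1370MB/s array, which is why the ~1000MB/s ceiling in the top slots is surprising. That figure happens to match an x4 link at the 250MB/s per-lane rate or an x2 link at 500MB/s, but that is only an arithmetic observation, not a diagnosis.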

Since I have a pair of recent Apple displays with Mini DisplayPort connectors that I'm driving with a pair of GT120s, I'm currently running the Highpoint in one of the crippled x4 slots, but I hope to rectify that once I get a single-slot graphics card with dual Mini DisplayPort outputs.

Drive benchmarking tools:

These are the drive benchmarking tools that I'm familiar with:

- Xbench – (free) last updated in 2006 before SSDs and notoriously inconsistent

- AJA – (free) sequential read/write tests only and doesn’t appear to work on boot volumes

- Blackmagic – (free in the app store) sequential read/write tests only

- Disktester – ($40) No comment

- Quickbench – ($15) offers sequential and random tests for a reasonable price

I purchased Quickbench. It seemed like a small investment compared to the hardware and allowed me to test a number of things to greater depth than any of the free tools. Having said that, the sequential speeds reported by AJA, Blackmagic, and Quickbench are all consistent which is a good sign and a solid endorsement for the free tools if that’s all you need to test.
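
For anyone curious what a sequential write test boils down to, here is a minimal Python sketch. It is not how AJA, Blackmagic, or Quickbench are implemented (that isn't documented here); the function name, the 64MB total, and the 4MB chunk size are arbitrary choices for illustration, and a test this small will be noisier than the real tools.

```python
import os
import tempfile
import time


def sequential_write_mb_s(directory, total_mb=64, chunk_mb=4):
    """Write about total_mb of data in chunk_mb pieces; return MB/s.

    os.fsync is called before the clock stops so the figure includes
    the time taken to push the data out of the OS cache.
    """
    chunk = os.urandom(chunk_mb * 1024 * 1024)
    chunks = total_mb // chunk_mb
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(chunks):
                f.write(chunk)
            f.flush()
            os.fsync(f.fileno())
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)  # clean up the temporary test file
    return (chunks * chunk_mb) / elapsed


if __name__ == "__main__":
    # Point this at a folder on the volume you want to test.
    print(round(sequential_write_mb_s(tempfile.gettempdir()), 1), "MB/s")
```

Random data is used so that a controller doing compression can't inflate the result. A read test is harder to do honestly in a few lines because the OS will happily serve a freshly written file from RAM.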

Optimal stripe size:

I've always subscribed to the school of thought that stripe size should be a function of the type of data you're storing/accessing... a small stripe size for smaller files... and larger stripe sizes for larger files. When SSDs came along, the write amplification penalty due to large NAND page and block sizes also supported the use of larger stripe sizes. So when I started into this project, I was fully expecting to run as large a stripe size as I could.

In my research, however, I found another guy who had been testing various RAID0 stripe sizes with the Vertex 4 (on a PC) and found that they performed better with smaller stripe sizes... right down to 4KB. I decided to do a bunch of stripe size testing of my own before committing to one on my array.

Using the x16 slot for my testing, I tried 128K, 64K, 32K and 16K (the smallest OS X RAID0 allows) and the results were consistently better with decreasing stripe size. I forgot to take a screenshot of the benchmark tool on the larger stripes, but if I recall correctly, the differences between 128K and 16K were significant for larger sequential transfers (on the order of 100MB/s, though still less than 10%) and insignificant at the smaller 4K transfer size.
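
One way to picture what stripe size changes is to count how many drives a single transfer lands on. The Python sketch below assumes the textbook round-robin RAID0 layout (stripe unit 0 on drive 0, unit 1 on drive 1, and so on); `drives_touched` is a made-up helper, and this is layout arithmetic only, not a verified explanation of why the Vertex 4 prefers small stripes.

```python
def drives_touched(offset, length, stripe_size, num_drives):
    """Return the set of drive indexes a transfer hits in a RAID0 array.

    Stripe units are laid out round-robin: unit 0 on drive 0, unit 1 on
    drive 1, and so on, wrapping back to drive 0.
    """
    if length <= 0:
        return set()
    first_unit = offset // stripe_size
    last_unit = (offset + length - 1) // stripe_size
    if last_unit - first_unit + 1 >= num_drives:
        return set(range(num_drives))  # transfer spans every drive
    return {unit % num_drives for unit in range(first_unit, last_unit + 1)}


if __name__ == "__main__":
    K = 1024
    # A 128K transfer at offset 0 on a 3-drive array.
    for stripe in (128 * K, 64 * K, 32 * K, 16 * K):
        hit = drives_touched(0, 128 * K, stripe, 3)
        print(f"{stripe // K}K stripe -> {len(hit)} drive(s)")
    # prints 1, 2, 3, 3 drive(s) respectively
```

With a 128K stripe that transfer sits on one drive, while at 32K or 16K it is spread across all three. An aligned 4K transfer lands on a single drive at every stripe size tested, which is at least consistent with the small-transfer results not changing.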

OS X vs Highpoint RAID0:

I compared RAID0 performance using the 2720 firmware vs. Disk Utility in OS X and results were identical in both scenarios.

Single vs Dual vs Triple SSDs in RAID0:

I also opted to test the Vertex 4 and 2720's ability to scale as drives were added to the array. Here are the max sequential read / write speeds in each case:

Single Drive: 489MB/s / 465MB/s

Dual Drive RAID0: 956MB/s / 927MB/s

Triple Drive RAID0: 1066MB/s / 1370MB/s

Note that the read speeds don't scale linearly beyond two drives. I'm not sure if this is the card or the drives. However, write speeds do scale well as drive count increases (at least up to 3 drives).
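
The scaling can be expressed as a percentage of the ideal (N times the single-drive speed). The Python sketch below uses only the figures listed above; the helper name `scaling_efficiency` is just for illustration.

```python
# Drive count -> (read MB/s, write MB/s), as reported above.
RESULTS = {1: (489, 465), 2: (956, 927), 3: (1066, 1370)}


def scaling_efficiency(results):
    """Return {drives: (read %, write %)} relative to perfect scaling,
    where perfect scaling is N times the single-drive figure."""
    base_read, base_write = results[1]
    efficiency = {}
    for drives, (read, write) in sorted(results.items()):
        read_pct = round(100 * read / (drives * base_read), 1)
        write_pct = round(100 * write / (drives * base_write), 1)
        efficiency[drives] = (read_pct, write_pct)
    return efficiency


if __name__ == "__main__":
    for drives, (read_pct, write_pct) in scaling_efficiency(RESULTS).items():
        print(f"{drives} drive(s): read {read_pct}%, write {write_pct}%")
```

That works out to about 98% read / 100% write efficiency with two drives, and about 73% read / 98% write with three.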

Final Configuration:

For simplicity, I opted to set up the final 3x 256GB RAID0 array in Disk Utility with a 16K stripe.

Here are the benchmarks: (attached)