
Sorry, my friend, but this expectation is a bit unrealistic.

If this expectation is a bit unrealistic, I promise you it is not my first one, nor shall it be my last one, for an old wise woman, who shall be my consummate tutor for so long as I inhabit this shell, said, more than once, to me while rearing me:

"Son, set your goals like this door frame [while pointing to it]. Set them to the top [from the floor] and should you get only half way there, your level of achievement would be just above the door handle and you’ll feel that you still have a long way to go to reach success, in your mind. But set them to the top of the baseboard [from the floor] and should you get only half way there, you will not have far to go to reach success, in your mind. But while you might not like how far you have to go if you've set your goals very high, even when you get only part of the way there, some will look, with amazement, at what you’ve done, though inside you might feel like a complete failure.”

Yes, GPU rendering is fast, but only for small scenes (like the one in Cinebench). There is a reason why every demo video of Octane (or any other GPU renderer) shows just a very simple setup like a car, a few objects or a basic indoor/outdoor scene.

If you work on simple stuff like product shots, etc., then it can be a good/fast option,

I agree that demos for OctaneRender, as well as those for other GPU and CPU renderers, tend to use small scenes. The reason that I refer to demo scenes in this thread is that others can more easily replicate the testing, given the public availability and relatively short download times for such scenes.

...but as soon as you begin to throw millions of polygons, lots of big textures, many lights, etc. into your scene, you will find that GPU rendering is still very far behind a modern CPU renderer (biased or unbiased). For that reason, nobody in the production industry is using it for final shots.

Just browse the Otoy Octane forum gallery to see real work (not simple benchmark scenes) with real render times (hardware specs are in the signatures). Just a few examples:

https://render.otoy.com/forum/viewtopic.php?f=5&t=38660

https://render.otoy.com/forum/viewtopic.php?f=5&t=38648

https://render.otoy.com/forum/viewtopic.php?f=5&t=38505

https://render.otoy.com/forum/viewtopic.php?f=5&t=38641

https://render.otoy.com/forum/viewtopic.php?f=5&t=38400

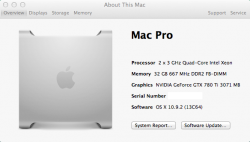

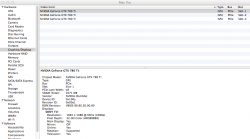

Video RAM size limitations on current GPUs [as compared with the large amounts of system memory that I have and can add to my clock-tweaked 4-, 6-, 8-, 12-, 16- and 32-core machines] do require an alteration in the workflow to get the most from GPU rendering technology. The tool chest that I use for home repairs does not rely on just one screwdriver or one saw or one hammer, etc. I use the appropriate tool for the needed repair. Likewise, my tool chest for artistic work relies on many tools whose functions are similar, but specific ones are best positioned for the job at hand. Some jobs, or maybe just parts thereof, are best accomplished by CPU rendering, some by GPU rendering and yet others by a combination of both technologies.
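As a rough illustration of that tool choice, here is a minimal Python sketch that estimates whether a scene would fit in video memory before picking a renderer. Everything in it is an assumption made for illustration: the function names, the bytes-per-triangle figure and the 1 GiB reserve are hypothetical and are not taken from OctaneRender, Redshift or any other renderer.

```python
def scene_footprint_gib(triangles, bytes_per_triangle, texture_sizes_mib):
    """Rough scene size in GiB: geometry plus uncompressed textures.

    bytes_per_triangle is an assumed average (vertices, normals, UVs,
    acceleration structure); real renderers differ widely.
    """
    geometry_bytes = triangles * bytes_per_triangle
    texture_bytes = sum(texture_sizes_mib) * 1024**2
    return (geometry_bytes + texture_bytes) / 1024**3


def fits_in_vram(footprint_gib, vram_gib, reserve_gib=1.0):
    """True if the scene fits after holding back an assumed reserve
    for the frame buffer, the display and the renderer itself."""
    return footprint_gib <= vram_gib - reserve_gib


def pick_renderer(footprint_gib, vram_gib):
    """Hypothetical rule of thumb: in-core GPU if the scene fits,
    otherwise fall back to CPU or an out-of-core GPU renderer."""
    if fits_in_vram(footprint_gib, vram_gib):
        return "GPU (in-core)"
    return "CPU or out-of-core GPU"


if __name__ == "__main__":
    # Hypothetical scene: 20 million triangles, four 2 GiB texture sets.
    size = scene_footprint_gib(20_000_000, 100, [2048] * 4)
    print(f"estimated footprint: {size:.2f} GiB")
    print("6 GiB card:", pick_renderer(size, 6.0))
```

The numbers fed in are made up; the point is only that the same scene can land on either side of the line depending on the card, which is why the choice of tool depends on the job.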

I don't know about you, but I'm really not impressed. Anyone with a decent modern Xeon machine can match or exceed that in terms of speed/quality.

Whether one's impressed by GPU rendering varies. I, for one, am impressed by what it can do now with OctaneRender, Thea Render [ http://www.thearender.com/cms/ ] and Redshift [ https://www.redshift3d.com/products/redshift/ ], and even more so by potential uses for GPU rendering as GPUs are accompanied by greater amounts of RAM. Also, I'm impressed by developers like the folks at Redshift who have developed:

"Out-of-Core Architecture - Redshift uses an out-of-core architecture for geometry and textures, allowing you to render massive scenes that would otherwise never fit in video memory. A common problem with GPU renderers is that they are limited by the available VRAM on the video card – that is they can only render scenes where the geometry and/or textures fit entirely in video memory. This poses a problem for rendering large scenes with many millions of polygons and gigabytes of textures. With Redshift, you can render scenes with tens of millions of polygons and a virtually unlimited number of textures with off-the-shelf hardware."

Maybe I'm more easily impressed than are most. Different strokes for different folks.

Is there a chance to see some of your scenes?

Very soon, as I consummate another phase of my worldly transformations, you may find that you can see more of my scenes than you'd like to see, but they most likely will not be posted in this thread. However, the one thing that living in this shell for more than 60 years has taught me is to never say, "never." My aspirations here are about maximizing CPU/GPU-related performance, and thus I'd prefer to deal only with publicly available benchmark scenes that allow others to easily replicate what I do and to make comparisons.

----------

@Echoout

Of course, even a scene with 10 polygons can be a real work scene if that's what you are doing, and in that case a GPU renderer can be a great solution. I was just saying that as soon as you raise scene complexity, this solution will soon become slower than a classic CPU renderer. So if you are expecting a Cinebench score of 17,000 points just because you are on GPU, you will most likely be disappointed, unless you work with very simple setups.

Let's say that GPU renderer speed strongly depends on what you are working on; claiming a general 34x speed increase is not very fair.

This is a perfect example of why I prefer to use generally available benchmark scenes, although I apologize if I didn't make it crystal clear that what I was doing was done entirely in the context of rendering the standard Cinebench 15 benchmark scene.