MP All Models Apple M2 Ultra Benchmarks Show Cupertino Didn’t Beat Intel, AMD, Or NVIDIA

- Thread starter Jethro!

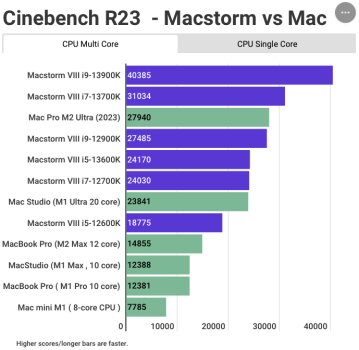

Holy crap. Not only did the i9 obliterate the M2Ultra, the i7 beat it. And am I understanding that right, the previous gen i9 is basically even with the M2 ultra?

That's because Cinebench has optimized SSE/AVX code but the (ARM) NEON code is translated by a library and is not optimized.

My 12th gen i9 [12900KS] is noticeably faster than my M1 Studio Ultra when utilising the cores [on the same apps]. Not that the Studio is a slouch.

No need to discuss how the 4090 compares.

Win 11 for Nvidia use only, everything else is done on my Mac.

It is to be expected.

The shared memory of the M1 did a lot to boost many benchmarks. However, Apple can not bend the laws of physics and come out with both low power usage AND top performance at heavy load. To think Apple could beat Intel, AMD and Nvidia in both areas would be unrealistic. Computer design is still the art of compromise.

At some point Apple will have to design a chip that is allowed to use more power as nobody seems to care about performance / watt on desktop computers.

Except if it's between graphic cards with nearly the same performance, then for some reason it matters.

You know where apple could use performance/watt to some benefit? If they went into making something like a Synology. It would be StoragePod or something like that. Could be a Home Hub, but also provide Time Machine storage. Give you your own private iCloud on your hardware with limitless iCloud@home Photos. Put in some Wifi AP stuff in there.

Would be a hit, and there, the low power serves a function as people would keep it on 24/7...

Of course it's so practical there is zero chance they would do something like that.

Along with a low-power TV app that could stream to Apple TV without requiring a Mac to be turned on.

I suspect, however, that the margin on those would be lower than Apple is interested in.

That's because Cinebench has optimized SSE/AVX code but the (ARM) NEON code is translated by a library and is not optimized.

That's a few leaps of logic. There are translation libraries that cause no performance loss. Most translate at compile time - so what gets built into the app is real NEON code.

SSE/AVX on ARM does not mean loss of performance.

Edit: They seem to use sse2neon:

GitHub - DLTcollab/sse2neon: A translator from Intel SSE intrinsics to Arm/Aarch64 NEON implementation

sse2neon is a compile-time, header-only library. Nothing is being emulated. What comes out is real NEON code running natively.

It is to be expected.

The shared memory of the M1 did a lot to boost many benchmarks. However, Apple can not bend the laws of physics and come out with both low power usage AND top performance at heavy load. To think Apple could beat Intel, AMD and Nvidia in both areas would be unrealistic. Computer design is still the art of compromise.

At some point Apple will have to design a chip that is allowed to use more power as nobody seems to care about performance / watt on desktop computers.

Except if it's between graphic cards with nearly the same performance, then for some reason it matters.

You are both 100% spot on, and this is exactly the point that many of us have been making (and sadly which the Mac Studio brigade seem to not understand, no matter how many times we explain) -- When it comes to workstations/raw power: NOBODY CARES ABOUT LOW POWER DRAW, and this is why Mac Silicon fails (currently).

If Apple can somehow manage to design a workstation-class chip, specifically for the Mac Pro only, that is not bound by power, then I believe they could potentially take on Nvidia, Intel and AMD. The problem with this supposition is: will they? Highly doubtful, since their operating model now functions from the bottom up instead of top down, as it should, so these are more or less just glorified iPhone chips.

Update: It sounds like they may actually be thinking of going in the direction I mentioned above, which means the M3 Mac Pro might be interesting (although probably still handicapped with expansion like the M2 joke)

Good to see the competitors are kicking…

If by kicking, you mean kicking @$$, then I agree. I think the real comparison is between the M2 Ultra in the Mac Pro and the new Xeon and Threadripper CPUs that Apple could've used instead. I think there's no comparison in that situation.

Apple’s departure from Intel’s ecosystem dates back to the introduction of the T2 chip, perhaps earlier. I don’t think Apple is going back.

Timeline of Apple's focus on performance per watt...

- 2003-2005: PowerPC G5 was stuck at 130nm-90nm, so it could not be put into Mac laptops, and the 3GHz G5 could not be delivered

- 2005: PowerPC G5 90nm to Intel 65nm transition for the Mac, because from then until today ~80% of PCs would be laptops

- 2006-2020: Intel has a monopoly across all PC OEMs as of Apple's Jan 2006 MBP 15" Core Duo 65nm

- 2007: 1st iPhone released with Samsung ARM chip also used for DVD players

- 2008: Apple buys P.A. Semi for iPhone chips & eventually Mac chips in 2020

- 2010: Apple A4 45nm, first SoC Apple designed in-house. First used in the 1st iPad

- 2011: Report: Apple didn't select Intel chips for the iPhone

- 2011: Steve Jobs resigns in August and Tim Cook is appointed CEO; Jobs dies about six weeks later

- 2013: Apple A7 28nm, 1st 64-bit smartphone SoC

- 2014-2020: Intel is stuck at 14nm for 6 years

- 2015-2017: Skylake QA was abnormally bad by Apple's standard

- 2017: iPhone 8 and 8 Plus, and iPhone X ships with Apple A11 Bionic 10nm

- 2017: Apple T2 chip launched to improve Intel Macs.

- 2018: Apple plans to use their own chips in the Mac by 2020

- 2018: iPhone XS & XS Max ships with Apple A12 Bionic 7nm

- 2020: 1st and only 10nm Intel chip used in Mac was released in May 2020

- 2020: Intel 14nm to Apple Silicon 5nm transition for the Mac in Nov 2020, because from then until today ~80% of PCs are laptops

- 2023: TSMC N3 (3nm) being tested for September's iPhone... M3 Mac chip expected to use it by Q1 2024

- 2023: Intel's 1st 24-core laptop CPU is still on Intel 7 when Apple Silicon has been on 5nm since Nov 2020 & will be on N3 by Q1 2024

- 2023: Intel proposes the x86S architecture, which removes largely disused legacy x86 features from the silicon die

- 2024: All but one 14nm Intel chip family will stop shipping. Xeon E-series embedded processors based on the Rocket Lake architecture will linger for a while longer... more than a decade of 14nm...

Going forward ARM PCs will start eating into x86 laptops that make up ~80% of all x86 PCs shipped worldwide. When ARM PCs have excess SoCs they may then go after x86 desktops.

When it comes to workstations/raw power: NOBODY CARES ABOUT LOW POWER DRAW, and this is why Mac Silicon fails (currently).

This is still a segment where computing power is more valuable than energy efficiency.

When you move upwards to HPC systems, energy efficiency rules. The fastest systems on the Top500 list use the most power, more than 20 MW. At the same time they are among the most energy efficient. Energy efficiency has improved more than 20 times in the last 10 years: in 2013 the best one got 3 Gflops/W, and today that has increased to 65 Gflops/W. No one in their right mind buys an old HPC system; the electricity bill is horrific.
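To put rough numbers on that claim, here is a minimal Python sketch using only the figures quoted in the post (3 Gflops/W in 2013, 65 Gflops/W today, a 20 MW power budget). The function names are made up for illustration, and the "same power budget" comparison is a hypothetical, not a measurement of any real system.

```python
def improvement_factor(old_gflops_per_watt, new_gflops_per_watt):
    """Overall efficiency gain between two Gflops/W figures."""
    return new_gflops_per_watt / old_gflops_per_watt


def annual_growth(factor, years):
    """Average yearly multiplier that compounds to `factor` over `years`."""
    return factor ** (1.0 / years)


def sustained_pflops(power_mw, gflops_per_watt):
    """Throughput in Pflops for a given power budget.

    MW -> W multiplies by 1e6 and Gflops -> Pflops divides by 1e6,
    so the two conversion factors cancel.
    """
    return power_mw * gflops_per_watt


# Figures quoted in the post above.
factor = improvement_factor(3.0, 65.0)
yearly = annual_growth(factor, 10)

print(f"overall gain: {factor:.1f}x")
print(f"average gain per year: {(yearly - 1) * 100:.0f}%")

# Hypothetical: the same 20 MW budget at the old and new efficiency.
print(f"20 MW at 3 Gflops/W:  {sustained_pflops(20, 3):.0f} Pflops")
print(f"20 MW at 65 Gflops/W: {sustained_pflops(20, 65):.0f} Pflops")
```

The quoted figures work out to a bit under 22x overall, or roughly 36% better efficiency per year when compounded over ten years, which is why an old system at the same power draw is such a poor deal.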

NOBODY CARES ABOUT LOW POWER DRAW

I do. My Studio saves me ~$20 a month in electric bills compared to my iMac Pro.

You know where apple could use performance/watt to some benefit? If they went into making something like a Synology. It would be StoragePod or something like that. Could be a Home Hub, but also provide Time Machine storage. Give you your own private iCloud on your hardware with limitless iCloud@home Photos. Put in some Wifi AP stuff in there.

Would be a hit, and there, the low power serves a function as people would keep it on 24/7...

Of course it's so practical there is zero chance they would do something like that.

The old Time Capsule essentially did this. If they ever make another one, perhaps they could ventilate it this time...

I do. My Studio saves me ~$20 a month in electric bills compared to my iMac Pro.

So in 3 years you can put that saving of $720.00 towards a new Studio M3 or M4, whereas I will upgrade my CPU, memory and GFX card in my workstation and save a lot more than the new maxed-out M4 Studio will cost.

Power efficiency is not a priority in the workplace. Workflow is the priority: 12 months in, I can improve my workflow with upgrades and you can't. After 3 years my workflow could be 50% more efficient than yours, covering any energy costs.

For notebooks (long battery life), Mac minis and Studios for home use, Apple Silicon based machines are perfect, as long as you buy one that will do all you want for many years. If not, after 18 months you might wish you had not purchased the base model, as no upgrades are available.

A pro machine needs to be upgradable, and the new Mac Pro Studio 8.1 is not a true workstation. Power efficiency plays no part in the workstation environment. Workflow is king.
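For anyone wanting to sanity-check the electricity numbers being traded here, a small Python sketch follows. The $20 a month and the 3-year horizon come from the posts; the electricity price and the assumption that the machine runs 24/7 are NOT from the thread and are placeholders to swap for your own values.

```python
MONTHLY_SAVING_USD = 20.0   # from the post
MONTHS = 36                 # "3 years" from the reply
PRICE_PER_KWH_USD = 0.15    # ASSUMPTION: not stated anywhere in the thread
HOURS_PER_MONTH = 730.0     # ASSUMPTION: machine on 24/7 (8760 h / 12)


def total_saving(monthly_usd, months):
    """Money saved over the whole period."""
    return monthly_usd * months


def kwh_per_month(monthly_usd, price_per_kwh):
    """Energy that the monthly saving corresponds to."""
    return monthly_usd / price_per_kwh


def average_watts(kwh, hours):
    """Average difference in power draw implied by that energy."""
    return kwh * 1000.0 / hours


saving = total_saving(MONTHLY_SAVING_USD, MONTHS)
energy = kwh_per_month(MONTHLY_SAVING_USD, PRICE_PER_KWH_USD)
watts = average_watts(energy, HOURS_PER_MONTH)

print(f"saving over {MONTHS} months: ${saving:.2f}")
print(f"energy saved per month: {energy:.1f} kWh")
print(f"implied average difference in draw: {watts:.0f} W")
```

With those assumed inputs, $20 a month corresponds to an average difference of a bit over 180 W around the clock. If the machine is only on for part of the day, the implied difference in draw while it is running would have to be larger, so the result is quite sensitive to both assumptions.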

I will upgrade my CPU, memory and GFX card in my workstation and save a lot more than the new maxed-out M4 Studio will cost. ... Power efficiency is not a priority in the workplace.

If that's your thing, great. Not interested. Power may not be a concern when someone else is paying the bill.

A pro machine needs to be upgradable

That just is not how real professionals work. They purchase or lease machines with support. No one who is getting paid to use a computer can afford to sit around while they diagnose a problem with a machine. That is someone else’s problem.

Slightly out of context but applies as well to upgrades.

This is still a segment where computing power is more valuable than energy efficiency.

When you move upwards to HPC systems, energy efficiency rules. The fastest systems on the Top500 list use the most power, more than 20 MW. At the same time they are among the most energy efficient. Energy efficiency has improved more than 20 times in the last 10 years: in 2013 the best one got 3 Gflops/W, and today that has increased to 65 Gflops/W. No one in their right mind buys an old HPC system; the electricity bill is horrific.

Data centers tend to look for ways to cut down on overhead, one of which is power consumption via performance per watt. This is especially important in locations with very high $/kWh.

A hope I had for Apple was for them to enter the server space with Apple Silicon. The power savings it brings would be enticing.

You know where apple could use performance/watt to some benefit? If they went into making something like a Synology. It would be StoragePod or something like that. Could be a Home Hub, but also provide Time Machine storage. Give you your own private iCloud on your hardware with limitless iCloud@home Photos. Put in some Wifi AP stuff in there.

Would be a hit, and there, the low power serves a function as people would keep it on 24/7...

Of course it's so practical there is zero chance they would do something like that.

Absolutely, it would be an easy buy over a Synology… except it would be APFS rather than Btrfs, and it wouldn’t let you use it directly as a cloud solution; you’d have to rent an equivalent amount of iCloud+ storage because it would just be an iCloud device, etc.

Slightly out of context but applies as well to upgrades.

Absolutely. None of the companies with whom I work buys third party RAM or does CPU/GPU upgrades over the life of a machine. What good would AppleCare+ be if in order to use it one needed to first remove the third party RAM? While adding RAM would not void the coverage, Apple requires it be removed before service. Just not worth the hassle/risk.

Well, I would say those companies need to employ an IT tech who could save them a fortune in upgrades. Paying through the nose for upgrades is not the cheapest way forward. Plus, removing upgraded RAM or a GPU along with storage is not rocket science in a Mac Pro 7.1.

Yes, AppleCare might want the machine back for service in its original purchased form, but if you use internal RAID storage you would not want any personal data files going back to Apple anyway.

Even with the new 8.1 Studio Pro with PCIe available, you would have to remove any onboard storage, as I don't see any options for storage devices from Apple. Maybe a card from a 3rd party supplier like HighPoint would have to be removed.

Perhaps that is another reason Apple changed the format of the Mac Studio Pro so you can't add 3rd party RAM or GPU devices: you have to buy a whole new machine if you require more RAM or built-in storage from now on.

I was watching this Jan 25, 2019 video on the then rumored Sony PS5 using AMD APUs that shipped 22 months later.

1st link starts at the beginning

The first part talked about the low cost early gen Sony transistor radio and TV. When these were 1st introduced they were of lower quality than their vacuum tube counterparts. What made Sony succeed with those products was that they were "good enough" for most budget-limited persons and not "perfect". Those vacuum tube radios and TVs are now largely defunct, making way for improvements in transistor radios and TVs.

For the past decade this has been repeating with chips: "good enough" APUs & SoCs vs "perfect" x86 CPUs and dGPUs. What got my attention was when the narrator mentioned what will happen by 2023.

2nd link jumps to that segment

This is why I was saying that before 2030 Apple's Ultra/Extreme chips will likely outperform desktop x86 & desktop dGPU in terms of raw performance at a better performance per watt. Then the Pro/Max chips, and maybe even the M chips, will follow within the 2030s.

Intel is proposing x86S, a reduced x86 that removes obsolete legacy tech. This would negatively impact those dependent on legacy software and peripherals.

At some point Apple will have to design a chip that is allowed to use more power as nobody seems to care about performance / watt on desktop computers.

At some point these desktop chips are not going to be able to take the shortcut of increasing heat to increase performance. All the heatsink technology in the world won't prevent them from hitting a thermal wall. They will have to reengineer, and Apple is well ahead in that game.

At some point these desktop chips are not going to be able to take the shortcut of increasing heat to increase performance. All the heatsink technology in the world won't prevent them from hitting a thermal wall. They will have to reengineer, and Apple is well ahead in that game.

Apple's limited to the Mac segment of the whole PC market.

But wait... the largest ARM SoC brand is entering the PC market, wholly focusing on the Windows side.

AMD, Nvidia and Intel should be very afraid of Qualcomm. They will 1st go after laptops. This is ~80% of all PCs shipped. When they have excess capacity they will then delve into SFF PCs.

Data centers tend to look for ways to cut down on overhead. One of which is power consumption via performance per watt. This is especially important in locations with very high $/kWh.

A hope I had for Apple was for them to enter the server space with Apple Silicon. The power savings it brings would be enticing.

Yes, but it is a hard area to enter; it is so much more than CPU efficiency. Interconnect, coprocessors, storage and, not least, how well it handles the type of calculation being made. It also must run very well on Linux. Usually it is a question of how much data the system can crunch per hour for X M$, including overhead.

I am not sure Apple is ready to invest enough resources to enter this segment; it is a new and different area for them. They would also need to develop another level of support.
