I have used multiple AI pipelines for months.

Training: A100 in the cloud; H100 provides more flexibility for clustering and micro-GPU granularity. Apple is nonexistent in this space; I wonder if Apple will ever compete in it.

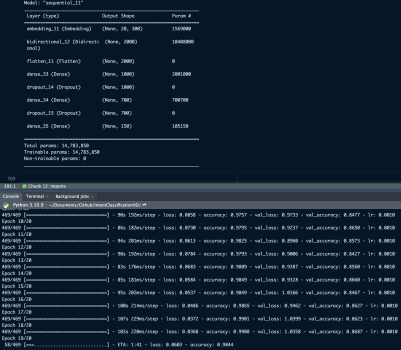

Inference: I want to spend as little money as possible on running inference in the cloud, and inference is where I spend most of my time once I have trained models ready for action. This is the most frustrating part of my workflow on an NVIDIA 4090 or 3090. They are very unstable: I need to limit power to 70% and underclock by 20-25%. They also run out of memory often; 24 GB is a joke these days. I have 128-256 GB of system RAM available, which the GPU can't use.
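To put a number on the 24 GB complaint, here is a rough back-of-the-envelope sketch of the memory math. It is only an estimate under stated assumptions: the 13B-parameter fp16 model is a hypothetical example, the 20% `overhead` factor for activations and buffers is a guess, and treating all 64 GB of unified memory as usable by the GPU is optimistic.

```python
GIB = 1024 ** 3  # bytes per GiB


def weights_gib(n_params: float, bytes_per_param: int) -> float:
    """Memory needed for the model weights alone, in GiB."""
    return n_params * bytes_per_param / GIB


def fits(n_params: float, bytes_per_param: int, budget_gib: float,
         overhead: float = 1.2) -> bool:
    """True if weights times a rough overhead factor fit in the budget.

    overhead=1.2 is an assumed 20% allowance for activations and
    buffers; real usage depends on batch size and sequence length.
    """
    return weights_gib(n_params, bytes_per_param) * overhead <= budget_gib


if __name__ == "__main__":
    n = 13e9  # hypothetical 13B-parameter model stored in fp16 (2 bytes each)
    print(f"weights: {weights_gib(n, 2):.1f} GiB")
    print("fits in 24 GB of VRAM:", fits(n, 2, 24))
    print("fits in 64 GB unified memory:", fits(n, 2, 64))
```

Under these assumptions the weights alone already exceed a 24 GB card, while the same model leaves plenty of headroom in 64 GB of unified memory.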

I was forced to convert some of my models to Core ML. It was a joke a year ago, but Apple finally woke up, and it has been pretty decent in recent months. My 16" M1 Max MacBook Pro with 64 GB of unified memory sometimes performs better. In some cases, it's my only option.
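For readers who have not done the Core ML conversion, a minimal sketch of what it can look like for a PyTorch model using `coremltools` follows. This is not the exact pipeline described above: it assumes the model is traceable with `torch.jit.trace`, and the helper names (`coreml_output_path`, `convert_to_coreml`), the input name `"input"`, and the `.mlpackage` naming scheme are all made up for illustration.

```python
from pathlib import Path


def coreml_output_path(checkpoint_path: str) -> str:
    """Map e.g. 'foo.pt' to 'foo.mlpackage' (naming scheme is an assumption)."""
    return str(Path(checkpoint_path).with_suffix(".mlpackage"))


def convert_to_coreml(model, example_input, checkpoint_path: str) -> str:
    """Trace a PyTorch model and save it as a Core ML ML Program.

    Imports are done lazily so this file can be imported on machines
    that do not have torch or coremltools installed.
    """
    import torch
    import coremltools as ct

    model.eval()  # disable dropout / batch-norm updates before tracing
    traced = torch.jit.trace(model, example_input)

    mlmodel = ct.convert(
        traced,
        inputs=[ct.TensorType(name="input", shape=example_input.shape)],
        convert_to="mlprogram",
        compute_units=ct.ComputeUnit.ALL,  # let Core ML choose CPU / GPU / ANE
    )

    out_path = coreml_output_path(checkpoint_path)
    mlmodel.save(out_path)
    return out_path
```

Models with data-dependent control flow or unsupported ops usually need extra work before a trace like this converts cleanly.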

Yes, I have an extra step to convert my models trained on the A100, and I had to build a wrapper around the existing code base to support Apple Silicon. In the end, I have the best of both worlds. I could spend 5K on an A5000, but it's like jets taking off in my backyard, not to mention the power draw and stability issues.
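The wrapper itself isn't shown here, so the following is only one possible shape for it: a small backend selector that lets the same inference code run on CUDA, on Apple Silicon through PyTorch's MPS backend, or on CPU. It assumes a PyTorch code base and a checkpoint that stores a plain `state_dict`; a wrapper that dispatches to Core ML instead would look different. All function names are hypothetical.

```python
def pick_device(cuda_available: bool, mps_available: bool) -> str:
    """Prefer CUDA, then Apple's MPS backend, then fall back to CPU."""
    if cuda_available:
        return "cuda"
    if mps_available:
        return "mps"
    return "cpu"


def detect_device() -> str:
    """Probe the local machine; returns 'cpu' if torch is not installed."""
    try:
        import torch
    except ImportError:
        return "cpu"
    mps = getattr(torch.backends, "mps", None)  # absent in older torch builds
    mps_ok = bool(mps is not None and mps.is_available())
    return pick_device(torch.cuda.is_available(), mps_ok)


def load_for_inference(model, checkpoint_path: str):
    """Load weights trained elsewhere (e.g. on a cloud GPU) onto the local device.

    Assumes the checkpoint file contains a state_dict for `model`.
    """
    import torch

    device = detect_device()
    # Load onto CPU first so a CUDA-saved checkpoint opens on any machine.
    state = torch.load(checkpoint_path, map_location="cpu")
    model.load_state_dict(state)
    return model.to(device).eval(), device
```

Keeping the selection logic in a pure function like `pick_device` makes it easy to check without any GPU present.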