Gaming GPU vs. workstation GPU: gaming GPUs are great for gaming but don't do as well at computation, while workstation GPUs do better at computation but aren't as good for gaming.

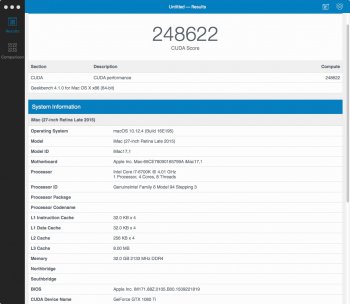

My D700 and my wife's GTX 680 are both in the 3.1-3.5 TFLOPS range. My D700 kills her GTX 680 in compute benchmarks like OpenCL, while her GTX 680 wins in rendering benchmarks like Valley.
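For reference, TFLOPS figures like these are theoretical FP32 peaks that can be reproduced from shader count and clock speed. Below is a minimal sketch, assuming the commonly published reference specs (2048 stream processors at 850 MHz for the D700, 1536 CUDA cores at a 1006 MHz base clock for the GTX 680, and 2048 stream processors at a 1206 MHz boost clock for the RX 470) and 2 FLOPs per shader per clock from one fused multiply-add. The function name `peak_tflops` is just for illustration.

```python
# Theoretical peak FP32 throughput:
#   shader units x clock (Hz) x FLOPs per shader per clock (2 for one fused multiply-add)
def peak_tflops(shader_units: int, clock_mhz: float, flops_per_clock: int = 2) -> float:
    return shader_units * clock_mhz * 1e6 * flops_per_clock / 1e12


# Assumed reference specs: (shader units, clock in MHz)
GPUS = {
    "FirePro D700": (2048, 850),
    "GTX 680": (1536, 1006),
    "RX 470": (2048, 1206),
}

if __name__ == "__main__":
    for name, (units, mhz) in GPUS.items():
        print(f"{name}: {peak_tflops(units, mhz):.2f} TFLOPS")
    # FirePro D700: 3.48 TFLOPS
    # GTX 680: 3.09 TFLOPS
    # RX 470: 4.94 TFLOPS
```

This number is a ceiling set by the hardware, not a prediction of how fast any particular benchmark will run.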

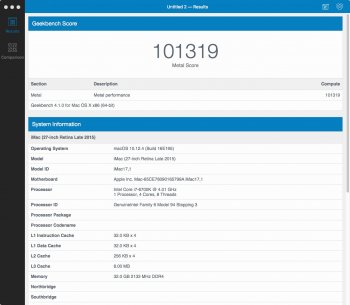

This is an incorrect assessment of the situation. TFLOPS is a measure of raw computational throughput: if the RX 470 has more TFLOPS than the D700s, then it has more raw computational horsepower. However, that raw throughput is very rarely the bottleneck in benchmarks, whether OpenCL or OpenGL/Metal; other factors, such as memory bandwidth and how well the code is tuned for the architecture, usually matter more.

Many OpenCL benchmarks were written or tuned for AMD's architecture, so they run very inefficiently on NVIDIA's, which is fundamentally different. Most compute code written or tuned for NVIDIA uses CUDA instead, because it exposes more of the underlying architecture to the application. A few OpenCL benchmarks, such as Oceanwave and a face recognition test, run much faster on NVIDIA than on AMD, but again, that is probably because they were developed on NVIDIA hardware and so carry an implicit bias toward that architecture.
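One common way to see why peak TFLOPS alone doesn't predict benchmark results is the roofline model: a kernel's attainable throughput is capped by either peak compute or memory bandwidth times its arithmetic intensity (FLOPs per byte moved to and from memory), whichever is lower. The sketch below uses two hypothetical cards with made-up figures, one with more TFLOPS and one with more bandwidth. It only captures the bandwidth side of the argument, not the architecture-specific tuning effects described above.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gb_s: float, intensity: float) -> float:
    """Roofline bound: throughput can't exceed peak compute, nor
    memory bandwidth (GB/s) x arithmetic intensity (FLOPs per byte)."""
    return min(peak_gflops, bandwidth_gb_s * intensity)


# Hypothetical cards (illustrative numbers only): (peak GFLOPS, memory bandwidth GB/s)
CARD_A = (5000.0, 200.0)  # more raw TFLOPS, less bandwidth
CARD_B = (3500.0, 260.0)  # fewer TFLOPS, more bandwidth

if __name__ == "__main__":
    for intensity in (0.5, 4.0, 32.0):
        a = attainable_gflops(*CARD_A, intensity)
        b = attainable_gflops(*CARD_B, intensity)
        print(f"{intensity:5.1f} FLOP/byte: A={a:7.1f}  B={b:7.1f} GFLOPS")
```

At low arithmetic intensity (memory-bound code), card B comes out ahead despite its lower peak; only once a kernel does enough work per byte does card A's extra TFLOPS show up.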

As always, it really just boils down to the applications you want to run. If you care about LuxMark, then buy an AMD card. If you care about DaVinci Resolve, then buy an NVIDIA card.