Lou,

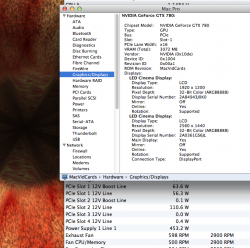

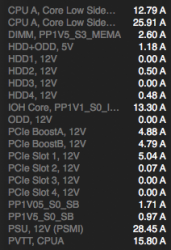

Count me in the MVC fan club too; I have sung their praises multiple times. I'm also using a 6+8 pin card.

That being said, I like to be an informed buyer. I'd much rather hear the whole story and make my own decision.

- Some people here make a living off of their MP and perhaps have a much lower tolerance for risk than, for example, a gamer. They might not want an out-of-spec solution at all.

- Some people might want the card but won't balk at spending $40 to add a second power supply just to be safe.

- And others, like myself, might be completely comfortable simply running 6+8 cards directly.

The bottom line is that everybody has different preferences, priorities, and comfort levels. It is helpful, therefore, to hear all sides of the story and make an informed decision.