I have a 4 GB GTX 1050 Ti, and normal RAM usage on the video card is 40-60% with 3x4K and two programs that use the GPU regularly. It was actually difficult picking a GPU to support 3x4K, as GPU specs are a bit vague about what they can support, though it's pretty obvious that you're not going to run 3x4K when there are only 2 video outs. I suspect that I got something that's pretty close to the minimum card. Getting a more expensive card is no guarantee of support either.

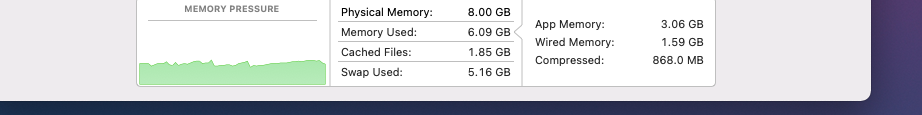

In general, there's no downside to more RAM.

How are you monitoring the video card RAM usage? What are the programs that exercise the GPU? (And I've suffered the same experience of trying to figure out what a card would support - I probably overbought my GPU and would only ever use its 4 GB of dedicated RAM if I took up serious gaming, which is not my thing; now it sits in a drawer because nvidia and I don't notice any difference.)

But your numbers really support my point: you're running a pretty maximal screen setup - three 4K monitors - plus some specialised programs, and on average you're ONLY using about 2 GB of video card memory (40-60% of 4 GB).
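For what it's worth, the arithmetic behind that "about 2 GB" figure is easy to check. Here's a quick sketch in Python, assuming the 4 GB card and the 40-60% range quoted above. The framebuffer estimate is my own simplification (3840x2160, 4 bytes per pixel, one buffer per screen), not something anyone measured, and the function names are just made up for illustration:

```python
# Rough VRAM arithmetic for the numbers quoted in this thread.
MIB = 1024 ** 2

def used_gib(total_gib, fraction):
    """VRAM in use, in GiB, for a given utilisation fraction."""
    return total_gib * fraction

def framebuffer_mib(width, height, bytes_per_pixel=4, screens=1):
    """Size of one uncompressed buffer per screen, in MiB (assumes 32-bit colour)."""
    return width * height * bytes_per_pixel * screens / MIB

low, high = used_gib(4, 0.40), used_gib(4, 0.60)
print(f"usage range: {low:.1f}-{high:.1f} GiB, midpoint {(low + high) / 2:.1f} GiB")
print(f"3x4K framebuffers: {framebuffer_mib(3840, 2160, screens=3):.0f} MiB")
```

Under those assumptions the raw screen buffers for three 4K monitors come to roughly 95 MiB. Even allowing a few buffers per screen, most of that ~2 GB would be whatever the compositor and the two GPU programs allocate, not the screens themselves.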

Given that the MacBook Air only supports the internal display and one external monitor (according to Apple's specs), the amount of video memory 'saved' is going to be nothing like the 8 GB of dedicated memory the other user had - again, except maybe in some exceptional use cases. (Or, at a guess, his 8 GB dedicated video card was probably way more than he needed for video RAM.)

Again, I'm still on the side that, apart from up-front cost, there's no downside to more RAM, and for serious users it's a good idea (within reason). But that doesn't mean everyone who runs even the single (supported) large external monitor will need that extra 8 GB - and a pretty large proportion of laptop users rarely use external screens at all. (Even though I'd still get the extra memory myself.)