The problem with your examples is that you're comparing resampled still frames from videos. Resampling an image, or grabbing frame captures from the same video converted to different formats, will always produce a different hash, because pixels get dropped and pixels get created. So I don't see how comparing file hashes is a meaningful comparison. That holds even if you don't use any sort of interpolation: you're still going to see very slight pixel-level changes from frame to frame.
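To make the hash point concrete, here's a minimal sketch using only Python's standard `hashlib`. The 4x4 grayscale "frame" and the helper names `sha256_hex` and `count_differing_bytes` are made up for illustration, not taken from any real tool. It shows that nudging a single pixel by one level gives a completely different hash, while a direct pixel comparison tells you only one pixel changed.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of a byte buffer as a hex string."""
    return hashlib.sha256(data).hexdigest()


def count_differing_bytes(a: bytes, b: bytes) -> int:
    """Count positions where two equal-length buffers differ."""
    if len(a) != len(b):
        raise ValueError("buffers must be the same length")
    return sum(x != y for x, y in zip(a, b))


# Hypothetical 4x4 grayscale frame: 16 bytes, one per pixel, all mid-gray.
frame_a = bytes([128] * 16)

# The same frame with one pixel nudged by a single level,
# the kind of change a resample or re-encode could introduce.
tweaked = bytearray(frame_a)
tweaked[5] = 129
frame_b = bytes(tweaked)

# The hashes only say "not identical"; they give no idea how different.
print("hashes match:", sha256_hex(frame_a) == sha256_hex(frame_b))
# The pixel comparison says how many pixels actually changed.
print("pixels changed:", count_differing_bytes(frame_a, frame_b))
```

A hash can only ever answer "byte-for-byte identical or not", so it can't tell a one-pixel rounding difference apart from a completely different image.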

I know there are forensics tools that can do image comparison, but are you familiar with Python at all? You could go a step further and use this guy's extremely simple script for physically pinpointing all of the differences between two static images.

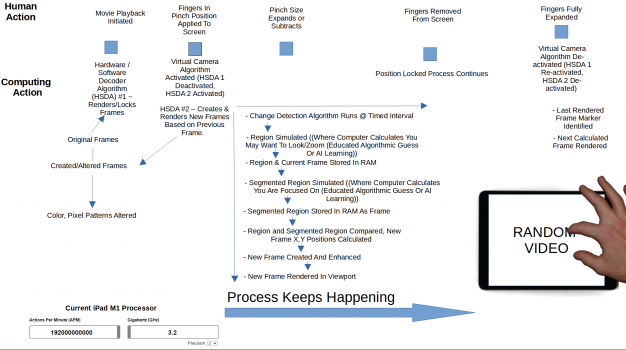
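If you want a feel for how that kind of comparison works, here's a rough sketch of the idea. To be clear, this is not the script mentioned above, just my own stripped-down stand-in, and it assumes both images are already loaded as same-sized grids of grayscale values (plain lists of lists here, so it runs without any imaging library). The function name `diff_bounding_box` and the `threshold` parameter are my own inventions.

```python
from typing import List, Optional, Tuple

Grid = List[List[int]]  # rows of grayscale pixel values, 0-255


def diff_bounding_box(
    a: Grid, b: Grid, threshold: int = 0
) -> Optional[Tuple[int, int, int, int]]:
    """Return (left, top, right, bottom), inclusive, around every pixel
    whose absolute difference exceeds `threshold`, or None if none do."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("images must have the same dimensions")

    # Collect the (x, y) coordinates of every pixel that changed.
    changed = [
        (x, y)
        for y, (row_a, row_b) in enumerate(zip(a, b))
        for x, (pa, pb) in enumerate(zip(row_a, row_b))
        if abs(pa - pb) > threshold
    ]
    if not changed:
        return None

    xs = [x for x, _ in changed]
    ys = [y for _, y in changed]
    return (min(xs), min(ys), max(xs), max(ys))


# Hypothetical 5-wide, 4-tall images: all black, then two pixels altered.
img_a = [[0] * 5 for _ in range(4)]
img_b = [row[:] for row in img_a]
img_b[1][1] = 50   # pixel at x=1, y=1
img_b[2][3] = 200  # pixel at x=3, y=2

print(diff_bounding_box(img_a, img_b))
print(diff_bounding_box(img_a, img_b, threshold=100))
```

Raising `threshold` ignores small differences such as compression noise, so only the larger changes get boxed. Real tools usually go further and box each changed region separately instead of drawing one box around everything.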

Still, even if you compared a thousand frames, it wouldn't answer the question about pinch-to-zoom, upscaling/downscaling, and whether an iPad is capable of that level of motion interpolation in real time. Everything I've learned in 30 years tells me it's not possible with the current iPad processor, so it's doing something else entirely.

You could create an experiment where you have at least two iPads, one of them a control. Put them both under a microscope, devise a method to play a video whilst continuously performing some mechanically duplicated pinching gestures, record the changes to the pixels under the microscope frame by frame, and then compare the results. Either that or Apple can just tell everyone what's going on under the hood.

I don't think an iPad has the processing power to do real-time motion interpolation in video WHILE zooming in and out.