Yep. I expect at some point Apple will switch to a hybrid multi-slot, but they won't remove the ability to read SD.

I expect CFExpress to become more popular in mid to high-end cameras in the next few years, but it still has relatively low penetration in consumer cameras as a whole. I know there are those who think SD cards are obsolete simply because they don't personally use them, but SD is still *far* more widespread (and used in more device types) than CFExpress.

Sony has taken an interesting approach with their hybrid SD/CFe Type-A readers, but they seem to be in a minority because CFExpress Type B looks set to become the standard. AFAIK, there are no hybrid readers that support SD and CFe Type B, so at some point there might be a switch to CFe Type B-only readers on Macs. I think it will take a long time though (5+ years).

I'm not against CFExpress at all - it's a better standard, and at the high end is now cheaper than SDXC UHS-II/III. However, SDXC, even in UHS-I form, is good enough for a lot of people who just want to take some photos, record audio, or take FHD video. It's like USB-A - extremely widespread and good enough for the job, even if there are faster USB-C devices.


M1 Max MBP has all these unnecessary ports….WTF?!

- Thread starter AdonisSMU


> The assertion by folks that "we need HDMI 2.1" doesn't match my experience at all. Maybe in video production houses / TV studios with high-end AV gear...but not in typical western corporate offices.

Huh... Imagine that! People using Macs for professional video production. That's something new! Unheard of! Never happened before!

I'll admit that HDMI 2.1 is still very new, and I've only seen it in TVs so far. BUT, HDMI 2.1 is the only way to get full 4K60 10-bit 4:4:4 through HDMI. For that reason alone, it's very practical right now. In addition, we now have 8K video cameras, which means 8K video production, and how to view that video? There are no 8K DisplayPort monitors I know of; and even if there were, people would want to view the video on televisions... and there are no 8K TVs with 8K DisplayPort inputs, only HDMI 2.1. So if HDMI 2.1 isn't in the 2022 MBP, there will be stronger demand by 2023 or 2024.

In short, I think HDMI 2.1 in a Mac is only a matter of "when," and not "if." I can buy a PC and slap in an AMD or Nvidia card and drive 8K via HDMI 2.1, and that's not possible with a Mac... something that people in Cupertino are surely aware of.


> Absolutely! Pretty much every monitor I have come across in corporate offices has HDMI and mostly FHD or sometimes QHD monitors, plus just about every conference room with a large flat-screen TV or projector has an HDMI cable.
>
> I have only rarely seen monitors with DisplayPort over USB-C or Thunderbolt in corporate offices, and I'm talking about large banks, utilities, and IT companies, not small to medium enterprises. I don't recall ever using a 4K monitor, but have seen a couple of 4K TVs in meeting rooms.
>
> The assertion by folks that "we need HDMI 2.1" doesn't match my experience at all. Maybe in video production houses / TV studios with high-end AV gear...but not in typical western corporate offices.

I work in a western office, and we do video work... I think you'd be amazed how many businesses do work on video shooting, editing, transferring.... And it's not even just "high end"; many YouTubers can make use of it. I mean.. that statement is just SO uneducated it hurts to read.

I have spent years doing IT in an advertising agency that grew from 200 to a few thousand people, then years of IT consulting for multiple small/medium businesses at once, and I now work in IT for a company that does warehouse robotics management. They have done video production work, used Thunderbolt/USB-C for scratch disk storage and external fiber cable connections, and used 4K screens for video and photo editing and reviewing.

I don't know HOW you managed to somehow work IT in all those fields and NOT see 4K screens being used or video being shot. Have you only ever worked entry-level helpdesk ticketing or something?

My statement is "uneducated"? I don't think so. If you read my post carefully, you will see that I clearly state that *my personal experience* (as an IT professional) is that I very, very rarely see 4K monitors in corporate offices. My experience is my "education" - but of course other people's experience will differ.

Of course I understand that some (or even most?) large organizations may have *some people* who are involved in "high-end" video production, possibly requiring the use of color-correct 4K (or greater) monitors that support high frame rates. It's also just as likely that large corporations outsource their video work, particularly if video has nothing to do with their business.

You say that you have "spent years doing IT in an advertising agency" - and that is the fundamental difference. Advertising agencies are media focused and are not what I would call typical corporate office users, such as financial services, government, public service, utilities and so forth.

Even within your organization, how many people are actually involved in video editing? The admin staff, the copywriters? Do they all need 4K screens with HDMI 2.1? I really doubt that, unless the company gets a very good deal on bulk purchases. Why buy expensive monitors for employees who only need to work in office productivity apps? Makes no financial sense for a business.

Note that I mentioned "high-end" video production, which is probably something you are used to in the advertising world. Many corporate videos (and I've been involved in making a few) do not require or use high-end capture or editing gear. Quite a few videos (tech training etc.) are made using web-cams and screen capture. The better ones are captured with modest DSLR/mirrorless/video cameras (1080p or 4K), and there is absolutely no need for HDMI 2.1 120Hz monitors to edit these.

I don't even see the need for it myself, and I've got some nice cameras (Blackmagic and Panasonic) and edit in DaVinci Resolve Studio and FCPX. My monitors? A 10-year-old Dell 1080p and another QHD (2560x1440) screen, and they are just fine for editing 4K video. Would they do for cinematic release color grading? No...but then that is not my use case.

I would bet that if you took a random sample of Fortune 500 companies and toured their offices, you would not find many monitors requiring HDMI 2.1 - I would be astonished if it were more than a fraction of a percent. BTW, my company (an AWS partner) is just moving to new offices. Monitors? One or two 1080p (22-24") per desk... Even when I worked at Amazon Web Services, I didn't get a 4K monitor (although I had quite a nice Samsung wide screen), and I saw very few 4K screens anywhere - and Amazon is not short of money!

Your response sounds "uneducated" about the "real world" to me.


OK - I understand that in the professional video editing world, you do need a capability to edit 4K60 10-bit 4:4:4. But...you already can do that via the TB4 ports.

My post was more about what kind of monitors are commonly found in corporate offices.

My understanding is that the HDMI port is intended for "normal boring corporate usage"™ - for connecting to the usual FHD and QHD monitors and projectors that you find in lots of offices. I don't think Apple intended it to be the primary video output for high-end video editing screens.

Sure, as HDMI standards improve and new screens adopt it, the situation will change. For now, there will be a small number of people who want to directly connect from the HDMI port to an HDMI 2.1 screen. Presumably you can just buy a cable or an adaptor for one of the TB ports, such as this one:

Cable Matters 48Gbps USB C to HDMI 2.1 Adapter, Support 8K 60Hz / 4K 240Hz HDR, Thunderbolt 4 to HDMI 2.1, HDMI 2.1 to USB C Adapter, Compatible with iPhone 16/15 - Max Resolution on Mac is 4K@60Hz


It's the same as people who whinge about the SD-card slot, saying that this is not a professional standard. Yes, a lot of pro photographers are using CFExpress/XQD and cinema cameras are using CFast 2.0, Arri, Red etc...but it's a tiny number of people compared to the millions who have cameras with SD cards. Apple is selling to a mass market, and simply configures their machines for the majority of buyers.

Again, if you need high resolution at 60fps in 10-bit 4:4:4, I think TB3/4 has you covered, right?

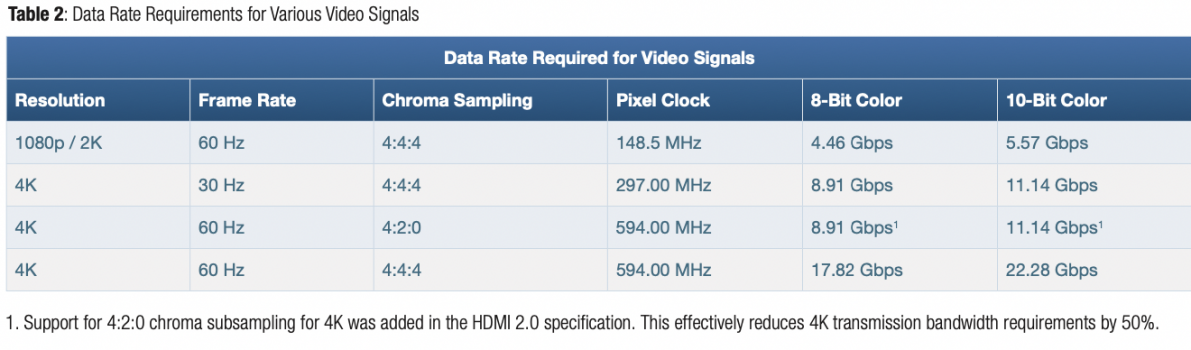

I think HDMI 2.0 tops out at 18Gbps, so it isn't good enough for 4K, 60Hz, 4:4:4, 10-bit, but it looks like TB3/4 DisplayPort (1.3/1.4?) has no problem with 22.28Gbps. At 120Hz? No, it won't, because HDMI 2.1 needs up to 48Gbps, which may well be why the MacBook Pro and Studio don't support HDMI 2.1 if the underlying video buses are limited to 40Gbps (by PCIe or Thunderbolt limits).


As an Amazon Associate, MacRumors earns a commission from qualifying purchases made through links in this post.

You made a few mistakes -- it's understandable, you probably don't work with this stuff.

First... yes, you can use DisplayPort to get greater than 4K60 8-bit color... except that no television I know of has DisplayPort. LG, Sony and Samsung don't. So if you want to view your 8K video on a large display... it cannot be done, unless you use a Windows PC.

Second... that Cable Matters cable adapter won't even deliver 4K60 HDMI on Macs, so it's useless. I wasn't aware that DP Alt Mode formally supports HDMI rates above 18 Gbps, so I don't know how it works under Windows, but I'm a Mac user.

Third... just because HDMI 2.1 *CAN* run at 48 Gbps, it doesn't mean it *HAS TO*. If a vendor only wants to support 4K60 with 10-bit, they can just implement 6 Gig PHYs and that's all. Or they can implement 4K120 uncompressed by using 8 Gig PHYs, for 32 Gbps, or (more likely) use 10 Gig PHYs (40 Gbps) to get 4K120 with 10-bit color. I can't for the life of me understand why so many people think that HDMI 2.1 products need to implement every single feature of HDMI 2.1, because no display currently can support both 4K120 and 8K60. Even at 8K, displays are using 40 Gbps because the panels are 10-bit, not 12-bit. So there's no point in driving the full 48 Gbps to a 10-bit panel. Anyway... the takeaway is that 8K60 10-bit color requires 40 Gbps and so does 4K120 4:4:4 10-bit. So almost nobody is going up to the full 48 Gbps speed right now.

Fourth... your chart is incorrect. The data rate for 4K60 8-bit color is 17.82 Gbps, but the data rate for 4K60 10-bit color in HDMI is 20.05 Gbps.
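For anyone who wants to check the figures in that last point, they can be reproduced with some back-of-the-envelope arithmetic. The sketch below is not from the thread. It assumes the usual 4K60 timing of 4400 x 2250 total pixels including blanking (a 594 MHz pixel clock), 8b/10b overhead for TMDS-style links, and 16b/18b overhead for HDMI 2.1 FRL links. The function names are hypothetical, and the link options are limited to four lanes at the 6, 8, 10 and 12 Gbps per-lane rates discussed above.

```python
# Assumed 4K60 timing totals (3840x2160 active plus blanking).
H_TOTAL, V_TOTAL, REFRESH_HZ = 4400, 2250, 60

# Assumed line-coding overheads.
TMDS_OVERHEAD = 10 / 8   # 8b/10b, TMDS-style links
FRL_OVERHEAD = 18 / 16   # 16b/18b, HDMI 2.1 FRL links


def link_rate_gbps(bits_per_component, overhead, components=3):
    """On-the-wire rate in Gbps for 4:4:4 video at the assumed timing."""
    pixel_clock_hz = H_TOTAL * V_TOTAL * REFRESH_HZ  # 594,000,000
    payload_bps = pixel_clock_hz * bits_per_component * components
    return payload_bps * overhead / 1e9


# Per-lane rates (Gbps) for the four-lane configurations in the post.
FRL_LANE_RATES_GBPS = (6, 8, 10, 12)
FRL_LANES = 4


def smallest_frl_link(required_gbps):
    """Return the smallest four-lane total (Gbps) that covers the requirement."""
    for lane_rate in FRL_LANE_RATES_GBPS:
        total = lane_rate * FRL_LANES
        if total >= required_gbps:
            return total
    return None  # nothing in this simplified table is fast enough


rate_8bit_tmds = link_rate_gbps(8, TMDS_OVERHEAD)
rate_10bit_frl = link_rate_gbps(10, FRL_OVERHEAD)
rate_10bit_tmds_style = link_rate_gbps(10, TMDS_OVERHEAD)

print(f"4K60  8-bit, 8b/10b  : {rate_8bit_tmds:.4f} Gbps")
print(f"4K60 10-bit, 16b/18b : {rate_10bit_frl:.4f} Gbps")
print(f"4K60 10-bit, 8b/10b  : {rate_10bit_tmds_style:.4f} Gbps")
print(f"Smallest 4-lane link for 10-bit: {smallest_frl_link(rate_10bit_frl)} Gbps")
```

Under these assumptions the 8-bit case comes out at 17.82 Gbps and the 10-bit FRL case at about 20.05 Gbps, which agrees with the numbers above and fits within four lanes at 6 Gbps. Applying 8b/10b overhead to 10-bit video gives roughly 22.28 Gbps, which may be where the chart's figure came from.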


Funny to see these folks arguing the 'need' for better HDMI specs or removable storage.

When I worked at NBC Universal and at two ad agencies before that, we had dedicated hardware for video ingest and playback. You are going to have something like a Blackmagic box hooked up with HDMI, SDI, maybe even component output if you have some old-school guy that needs an old Sony monitor or something.

But most pro video production is using SDI, not HDMI.


Thanks - you've provided some useful information. You're right; I do not work in professional video production - I'm very much at the informal hobbyist level, and don't even have a 4K TV or any 4K screens, so none of this actually matters for my personal use.

Somehow we've gone from a discussion about the prevalence of 4K screens (and which of those require HDMI 2.1) in "standard corporate offices" to high-end systems for video production work, but it's an interesting topic in any case.

I can see that there is a gap in Apple's offering, particularly if conversion from DP to HDMI 2.1 is not reliable or possible. If you want to connect to a 4K120 or 8K display via HDMI, you may not be able to on a MacBook Pro or Studio with current configurations.

That said, I don't know how often high-end monitors (not TVs) are going to be using HDMI inputs. I would have thought that DisplayPort was more common? Perhaps not these days?

In any case, there was an argument being made (apparently) that (a) high-end video production needs HDMI 2.1 to allow for 4K@120Hz, and (b) that lots of average corporate users have (or need) 4K displays.

I haven't seen anything on this thread yet that convinces me of either of those - but I am happy to be educated!

And I suppose that Apple must think there isn't enough of a requirement for HDMI 2.1 at present to warrant its inclusion on their machines. I tend to agree.

My impression is that DisplayPort is still the default for monitors and HDMI is the default for TVs and projectors. In the GPUs I've seen recently, 3 DisplayPort outputs and 1 HDMI output seems to be the norm. 2+2 and 3+2 are also somewhat common. The situation is reversed in monitors. Either there is a single input of each type, or there is 1 DP + 2 HDMI. Multiple HDMI inputs seem to be more common in gaming monitors.
