Well, they've only "cancelled" something that had never been announced, promised or (as far as I know) leaked on Geekbench... there are enough leaks to show that they were probably investigating the idea of a 4xMax "extreme" chip, but I don't think any details ever emerged of how far they got (I may be wrong). One would expect that, for every concept that reached production, Apple R&D had "investigated" and abandoned several other concepts. There are plenty of mundane reasons why the "extreme" idea might have been dropped: too difficult to fuse more than 2, too expensive/too low yield to produce, disappointing performance, inefficient use of space (duplicating stuff that they didn't need just to create more GPU cores or PCIe lanes)...

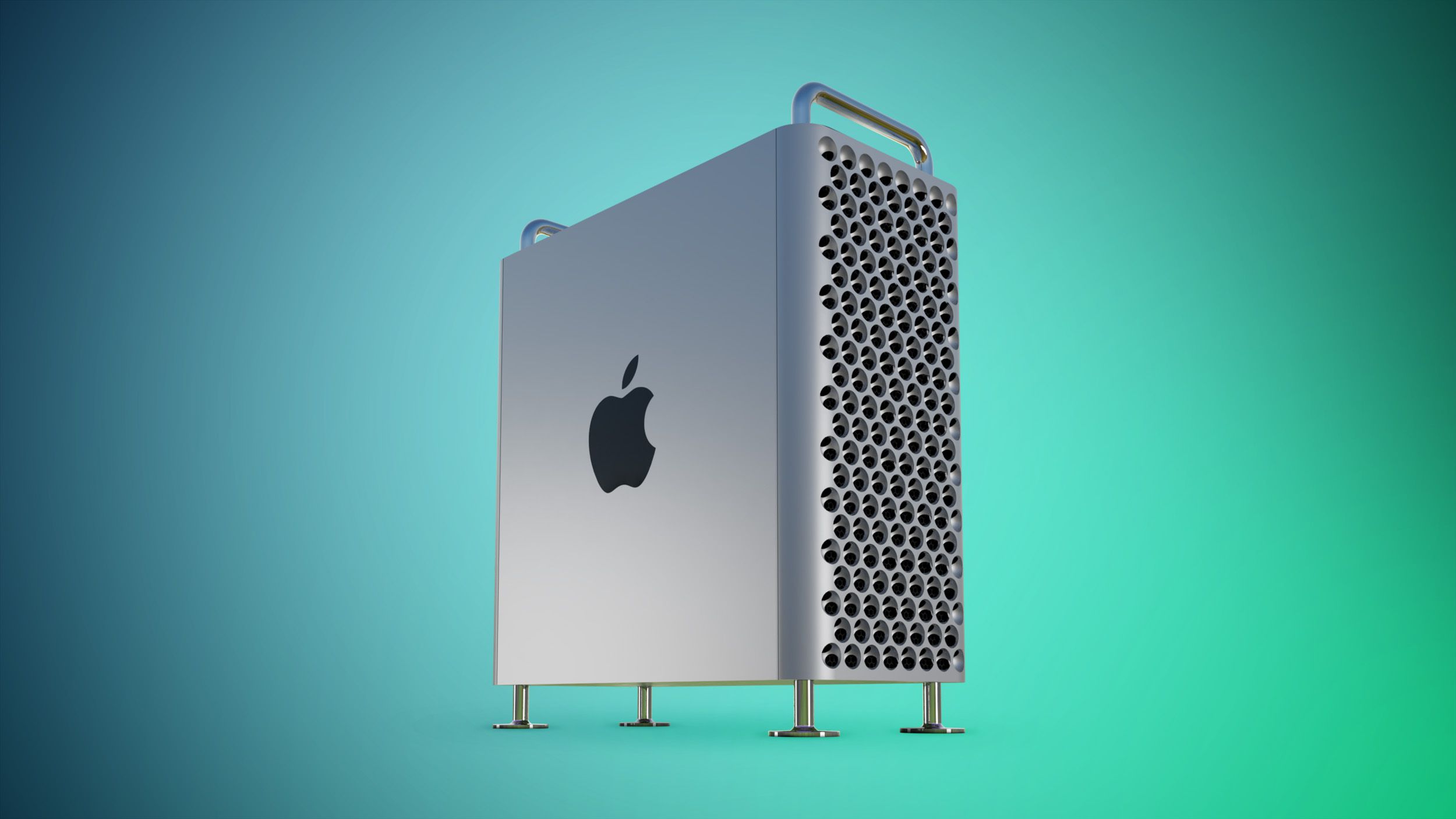

Which is why the current M2 Ultra Mac Pro exists - for a niche of people who need high-bandwidth PCIe for specialist I/O or audio/video cards and/or large internal SSD arrays, but not discrete GPUs or DDR5 RAM, since integrated GPUs and unified memory are integral to the whole Apple Silicon concept.

Also, while they've kept some of the 2019 chassis, the main (practical) Unique Selling Point was the MPX slot system that allowed large PCIe cards like GPUs to get extra power and feed back video to the main Thunderbolt controller without all of the fugly extra cabling that requires in an ATX PC - and that has gone.

People were speculating about this before the somewhat disappointing M2 Ultra Mac Pro appeared, and there was some mumbling about something called a "compute module":

An all-new "compute module" device has been spotted in Apple beta code, hinting that new hardware may soon be on the way. The new "ComputeModule" device class was spotted in Apple's iOS 16.4 developer disk image from the Xcode 14.3 beta by 9to5Mac, indicating that it runs iOS or a variant of it.

www.macrumors.com

(Which I think turned out to be something to do with Vision Pro...?)

...but yeah, a system of plug-in cards with Mx Max/Ultra/Extreme/Ludicrous for a scalable system sounded like a good idea, and a potential good use for the MPX card format - possibly even available as an expansion for the 2019 Pro. But, MPX is gone, and looking at the size of Max and Ultra mainboards in the Studio, MPX cards would be unnecessarily large.

Not sure that they will be bothering to replace the 2023 Mac Pro - or do more than just bump the processor if/when the next Ultra chip is released. One good reason for keeping the 2019 case design would be that the 2023 MP was going to be "end of the line". Its main reason for existing is PCIe expansion, which will likely be a dwindling requirement, especially as Thunderbolt 5/USB4v2 will be significantly increasing the bandwidth available for external devices (including TB-to-PCIe enclosures, which didn't see any improvement from TB4).

Except it isn't any more. Even the 2019 was really "just a Xeon W tower with neater internals" - it got a slight head start over generic PC systems with the extra PCIe and RAM capacity of the then-newish Xeon W but even then could be thrashed on straight performance/RAM/PCIe capacity by systems using multiple Scalable Xeon chips, or on price/performance by AMD systems that didn't carry the "Intel Xeon Tax". At best, it was one of those "perfect if this is exactly what you need" products - in a price bracket where users' needs tended to be increasingly specialised and generic PC hardware offered an unmatched level of choice.

Since 2020, Apple's innovative showpiece has been how much power they can cram into ultrabooks, tablets and small-form-factor devices with long battery lives and quiet cooling. The Apple Silicon GPU really can't compete with NVIDIA's or AMD's finest discrete cards but it thrashes anything else you can fit in an ultrabook and realistically run off battery - esp. with natively optimised software. The core principles of Apple Silicon make it ideal for that - and also let it scale up to pretty powerful machines like the Studio - but they're really not what you want for a high-end workstation to run traditional workflows.

If you really need a "big box o' slots" and you're not software-locked into MacOS then your AMD system is probably the better tool for the job (and probably would already have been in 2019).

There's also the question of "who is going to need a super-powerful, monolithic personal workstation in the near future?" Cloud/thin-client computing (whether it's the "public" cloud or a rack of servers in the basement) seems to be the future - with the ability to "rent" extreme computing and storage capacity as and when you need it. Any current Mac has the legs to be a front end, and something like a Mac Studio (assuming M4 Max/Ultra versions appear) already gives you a ton of local power.

What there has been a rumour of is Apple working on an AI server chip:

Apple is said to be developing its own AI server processor using TSMC's 3nm process, targeting mass production by the second half of 2025. According to a post by the Weibo user known as "Phone Chip Expert," Apple has ambitious plans to design its own artificial intelligence server processor.

www.macrumors.com

...but that's likely to be something rather different (I'd guess closer to the NVIDIA Grace/Hopper - which in turn has a whiff of Apple Silicon re-imagined for AI/High Density Computing) and probably mainly designed to allow Apple to eat their own dogfood for their online services. It may well crop up in something called a "Mac Pro" but that would be a very different beast aimed at a different market. For one thing, the tools used for modern AI and server development/production tend to be Linux/Unix-centric rather than Windows-centric, which is handy, because MacOS is Unix.

There have been "pro" Macs since long before the Mac Pro name existed - probably starting with the Macintosh II - and throughout the late 80s and early 90s they ruled high-end DTP, graphics and the early days of video/multimedia production. The 16-bit DOS PCs of the era simply weren't up to that. Apple's problems really started when PCs not only started playing catchup on the tech, but became ridiculously cheap because of economies of scale. Still, Mac carved out a niche in graphics/DTP/video/audio production which got decimated, but not eliminated, by the rise of the PC.

So, on one front, it would be embarrassing for Apple to walk away. Also, it would have jeopardised future support from the likes of Adobe and other "industry standard" software houses - which now had perfectly viable PC versions of their software... and more generally developers liked their Mac Pros, so even if the Mac Pro wasn't a direct moneyspinner it was strategically important.

I think that's started to wane since ~2010 (of course - that's also when Apple started dropping the ball on the Mac Pro so there's a chicken/egg question there). For one thing - there's the "good enough" problem: in the Good Old Days if you were a developer, if you were any sort of video editor, if you were producing audio or doing a lot of graphics work you needed the power and expandability of the Mac Pro. But laptops, Minis and iMacs have been getting dramatically better in relation to desktop systems - today, there's not a lot you can't do at a basic level on a MacBook Air. The only reason a developer needs a Mac Pro is if the product they're developing needs a Mac Pro to run - and while people actually working in the movie/TV industry still need high-end kit, a Mac Studio will do everything even the fairly serious "prosumer" needs. The fact that the entry level price for the Mac Pro has risen from ~$2500 for the classic Cheesegrater to $7000 for the 2023 M2 Ultra Mac Pro (which only has the same performance as the $4k Studio - and will only outperform an M2 Max MacBook Pro on sufficiently parallel workloads) reflects that it's now a lot more of a "serious callers only" product (again - maybe chicken and egg, but we have to assume that Apple aren't totally stupid and market-research these things).

Interesting that you think that the iMac Pro was supposed to be the new Mac Pro (I totally agree - but haven't had floods of support in that view). My impression was that - when Apple made their famous early-2017 U-turn meeting - they would probably have just started showing the iMac Pro prototypes to key software/hardware developers and were getting severe pushback (the timing seems about right - whatever you think of the iMac Pro it clearly hadn't been kludged together in a hurry, and it would have been in an advanced state of development by then). As I said above, part of the reason for Apple continuing to make high-end Macs is to keep key developers on board, so they probably had to react.

I still think that the iMac Pro (on its own, without a complementary headless alternative) was a mistake and that a lot of "higher end" users have specialised display requirements and simply wouldn't want an all-in-one, however nice the built-in display was. Really, Apple had one job to do after that 2017 press conference: get on the phone to Foxconn, order up a few shipping containers full of Xeon mini-towers in nice aluminium tool-free cases and release an "official Hackintosh" (they wouldn't have called it that, of course, the point is that getting MacOS to run well on generic Intel hardware was not rocket surgery, especially if you were Apple and didn't have to fight the DRM). People who liked the iMac Pro concept would still have bought it, but Apple would have had something to offer the people who probably didn't buy the iMac Pro and either skipped to Windows, built their own Hackintosh or squeezed another year or two out of their old Cheesegraters (which were effectively Hackintoshes by that stage).