Cinema4D is anything but poorly written, trust me.

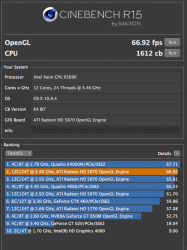

The interesting thing is that on Windows a stronger card also means a better OGL result; just look at http://cbscores.com/index.php?sort=ogl&order=desc. So to my mind, either the graphics card drivers for Mac are poorly written or Mac OS X is the bottleneck. Feels familiar somehow... :-/

I agree with you wholeheartedly that Cinema 4d (C4d) isn't poorly written [and neither is Cinebench]. I've been a C4d user since 1991, when it was called "Fast Ray," running it on my Commodore Amigas. In 1993, Fast Ray became Cinema 4d. I fully appreciate the relationship between the benchmark and the application: the benchmark score is a true indicator of what one should expect in C4d. I also use Cinebench (and Geekbench) to help me outfit and tune my builds. I find nothing distasteful about Maxon's creating a benchmark for users of its software. That Cinebench has since gained wider acceptance is a tribute to Maxon's foresight. The same kind of expansion in use has begun to occur with Otoy's OctaneRender benchmark utility.

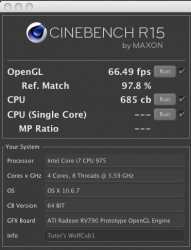

I have no doubt that higher CPU clock speeds affect the OpenGL scores a little, and that cannot be avoided completely because of the CPU's role in what Cinebench's OpenGL test has to do. However, one should not forget that overclocking a CPU usually also overclocks (a) the memory speed [unless the memory is downclocked in the BIOS] and (b) the QPI, which we used to (and sometimes still) refer to as the "bus speed." Memory speed affects the OpenGL score a little because it plays a role in what the CPU does while that test is being run, so the gain isn't just from the higher CPU clock. Increasing the bus speed also has a slight impact. Also, depending on a Windows system's BIOS, users with Nehalem Xeons, Westmere Xeons and i7 CPUs (Nehalem, Westmere and the K versions since the intro of Sandy Bridge) are usually able to manipulate one or more of these variables independently.

Maxon hasn’t tried to dupe anyone. All one has to do is thoroughly read “CINEBENCH R15 TECHNICAL INFORMATION AND FAQ” [http://www.maxon.net/pt/products/cinebench/technical-information.html], where it states, in relevant part:

1) “To prevent the scene being displayed much too slowly on old graphics cards or much too fast on the latest hardware, CINEBENCH estimates the graphics card performance so the scene will maintain a consistent duration (approximately 30 seconds). Faster graphics cards will display the scene much smoother than slower ones. If a graphics card can display a higher frame count than the original scene speed, subframes will be displayed and properly measured.”

2) “Graphics card performance as measured by CINEBENCH reflects the power of the graphics card in combination with the system as a whole. Unfortunately the system contribution cannot be specifically measured. The same graphics card in a faster computer will typically give better results than in a slower system. The overall performance depends on various factors including processor, memory bus and chipset.

The graphics benchmark in CINEBENCH is designed to minimize the influence of other system components. All geometry, shaders and textures are stored on the graphics card prior to measurement, and no code is loaded during the measurement process. This minimizes the system influence, but unfortunately cannot eliminate it entirely.”

3) “Graphics card drivers can greatly affect the benchmarking performance.”
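
To put rough numbers on point 2, here is a toy model of my own (not Maxon's code, and not how Cinebench actually measures anything). It assumes each frame costs a fixed amount of GPU time plus a fixed amount of "system" time (CPU, memory, bus), added serially. The function names and the millisecond figures are made up purely for illustration.

```python
def estimated_fps(gpu_ms_per_frame: float, system_ms_per_frame: float) -> float:
    """Frames per second when every frame costs GPU time plus system overhead.

    Assumption: the two costs simply add up (no overlap between CPU and GPU work).
    """
    return 1000.0 / (gpu_ms_per_frame + system_ms_per_frame)


def frames_in_run(gpu_ms_per_frame: float, system_ms_per_frame: float,
                  duration_s: float = 30.0) -> int:
    """Whole frames completed in a fixed-length run (about 30 s, per the FAQ quote)."""
    frame_ms = gpu_ms_per_frame + system_ms_per_frame
    return int((duration_s * 1000.0) // frame_ms)


# Same hypothetical graphics card (8 ms of GPU work per frame) in two systems.
fast_system = estimated_fps(gpu_ms_per_frame=8.0, system_ms_per_frame=2.0)
slow_system = estimated_fps(gpu_ms_per_frame=8.0, system_ms_per_frame=12.0)

print(f"fast system: {fast_system:.1f} fps, {frames_in_run(8.0, 2.0)} frames in 30 s")
print(f"slow system: {slow_system:.1f} fps, {frames_in_run(8.0, 12.0)} frames in 30 s")
```

With these made-up numbers the identical card scores twice as high in the faster system, which is the kind of system influence the FAQ says can be minimized but not eliminated.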