Got a tip for us?

Let us know

Become a MacRumors Supporter for $50/year with no ads, ability to filter front page stories, and private forums.

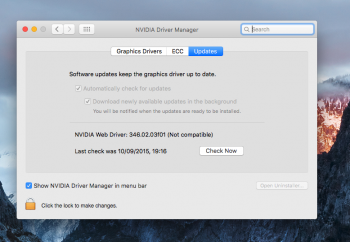

nvidia web drivers for 10.10.5

- Thread starter netkas


Downloading El Cap GM1 to test web drivers now.

The latest I could get a hold of for 10.10.5 don't work.

If someone can point me to any 10.11 beta drivers I'd really appreciate it; I can't find any anywhere.


^^^^This Web Driver works fine with the El Cap GM Candidate (Build No. 15A282a)

http://us.download.nvidia.com/tYGrxreBnE8b89jZ0VysebeSwZJehZTa/WebDriver-346.03.01b07.pkg

Lou


Thanks, I'll give it a go. How do you find these things, I searched everywhere on Nvidia's site and couldn't find anything.

The links get posted here and on the Netkas forum.

It was just running on UDA it seems unless there is some evidence to the contrary. I'm not a guy who just believes in things on 'faith' alone.

But you're the guy who's willing to post this stuff as fact when there's absolutely no way for you to really know what's going on. Do you know how much code is shared between the OS X and Windows/Linux driver for NVIDIA? Do you know what "UDA" really means in terms of driver implementation for modern NVIDIA GPUs? Do you really know that the Kepler driver code is enough to make Maxwell GPUs work in some kind of compatibility mode? Do you really know that this is what NVIDIA was using to enable Maxwell GPUs up until the most recent releases?

My take on the new web driver performance improvements is pretty simple: it's clear that NVIDIA has been working on improving the CPU overhead in their driver. Nearly all the gains come in CPU-limited cases, while GPU-limited cases didn't seem to improve all that much. High-end GPUs, including the Maxwell ones like GTX 980 and GTX 980 Ti, saw benefits because they would tend to be more CPU limited when paired with a slow CPU like the one in a 2010-era Mac Pro. I don't think this has anything to do with "forwards compatible UDA driver code" for Maxwell, or whatever you're suggesting was used before.

Feel free to continue posting on this subject like you really know what's going on. I will continue to share my opinion as simply that: my opinion, based on my understanding and experience with the NVIDIA drivers for OS X. If you have hard evidence that I'm wrong, please feel free to share it; otherwise, you can't simply state your opinion as fact followed by "unless there is some evidence to the contrary". I can't prove my opinion is correct, and neither can you.


The Maxwell cards show almost no gains over Kepler clock for clock with the web drivers. In some cases the 680 is winning. The benchmarks are nowhere near the performance you would expect if you have seen the gains a Windows user gets from Maxwell over Kepler.

Nvidia said in their recent blog post that Maxwell beta support has been added for the first time.

End of the story unless you want to call Nvidia liars. You do so much for this community by helping people install their drivers. There's no point ruining that reputation with useless arguments. Life is so ****ing short and you really want to debate this ****ing crap?


Imagine 3 levels of GPU enablement:

1) A GPU just happens to be enabled by the NVIDIA web driver.

2) A GPU has beta support, as in, the company acknowledges that the GPU works with their drivers.

3) A GPU has official/full support as a Mac Edition product.

There are no official Mac Edition versions of any Maxwell card. NVIDIA's blog post suggests that the Maxwell GPUs have gone from (1) to (2). That is, for the first time ever, NVIDIA has publicly acknowledged that their web drivers enable the Maxwell GPUs, despite the fact there are no official Mac Edition products based on that GPU architecture. That is, they are (for the first time ever) officially acknowledging that they are enabling non-EFI cards to work with their web driver. "Support" has a lot of weight behind it -- does this mean they're going to start taking customer phone calls when people are having difficulties with their Maxwell cards under OS X? Back when these cards just silently worked, they sure weren't doing that.

The new drivers give significant performance improvements in very specific areas. The point I'm trying to communicate to you and everyone else is that, based on my experience, these specific areas are CPU-limited cases. There's a reason why Unigine Heaven on a GT 650M didn't get twice as fast, while other cases did get a massive improvement. There's also a reason why higher-end GPUs got a larger speed boost than their lower-end cousins: as I mentioned earlier, those GPUs are usually completely CPU limited, even at high resolution, ultra settings and max FSAA. This can easily be seen by running OpenGL Driver Monitor and enabling "GPU Core Utilization" on any NVIDIA GPU; if it reads less than 100%, the GPU is not being fully utilized.

My GTX 980 got a large improvement in World of Warcraft, but even at 2560x1600 with Ultra settings and FXAA, it's still barely pushing 75% utilization with the new drivers (up from 50% or lower with the older drivers). I see similar improvements with a GTX 680, i.e. both the overall framerate and the GPU utilization have gone up, but it's still not able to reach 100% utilization. This is a classic case of the game being CPU limited, and the large reduction in driver overhead in the new drivers lets any CPU-limited case perform much better.

I believe that because the Maxwell GPUs aren't found in an official Apple or NVIDIA product, there is still plenty of GPU performance left on the table, even with the latest drivers. NVIDIA has obviously done work to make these GPUs function in OS X, but probably didn't spend as much time tuning the performance of the GPU under OS X as they did with something like GK107 or GK104, both of which are featured in official products. Personally, I hope that there will be more improvements coming, especially now that the driver overhead is so much lower than it used to be. My guess is that given the large amount of CPU overhead in the older drivers, there was less emphasis placed on the GPU performance of a high-end GPU like GM204 because it was CPU limited in the vast majority of cases. Or, in other words, why spend a huge amount of time making the GPU run fast if the CPU can't keep up with it? You could make the GPU infinitely fast and it wouldn't affect the benchmark scores in those cases, and this explains your point about no gains over older Kepler GPUs (i.e. they're both CPU limited, so the CPU is the determining factor in the overall performance, and if you use the same CPU in both cases you'll get the same score).
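To make the CPU-limited argument above concrete, here is a toy bottleneck model in Python. It is only a sketch under a simplifying assumption, not anything taken from NVIDIA's driver: CPU work per frame (game logic plus driver overhead) and GPU work per frame overlap perfectly, so whichever side is slower sets the frame time. The function name `frame_stats` and all the millisecond figures are hypothetical, chosen for illustration rather than measured in this thread.

```python
def frame_stats(cpu_ms: float, gpu_ms: float) -> tuple[float, float]:
    """Return (fps, gpu_utilization) for one frame in a toy bottleneck model.

    Assumption (hypothetical): CPU work (game logic plus driver overhead) and
    GPU work fully overlap, so the slower of the two sets the frame time.
    """
    frame_ms = max(cpu_ms, gpu_ms)
    fps = 1000.0 / frame_ms
    # Fraction of each frame the GPU spends busy; below 1.0 means it waits on the CPU.
    gpu_utilization = gpu_ms / frame_ms
    return fps, gpu_utilization


# Hypothetical per-frame timings (cpu_ms, gpu_ms), for illustration only.
scenarios = {
    "old driver": (20.0, 10.0),                 # heavy driver overhead: CPU-limited
    "old driver, 2x faster GPU": (20.0, 5.0),   # GPU speed-up alone changes nothing
    "new driver": (12.5, 10.0),                 # lower overhead: fps and utilization rise
}

if __name__ == "__main__":
    for label, (cpu_ms, gpu_ms) in scenarios.items():
        fps, util = frame_stats(cpu_ms, gpu_ms)
        print(f"{label}: {fps:.0f} fps, GPU busy {util:.0%} of the frame")
```

In this toy model the "old driver" and "2x faster GPU" cases both land at 50 fps, with GPU utilization dropping from 50% to 25%, while cutting the CPU side to 12.5 ms raises both the framerate (80 fps) and utilization (80%). That mirrors the pattern described above: lower driver overhead shows up as higher framerate and higher GPU Core Utilization, whereas a faster GPU behind the same slow CPU does not. Real games don't overlap CPU and GPU work this cleanly, so treat it as intuition only.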

NVIDIA appears to have solved that problem. Now that their driver overhead is much lower, I'd guess/hope their focus will shift to the GPU-limited cases and we'll see continued improvements to their performance, particularly on the Maxwell GPUs. Fingers crossed, at least.

As I said before, this is just my opinion, based on years of experience with the NVIDIA drivers under OS X. Feel free to take it or leave it. I'd just prefer to avoid stating things as hard-and-fast facts when nobody on these forums can really know for sure, because aside from anything else, it creates confusion for newer folks.

I'm not going to read all that, Agorath. I'm just happy you post download links and try to be helpful to everyone.

Just installed a clean El Cap GM1 with the web driver. Everything is fine, but until Metal apps arrive it feels like I'm just using a snappier Yosemite.


Please stop posting like you know what you're talking about with respect to NVIDIA's Maxwell driver implementation for OS X, then. You're just spreading misinformation that isn't helping anyone. The OS X driver has very little to do with D3D performance under Windows.


I read it all. Really didn't take that long. Made sense to me!

Lou

Would be possible, but that would also mean the Nvidia Web Driver wasn't UDA compliant before 10.10.

It likely isn't UDA compliant so that Apple could control how their OS was used by Hackintoshers and control Mac Pro upgrades.

The reason I'm thinking about this stuff: yesterday I did some benchmarks on my 3,1 in both OS X and Windows. Long story short: the GTX 570 driver refused to drive my GTX 760, although it was a recent build. Could also be a typical Windows issue, but maybe the generality of the Nvidia drivers isn't exposed to the end user?

Btw, even the latest OS X Web Drivers have an enormous CPU overhead compared to AMD cards. In CPU-bound games like CS:GO even an HD 5770 is faster than my GTX 760, while the 760 will obviously destroy that card in more GPU-bound stuff like the Heaven benchmark.


UDA isn't fully forward compatible if too much time has elapsed between GeForce generations; it does get updated. If you install a very old Nvidia driver on Windows, for example, it won't allow a new Maxwell card to run. This is the issue we see when people try to use a GT 120 together with a 700- or 900-series card in Windows 10.

But I'll take Nvidia's word and verifiable, repeatable benchmarks over somebody's hypothesis any day. There are a number of hardware myths that became popular in this forum that would be laughed out of a PC forum if those Windows geeks saw what was being said around here about boot times, disk speeds, driver compatibility, USB 3.1 and so on. I guess it's Apple's fault for instilling a kind of faith-based, cult-like mentality in the Mac community.

SoyCapitan, you also take word from Nvidia about "new" memory architecture in GTX 970 (3.5 GB + 0.5).

Believing Nvidia is the stupidest thing you can do.

Haha, but they aren't being dishonest about the total memory.

Netkas,

I have a stupid question. I have a late 2012 iMac running OS X 10.10.5. Can I double-click install the latest compatible Nvidia web drivers on my iMac?

From the Nvidia driver highlights (Release Notes Archive):

This driver update is for Mac Pro 5,1 (2010), Mac Pro 4,1 (2009) and Mac Pro 3,1 (2008) users.

BETA support is for iMac 14,2 / 14,3 (2013), iMac 13,1 / 13,2 (2012) and MacBook Pro 11,3 (2013), MacBook Pro 10,1 (2012), and MacBook Pro 9,1 (2012) users.


Yeah! I still can't believe that their CEO got up on stage, played a bunch of slides with completely phony GPU test results then claimed they had the "world's fastest GPU" and that it was an "overclocker's dream" when very shortly thereafter actual tests revealed both to be complete and utter lies. Oh wait, that wasn't Nvidia...

Soy, I have enjoyed your opinion on many things, but here you are 100% wrongo bongo.

I've done quite a bit of digging in Nvidia drivers, found some great things I can't publicize yet, but I can state with 100% certainty that you are in error and not completely aware of the situation. Perhaps read your sig and think about it.


It was just partitioned memory, and it didn't stop the 970 from being one of the best-selling cards for many months after. Nvidia provided a technical description of why they had to partition the memory like that. It's not exactly unusual for the full memory to not be available. We don't have access to every gig of our hard drives and solid-state drives (especially under Windows), yet Samsung still labels the full amount. There was a time when computers were sold with 4GB of memory, yet the 32-bit OS could only access 3.2GB. Nobody cried like a baby once they learned the technical reasons why.
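The 32-bit point above is just address-space arithmetic. Here is a minimal Python sketch, assuming a 32-bit OS that can address at most 2**32 bytes of physical memory, part of which is reserved for device mappings (such as the graphics card's memory window). The 768 MiB reserved size and the helper name `usable_ram_bytes` are hypothetical; the real reserved amount varied from machine to machine, which is why the usable figure varied too.

```python
MIB = 2**20
GIB = 2**30


def usable_ram_bytes(installed_bytes: int, reserved_bytes: int, address_bits: int = 32) -> int:
    """RAM an OS can actually use when it can only address 2**address_bits bytes.

    Assumption (hypothetical): `reserved_bytes` of the address space are mapped
    to devices, so any RAM that would sit behind that region is unreachable.
    """
    address_space = 2**address_bits
    return min(installed_bytes, address_space - reserved_bytes)


if __name__ == "__main__":
    installed = 4 * GIB
    reserved = 768 * MIB  # hypothetical device-mapping reservation
    usable = usable_ram_bytes(installed, reserved)
    print(f"{installed / GIB:.2f} GiB installed, {usable / GIB:.2f} GiB usable")
```

With 4 GiB installed and a 768 MiB reservation this gives 3.25 GiB usable, while with a 64-bit address space (`address_bits=64`) the same 4 GiB is fully reachable.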

And the reason for the signature is that too often on Internet forums and comment sections, people deviate from objectivity toward swipes and personal attacks with little regard for how it might hurt that person or stress the moderators. If you have an issue with what someone posts on the Internet, debate the material, the evidence and the citations only, with a truly independent and honest stance. If someone can never provide evidence, that's usually the person to avoid debating with, if such debates are worth any time at all in the first place.


Just out of curiosity, why can't you publicize something you found in nVidia's publicly released driver?


It would give away something rather humorous that I prefer to keep to myself for now. But I will include the word xylophone here so that it will be easy to locate when the time comes.

People frequently fail to notice important little clues hidden in the OS. As an example that I have pointed out before: Apple keeps updating the Expansion Slot Utility even though Lion was the last supported OS that needed it. Not everyone at Apple wants to bury old tech.

Latest version: 346.03.03f02

http://www.nvidia.com/download/driverResults.aspx/93555/en-us

(Only for El Capitan)


^^^^Actually it's right here:

https://forums.macrumors.com/thread...out-nvidia-pc-non-efi-graphics-cards.1440150/

In a thread created just for the purpose of listing the latest Nvidia Web Drivers.

And the link for the driver you listed is for El Cap 10.11.1; this thread is about Web Drivers for Yosemite 10.10.5. The Web Driver you posted won't work with 10.10.5.

Lou

