Not trolling. Chip design = ordering pizza. They’re actually very similar in terms of job description.

As I said. Easily the best trolling effort in a while. Congratulations. 🥳

Qualcomm revealed X Elite's benchmark scores

- Thread starter sunny5

What you’re talking about is child’s play and easy to do. That’s not the hard part about chip development.

Like ordering pizza, it’s not hard to tell the pizza shop to add 7 toppings for $20 if their fabrication method allows them to do it. The issue is the fab, not the customer telling the pizza shop to add 7 toppings. Anyone can do what the customer did.

Those gains are due almost entirely to the foundry, not the design. Remember when Nvidia was stuck on 8nm with the RTX 30 series while AMD was using 7nm? AMD GPUs suddenly started outperforming Nvidia’s. When Nvidia jumped back to using the leading process, they regained their lead. You’re giving way too much credit to fabless companies when what they’re doing is intellectually child’s play and insignificant. Fabrication is everything.

Chips on the same exact process have the same performance profile. When you see a performance delta between 2 chips on the same nm, it’s because one chip is using an updated process with higher PPA. Apple never uses the same process again. They use the first-gen then the second-gen and possibly the third-gen of a node. They never use the same exact gen of a node for multiple years. If they did, they would report no performance gain regardless of any design changes.

AMD, Nvidia, Apple, Qualcomm and other chip designers don’t do any hard engineering. They’re more like salesmen for the fab than engineers. Fabs do the real scientific and engineering work while designers just find customers to give the fab volume.

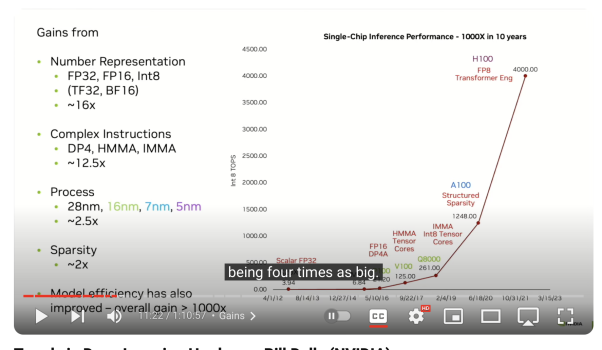

So Bill Dally says the effects of Process are 2.5x and the effects of "other", ie design, both HW and SW, are 400x. And some internet rando says "no, that 400x doesn't exist".

Well, who should I possibly believe???
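A quick way to see what the 2.5x-versus-400x split implies: a minimal sketch, assuming the two factors compose multiplicatively (so the total is 1000x) and measuring each factor's share of the total on a log scale. The constant names are illustrative, not taken from the slide.

```python
import math

# Factors as quoted in the post. ASSUMPTION: they multiply to give the total gain.
PROCESS_GAIN = 2.5    # attributed to process
DESIGN_GAIN = 400.0   # attributed to "other" (design, HW and SW)

total_gain = PROCESS_GAIN * DESIGN_GAIN  # 1000x overall

# Share of the total on a log scale, i.e. what fraction of the "doublings" each factor supplies.
process_share = math.log(PROCESS_GAIN) / math.log(total_gain)
design_share = math.log(DESIGN_GAIN) / math.log(total_gain)

print(f"total gain: {total_gain:.0f}x")
print(f"process share of log-gain: {process_share:.1%}")
print(f"design share of log-gain: {design_share:.1%}")
```

On those assumptions, process accounts for roughly an eighth of the improvement and design for the rest.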

If they bin them, the end user and marketing see different "chips".

I think you have the causality substantially backwards there. "Sales Marketing" is spinning these as two chips. It is extremely likely they know there is only one chip here. The 'spin' is to create the illusion that they have 'two' when they only have one. That end users 'fall for it' is something the sales team set out to do, not something they 'saw' that way. They are producing the 'show', not watching it.

What they have here is really a chip that lands between the plain Mn and the Mn Pro. They 'project' the 'Plus' onto the Mn and the 'Elite' somewhere in close proximity to the Mn Pro or Mn (depending upon how heavily the benchmarks lean on graphics; in benchmarks that primarily measure graphics, they don't want to be anywhere near the Mn Pro).

For version 2 (or at the latest v3) they probably should have more than one die. Right now, though, this "Elite"/"Plus" branding is largely a misdirection to cover up that Apple, AMD, and Intel all have a broader range of dies and offerings, while Qualcomm has just one die.

The point is, when they say 'Elite', we can calibrate our conversation to whichever bin/offering/whatever they benchmarked.

What calibration? Apple doesn't slap multiple names on a die to create products. This whole binning-with-multiple-labels thing is an Intel/AMD game (e.g., Ryzen 7400 and the new EPYC 4004, see "AMD unveils EPYC 4004 CPUs: AM5 gets server-grade processors"; or similarly Intel's 10th/11th gen desktop CPUs and the Xeon E-2400 series).

Even in the Intel lineup, something like a lowest-end Celeron/Pentium isn't the same die as a Core i9.

This "Plus"/"Elite" stuff was created far, far, far more for comparisons against the AMD/Intel offerings that the Snapdragon X Elite/Plus actually primarily competes against than for comparisons with Apple's naming strategy. That's the motivation... not Apple's.

Apple's M3 and M3 Pro have about the same single-threaded performance score. Either one of them beats whatever version 1 Elite/Plus you want to throw in there. For GPUs, it is about equally immaterial which one you pick. Sprinkling M-series over these discussions is about as 'hype'-driven as sprinkling AI (& Copilot+) all over these launch discussions. It isn't a good 'calibration' point. It is a 'catch the hype train' point.

The guy can say whatever he wants, but it's not supported by reality and semiconductor historical facts. When Nvidia played on an inferior node, they underperformed the competition. The same happened with AMD and Intel back when Intel had the foundry lead, and then vice versa once AMD was on the leading TSMC node. History has shown that whoever has access to the best foundry wins.

Design only matters in that you don't mess it up, but that's not a hard thing to do. It is incredibly hard to mess up a chip design because it's so easy to do. It's taken for granted that the chip designer isn't going to mess up the design because it's trivial. That doesn't mean they should be given any kudos when their chip performs well. It's 99.9% the fab.

Disappointing RAM/SSD capacity limits by the notebook vendors, though Qualcomm claims 64 GB RAM support. Why are these limited to 1 TB of storage, except for the very few with more capacity?

Most of the systems' tech specs I've seen so far have M.2 drives. (**) Limiting to 1TB is very likely meant to keep inventory overhead costs down (if there are only two M.2 SSD types, they only have to stock two). Pretty good chance these systems from all of the vendors are a bit more margin-sensitive here at the start than the x86-64 systems that have a long buyer history coupled to them (and so have more precise predictive analytics to drive better inventory overhead cost controls).

SSDs have also just gone through a boom-bust cycle, so that probably isn't helping if costs are in the upswing phase.

Once the major vendors have a better feel for how many of each type they are going to sell, we will probably see some later updates to BTO options. (There are substantively more competitive AMD and Intel Windows systems coming later in 2024.)

(**) And most Windows PCs have a reasonable set of screws, so you can just take the bottom off and get to the removable M.2 drive if you really want to.

Interesting. I was hoping for a 12” or 11” ultralight, but I don’t see that (at least not yet).

The SoC is probably too hot and needs an extensive heat-dissipation mechanism. Once Nuvia/Qualcomm can design some good efficiency cores, they will likely have smaller and lighter machines.

They don't necessarily need E-cores for the next iteration. Go to N3E and chop the number of P-cores. You don't need a die with 12 P-cores to drive a smaller, lighter machine. The Windows scheduler hasn't been spectacularly great with big.LITTLE setups so far.

Qualcomm probably needs an E-core for a phone SoC, but skipping that for version 2 would probably be a good idea. For this initial version they 'made do' with a CPU core targeting server loads. It lags in single-threaded performance versus the major competition. Fix that before trying to weave in multiple core types. They could possibly do something like AMD with a 'compressed area' core with a cap on clocks, but not a different architecture design (change the floorplan more than what is inside). That would line up with the limited core-overclocking setup they have now on a few Elite versions.

Rapidly zig-zagging between core designs is not likely to track well with Windows. Give Windows a target and then stick with it for a while.

P.S. A Qualcomm phone SoC with big.LITTLE will be dealing with Android (layered over Linux)... not Windows. So try the mixed-core experiment there first. Then, if it works, maybe bring it to Windows. Trying to do both at the same time is a bit goofy. Apple did not. (It was years and years after the phone SoC that they did another for the larger personal computer space.)

P.P.S. It really isn't Intel that is 'winning' in the x86-64 space right now. AMD is the player on a growth path. No "E-core" in sight in their lineup. What they have is a core that eschews "Turbo" mode and hyper single-threaded drag racing. That is as much about not 'wasting' space/area as it is about saving energy.

(The problem the current higher-end Elite has is more that the design is clocked past where the 'normal' was set, in order to squeeze out as much performance as possible.)

Some users are envious because Qualcomm cannot keep up and come up with some meaningless info.

Thinking of pre-ordering the ASUS Vivobook S15 just to see how all of this works.

Yup, same here; that or the Lenovo Slim 7x.

I know the Lenovo SSD is user-replaceable; not sure about the Asus.

Design only matters in that you don't mess it up, but that's not a hard thing to do. It is incredibly hard to mess up a chip design because it's so easy to do. It's taken for granted that the chip designer isn't going to mess up the design because it's trivial. That doesn't mean they should be given any kudos when their chip performs well. It's 99.9% the fab.

That is patently absurd on the face of it. If you modify the structure of your branch predictor in a way that improves its accuracy, your instruction flow becomes tighter, making execution faster and more efficient. How do you put that on the fab?

The structure of the branch predictor isn't a mystery or a bottleneck. It's already a solved problem and everyone knows how to design it.

Stop interacting with that account, or look at its history. It exists only to make this “chip design is like making a pizza” claim every few months. You’ll be better off ignoring it entirely.

Regarding the Qualcomm chips, I’m still laughing to myself that they’re comparing to a fanless design and that people are saying Windows (which isn’t a chip maker…or a company) has “caught up” with this release. That’s a real head scratcher.

It's funny that they mention a couple of benchmarks and forget about the rest. The previously posted review by Ryan Shrout shows that the MBA has better thermal performance and is faster in many tests despite having only 4 P-cores and 4 E-cores, compared with the X Elite's 12 P-cores.

The M3 MBA is faster in the GB 6 single-thread test (+15%), the Cinebench single-thread test (+17%), the Chrome browser tests Speedometer (+40%) and JetStream (+27%), Excel and PowerPoint (+6%, +16%), 3DMark Steel Nomad (+35%), 3DMark Wild Life Extreme Unlimited (+31%), 3DMark Solar Bay Unlimited (+29%) and Blender (+17%).
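To boil those deltas down to one number, a geometric mean of the speedup ratios is the usual way benchmark suites are aggregated. A minimal sketch using only the percentages listed above; note these are just the tests where the M3 came out ahead, so the result summarizes this selection, not an overall ranking.

```python
import math

# M3 MacBook Air advantage over the X Elite per test, as listed in the post (percent).
m3_advantage_pct = {
    "Geekbench 6 single-thread": 15,
    "Cinebench single-thread": 17,
    "Speedometer": 40,
    "JetStream": 27,
    "Excel": 6,
    "PowerPoint": 16,
    "3DMark Steel Nomad": 35,
    "3DMark Wild Life Extreme Unlimited": 31,
    "3DMark Solar Bay Unlimited": 29,
    "Blender": 17,
}

def geomean_speedup(pcts):
    """Geometric mean of speedup ratios, where +15% becomes a ratio of 1.15."""
    ratios = [1 + p / 100 for p in pcts]
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))

overall = geomean_speedup(m3_advantage_pct.values())
print(f"geometric-mean advantage across {len(m3_advantage_pct)} tests: +{overall - 1:.1%}")
```

The geometric mean is used instead of the arithmetic mean so that one large win (Speedometer) doesn't dominate the summary.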

Ignore the guy. He's clearly just trying to get a rise. We've been polite, and he just comes back trying to double down to get lolz.

Let him just go waste other people's time.

I searched GB for Snapdragon X Elite scores and collected what they had into a chart. There were a lot of CRD entries, which, IIUC, are prototype/test platforms. There were also scores from Dell, Acer, Asus, Samsung, HP and Lenovo, which I would guess are production devices. Averaging the production-model scores, I came up with a single-core score of 2289 and a multi-core score of 12337.

Running GB on an M1 MBA gives me a score of 2385 / 8409. Thus, the SC score of the Elite X is, on average, a little lower than an original M1's, and the MC about 47% higher.

Granted, there was a lot of variation in the Elite X scores. The best performers were the Samsung devices (averaging a little better than the reference platforms), while the worst, by a significant margin, were the Dells.
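The comparison above is plain ratio arithmetic. A small sketch that reproduces it from the quoted numbers (the helper name is illustrative):

```python
# Geekbench 6 averages quoted in the post.
elite_sc, elite_mc = 2289, 12337   # production-device average, Snapdragon X Elite
m1_sc, m1_mc = 2385, 8409          # the poster's own M1 MacBook Air run

def pct_diff(a, b):
    """Percent by which a differs from b."""
    return (a / b - 1) * 100

sc_delta = pct_diff(elite_sc, m1_sc)
mc_delta = pct_diff(elite_mc, m1_mc)
print(f"single-core: {sc_delta:+.1f}%")
print(f"multi-core:  {mc_delta:+.1f}%")
```

That works out to roughly 4% behind the M1 in single-core and roughly 47% ahead in multi-core.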

The M.2 SSD looks like it is going to be a really tight fit against the inner ("battery side") edge of the heat spreader. (The spreader needs to be rotated 180 degrees from its placement in the second picture for mounting. The left edge of the motherboard would face the rear vents. The SSD connector is in the lower right of the picture.) An M.2 SSD with a fancy heat sink of its own that was slightly over spec on width might have issues.

Two reasonably sized fans should not lack cooling capacity. Pretty good chance this same baseline design can be used for different vendors' SoCs.

Doesn't look like there is room for a CAMM module with the two major 'cut-outs' for the fans.

[The USB-A sockets aren't here, so there is a pretty good chance they are on a daughterboard on the outer side of the fan on that side.]

I think people touting the M.2 interfaces are going to end up disappointed. I don't think these are open to high performance without cutting into the overall bandwidth (not sure if I'm using the right term here) of the PCIe implementation. I don't think they're there for expansion at all, to be honest.

... There were also scores from Dell, Acer, Asus, Samsung, HP and Lenovo, which I would guess are production devices. ... Averaging the production-model scores, I came up with a single-core score of 2289 and a multi-core score of 12337.

Running GB on an M1 MBA gives me a score of 2385 / 8409. Thus, the SC score of the Elite X is, on average, a little lower than an original M1's, and the MC about 47% higher. Granted, there was a lot of variation in the Elite X scores.

How are they production models if they aren't running a production operating system? When more production systems are being measured, there should be less noise. A post-Windows 11 freeze date would be a better filter for 'production' than some non-Qualcomm or prototype name.

One of the primary reasons these systems are releasing now instead of last Fall or Q1 was to wait for Windows. Windows is an aspect of 'production'.

There is going to be more variation, though, because there are going to be more vendors chasing different use cases. If everyone did exactly what the reference model does, why would there be a need for 6-8 vendors and/or 20+ models? Single vs. dual coolers, variations in thickness, etc.
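A filter along those lines might look like the sketch below. It is only a sketch under stated assumptions: the `GbResult` record and its field names, treating "Qualcomm" as the vendor label for reference (CRD) units, and the freeze-date parameter are all hypothetical, and Geekbench's browser does not necessarily expose results in this shape.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean

@dataclass
class GbResult:
    """Hypothetical record for one Geekbench upload (field names are illustrative)."""
    vendor: str
    single_core: int
    multi_core: int
    uploaded: date

def production_only(results, freeze_date, reference_vendors=("Qualcomm",)):
    """Keep uploads made on or after the OS freeze date, excluding reference (CRD) units.

    Both the freeze date and the reference-vendor labels are assumptions supplied by the caller.
    """
    return [r for r in results
            if r.uploaded >= freeze_date and r.vendor not in reference_vendors]

def average_scores(results):
    """Mean single-core and multi-core scores of the given results."""
    return (mean(r.single_core for r in results),
            mean(r.multi_core for r in results))
```

Filtering on upload date rather than device name keys "production" to the software state, which is the distinction the post is drawing.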

I think people touting the M.2 interfaces are going to end up disappointed. I don't think these are open to high performance without cutting into the overall bandwidth (not sure if I'm using the right term here) of the PCIe implementation. I don't think they're there for expansion at all to be honest.

It isn't 'expansion' as much as 'replacement'.

Pretty good chance that they do. If the PCIe was there because they were playing with the notion of x4 (or possibly x8) PCIe v4 GPU provisioning, then doing an x4 PCIe v4 SSD isn't going to be that much of a stretch. Some reports have stated that there are x12 PCIe v4 lanes and x4 v3 lanes (for M.2 Wi-Fi cards, which are shorter and a different size **). If there really is an x8 there for contingent GPU usage and they have pragmatically abandoned it, then the notion that there isn't enough "backhaul" for an x4 link is not very likely.

Perhaps it is an 8+4 PCIe controller cluster with only an x8-like bandwidth limit to the internal inter-cluster network, but that will do just fine for a sole x4 connection. It is even less likely to be a problem if the PCIe root controllers were split. It is not an issue with an 8+4+4 PCIe root controller arrangement where the second '4' is that 'downclocked' v3, as long as there is x8 worth of backhaul to the internal network (x8 backhaul and effectively x6 usage).
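The "effectively x6" figure comes from counting a PCIe 3.0 lane as half a PCIe 4.0 lane (8 GT/s vs. 16 GT/s, with the same 128b/130b encoding). A minimal sketch of that bookkeeping; the x8 backhaul is the speculation from the paragraph above, not a published Qualcomm spec, and the second case adds a hypothetical extra x4 v3 device such as a cellular modem.

```python
# Weight of one lane in "PCIe 4.0 lane equivalents": Gen3 runs at 8 GT/s and
# Gen4 at 16 GT/s with the same 128b/130b encoding, so a Gen3 lane counts as 0.5.
LANE_WEIGHT = {"v4": 1.0, "v3": 0.5}

def v4_lane_equivalents(links):
    """links: iterable of (lane_count, generation) tuples."""
    return sum(lanes * LANE_WEIGHT[gen] for lanes, gen in links)

BACKHAUL_X = 8  # hypothetical x8 worth of internal backhaul (speculative)

# x4 v4 SSD plus an x4 v3 Wi-Fi/Bluetooth module
usage = v4_lane_equivalents([(4, "v4"), (4, "v3")])
# same, plus a hypothetical second x4 v3 device (e.g. a cellular modem)
with_modem = v4_lane_equivalents([(4, "v4"), (4, "v3"), (4, "v3")])

for label, used in [("SSD + Wi-Fi", usage), ("SSD + Wi-Fi + modem", with_modem)]:
    print(f"{label}: x{used:g} of x{BACKHAUL_X} -> fits: {used <= BACKHAUL_X}")
```

This only compares peak link rates; as the thread notes, radios rarely saturate their links, so real usage sits below these figures.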

There is no other "single drive" interface for this thing (there is no proprietary storage connector). It has to boot off of something. Response time for wake-from-sleep/hibernation is going to be a factor. A slower SSD isn't going to help with that.

Qualcomm data sheet says

" SSD/NVMe Interface: NVMe SSD over PCIe Gen 4 "

Snapdragon X Elite

"... The Qualcomm SoC is said to have at least 12 PCIe 4 and 4 PCIe 3 lanes for connecting various kinds of devices. NVMe SSDs are supported with a throughput of up to 7.9 GB/s; ..."

x4 PCIe v4 is 64 Gbit/s raw ==> 8 GB/s (about 7.9 GB/s after 128b/130b line encoding). Running the NVMe protocol over that costs a small further drop.
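Where the ~7.9 GB/s figure comes from: PCIe 4.0 signals at 16 GT/s per lane and, like 3.0 and 5.0, uses 128b/130b encoding. A minimal sketch of the arithmetic; it ignores packet and NVMe protocol overhead, which shaves off a bit more in practice.

```python
# Raw transfer rate per lane (GT/s) by PCIe generation.
# Gen 3, 4 and 5 all use 128b/130b line encoding.
GT_PER_LANE = {3: 8.0, 4: 16.0, 5: 32.0}
ENCODING = 128 / 130

def pcie_gbytes_per_s(gen, lanes):
    """Usable one-direction bandwidth in GB/s, before protocol (TLP/NVMe) overhead."""
    gbit = GT_PER_LANE[gen] * ENCODING * lanes
    return gbit / 8

print(f"x4 Gen4: {pcie_gbytes_per_s(4, 4):.2f} GB/s")
print(f"x4 Gen3: {pcie_gbytes_per_s(3, 4):.2f} GB/s")
```

An x4 Gen4 link comes out at about 7.88 GB/s, matching the quoted "up to 7.9 GB/s", and an x4 Gen3 link at about half that.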

If you try to drive a GPU and a top-end x4 SSD both at full bandwidth, yeah, I wouldn't be surprised if you ran into problems. And if someone tried to build a dual internal x4 M.2 SSD system, that might get bottlenecked. But I doubt you are going to see such systems. I have no doubt some Windows vendors asked for it, but I doubt Qualcomm really wanted to do that. It is theoretically there on 'paper' for some checklist (> 4 lanes for more configuration options), but it's really not part of the target market for v1.

If you put in a future v5 SSD, the link is only going to run at v4. (AMD/Intel systems that have an x8 PCIe v5 provision can just re-task that to an x4 v5 M.2 SSD if they skip a dGPU. In previous systems, Apple would re-task a discrete Thunderbolt controller onto the 'dGPU' links of an Intel processor on 13" models that skipped a dGPU.)

The more likely second x4 PCIe device pushed into these Qualcomm-powered laptops would be the cellular modem, which, like the Wi-Fi/Bluetooth module, runs at x4 PCIe v3. [x8 backhaul and effectively x8 usage wouldn't be a major problem. The radios wouldn't be hyper-stressing their v3 connections, so there is some buffer on the usage side.]

** Qualcomm's FastConnect 7800 Wi-Fi 7 tops out at 5.8 Gbps, which x4 v3 can cover. (x1 v4 would be more energy efficient but not as widely compatible in the generic Windows Wi-Fi M.2 card market.)

P.S. If you are picking a 'high performance' v4 M.2 SSD that runs hotter, then I suspect other chassis besides this ASUS one will be better choices (e.g., ones with more space around the M.2 slot and perhaps better airflow). The dual fan cut-outs are not 'free' and compact the motherboard spacing. Not every implementer 'has to' do that (and other systems, at 16-17", will have more room).

P.P.S. For long-term prospects (Oryon v3?) it is useful to have x8 PCIe provisioning so they can build developer systems where folks can experiment with dGPU cards. But in the short-to-intermediate term, the 'pain' of getting pragmatically divergent GPU drivers ready is probably not worth the 'drama'. Focusing most Windows developers on the iGPU that is always there is far more critical. That x8 GPU thing is only for a fringe camp, not a target market.

Once you open up to laptops with the thermals for a hefty dGPU, there is likely also room for a bigger CPU footprint. Therefore, they lose some of the leverage they have against the inertia of x86-64 options. It is not where the bulk of the laptop market is going anyway (dGPUs are on a downward trend line in the 2024-25 aggregate laptop market).

...the worst, by a significant margin, were the Dells.

Not surprised. Dell somehow makes fantastic monitors, and everything else is junk.

"Makes" is a stretch in some of these cases.

" ...

The vast majority of laptops on the market are manufactured by a small handful of Taiwan-based original design manufacturers (ODM), although their production bases are located mostly in mainland China. ... That percentage grew to 32% in 1996, 50% in 2000, 80% in 2007 and 94% in 2011.[16][17] The Taiwanese ODMs have since lost some market share to Chinese ODMs, but still manufactured 82.3% of the world's laptops in Q2 of 2019, according to IDC.[18] ..."

Original design manufacturer - Wikipedia

One way to do a half-dozen laptop designs is to outsource not only the manufacturing but also the design. A subset of the manufactured laptops are something the ODM primarily did themselves (pretty close to 'white box' laptops). Pick some cosmetic choices (company color scheme, grade of plastic, etc.) for the outside and slap a vendor badge on it.

Qualcomm has worked with Wistron, Compal, and Quanta for the X Elite/Plus. (https://forums.macrumors.com/thread...elites-benchmark-scores.2408596/post-32741193)

Interesting to see Honor and Xiaomi have disappeared (perhaps downplayed, trying to avoid an "AI ban").

According to the list below, most of Dell's stuff is also arriving "later (than June/July) 2024".

https://9to5google.com/2024/05/20/s...e-heres-the-full-list-and-where-to-pre-order/

At least one of those systems is likely primarily an "order from the ODM menu" system.

Interesting that there is a lot of variation across the models, and thanks for doing the work. Still, single-core performance in the ballpark of the M1 and significantly higher multi-core is not a bad place to be for their first-generation product; it will make Windows on ARM reasonably competitive with x86 laptops.

You can't really just average all the GB scores, especially when there are pre-production units in play, which don't necessarily perform the same as retail units. There's also zero guarantee, and no way to tell, that a system wasn't multi-tasking or running something in the background at the same time, which would reduce the GB scores.

Like here's an M1 MBA with 1452/4881 scores: https://browser.geekbench.com/v6/cpu/6241784

That's not typical, and it'd drive down the average. Now, there are enough M1s in the wild that outliers like that won't have a huge impact, but on an unreleased product? Not the case.
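One common way to blunt a single bad upload is to report the median instead of the mean. A minimal sketch; the four-run sample is hypothetical, built from the 2385 M1 single-core score quoted earlier in the thread and the 1452 outlier linked above, not collected data.

```python
from statistics import mean, median

def summarize(scores):
    """Return (mean, median) of a list of benchmark scores."""
    return mean(scores), median(scores)

# Hypothetical sample: three typical M1 MBA single-core runs plus one outlier.
runs = [2385, 2385, 2385, 1452]
avg, mid = summarize(runs)
print(f"mean {avg:.0f}, median {mid:.0f}")
```

With only a handful of samples, one outlier pulls the mean down by over 200 points while the median stays put, which is the small-sample problem described above.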

I am not sure how useful it is to average the results of the different binned versions, especially the single-core results. For single core:

- 84100 scores around 2800+ (Qualcomm)

- 80100 scores around 2600+ (Samsung) and 1100 (Dell)

- 78100 scores around 2300+ (Asus, Acer)

It appears that the Oryon core scores around 2300+ at 3.4 GHz, 2600+ at 4 GHz, and 2800+ at 4.2 GHz.
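One way to check whether the bins differ mainly by clock is to divide each score by its frequency: a roughly flat points-per-GHz figure suggests near-linear scaling with clock, which would explain why a cross-bin average blurs the picture. A minimal sketch using the approximate figures above; treating the "+" lower bounds as point values is an assumption.

```python
# Approximate single-core Geekbench 6 scores by peak clock, as read off the post.
SC_BY_CLOCK_GHZ = {3.4: 2300, 4.0: 2600, 4.2: 2800}

def points_per_ghz(table):
    """Score divided by clock for each bin; a flat result means roughly linear scaling."""
    return {ghz: score / ghz for ghz, score in sorted(table.items())}

ratios = points_per_ghz(SC_BY_CLOCK_GHZ)
for ghz, r in ratios.items():
    print(f"{ghz:.1f} GHz: {r:.0f} pts/GHz")

# Relative spread between the best and worst bin on a per-GHz basis.
spread = (max(ratios.values()) - min(ratios.values())) / min(ratios.values())
print(f"spread across bins: {spread:.1%}")
```

All three bins land within about 4% of each other per GHz, so most of the single-core gap between bins is explained by clock speed.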
