A revisualization of Notebookcheck's Cinebench R24 performance and efficiency data.

Details: These are results from only one benchmark, Cinebench, which has gone from being one of Apple's worst-performing benchmarks in R23 to one of its best in R24. Because I am interested in getting as close as possible to the efficiency of the chip itself, the power measurements in the chart subtract idle power, which Notebookcheck does not do when calculating the efficiency of the device. With the release of Lunar Lake, an N3B chip, I've added the M3, Apple's counterpart to Lunar Lake. I've also estimated the M3 Pro's efficiency based on its power usage in R23 and the base M3's CPU power/performance in R23/R24, because Notebookcheck did not have R24 power data for the M3 Pro. I suspect this slightly overestimates the M3 Pro's efficiency, but not by enough to matter given the gulf between it and every other chip. The M3 results come from the MacBook Air; since Cinebench is an endurance benchmark, its score and power usage will both likely be higher in the 14" MacBook Pro. I also had the Snapdragon X1E-84-100 but removed it, since it added clutter without adding much. The Ultra 7 258V is one of the upper-level Lunar Lake chips, but not the top bin: the 288V might improve efficiency/performance somewhat by having better silicon, but the effect will be small relative to the patterns we see.
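To make the two efficiency definitions concrete, here is a minimal Python sketch. It assumes chip efficiency is the R24 score divided by (load power - idle power), versus a device-level figure of score divided by total load power. The function names and all numbers are hypothetical placeholders, not Notebookcheck measurements. The M3 Pro estimate is only one plausible reading of the method described above (scaling the M3 Pro's R23 power by the base M3's R24/R23 power ratio), not necessarily the exact calculation behind the chart.

```python
# Minimal sketch, assuming:
#   chip efficiency   = R24 score / (load power - idle power)
#   device efficiency = R24 score / load power (no idle subtraction)
# Function names and example numbers are hypothetical, not Notebookcheck data.


def device_efficiency(score: float, load_w: float) -> float:
    """Points per watt for the whole device (idle power included)."""
    if load_w <= 0:
        raise ValueError("load power must be positive")
    return score / load_w


def chip_efficiency(score: float, load_w: float, idle_w: float) -> float:
    """Points per watt attributable to the chip (idle power subtracted)."""
    active_w = load_w - idle_w
    if active_w <= 0:
        raise ValueError("load power must exceed idle power")
    return score / active_w


def estimate_r24_power(r23_w_target: float, r23_w_ref: float, r24_w_ref: float) -> float:
    """Assumed scaling: the target chip's R24/R23 power ratio matches the
    reference chip's (e.g. base M3 as the reference for the M3 Pro)."""
    return r23_w_target * (r24_w_ref / r23_w_ref)


if __name__ == "__main__":
    # Hypothetical device: 1000 points at 25 W under load, 5 W at idle.
    print(device_efficiency(1000, 25))   # 40.0
    print(chip_efficiency(1000, 25, 5))  # 50.0
    # Hypothetical estimate: target drew 30 W in R23; reference went 20 W -> 22 W.
    print(estimate_r24_power(30, 20, 22))
```

Subtracting idle power always raises the points-per-watt figure, and it raises it most for devices whose idle draw is large relative to their load draw, which is why the chart's numbers won't line up with Notebookcheck's own efficiency figures.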

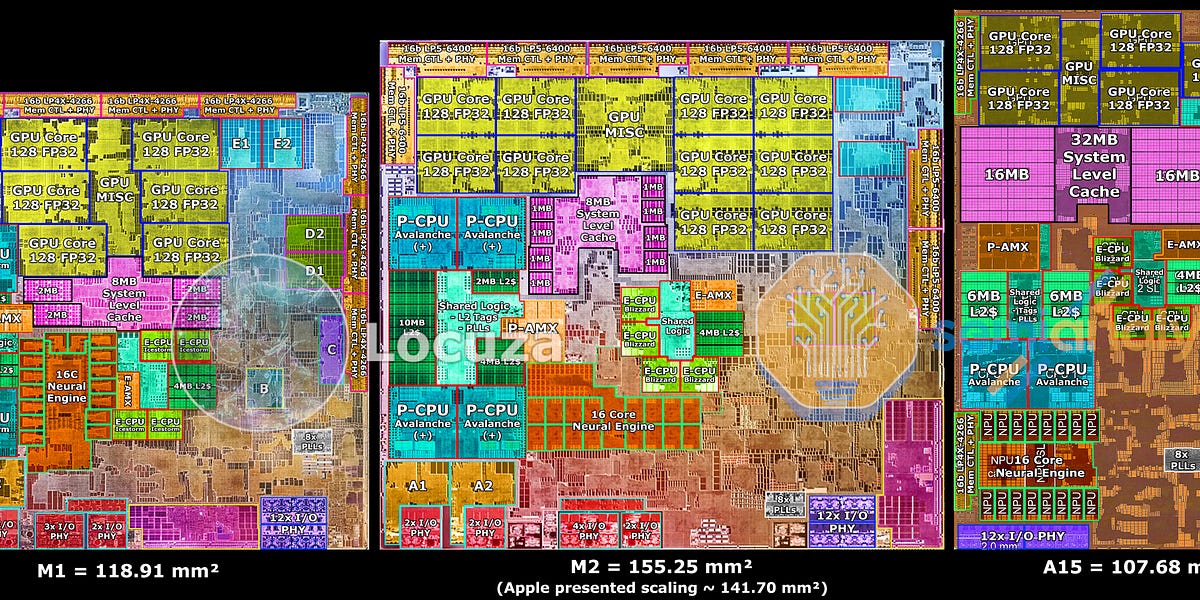

So what do we see? First off, Lunar Lake has great single-core performance and efficiency ... for an x86 chip, helped perhaps by being on a slightly better node (N3B) than the M2 Pro (N5P), HX 370 (N4), and Snapdragons (N4). Despite this advantage, the Snapdragons on N4 and the M2 Pro on N5P are still superior in ST performance and efficiency. The top-bin 288V might increase performance enough to match or slightly beat the Elite 84 (not pictured), but it would be at the cost of even more power. That said, the efficiency and performance improvements here are enough to make x86 potentially competitive with this first generation of Snapdragons: at least enough that, given Qualcomm's compatibility issues, Intel can claim wins over Qualcomm and begin to erode its appeal.

However, this has come at the cost of MT performance. The prevailing narrative is that without SMT2/HT, Intel struggles to compete against AMD and Qualcomm. To a certain extent that's true, but with only 4 P-cores and 4 E-cores in a design optimized for low-power settings, it was never going to compete anyway. The review mentions that it gets great battery life and decent performance on "everyday" tasks, in stark contrast to the full-tilt performance represented by Cinebench R24, and that, after all, is what this chip is for: thin-and-lights. The closest non-Apple analog to Lunar Lake is the 8c/8T Snapdragon Plus 42, whose ST performance is a little lower than the 258V's but with much, much greater efficiency, and whose MT performance and efficiency are much better than the Lunar Lake chip's. However, the Snapdragon Plus 42 has a significantly cut-down GPU, which was already the weakest part of the processor. I'm not saying it can't be a compelling product, especially if priced well, but given the compatibility issues it's a tougher sell for Qualcomm than it would've been last year.

As for AMD, there is no current analog to Lunar Lake in its lineup. Sure, a down-clocked HX 370 gets fantastic performance/efficiency at 18W ... but that's to be expected from a 12c/24T design, which would frankly be cramped inside thin-and-lights; it's not really meant for that kind of device. It's an Mx Pro-level chip at heart and should be compared to the upcoming Intel Arrow Lake mobile processors. AMD's smaller Kraken Point is supposedly coming out next year with a more similar CPU, but it is again rumored to cut down the GPU, and according to the Notebookcheck review, the Intel iGPU in Lunar Lake is already competitive with, if not better than, the AMD iGPU in the larger Strix Point. It's fascinating how AMD and Qualcomm both went for more workload-oriented, CPU-heavy designs while Intel has basically designed Lunar Lake to be like the base M3: more well-rounded.

But that brings us to the M3, and the comparisons here are pretty ugly for all of its competitors. Again, Apple tends to do very well in CB R24, so we shouldn't extrapolate from this one benchmark that it will be quite this superior to AMD, Intel, and Qualcomm in every benchmark. With that caveat aside ... damn. The ST performance and efficiency are out of this world and simply blow away the other N3B chip, the Lunar Lake 258V, with both a large performance gap and an even larger efficiency gap of nearly 3x. Even the M2 Pro and Snapdragons are just not that close. Sure, in MT a down-clocked Strix Point can match the base M3's performance profile at 18W, but that is a massive CPU by comparison, and Apple's chip that is actually comparable to the HX 370, the M3 Pro, is leagues better than anything else in this chart, including the HX 370. I have to admit: Apple has adopted the 6 E-core design for the base M4, but if the M4 Pro doesn't get its own bespoke SoC design and is instead a chop of the Max, then, depending on how Apple structures the upcoming M4 Max/Pro SoC, it would be a shame to see Apple lose a product at this performance/efficiency point. The M3 Pro is rather unique. Its 6+6 design also really highlights how much the E-cores (and P-cores) improved moving from the M2 to the M3, especially in this workload.

Meanwhile, the two chips with CPU designs comparable to the base M3, the Plus 42 and the 258V, are simply no match for it in MT, requiring twice the power or more to match its performance or otherwise offering significantly reduced performance at the same power level. Intel claimed to match or beat the M3 in a variety of MT tasks in its marketing material, but aside from specially compiled SPEC benchmarks, you can see how much power it takes for it to actually do that. Basically, Apple can offer a high level of performance (for the form factor) in a fanless design, and its competitors, including the N3B Lunar Lake, simply cannot. Like Intel with Lunar Lake, Apple went for a balanced design here, pairing its CPUs with powerful-for-their-class iGPUs (though obviously some of these chips, especially Strix Point, can also be paired with mobile dGPUs). There is a point to be made about the base 14" MacBook Pro, which is quite expensive, has a fan, and still uses the base M3 with low base memory/storage options, but even so, as we can see above, the base Apple chip is not without its merits at that price point/form factor. Oh, and ... this is the performance/efficiency gulf between the newest generation of AMD, Intel, and Qualcomm processors and the M3 ... with the M4 Macs about to come out. 😬
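The claim that the Plus 42 and 258V need double or more power to match the M3 is an iso-performance reading of the chart: pick a target score and find the power each chip needs to reach it. Here is a minimal Python sketch of that reading. It assumes each chip's curve is a handful of measured (watts, score) points and that linear interpolation between them is good enough; the function names and curve values are hypothetical, not taken from the chart.

```python
# Sketch of an iso-performance comparison via linear interpolation.
# Assumes each curve is a list of measured (watts, score) points and that
# score rises monotonically with power. Curve values are hypothetical.


def power_for_score(curve: list[tuple[float, float]], target: float) -> float:
    """Interpolate the power at which a chip's curve reaches `target` points."""
    pts = sorted(curve)  # sort by watts
    for (p0, s0), (p1, s1) in zip(pts, pts[1:]):
        if s0 <= target <= s1:
            if s1 == s0:
                return p0
            return p0 + (target - s0) * (p1 - p0) / (s1 - s0)
    raise ValueError("target score is outside the measured range")


def iso_performance_power_ratio(curve_a, curve_b, target: float) -> float:
    """How many times more power chip A needs than chip B to hit `target`."""
    return power_for_score(curve_a, target) / power_for_score(curve_b, target)


if __name__ == "__main__":
    chip_a = [(10.0, 500.0), (20.0, 800.0), (40.0, 1000.0)]  # hypothetical
    chip_b = [(5.0, 500.0), (10.0, 800.0), (20.0, 1000.0)]   # hypothetical
    print(power_for_score(chip_a, 650.0))                      # 15.0
    print(iso_performance_power_ratio(chip_a, chip_b, 800.0))  # 2.0
```

Reading the chart the other way (fix the power, compare the scores) gives the iso-power view; both are slices of the same curves, which is why a chip can look "twice as hungry" at one target score and "much slower" at one power level.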

Comparing the Snapdragon and HX 370, the higher-end Elite chips (e.g. the Elite 80) should sit on the same multicore performance/W curve as the HX 370 while being about 20% smaller, on a slightly older, lower-performing node (with the same density, though). Single-thread is a similar story but greatly exaggerated: the Oryon core is again roughly 20% smaller than the Zen 5 core but has much greater ST performance and efficiency. This represents a manufacturing and perf/W advantage for these ARM chips relative to x86. That said, is this a big enough advantage to overcome the compatibility issues? Maybe, maybe not. Further, we don't yet know how Arrow Lake and Intel's new cores compare in size. Compatibility issues may diminish over time, but the advantages Qualcomm enjoys may also shrink.
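The die-size argument is simple arithmetic: if two chips sit on the same perf/W curve and one is about 20% smaller, its performance per unit area is 1 / 0.8 = 1.25x the other's, i.e. about 25% more. A tiny Python sketch of that ratio, assuming "20% smaller" refers to area and that both chips deliver equal performance at the power level being compared; the function name is hypothetical.

```python
# Relative performance per unit area of chip A vs chip B.
# Assumes "20% smaller" means area_A / area_B = 0.8 and equal performance
# at the compared power level (perf_A / perf_B = 1.0).


def relative_perf_per_area(perf_ratio: float, area_ratio: float) -> float:
    """(perf_A / area_A) / (perf_B / area_B), given perf_A/perf_B and area_A/area_B."""
    if area_ratio <= 0:
        raise ValueError("area ratio must be positive")
    return perf_ratio / area_ratio


if __name__ == "__main__":
    ratio = relative_perf_per_area(perf_ratio=1.0, area_ratio=0.8)
    print(f"{ratio:.2f}x performance per unit area")  # 1.25x performance per unit area
```

A smaller die at equal performance also generally means more candidate dies per wafer, which is the manufacturing side of the same advantage.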

Another thing that occurred to me looking at this chart, comparing the Qualcomm Elite to the M2 Pro: I once estimated that the Qualcomm Elite was missing 20% of its expected multicore performance in CB R24, based on how 12 M2 P-cores should behave. However, here we see that for the same ST CB R24 performance, the Elite 80 is ... 20% less efficient than the M2 Pro's P-core. If that's also true at lower clocks (i.e. in a multithreaded scenario), then that alone could explain the discrepancy. That's a big if, but it's plausible.
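The reasoning in the last paragraph can be checked with a back-of-the-envelope model: at a fixed power budget, an MT score is roughly the budget times points per watt, so a per-core efficiency deficit carries straight through to the MT score. A minimal Python sketch, assuming "20% less efficient" means 0.8x the points per watt, that this ratio holds at MT clocks (the big if above), and that uncore power can be ignored; names and numbers are hypothetical.

```python
# Back-of-the-envelope iso-power model (hypothetical numbers):
#   MT score ~= power budget (W) x points per watt
# Assumes the per-core efficiency ratio measured in ST also holds at the
# lower clocks used in MT, and ignores uncore/fabric power.


def mt_score(power_budget_w: float, points_per_watt: float) -> float:
    """Approximate multithreaded score at a fixed package power."""
    return power_budget_w * points_per_watt


def iso_power_shortfall(eff_a: float, eff_b: float, power_budget_w: float) -> float:
    """Fraction of chip B's MT score that chip A misses at the same power."""
    score_a = mt_score(power_budget_w, eff_a)
    score_b = mt_score(power_budget_w, eff_b)
    return 1.0 - score_a / score_b


if __name__ == "__main__":
    # Chip A at 0.8x chip B's points per watt; the budget cancels out.
    for budget_w in (20.0, 40.0, 60.0):
        print(budget_w, round(iso_power_shortfall(8.0, 10.0, budget_w), 3))  # shortfall 0.2
```

Because the power budget cancels, a 20% efficiency deficit predicts a 20% MT shortfall at any budget, which is why it could account for the whole gap if the ST ratio really does hold at MT clocks.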

References:

- Notebookcheck: MacBook Air M3 review (10-core GPU, Wi-Fi 6E, 16 GB RAM). www.notebookcheck.net
- Notebookcheck: MacBook Air 15 M3 review (16 GB RAM, Wi-Fi 6E). www.notebookcheck.net
- Notebookcheck: MacBook Pro 14 M3 Pro review (11-core M3 Pro, 18 GB RAM, 512 GB SSD, 2,499 Euro). www.notebookcheck.net