I'd like to see some sustained transfer benches of your boot and scratch arrays, preferably with the AJA System Test.

That is indeed quite a setup you've got there. Would be even better if you could provide some pics.

Raid card for Crucial RealSSD C300

- Thread starter banosr

BTW, don't ever try to place MLC-based SSDs in a parity array, as they're not up to the write-cycle frequency.

This doesn't make sense. A parity array does not increase the number of writes to an individual disk. Sure, you have to write two (or three) pieces of data, but you'd have to do that when making duplicates anyway. It is rather hard to make duplicates without writing more than once.

If the write-cycle frequency is too high for a single disk, then parity (or full duplication) doesn't make it any better or worse.

It has a marginal impact if the RAID controller uses just one drive for parity (not sure why you would want to do that, since it localizes the writes onto a single disk and hence a single queue). In that case the workload is not being distributed, but it is still no more than if you had a single disk and no RAID.

However, a distributed-parity setup gets a benefit similar to RAID 0's in distributing the writes across devices (just over a smaller subset of the disks; some disks are left out of each write). The per-disk count isn't as low as with RAID 0, but you get higher reliability, so it doesn't come for free.

A hard disk will "wear out" over time just like an MLC disk will. The specific failure modes may differ, but they both wear. You need to be aware of the write-cycle limitations, but that's conditional on the workload. A mainstream 70/30 read/write ratio is likely OK. RAID with parity doesn't de facto mean the read/write ratio is 50/50 or 40/60.
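The write-distribution argument can be made concrete with a rough model. This Python sketch is an illustration under stated assumptions, not how any particular controller behaves: writes are spread uniformly, small RAID 4/5 writes are read-modify-write (one data block plus one parity block), and controller caching and SSD write amplification are ignored. `busiest_disk_writes` is a hypothetical helper:

```python
from fractions import Fraction


def busiest_disk_writes(layout: str, n: int, full_stripe: bool = False) -> Fraction:
    """Physical block writes landing on the most-loaded member disk per
    logical block written by the host.

    Simplified model (an assumption, not any controller's documented behaviour):
    uniform placement, read-modify-write for small RAID 4/5 writes, and no
    controller caching or SSD-internal write amplification.
    """
    if layout == "single":
        return Fraction(1)
    if layout == "raid0":
        # each logical block lands on exactly one of n disks
        return Fraction(1, n)
    if layout in ("raid4", "raid5"):
        if full_stripe:
            # n-1 data blocks plus 1 parity block: one block per disk per stripe
            return Fraction(1, n - 1)
        if layout == "raid4":
            # the dedicated parity disk is rewritten on every small write
            return Fraction(1)
        # one data block + one parity block, both rotating across all n disks
        return Fraction(2, n)
    raise ValueError(f"unknown layout: {layout!r}")


for layout in ("single", "raid0", "raid4", "raid5"):
    print(layout, busiest_disk_writes(layout, 4))
```

With n = 4 it gives 1 for a lone drive, 1/4 for RAID 0, 1 for a dedicated (RAID 4) parity disk and 1/2 for RAID 5 small writes. Under this model a distributed-parity member never takes more writes than a lone drive would, yet for small random writes it takes twice as many as a RAID 0 member on the same drives, which is the comparison that matters if the alternative is a stripe set.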

The next step is a hardware RAID card, not a SATA controller (which is what's been linked so far, though true RAID cards have been mentioned: ATTO products are the only 6.0Gb/s RAID cards available right now).

My other favorite (better configuration flexibility and lower cost) is Areca. Unfortunately, they don't have any 6.0Gb/s RAID products available right now.

nanofrog, Areca has a new card series that supports 6.0Gb/s:

http://www.areca.us/products/1880.htm

doc

We've crossed this bridge before, and it always seems to be a misreading/misunderstanding of what was posted.

The comment was aimed at using a striped SSD set for scratch, but there are other issues as well (mentioned before), such as the available bandwidth in the ICH.

If a user is willing to accept, say, the 40GB OWC unit and realizes they'll need to replace it on an MTBR of 1 - 1.5 years (worst case = $100 per year at the current MSRP), then it could work for Photoshop's scratch space, and only one would be needed. But this may not be acceptable for everyone, particularly enthusiast/hobbyist users, going by the budgets listed in various posts asking for RAID setup help.
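The MTBR math is just price divided by replacement interval. A sketch in Python; the $100 unit price is inferred from the "$100 per year" worst case at a 1-year interval, so treat it as an assumption rather than a quoted MSRP:

```python
def annual_replacement_cost(unit_price: float, replace_every_years: float) -> float:
    """Yearly cost of treating an SSD as a consumable replaced on a fixed schedule."""
    if replace_every_years <= 0:
        raise ValueError("replacement interval must be positive")
    return unit_price / replace_every_years


# Hypothetical $100 unit price (inferred, see above), over the 1 - 1.5 year MTBR range.
for years in (1.0, 1.5):
    print(f"{years} yr: ${annual_replacement_cost(100, years):.2f}/yr")
```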

There are solutions for the bandwidth issues with the ICH, of course (all other issues being either not applicable, such as OS/applications drive use, or decided to be acceptable). ATTO's 6.0Gb/s non-RAID HBAs or the 6.0Gb/s RAID products (ATTO and Areca), for example, but those are rather expensive for most users. A simple 6.0Gb/s SATA card could also help, but so far none that I've seen are EFI bootable, and those currently with OS X drivers are eSATA only. I realize it's possible to run the cables back through a PCI bracket, but it's messy IMO, and the cards (e.g. NewerTech) are also a bit limited (500MB/s at best, as they're 1x lane Gen. 2.0 cards).
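The 500MB/s ceiling for a 1x Gen. 2.0 card follows from the link arithmetic. A minimal Python sketch, assuming only the nominal signalling rates and the 8b/10b line encoding used by PCIe Gen 1/2 and SATA (packet overhead ignored, so real numbers are lower):

```python
# Nominal per-lane signalling rates (GT/s) for the PCIe generations discussed here.
PCIE_GT_PER_LANE = {1: 2.5, 2: 5.0}


def pcie_mb_s(gen: int, lanes: int) -> float:
    """Approximate one-direction PCIe bandwidth in MB/s.

    PCIe Gen 1 and Gen 2 use 8b/10b encoding: 10 bits on the wire carry one
    byte of data. Packet/protocol overhead is ignored.
    """
    return PCIE_GT_PER_LANE[gen] * 1000 / 10 * lanes


def sata_mb_s(gbit_per_s: float) -> float:
    """Approximate SATA payload bandwidth in MB/s (SATA also uses 8b/10b)."""
    return gbit_per_s * 1000 / 10


print(pcie_mb_s(2, 1))  # a 1x Gen 2 card
print(sata_mb_s(6.0))   # one SATA 6Gb/s port
```

So a 1x Gen 2 card has less bandwidth than a single SATA 6Gb/s port, before overhead.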

MLC-based SSDs in parity-based arrays are a bad idea given the current write-cycle limitations. SLC is fine, but still quite expensive. This will change over time (eMLC, lower prices on SLC, and other Flash technologies that haven't reached the market yet), but for the moment, the cost is still too high for most.
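How write-cycle limits translate into service life can be estimated roughly as total NAND write budget divided by daily NAND writes. A Python sketch; the P/E ratings, daily write volume and write-amplification factor below are hypothetical placeholders, not specs of the OWC, Crucial or any other drive:

```python
def endurance_years(capacity_gb: float, pe_cycles: float,
                    host_gb_per_day: float, write_amplification: float = 1.0) -> float:
    """Rough flash wear-out estimate: total NAND writes the cells can absorb,
    divided by the NAND writes generated per day. Ignores spare area,
    wear-levelling efficiency and data retention effects."""
    nand_budget_gb = capacity_gb * pe_cycles
    nand_gb_per_day = host_gb_per_day * write_amplification
    return nand_budget_gb / nand_gb_per_day / 365


# Hypothetical inputs, chosen only for illustration (not specs of any drive):
# a 40GB scratch disk taking 200GB/day of host writes with write amplification 2.
mlc = endurance_years(40, 5_000, 200, 2.0)    # assumed MLC-class P/E rating
slc = endurance_years(40, 100_000, 200, 2.0)  # assumed SLC-class P/E rating
print(f"MLC ~{mlc:.1f} years, SLC ~{slc:.1f} years")
```

In a parity array, `host_gb_per_day` would be each member's share of the writes, which depends on the layout and on the mix of small and full-stripe writes.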

I know, and have posted this in another couple of threads.

Best yet, they're actually available (ATTO's are harder to find in RAID versions). ATTO's H6xx products (non-RAID HBAs) are readily available from what I've seen (cheaper too, which could be of interest for those wanting to run SSDs on an internal controller for either additional ports or bandwidth reasons).

Here are the computer pics

As you can see, I'm not the best at routing cables, or at taking pictures for that matter. I had to custom-make the top of the DX4 and have it soldered to the front part to fit all the disks the way I wanted. Hope this helps.

Attachments

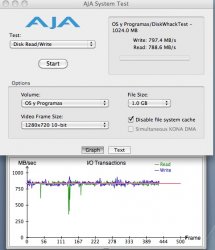

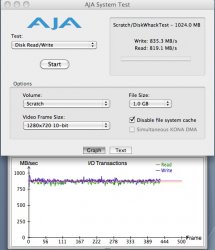

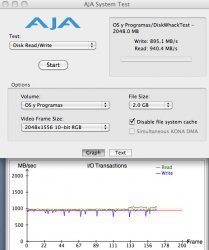

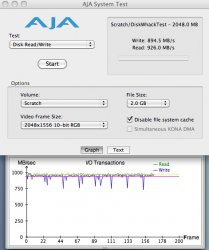

Sorry for the delay. I had to set everything up, it was a holiday in Mexico, and I also have to work from time to time, but here are the tests and the photos.

Is the image on the left the 4x SSD stripe set?

I ask, as I'd have expected more from 4x C300s. The VRs do look good, however. I wonder what's going on.

BTW, what are the stripe sizes? You may want to experiment with them to see if you can eke out any performance improvement.

I had a mix-up with the drives; I will be reposting the exact array setup as well as the tests.

Sorry for the mix-up.

Don't be sorry, bro. First off, thanks for getting back with some photos. Second, which machine is this: a 2010 or 2009 Pro?

It's a 2009

Not a problem, and as already mentioned, it's nothing to be sorry about.

The test you've done looks good (10-bit, 2GB, cache disabled).

I don't know how the file size or video frame rates influence the results; if you need different parameters, please let me know.

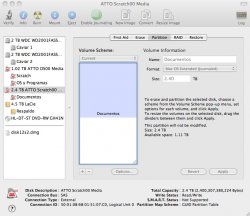

The SSD array has 2 active partitions of 128 GB each.

Run the test again with the cache enabled, and see what happens.

As a reminder, what stripe size did you use when creating the SSD array?

You may want to experiment with this, as you may be able to increase the performance.

Create the array with different stripe sizes and test the throughputs (including real-world testing, i.e. applications with test files). Once done, you'll see which is the best fit for your usage.

When I created the array I left everything at the defaults, since I don't have much knowledge of what the changes would do.

I know this takes time, but it's worth it. And you'll learn about your setup's operation.
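For the throughput half of that experiment, a rough sequential-write check can be scripted alongside the AJA runs. A minimal sketch in Python, assuming you rebuild the array with each stripe size, mount it, and point the function at the mount point (the `/Volumes/Scratch` path and the function name are hypothetical); it is no substitute for real-world testing with your applications:

```python
import os
import tempfile
import time


def sequential_write_mb_s(directory: str, total_mb: int = 2048, chunk_mb: int = 4) -> float:
    """Write total_mb of data to a scratch file in `directory` in chunk_mb
    pieces, fsync it, delete it, and return the observed MB/s.

    Caveat: on macOS, fsync() may not force the drive's own write cache to
    flush, so treat the result as approximate and repeat each run a few times.
    """
    chunk = os.urandom(chunk_mb * 1024 * 1024)  # random (incompressible) test data
    n_chunks = max(1, total_mb // chunk_mb)
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(n_chunks):
                f.write(chunk)
            f.flush()
            os.fsync(f.fileno())  # push data out of the OS page cache
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)  # always clean up the scratch file
    return n_chunks * chunk_mb / max(elapsed, 1e-9)


# Hypothetical mount point: point this at each test array in turn.
# print(sequential_write_mb_s("/Volumes/Scratch"))
```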

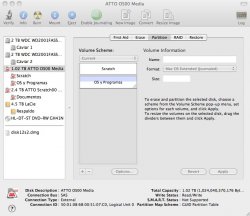

Setup

Mac Pro

Quad-Core Intel Xeon 2.26 GHz

Memory 16 GB

Mac OS X 10.6.4

- 4 Crucial RealSSD C300 (boot drive and apps) in Transintl DX4

- 4 10k VelociRaptors (scratch) connected to MaxConnect SAS/SATA BackPlane Attachment for Mac Pro [2009]. Attaching the backplate to the DX4 was tricky (it involved a visit to the blacksmith).

- 2 WD Caviar Black 2TB (Backup 1) in Transintl Pro Caddy 2 in the optical bay.

- 1 x ATTO Technology ExpressSAS R608 8-Port Internal 6Gb/s SAS SATA RAID Controller.

- LaCie 12big Rack Fibre via NAS as backup in RAID 5, connected to an Xserve

The 4 Crucials and the 4 VelociRaptors are connected to the ATTO (2 striped sets); the 2 Caviars (software striped) are connected to the SATA out ports of the backplane in bays 1 and 2.

Please let me know what benches you would like and what programs to use.

I still have not been able to boot from the ATTO card, even though boot is enabled in the ATTO configuration. I hope to fix this soon, since I am currently booting from my previous HD connected to the DVD cable.

The OP is probably long gone, but I've stumbled into this thread and am wildly intrigued. If I'm reading the bench results correctly, the OP seems to have surpassed the ICH bandwidth limit of 690 or so and arrived at a really fast configuration (in the 800MB/s range) without going outside the Mac, i.e. without an external 4- or 8-bay tower.

I'm wondering what a setup like this might cost. Can anybody hazard a guess?

- Julian

The SSDs, HDDs, RAID card, and mount alone (using the cheapest drives that fit):

- ATTO R608: $838
- DX4: $129
- HDD adapter kit: $129
- 4x Crucial 64GB C300 SSDs: $620
- 4x 150GB VelociRaptor HDDs: $720

Subtotal: $2436
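The subtotal can be rechecked, or re-priced with different drives, with a few lines of Python; the dictionary simply holds the figures listed above:

```python
# Prices as listed above (USD at the time of posting).
parts = {
    "ATTO R608": 838,
    "DX4": 129,
    "HDD adapter kit": 129,
    "4x Crucial 64GB C300 SSDs": 620,
    "4x 150GB VelociRaptor HDDs": 720,
}

subtotal = sum(parts.values())
print(f"Subtotal ${subtotal}")
```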

Of course, the cost goes higher with larger-capacity drives, and the rest of the system may scare the pants off of you.

BTW, the ICH limit is ~660MB/s (690 is more than it's capable of).

Nano,

Cool, thanks for the above pricing info.

Q: One thing I'm not totally understanding is the backplane thingamajig from MaxUpgrades you've linked. Is it just a connector? A cable? A way to re-route either the 4 SSDs or the 4 Raptors to the ATTO card, or away from the ICH? Is this how the 660 ICH limit is surpassed?

Q: How is it that this setup stays entirely internal and exceeds the ~660MB/s limit of anything put inside the Mac? My crude and admittedly technically challenged logic can't wrap my head around this. Perhaps the re-routing going on in the OP's configuration is where the magic is?

Q: I see an abundance of members here wanting to have the boot and apps on a stripe set of 2, 3, and in this case, 4 SSDs. I must be missing something. What is the point? (Not being argumentative here, just curious.) Is it just to get faster boot-ups or faster application launches? Perhaps I don't see any great benefit because I typically only run one application (Photoshop) 10 hours a day? I know there is some benefit to fast read times on the boot drive when the OS does its swap thing, but I'm uncertain how often or to what extent this occurs.

Thanks!

Anand is not recommending these drives for use in Macs that lack TRIM support...

http://www.anandtech.com/show/3812/the-ssd-diaries-crucials-realssd-c300/9

Measureabators stuck in an Escher loop forever obsessing over how long it takes to complete a task.................. how sad.

On a more grounded note, I haven't seen any change in the performance of my C300. However, I do run my SSDs at <50% fill, so maybe that explains why I haven't seen any change in drive performance.

Like everything in life.............. YMMV.

cheers

JohnG

It's a kit composed of machined trays and cables (2x: one for data, one for power) that attach to each drive and terminate in a single MiniSAS connector (the version you need, aka SFF-8087, which is the connector most RAID cards use).

You need this because the backplane connectors (SATA + power) are soldered directly to the backplane board (which has the PCIe slots soldered to it as well). Since 2009, the OEM trays are different (longer) so that the drives plug directly into the connectors on the backplane board.

So the new drive tray/mounts in the MaxUpgrades kit are shallower in order to allow for the necessary cables to be plugged into the drive.

The downside to this kit is that you're no longer using those 4x SATA ports on the ICH.

You may be able to use them anyway, via right-angle SATA cables for example, and route them somewhere else (say, an optical bay or a DX4). This idea may or may not work, so it's just a possibility (assuming you can find physical locations for the disks, a routing path for the cables, and a means of powering the drives).

Hopefully, this makes sense.

You're moving the bandwidth used over to the PCIe lanes (ideally, put the RAID card in slot 2, as it's an 8x lane unit; it will still work in slot 3 or 4, but only has 4x lanes available there).
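The effect of moving the array off the ICH can be sketched as a simple bottleneck: best-case sequential throughput is the drives' combined speed capped by the link they share. The 660MB/s ICH figure is the one quoted in this thread; the per-drive speed is a hypothetical placeholder, the PCIe figure assumes Gen 2 lanes at 500MB/s each, and the RAID card's own processing limits are ignored:

```python
ICH_LIMIT_MB_S = 660            # ICH ceiling quoted in this thread
PCIE_GEN2_MB_S_PER_LANE = 500   # assumed: 5 GT/s with 8b/10b encoding, one direction


def array_ceiling_mb_s(n_drives: int, per_drive_mb_s: float, path_limit_mb_s: float) -> float:
    """Best-case sequential throughput: the drives' combined speed, capped by
    whatever link they share. Ignores the RAID card's own processing limits."""
    return min(n_drives * per_drive_mb_s, path_limit_mb_s)


PER_DRIVE = 250  # hypothetical sustained MB/s per SSD, for illustration only
print(array_ceiling_mb_s(4, PER_DRIVE, ICH_LIMIT_MB_S))               # on the ICH
print(array_ceiling_mb_s(4, PER_DRIVE, 8 * PCIE_GEN2_MB_S_PER_LANE))  # card in slot 2 (8 lanes)
print(array_ceiling_mb_s(4, PER_DRIVE, 4 * PCIE_GEN2_MB_S_PER_LANE))  # card in slot 3 or 4 (4 lanes)
```

With four drives at that assumed speed, either slot has headroom; the 4-lane slots only become the limit once the drives together approach 2000MB/s.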

Performance, cost, or perhaps just someone who likes to tinker.

In terms of performance, a stripe set is usually associated more with sustained transfers than with random access (which is what SSDs are really good at vs. mechanical drives), but even random access gets some improvement.
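To see why striping helps sustained transfers more than small random I/O, here is a minimal sketch of RAID 0 addressing in Python. It assumes a simple round-robin layout (stripe unit k on disk k mod n), which is an illustration rather than how the ATTO or Areca firmware is documented to lay out data; `disks_touched` is a hypothetical helper:

```python
def disks_touched(offset, length, stripe_size, n_disks):
    """Member disks a single request hits in a RAID 0 set.

    Assumes a simple round-robin layout where stripe unit k lives on disk
    k % n_disks; real controllers may map data differently.
    """
    if length <= 0:
        return set()
    first = offset // stripe_size
    last = (offset + length - 1) // stripe_size
    # Any n consecutive stripe units already cover every disk, so stop there.
    last = min(last, first + n_disks - 1)
    return {unit % n_disks for unit in range(first, last + 1)}


KB = 1024
print(disks_touched(0, 4 * KB, 128 * KB, 4))     # small random read
print(disks_touched(0, 1024 * KB, 128 * KB, 4))  # large sequential read
```

A small request is served by one disk no matter how many are in the set, while a large one keeps every member busy; the stripe size sets where that crossover happens, which is one reason it is worth testing different values.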

As for real-world performance gains, the only way to really know is to try it out (experiment), as the application details aren't really available (i.e. what runs single-threaded and multi-threaded in suites such as CS5). Users who have gone to similar lengths have posted their results (e.g. based on that information, the save operation in Photoshop is single-threaded).

Cost is much easier to determine. Here you compare whether n smaller drives in a stripe set, with the same total capacity as a single SSD, are cheaper than that single drive or not. In this case, performance may be secondary (i.e. budgetary limitations rank first and performance second, not that performance isn't an issue at all). It may or may not work out, as it will depend on the specific drives being compared.

But if you need to add a card, a RAID card plus the smaller drives will cost more than the single drive (a RAID card is where performance trumps cost). A simple SATA 6.0Gb/s card (eSATA, actually) is much easier to deal with IMO, and may tip the cost in favor of multiple drives (though the currently available models can't boot OS X, and the cables must be run out through an empty PCI bracket to the ports on the card). Not pretty, but functional.
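The cost comparison above is simple arithmetic. A sketch in Python, with every price a hypothetical input rather than a quote; `compare_costs` is an illustrative helper:

```python
def compare_costs(single_price, small_price, n_small, card_price=0.0):
    """Return (cheaper option, stripe-set total) for equal total capacity.

    All prices are inputs you supply; none are taken from this thread.
    """
    stripe_total = n_small * small_price + card_price
    winner = "stripe set" if stripe_total < single_price else "single drive"
    return winner, stripe_total


# Hypothetical prices for illustration only:
print(compare_costs(600, 140, 4))                  # no extra card needed
print(compare_costs(600, 140, 4, card_price=300))  # RAID card required
```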

Somewhat relevant to this thread so I will ask my question here:

I just purchased a 12-core Mac Pro and am looking to fill it with 8 x 256GB C300 SSDs, all striped together in a single RAID 0 array.

This will function as my startup, apps, and media drive.

I have a 14TB DroboPro running Time Machine as my backup, so there's no need for RAID redundancy. I'm just looking for maximum speed.

My questions are:

Is there a RAID card with 8 internal connections, 6Gb/s & bootable?

I found ExpressSAS R608 and the Areca ARC-1880... but not sure if I can boot up off of these.

Is there something I am overlooking... is this system going to work as planned (provided I find an appropriate RAID controller)?

Thanks!

You might want to check this comparison test on the Crucial SSDs:

http://macperformanceguide.com/SSD-RealWorld-SevereDuty.html

Both of those cards are bootable once you flash the firmware to the EFI version (you can get it off the disk that comes with the card, or off the support site).

Between the two, the Areca is the better choice (better price/performance ratio; it includes internal cables, which are about $30 USD each if you have to buy them, so it saves you another $60 or so on top of the price difference between the models).

You'll also need a mount of some sort (MaxUpgrades has a kit that will fit 8x 2.5" SSDs in a single optical bay; it also includes cables to get power to the drives; here).

How the heck did I miss this thread?

Impressive results you've got there. Thanks for providing benchmarks and pictures!
