Were those Hitachis SAS? Particularly 15k rpm units?

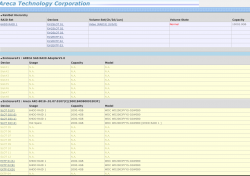

I ask, as 15k disks would be able to generate those kinds of throughputs (and they could be used with your card - you'd have to test them, but all SAS disks are enterprise grade, so they have the right timings). If you have the exact model number of those Hitachis, you can check whether they're on the HDD Compatibility List, which I expect they would be.

Now, once it's done with the initialization, you have a lot of work to do (and you'll be learning first hand how the card will react).

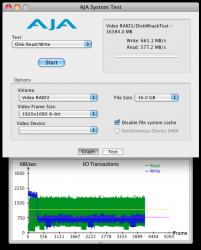

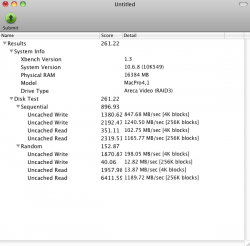

- Performance testing.

- Failure testing.
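For the performance side, a rough sequential throughput check can be scripted. This is only a minimal sketch, not a substitute for a proper benchmark tool: the function names, file path, and sizes are all made up for illustration, and the read figure can be inflated by the OS cache if the test file is small compared to system RAM.

```python
import os
import time

def sequential_write_mb_s(path, total_mb=64, block_kb=1024):
    """Write roughly total_mb of random data to path; return MB/s."""
    block = os.urandom(block_kb * 1024)
    blocks = (total_mb * 1024) // block_kb
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(block)
        f.flush()
        os.fsync(f.fileno())  # ask the OS to push the data down to the device
    elapsed = time.perf_counter() - start
    return (blocks * len(block)) / (1024 * 1024) / elapsed

def sequential_read_mb_s(path, block_kb=1024):
    """Read path back front to back; return MB/s (may be cache-assisted)."""
    size = os.path.getsize(path)
    start = time.perf_counter()
    with open(path, "rb") as f:
        while f.read(block_kb * 1024):
            pass
    elapsed = time.perf_counter() - start
    return size / (1024 * 1024) / elapsed
```

Point `path` at a file on the array and use a `total_mb` well above the amount of RAM in the system if you want the numbers to reflect the disks rather than the cache.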

Place test/dummy data on the array (after performance testing), and do the following...

- Start a write, and pull the power (tests out the UPS).

- Do this again with the UPS out of the loop (plug the system directly into the wall = tests out the BBU).

- Start a read and pull a disk (pull it from the external enclosure, as it has an inrush current limiter for Hot Plugging).

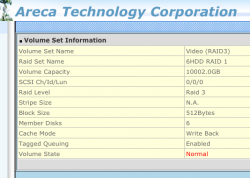

- Pay attention to the performance of RAID 3.

- Replace the disk, and watch what the card does again, including performance.

- Do the same with a write (yank disk as above, and again, pay attention to the performance).
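To tell whether the dummy data actually survived each of the steps above, it helps to record checksums before you start pulling power or disks. A minimal sketch, assuming the array is mounted as an ordinary directory; the function names and file naming are hypothetical, and it only checks data already at rest (a file that was mid-write when the power went is expected to be incomplete).

```python
import hashlib
import os

def write_test_files(directory, count=4, size_mb=1):
    """Create dummy files and return {filename: sha256} for later checking."""
    os.makedirs(directory, exist_ok=True)
    manifest = {}
    for i in range(count):
        name = f"dummy_{i:03d}.bin"
        data = os.urandom(size_mb * 1024 * 1024)
        with open(os.path.join(directory, name), "wb") as f:
            f.write(data)
            f.flush()
            os.fsync(f.fileno())  # make sure it is on the array, not in RAM
        manifest[name] = hashlib.sha256(data).hexdigest()
    return manifest

def verify_test_files(directory, manifest):
    """Return the names of files that are missing or no longer match."""
    bad = []
    for name, expected in manifest.items():
        path = os.path.join(directory, name)
        try:
            with open(path, "rb") as f:
                actual = hashlib.sha256(f.read()).hexdigest()
        except OSError:
            bad.append(name)  # missing or unreadable counts as a failure
            continue
        if actual != expected:
            bad.append(name)
    return bad
```

Keep the returned manifest somewhere off the array (another disk, or just printed out), then run `verify_test_files` after each pull and again after the rebuild finishes; an empty list means everything still matches.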

It's a lot of work, but you'll thank yourself later, as you'll get a good understanding of how the card actually works, and what to expect in the event of a real failure (most data loss is the result of user error, even in fault states/degraded arrays on hardware RAID controllers).

So it really is in your best interest to figure this out now rather than later, when it matters and a mistake can cost you either your data or a lot of extra time that knowing the right methodology from the start would have saved.