I hope it's OK to resurrect an old thread. I'm trying to find out if my experience is normal with a new (to me) flashed Radeon 7990.

It's an MSI OC Edition, which I believe is a reference card that's factory-overclocked by about 9%, a little slower than the GHz Edition. The card works fine and benchmarks fine in my Mac Pro 5,1. But it's always kicking out a lot of heat. One of the reasons I chose this card was that every review showed low power consumption at idle. It's a power hog when working hard, but my workflow won't have it working hard for hours on end.

Anyway, I checked HardwareMonitor, and it shows power consumption at idle of around 60 watts: around 30 watts from the PCIe slot and around 15 each from the two booster cables. Review sites say it should only draw 10–14 watts at idle, so I'm seeing roughly 4 to 6 times that.

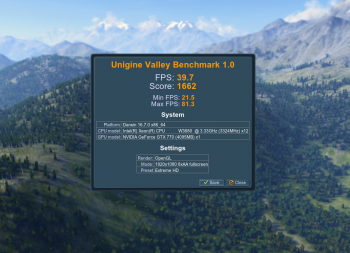
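For anyone checking the math, here is the rough comparison as a quick Python sketch. The per-rail numbers are the approximate HardwareMonitor readings above and the 10–14 W range is what the reviews quoted; nothing else is assumed.

```python
# Approximate idle readings from HardwareMonitor, in watts.
slot_w = 30        # PCIe slot
aux_w = [15, 15]   # the two auxiliary ("booster") power cables
measured_idle_w = slot_w + sum(aux_w)

# Idle figures quoted by review sites, in watts.
review_low_w, review_high_w = 10, 14

# How many times the review figures the measured idle draw is.
worst = measured_idle_w / review_low_w   # compared with the lowest review figure
best = measured_idle_w / review_high_w   # compared with the highest review figure

print(f"measured idle: {measured_idle_w} W")
print(f"ratio vs reviews: {best:.1f}x to {worst:.1f}x")
```

This prints a total of 60 W and a ratio of about 4.3x to 6.0x.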

Meanwhile, running full tilt in a stress test, I can't get it to pull more than 185 watts. So its peak draw is lower than I expected, while its idle draw is much higher.

Is there anything I can do about this? It's not the end of the world, but it would be nice not to have a space heater under my desk all summer long.

FWIW, AMD advertises power-saving features on this card that reduce energy consumption at idle. Is it possible that these only work under Windows?

Thanks for any thoughts.