I don't disagree with Apple's policy of moving on and pushing forward; however, my point was that the shift from PPC to Intel was disruptive on a number of levels beyond a "compiler shift", a framing which also downplays the extra libraries that those tools provided.

There were things in the PPC -> x86 shift that mattered and that the compilers couldn't automagically fix: the endian shift (big versus little) and some vectorization code (SSE, SSE2, ... Intel's alphabet soup of 'yet another try' at vectorizing). Sloppy type usage (an int treated as a pointer) mattered too, and that was a 32-bit -> 64-bit issue as well.
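To make the endian and int-as-pointer points concrete, here is a minimal C sketch. The function names are made up for illustration and don't come from any Apple or Metrowerks library. It only shows the general shape of the bugs a recompile alone wouldn't fix.

```c
#include <stdint.h>
#include <string.h>

/* Non-portable: reinterprets four bytes in the host's byte order.
   For bytes {0x00, 0x00, 0x01, 0x02}, a big-endian host (PPC) yields
   0x00000102, while a little-endian host (x86) yields 0x02010000. */
uint32_t read_u32_host_order(const unsigned char *buf) {
    uint32_t v;
    memcpy(&v, buf, sizeof v);
    return v;
}

/* Portable: assembles a big-endian value byte by byte, so the result
   is the same regardless of the host's byte order. */
uint32_t read_u32_big_endian(const unsigned char *buf) {
    return ((uint32_t)buf[0] << 24) | ((uint32_t)buf[1] << 16) |
           ((uint32_t)buf[2] << 8)  |  (uint32_t)buf[3];
}

/* Sloppy: squeezing an address into 32 bits. Harmless on a 32-bit
   target, but the upper half of the address is lost on a 64-bit one. */
uint32_t address_as_32bit(const void *p) {
    return (uint32_t)(uintptr_t)p;
}

/* Safer: uintptr_t is defined to be wide enough to hold a pointer
   and convert back to it. */
uintptr_t address_as_uintptr(const void *p) {
    return (uintptr_t)p;
}
```

Code that read file formats or network data the first way, or stashed pointers the third way, compiled cleanly on both sides of each transition and only misbehaved at run time.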

But Metrowerks' proprietary library wasn't it. Apple had their libraries under control. Mac OS X had always been built against x86 from the beginning (like NeXTStep was). Apple had an x86 track on Mac OS X from the start of 'retiring' OS 9.

I think we'll have to agree to disagree on Metrowerks being surprised. I don't think they'd have made the same decision, but we will also never know the answer there.

It wasn't Metrowerks' decision to make. It was Freescale's. Your "agree to disagree" is hooked on the premise that Metrowerks was a free-standing company. It was not at that point. Holding onto an x86 compiler was not what Freescale needed the most in 2004-5.

A newsgroup thread from the time period.

"...

The Metrowerks website now seems to indicate that Metrowerks has been absorbed into Freescale as a "team" and no longer exists as a separate company:

http://www.metrowerks.com/MW/About/default.htm

> Freescale Semiconductor’s Metrowerks organization is a silicon enablement team that helps customers experience and fully leverage the performance of Freescale products. The organization’s embedded development leadership, technology and talent are focused on driving success for Freescale and its customers.

"

and later in that thread.

"...

It occurs to me that Nokia also saw the writing on the wall, and presumably being Codewarrior users themselves, bought the x86 compilation stuff so that it wouldn't sink along with Metrowerks (or at least with the MW desktop tools dept). ..."

How would clinging to the x86 compiler, and passing up the money that Nokia was willing to hand over, have helped Freescale sell more chips? You are handwaving around that very relevant question with "agree to disagree" arm flapping. Metrowerks' moves from 1999-2003 set the groundwork for that x86 compiler 'bail out' sale to Nokia. Apple's processor move was incidental.

I do agree, though, that the move to Windows seemed like an overextension.

Which was a major issue for a company that didn't have "print money" kinds of margins and had other major investments to get returns on. It was owned by a semiconductor fabrication company that needed billion dollar fabs to be competitive. Holding onto that x86 compiler wasn't going to be a license to print money.

I feel Apple have learned from that last move, proactively deprecated support, and been relatively open about communicating the future. As you point out, Apple now have better control of their own development tooling compared to the last transition, where there was competition in this space. I'm of the camp that the 64-bit requirement for Catalina was long expected, with folk having been given the option of compiling 64-bit binaries for over a decade; anything left on 32-bit at this point was almost going out of its way to avoid it.

Apple killed off 32-bit on iOS long before it finished killing it off on the macOS side. Getting rid of 32-bit was not just part of the ARM move. iOS was on ARM then and is now. It hasn't gone anywhere, and 32-bit is dead there. Apple also killed off booting 32-bit macOS kernels a long time ago. Apple saw both 32-bit ARM and 32-bit x86 as legacy 'baggage' and unloaded them.

The major theme is that Apple keeps things more streamlined over time. Yes, the transition is coming, but making the software stack more streamlined is just something Apple is going to do even when there is no major hardware change impending.

I think making Catalina the cutting point was the push to clean out the 32-bit apps to simplify Rosetta on ARM and get people used to the idea that an app that might not have been updated for a decade is not going to work moving forward.

How did dumping 32-bit ARM from iOS help make Rosetta simpler? The *BIGGER* issue was that Apple canned 32-bit ARM apps years before.

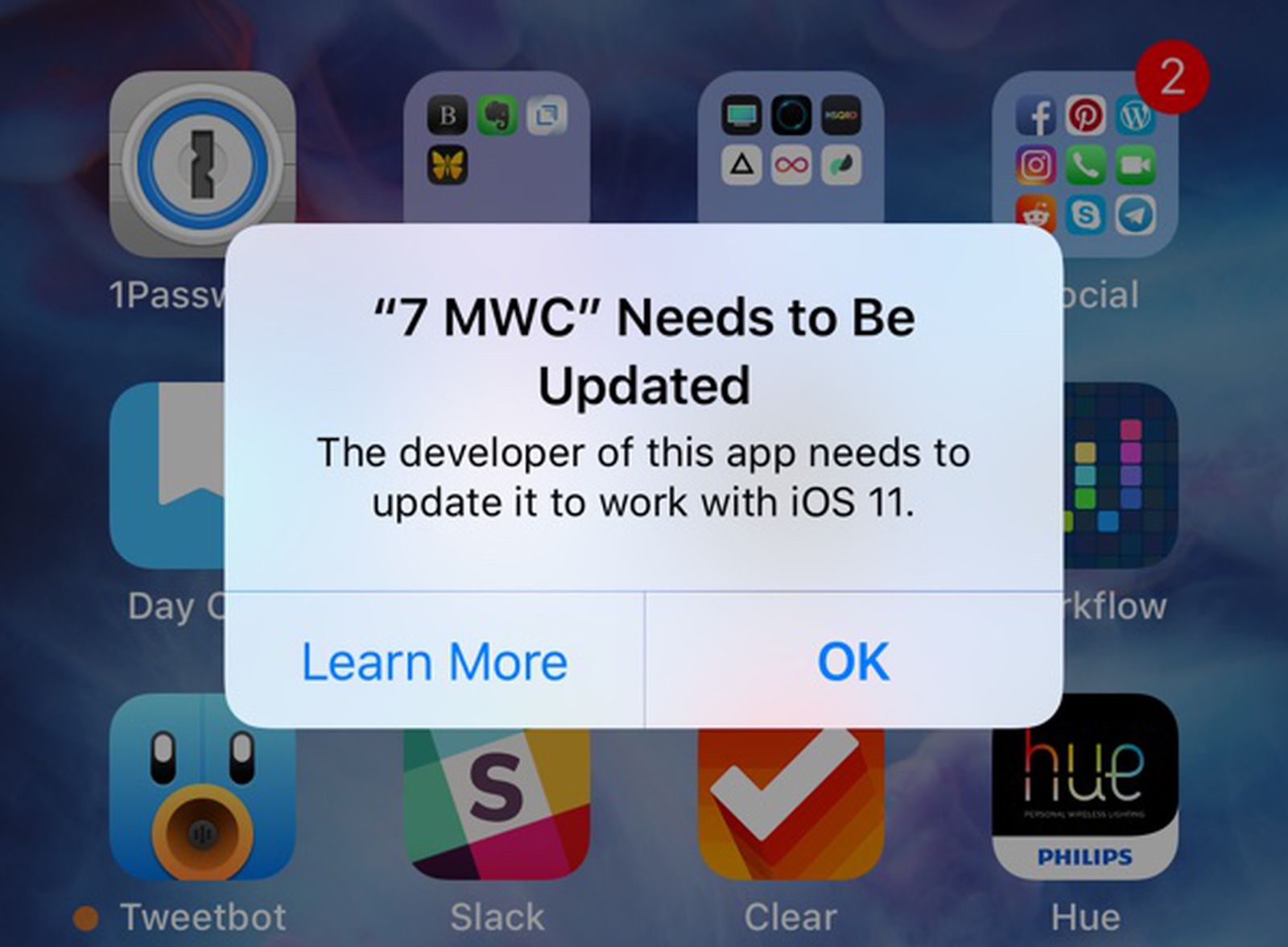

Ahead of WWDC kicking off tomorrow and the unveil of iOS 11, 32-bit applications have stopped appearing in App Store...

9to5mac.com

Ahead of the launch of iOS 11, there were several signs suggesting 32-bit apps would no longer be supported in the new operating system, which has been confirmed with the release of the first iOS 11 beta. When attempting to open a 32-bit app when running iOS 11, the app refuses to launch...

www.macrumors.com

There was no actively maintained 32-bit library target to get to on the ARM side, even with ARM code. So what would a 32-bit x86 emulator even naturally hook to? (A 32->64 thunk would technically be possible, but that misses the forest for the trees as to the huge mindset mismatch there.)

That Rosetta also got simpler was a happy side-effect of something Apple had already done.