So, after more testing, and after installing LR6 on the Mojave side as well (I noticed this was possible), here is my conclusion:

For photo editing, the iMac's 5K screen seems to pose a real challenge for local adjustments. Drawing masks (or painting the adjustments directly without displaying the masks) seems to require huge amounts of processing power, and at Retina resolution even the newest machines show a severe lag between moving the brush and seeing the effect.

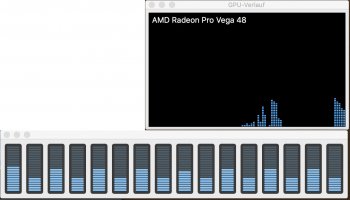

This might come as no surprise to someone coming from a fairly recent machine, but for me, upgrading from a 2011 MBP with a 1920x1200 screen, it is a bit disappointing: now I have this gorgeous machine with its oversized i9 processor and Vega 48 graphics, yet drawing masks is slower than on my 8-year-old Mac. I completely understand that the new iMac has to lug around roughly 6 times the pixels of my old one, but still, from a purely "comfort of work" standpoint this is a step back. (Especially since editing my 24MP photos at nearly 100% view looks just GORGEOUS. I mean, wow!)
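
To put the "6 times" figure into numbers, here is a quick sketch. It assumes the 27" iMac's native 5K panel is 5120x2880; the 1920x1200 figure for the old MBP is from the post. It simply multiplies out both resolutions and compares them.

```python
# Compare the pixel counts of the old and new screens.
# Assumption: the 27" iMac Retina 5K panel is 5120x2880 (native resolution).
OLD_MBP = (1920, 1200)   # 2011 MacBook Pro, as stated in the post
IMAC_5K = (5120, 2880)   # 27" iMac Retina 5K panel

def pixel_count(resolution: tuple[int, int]) -> int:
    """Total number of physical pixels for a (width, height) resolution."""
    width, height = resolution
    return width * height

old = pixel_count(OLD_MBP)
new = pixel_count(IMAC_5K)
ratio = new / old

print(f"old: {old:,} px, new: {new:,} px, ratio: {ratio:.1f}x")
```

This prints `old: 2,304,000 px, new: 14,745,600 px, ratio: 6.4x`, so "6 times" is, if anything, a slight understatement.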

There are workarounds which reduce the lag, e.g.:

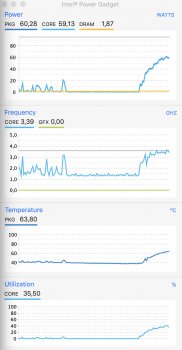

- open the app in "Low Resolution" mode (the "Open in Low Resolution" checkbox in the app's Get Info window)

- use a second monitor with a lower resolution for these tasks

- reduce the size of the app window on the screen

- use a different display scaling (towards "Larger Text") in the Displays pane of System Preferences
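
A rough way to see why these workarounds help: each one shrinks the number of pixels that have to be redrawn while brushing. The sketch below compares them against a full-screen window on the 5K panel. All resolutions are illustrative assumptions, not measurements: 1x rendering at a "looks like" 2560x1440 for Low Resolution mode, a hypothetical 1920x1200 second monitor, a window cut to half width and height, and a 2x backing store of 4096x2304 for the "looks like 2048x1152" (Larger Text) scaling. It also assumes the lag grows with pixel count, which the post only suggests.

```python
# Rough share of pixels to redraw under each workaround, relative to a
# full-screen window on the 5K panel. All resolutions are illustrative
# assumptions, not measured values.
FULL_5K = (5120, 2880)

scenarios = {
    "full screen, default scaling": (5120, 2880),
    "low res mode (1x rendering)": (2560, 1440),
    "second monitor at 1920x1200": (1920, 1200),
    "window at half width and height": (2560, 1440),
    "'larger text' scaling (looks like 2048x1152)": (4096, 2304),
}

def share_of_full(res: tuple[int, int], full: tuple[int, int] = FULL_5K) -> float:
    """Fraction of the full 5K pixel count that a given resolution covers."""
    return (res[0] * res[1]) / (full[0] * full[1])

for name, res in scenarios.items():
    print(f"{name:45s} {share_of_full(res):6.1%}")
```

On those assumptions, Low Resolution mode and the half-size window each cut the work to 25%, the 1920x1200 monitor to about 15.6%, and the Larger Text scaling to 64%.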

That said, I really don't regret going with the i9/Vega: at least my machine tackles the task as fast as is currently possible. On a side note: while drawing masks, neither the CPU nor the GPU runs at its limit, so maybe the lag could be reduced a bit by optimizing the code? Not that I'm an expert in these things, that's just my observation.

Just posting this so that people in the same situation I was in (moving from an older machine to the gorgeous 27" screen) know what to expect.
