
Will ARM Macs have ARM GPUs?

- Thread starter Haeven


We will probably see a mix of integrated and discrete. The lower end models will probably be integrated, while higher end ones with higher thermal envelopes will most likely have discrete as at least an option. Personally, I am hoping for a 13" MBP with a dGPU, as ARM should use less power, thus freeing up the thermal budget for a GPU. Hopefully there will also be the switchable graphics like we have now.

To me it wouldn't make sense to switch to Apple Silicon in order to get control over the hardware-software integration and diminish reliance on 3rd-party chip manufacturers, and then... continue to rely on 3rd-party chip manufacturers. They do GPUs really well in the A-series X chips, so, while nothing has been said officially, all signs point to them making their own, IMO.

Whether those Apple-made GPUs will be integrated or discrete, well, I have no idea. We'll see soon enough!


So far Apple only talked about using their own systems in the new Macs, and they have stressed over and over again that these will have Apple GPUs and unified memory architecture. Maybe next year or so it will change, but so far I have the feeling that they fully intend to go Apple GPU only. I have some speculation on how they could pull off delivering desktop-class performance using an “integrated” GPU.

It is incorrect that they have not said anything.

In the WWDC tech talk "Metal for Apple Silicon" they explicitly said that "Macs with Apple Silicon will come with Apple designed GPUs."

They also said that they will hit performance equivalent to dGPUs from AMD with power consumption less than an integrated Intel GPU. Though I'm skeptical on that.

Furthermore, the feature set of these GPUs will combine both MetalFamilyMac v2 and MetalFamilyApple v3. For code written against the Catalina SDK or earlier, some performance-limiting options will be enabled by default to force the GPU to behave more like an immediate-mode renderer, as AMD and Intel GPUs are, even though it is actually a tile-based deferred renderer. This reduces performance, but if code incorrectly assumes an immediate-mode renderer, it can otherwise cause graphics glitches.

Also, it's not called an ARM GPU. ARM is a CPU instruction set architecture. It has nothing to do with GPUs. The GPU will likely be on the ARM die, but it is not tied to ARM.
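A rough way to see why forcing immediate-mode-like behaviour costs performance: on a TBDR GPU each render pass works in on-chip tile memory, and system-memory traffic comes mostly from loading attachments in at the start of the pass and storing them out at the end. The sketch below is a simplified, hypothetical byte-count model. The `LoadAction`/`StoreAction` enums only mirror the names of Metal's `MTLLoadAction`/`MTLStoreAction`; this is not the Metal API, it does not reproduce whatever defaults Apple actually applies in compatibility mode, and it ignores compression, MSAA and partial tiles.

```swift
// Hypothetical model of tile-memory <-> system-memory traffic for one render pass.
// Enum names mirror Metal's MTLLoadAction / MTLStoreAction, but this is NOT Metal.
enum LoadAction { case load, clear, dontCare }
enum StoreAction { case store, dontCare }

struct Attachment {
    let bytesPerPixel: Int
    let loadAction: LoadAction
    let storeAction: StoreAction
}

/// Bytes read from and written to system memory for one pass over the whole target.
func attachmentTraffic(width: Int, height: Int, attachments: [Attachment]) -> (read: Int, written: Int) {
    var read = 0
    var written = 0
    for a in attachments {
        let size = width * height * a.bytesPerPixel
        // .load copies previous contents into tile memory; .clear and .dontCare read nothing.
        if a.loadAction == .load { read += size }
        // .store flushes tile memory back out; .dontCare discards the tile contents.
        if a.storeAction == .store { written += size }
    }
    return (read, written)
}

let w = 1920, h = 1080

// Conservative: every attachment is loaded and stored (RGBA8 colour + 32-bit depth).
let conservative = attachmentTraffic(width: w, height: h, attachments: [
    Attachment(bytesPerPixel: 4, loadAction: .load, storeAction: .store),
    Attachment(bytesPerPixel: 4, loadAction: .load, storeAction: .store),
])

// TBDR-friendly: colour cleared then stored, depth cleared and never written out.
let tiled = attachmentTraffic(width: w, height: h, attachments: [
    Attachment(bytesPerPixel: 4, loadAction: .clear, storeAction: .store),
    Attachment(bytesPerPixel: 4, loadAction: .clear, storeAction: .dontCare),
])

print("conservative:", conservative, "tiled:", tiled)
```

With a 1080p colour target and depth buffer, clearing instead of loading and discarding depth instead of storing it cuts per-pass traffic from four full-screen copies to one, which is the kind of saving that is lost when every attachment is conservatively loaded and stored.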


I’m not skeptical. The iPad Pro GPU is already comparable to a GTX 1050; give it a few extra cores and more thermal headroom, and you can easily get to the level of the Radeon Pro 5300M. That assumes it runs in TBDR mode, of course; otherwise RAM bandwidth will limit it too much. To get more performance, though, they need faster RAM. My guess is that they plan to use something like HBM2 as system RAM to solve that particular issue.

Comparable to a GTX 1050? I haven't worked with graphics on iOS, but that seems high. Based on what data? Is that sustained or burst? I know Macs will have more thermal headroom, but if that's sustained in the iPad's chassis, that's pretty wild.

At the aforementioned tech talk from WWDC, Apple did, however, show off their GPU running Dirt Rally at what looked like pretty good settings, at a very consistent and seemingly high frame rate, with the immediate-mode rendering toggles forced on since it was an unoptimised title. And just like when they showed off Tomb Raider, it was even running through x86 translation. So I am not at all doubting that they can deliver good performance. But matching AMD's dGPUs at less than iGPU power consumption just seems too good to be true. If the A12X/Z is as good as you say, though, it may not be too far off.

I doubt they'll use HBM2 for system memory, though. It's very pricey, and people wouldn't be satisfied with a maximum of 8GB of system memory.

I suspect a hybrid, a bit like Intel's Crystal Well chips, where you have regular DDR memory for system RAM but there's a level 4 cache on the package, perhaps with something like an interposer attaching HBM2 to the die as a level 4 cache for the GPU.

I should mention that at the Metal for Apple Silicon talk, their diagrams seemed to suggest that GPU memory, at least in some cases, is separate from main memory, as they talked a lot about when to flush to system memory and when to read from system memory versus just relying on data already cached in VRAM on the Apple Silicon GPU.


Based on 3DMark (and other) benchmarks from independent reviewers. I might be a bit over-enthusiastic, though.


My speculation is that it might make sense for the 16” system, which is already more expensive. The savings from simplifying the overall architecture, as well as not having to rely on IHVs, might make it cost-efficient, and it would sufficiently differentiate the 16” from the rest of the line. But that is my wishful thinking, of course.

For the 13”, I think LPDDR5 is a reasonable bet.
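The bandwidth worry behind the HBM2 vs LPDDR speculation is easy to put in numbers: theoretical peak bandwidth is the bus width in bytes times the transfer rate. The configurations below are illustrative assumptions, not rumoured Apple specs: a 128-bit LPDDR4X-4266 or LPDDR5-6400 interface of the kind common in thin laptops, versus a single 1024-bit HBM2 stack at 2 Gbps per pin. `MemoryConfig` and `peakBandwidthMBps` are made-up names for this sketch.

```swift
/// Theoretical peak bandwidth in MB/s: (bus width in bytes) x (million transfers per second).
/// Sustained bandwidth is always lower (refresh, bank conflicts, protocol overhead).
func peakBandwidthMBps(busWidthBits: Int, megaTransfersPerSecond: Int) -> Int {
    (busWidthBits / 8) * megaTransfersPerSecond
}

// Hypothetical, illustrative configurations (not announced Apple hardware).
struct MemoryConfig {
    let name: String
    let busWidthBits: Int
    let megaTransfersPerSecond: Int
}

let configs = [
    MemoryConfig(name: "LPDDR4X-4266, 128-bit", busWidthBits: 128, megaTransfersPerSecond: 4266),
    MemoryConfig(name: "LPDDR5-6400, 128-bit", busWidthBits: 128, megaTransfersPerSecond: 6400),
    MemoryConfig(name: "HBM2, one 1024-bit stack at 2 Gbps/pin", busWidthBits: 1024, megaTransfersPerSecond: 2000),
]

for c in configs {
    let mbps = peakBandwidthMBps(busWidthBits: c.busWidthBits,
                                 megaTransfersPerSecond: c.megaTransfersPerSecond)
    print("\(c.name): \(Double(mbps) / 1000) GB/s")
}
```

A single HBM2 stack gives several times the peak bandwidth of a 128-bit LPDDR bus, which is why it looks attractive for a GPU that cannot stay on its tiled fast path; the counter-arguments in the thread are capacity and cost.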

I know the A12X is supposed to have graphics performance similar to an Xbox One S (approx. 1.4 TFLOPS versus the GTX 1050's 1.8*), and the A12Z ups that another notch; from what I've seen, that's a perfectly plausible claim. Assuming double the TDP (~15W) and no need to thermally throttle (active cooling), I don't see why Apple couldn't get up to low-to-mid dedicated GPU performance from an even more advanced 5nm-based A14 chipset.


*Yes, I'm aware this is just one measure, of variable relevance depending on what you're measuring, but it's one easily available quantified marker of performance.

A bit of further info in this article:

AnandTech Forums: Technology, Hardware, Software, and Deals (www.anandtech.com)
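The TFLOPS figures in the post above come from a simple peak-throughput formula: shader ALUs × 2 FLOPs per fused multiply-add × clock. The sketch reproduces the GTX 1050 and Xbox One S numbers from their published shader counts and clocks (640 CUDA cores at a 1455 MHz boost clock; 768 shaders at 914 MHz). Apple does not publish an ALU count for the A12X/A12Z GPU, so no Apple figure is computed here, and, as the footnote says, peak FP32 throughput says nothing about texturing, geometry or sustained clocks. `peakFP32GFLOPS` is a made-up helper name.

```swift
/// Peak FP32 throughput in GFLOPS, assuming every shader ALU retires one
/// fused multiply-add (counted as 2 FLOPs) per clock cycle.
/// ALUs x 2 x MHz gives MFLOPS; dividing by 1000 gives GFLOPS.
func peakFP32GFLOPS(shaderALUs: Int, clockMHz: Int) -> Double {
    Double(shaderALUs * 2 * clockMHz) / 1000
}

let gtx1050 = peakFP32GFLOPS(shaderALUs: 640, clockMHz: 1455)   // boost clock
let xboxOneS = peakFP32GFLOPS(shaderALUs: 768, clockMHz: 914)

print("GTX 1050: \(gtx1050 / 1000) TFLOPS")
print("Xbox One S: \(xboxOneS / 1000) TFLOPS")
```

That works out to roughly 1.86 and 1.40 TFLOPS, matching the 1.8 vs 1.4 comparison.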


Right, the thing that would make me doubt that is that the 16" can currently go up to 64GB of system RAM, which I don't see being feasible with HBM. I think a hybrid solution is more likely, and as mentioned, Apple has already talked about separate system and graphics memory in the aforementioned talk from WWDC. I'll soon be watching the follow-up, "Optimising Metal for Apple Silicon". Might find more information there.

And at some point, yes, LPDDR5, but I think the first generation will be LPDDR4X or something.


Right, that's definitely burst for float32, though. Apple's A-series GPU is quite good at shader performance, but last I saw, not quite as good at texturing and geometry. Regardless, what they have is definitely impressive.

Woah. I appreciate the discussion guys.

Take a look at this rumor from a video:

If Apple's ARM iMac (Q4 2020 or later) ends up with just an Apple GPU, the Navi 22 GPU might be reserved for the Intel iMac. The video also estimates that Navi 2x will be announced or released in September or October 2020.

If the rumors are correct (the Intel iMac will launch in Q3 2020, Navi 2x is for the Intel iMac, and Navi 2x will be shown in September or October), then we might see the Intel iMac by September 2020. This is only a guess based on rumors, so I could be wrong.


It would be very odd if Apple spent the last several years polishing off external GPU support in MacOS, and developing the new Mac Pro’s MPX interface for PCI cards if they were going to be dropping support for discrete graphics cards.

I think there will be an Apple on-die GPU, but I can’t believe there won’t be an option for discrete GPU upgrades.


I could see all mobile products sticking with Apple GPUs with the option to add eGPUs through USB4. Desktop is where I see them including dGPUs from 3rd parties such as AMD.


Yeah, but I guess the only reason to keep using 3rd-party GPUs would be if Apple *couldn't* do it themselves. It wouldn't make sense, but then 3rd parties have also been in this GPU game longer than Apple, I think.

Usually Apple excels, I reckon, at performance and battery life, so there may be some potential issues if they still used a 3rd-party GPU. You know the saying: "You make all the components, you have a better life."


I wouldn't expect the 6000 series or anything that powerful. See the 16-inch MacBook Pro and the 5XXXM series update. That's Navi, and likely the extent Apple will care to update due to inventory control (they recycle the same graphics options across high-end laptops and desktops). iMacs use mobile chips. The iMac Pro might use this, but I think that line is a dead end now that they have the Mac Pro.

Yup, I see no reason why they couldn't do it. They leaned hard on the fact that they've been creating custom graphics modules for their X series of chips for 6 or 7 iterations now, and seemed very proud that the iPad's GPU is now 1000x more powerful than it was at launch.

It will be an Apple GPU, not an ARM GPU.

Hi all, I think I heard at the keynote that the GPUs in ARM Macs will be ARM too, but I wasn't fully paying attention at that time, so I could be very wrong. Do you think ARM Macs won't have AMD GPUs but will have custom, integrated and/or ARM GPUs?

There will only be integrated Apple GPUs.

Source

Sorry, but it’s going to take more than that to convince me, for the reasons I mentioned above (recent time spent on macOS support for external GPUs and recent time spent on the hardware side for the Mac Pro’s MPX interface).

That slide’s just meant to set the table for comparison’s sake. They’re not announcing product specs for any one computer, let alone the entire lineup, in such an obtuse manner.

"And to know if a GPU needs to be treated as integrated or discrete, use the isLowPower API. Note that for Apple GPUs isLowPower returns False, which means that you should treat these GPUs in a similar way as discrete GPUs. This is because the performance characteristics of Apple GPUs are in line with discrete ones, not the integrated ones. Despite the property name though, Apple GPUs are also way, way more power-efficient than both integrated and discrete GPUs."

Bring your Metal app to Apple silicon Macs - WWDC20 - Videos - Apple Developer (developer.apple.com)

Meet the Tile Based Deferred Rendering (TBDR) GPU architecture for Apple silicon Macs — the heart of your Metal app or game's graphics...
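In code, the guidance in that quote boils down to not equating "Apple GPU" with "integrated GPU" when picking or tuning for a device. The sketch below is a hypothetical classification policy: `GPUClass` and `classify(isLowPower:isRemovable:)` are made-up names. The calls in the macOS-only section (`MTLCopyAllDevices()`, `isLowPower`, `isRemovable`, `name`) are real `MTLDevice` APIs, but bucketing removable devices as "external" is an assumption about how an app might treat eGPUs.

```swift
#if os(macOS)
import Metal
#endif

/// Hypothetical buckets an app might use for per-GPU tuning.
enum GPUClass { case integrated, discrete, external }

/// Per the WWDC20 guidance: only isLowPower == true means "treat as integrated".
/// Apple GPUs report isLowPower == false, so they fall into .discrete here.
func classify(isLowPower: Bool, isRemovable: Bool) -> GPUClass {
    if isRemovable { return .external }   // assumption: removable GPU == eGPU
    return isLowPower ? .integrated : .discrete
}

#if os(macOS)
// Enumerate the GPUs Metal can see and print how the policy buckets each one.
for device in MTLCopyAllDevices() {
    print(device.name, classify(isLowPower: device.isLowPower, isRemovable: device.isRemovable))
}
#endif
```

On an Apple silicon Mac, per the talk, the built-in GPU reports `isLowPower == false` and so lands in the `.discrete` bucket.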

It sounds like Apple GPUs are just going to be part of the SoC with the CPU. Think iPad chips with more cores, clocked up with the greater thermal headroom, and now on 5nm die. They're going to scream. No eGPU or dGPU needed.

I’ll believe it when I see it when it comes to desktop applications, where thermal headroom is not a limiting factor.

I just don’t believe that Apple’s GPU in the next Mac mini is going to be particularly competitive with a Radeon RX 5700 XT (or whatever comes out this year) in an external GPU enclosure. And I think that is likely to hold true for the next iMac, and is a near certainty for the next Mac Pro.

Instead of quoting vague marketing images, we might as well just drop this whole conversation and wait and see instead.

It is on Apple to persuade its most demanding users about its platform future: what happens if they need more power than Apple thinks is enough? Desktops aren't like phones, most don't upgrade every year. In terms of business hardware depreciation, it's on average three years. Are these SoCs potentially swappable? What if memory needs to increase, is it slot-and-swap or is everyone stuck with some fixed default during purchase? What if that integrated GPU design is lackluster on a multi-thousand-dollar workstation, what can be done about that?


“Can’t help ya, there was a line on a slide once”

I also think that for the high-end MBP or iMac, Apple would use a dGPU combined with the iGPU on the CPU.

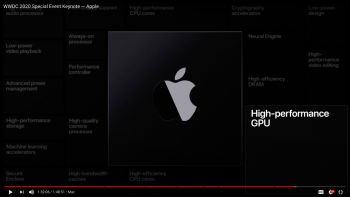

But this presentation clearly shows Apple will use their own GPU solution.


This is 100% confirmation for me that Apple will use their own silicon for the GPU.

Wow, Apple is really confident with their GPU performance.


I watched this session now, and what you are referring to is the tile memory on a TBDR architecture. This is not VRAM in the strict sense of the word (it's more like a cache). Also, all the discussion in the video (including the compatibility mode) is about developers writing buggy code; basically, Apple is choosing defaults that work around common API misuse. This misuse might not be apparent on an AMD GPU, but it will show up on an Apple GPU, since the latter gives you more fine-grained control over GPU memory.

But all in all, they have given no indication of dedicated VRAM. Only talked about features iPhone GPUs have had for years.
