At the time Apple ditched the floppy, that medium was already on the way out, as optical media and even external storage devices such as SyQuest and Iomega ZIP drives became more widely accepted, due in large part to their ability to hold significantly more data. USB largely eliminated the need to manually set up peripherals such as mice, printers, and external storage, and even Microsoft jumped on the "Universal Plug and Play" bandwagon once Apple went there with the iMac.

x86 is an entirely different matter, though. Given the sheer dominance of x86 in the electronics space, it would take a seismic shift from the PC side of the industry to change gears from x86-based hardware to ARM or RISC-V. Inertia is real, and it would be extremely difficult for the major players on the PC side, such as HP, Dell, and Lenovo, to change course. Microsoft itself would also have to make Windows on ARM (WoA) its priority instead of the x86 variants of Windows, and progress on that side of the market has been (arguably) glacially slow from Redmond.

Will x86 be dead in a decade?

- Thread starter Apple Fan 2008

If Microsoft and/or Qualcomm released an x86-to-ARM translation layer as good as Rosetta 2, things would change overnight, just as the Apple Silicon transition was seamless and did not break most legacy x86 apps (in fact, they ran faster).

The sticking point is that AS has some tiny hardware features that facilitate x86 translation that are not present in stock AArch64. If ARM were to standardize these or equivalent features in a Cortex-E architecture, making them readily available to all licensees, it would be much easier for people to move off of x86.

Qualcomm doesn't operate on the software side of things, so it would be up to Microsoft. The big difference there is that Apple actually deprecated 32-bit backwards compatibility prior to the Apple Silicon announcement, so the ARM transition was an overall smoother process than either 68k-PPC or PPC-Intel were. For Microsoft to even have a chance of approaching the stability of a Rosetta 2-type tool, they would need to fully deprecate 32-bit as well.

Apple also has the advantage of holding an architecture license for ARM, compared to the design licenses companies such as MediaTek and Qualcomm have. That is how Apple was able to incorporate elements of the x86 translation layer into their (custom) silicon itself instead of having it all run in software. Since Qualcomm licenses designs from ARM, they are limited in what they can do to build on those preexisting cores. Is it possible that ARM backports those elements into their core designs? Possibly, but not likely depending on whether Apple copyrighted those changes. What ARM has backported from Apple is not anything architectural, but additions to their existing ISA, on the microcode level.

https://jobs.apple.com/en-us/details/200475918/risc-v-high-performance-programmer 😉

I guess RISC-V will be next. Gotta catch them all!

You are mistaken. Qualcomm does in fact have an architecture license.

Considering the ARM architecture is only getting more popular, I was wondering about your guys' opinions on whether x86 will be dead in 10 years.

Ahhh, I thought the title was about Intel Macs being dead in 10 years.

I think legacy x86 will become a niche like mainframes within 1-2 decades.

As economies of scale worsen for AMD/Intel/Nvidia legacy desktop x86 and desktop dGPUs, prices will go up and refresh cycles will slow.

ARM laptops will provide better value because of the economies of scale of ~1.5 billion SoCs shipped annually worldwide.

For consumers who are largely browser-based, whether it is ARM or x86, Windows or macOS, will largely be irrelevant.

If Microsoft and/or Qualcomm released an x86-to-ARM translation layer as good as Rosetta 2, things would change overnight, just as the Apple Silicon transition was seamless and did not break most legacy x86 apps (in fact, they ran faster).

I don't think that's right. Part of the problem with Windowsland is that there are many players whose incentives and timelines are not aligned, so transitions are very gradual and messy.

Look at what happened with Windows 8 - Microsoft intended every Windows device to have a touch-screen and they could leverage their PC dominance and eat the iPad and other tablets. But Lenovo, Dell, HP, etc largely did not put touch screens on most of the Windows 8 machines they made. Didn't want to spend the extra money on something they didn't think customers wanted to pay for. Net result - the Windows touch platform flopped. And while Microsoft tried to backtrack with Windows 10, the Windows 8 debacle has had lasting impacts on the platform more broadly, all negative.

The way the PC industry innovates is that a supplier, e.g. Intel, comes up with something that is better at the current way of doing things and that has extra modes that are not yet used. Then, once those extra modes reach a certain installed base, the software starts taking advantage of them. Look at, say, 32-bit protected mode - most 386es and a good chunk of 486s went to the e-waste pile having never run a 32-bit protected mode operating system, but by 1995, there were enough 32-bit-capable machines out there for Windows 95 to be a smashing success. And same thing with x64 - the original x64-capable desktops running Windows XP or even Vista went to the e-waste pile having never run a 64-bit operating system, but by the late 2000s, there was enough of an installed base of x64 systems for driver makers, etc to start supporting it seriously.

Qualcomm could make the world's greatest ARM chips and Microsoft have great drivers for them tomorrow - if you are Lenovo or HP, why would you gamble on that, when any problems means YOU get to eat the returned hardware and no one is waltzing into Worst Buy demanding the new Qualcomm chip in their $700 laptop? It's safer to just keep buying the Intel or AMD chips.

The biggest reason for the conservatism in Windowsland is that Lenovo/Dell/HP/etc do not want to get hardware returned because some legacy port is missing, or because some new-fangled OS isn't compatible with somebody's older software/peripherals/etc. (Look, also, at one of the things that killed the Linux netbook in ~2006-8: apparently return rates were much higher on Linux netbooks than on XP netbooks.) The second reason is that Lenovo/Dell/HP/etc do not want to add anything to their lower-end systems (e.g. USB-C, dual-band wifi, etc) unless they are convinced they absolutely have to. If it costs $0.50 more per system, and they don't think the people waltzing into WB care about it enough to pay $50 more for a system that has it, Lenovo/Dell/HP don't throw it in. That is why $700 consumer Windows laptops are how they are.

In the mid '80s, Apple introduced the (premium) consumer-level GUI (which was not their own invention per se but a major refinement of the concept) – within a decade, it had become extremely difficult to buy a consumer-level computer that did not come with a GUI. In the mid/late '90s, Apple abandoned the floppy drive and various arcane ports in favor of USB – over the following decade, floppies became increasingly scarce and USB became the de facto standard. In the late aughts, they introduced a simple multi-touch-screen smartphone, and it became the fundamental design principle for nearly all smartphones (it may be possible to obtain a phone with one of those hinky keyboard things, but they are very uncommon).

A few years ago, after a decade-and-a-half flirtation with them, Apple abandoned the mess that is Intel/AMD's x86-64 architecture. Apple has had some product flops, though this move does not look like one of them. This may be the onset of another Apple-driven major trend that could see x86 marginalized – you can still get some of the things Apple has killed over the decades, but it tends to take extra effort.

Apple did not invent any of it (not the GUI, not USB, not multi-touch screens). Yes, they can often move faster because they own the hardware, the software, and the users. In the broader industry, the same tech gets introduced just as fast (often faster). It just does not get abandoned, or rolled out to all models, at once. For example, at the same time Apple introduced a capacitive (multi-touch) screen, LG did the same. Floppy drives are still being used, and why not: there is unique hardware that was designed around them, and nobody is going to replace it as long as it works. The way Apple behaves is somewhat suitable for individual users, much less so for businesses. That's one reason why Apple products are so poorly accepted by businesses.

And Apple tried to push USB-C and that... didn't really go that far without Windows-land, and everything else that uses USB-A, going along. There are still many, many devices (most?) that come with a USB-A cable/connector in the box...

But fundamentally you are exactly right - Apple likes to kill things, in part because many people see killing things as innovative even when it is... done a bit too early. 1998 was probably a little early to get rid of the floppy, especially in the absence of USB flash drives, USB 2.0, or easy access to high-speed Internet. By 2003-4ish Windowsland was starting to follow; I forget exactly when Dell made floppies a customizable option, but it was around then.

Windowsland doesn't like to kill things because Dell/HP/Lenovo/etc are terrified of returns and Microsoft is terrified of another Vista. They worry that if someone takes a new computer home and they can't plug in their ___________________, they'll just return the machine and they don't have the margins to handle those kinds of returns. Hence the long lifespan of, say, VGA and parallel ports... or even DVD drives, which despite their cost oddly enough stuck around in low-end laptops much longer than they did in high-end Windows laptops.

And businesses, of course, are of the same view. If you have 10 employees running software A, B, and C on operating system X and you hire an 11th employee, you want to be able to buy a computer running operating system X and compatible with software A, B, and C. Lenovo/HP/Dell/etc's business lines, along with their slow adoption of new versions of Windows (many business machines today still come with Windows 10 preloads; many business machines in 2016 came with Windows 7 preloads), basically guarantee that. Apple... basically guarantees the opposite.

Here's what ChatGPT said: View attachment 2207686

ChatGPT may be biased... it's probably written for it. ;-)

And now Intel's talking about "X86-S" with some of the legacy stuff removed (requires 64-bit OS, rings 1 and 2 removed, etc).

The tech that makes it relevant to millions of DOS & Windows programs... they'll willingly abandon them?

You are mistaken. Qualcomm does in fact have an architecture license.

Who knows what the status of that is, given their lawsuit with Arm and Arm’s rumored licensing change.

Qualcomm licenses the core designs from ARM for their SoCs in what's called a TLA. Apple merely licenses the ISA and builds their own SoCs utilizing said ISA. From an article on the ongoing Qualcomm-ARM lawsuit:

In an updated Qualcomm counterclaim made public Oct. 26, Qualcomm argues that Arm is no longer going to license its central processing unit (CPU) designs after 2024 to Qualcomm and other chip companies under technology license agreements (TLAs).

Here is something from Qualcomm itself outlining their use of existing ARM core designs:

According to Qualcomm, the reasoning came down to continuing support for legacy applications. The Cortex-A710 is the last of Arm’s cores to support 32-bit applications (AArch32) — all subsequent and future cores are 64-bit only (AArch64), at least in theory. The Snapdragon 8 Gen 2 also uses Arm’s refreshed Cortex-A510 little cores, which, along with a 5% reduction in power consumption, can be built with 32-bit support.

Since Apple does not license core designs from ARM but merely the ISA itself, whatever happens with the Qualcomm lawsuit won't have any significant impact on Apple's work with its own SoCs.

Qualcomm has both: an architecture license (an 'old' one, not through Nuvia) and a TLA. Up until the rapid switch to 64-bit, Qualcomm used to make their own cores. Qualcomm had a 'side' project in server CPUs that didn't work out, thought that 64-bit was mainly about large (> 4GB RAM) capacity that didn't matter in phones (whereas Apple looked at it as ISA garbage-collection time, a chance to toss stuff 'getting in the way'), and had takeover problems (Broadcom saber-rattling that they could take over and manage the money better).

Qualcomm continued to dabble at acquiring architecture licenses... they just weren't doing much with them (and could afford to do that).

The hocus pocus Qualcomm is throwing out there about Arm not doing them anymore in the distant future is mostly misdirection from what happened to cause the lawsuit. It is not particularly relevant to the suit at all.

" ... Intel® APX doubles the number of general-purpose registers (GPRs) from 16 to 32. This allows the compiler to keep more values in registers; as a result, APX-compiled code contains 10% fewer loads and more than 20% fewer stores than the same code compiled for an Intel® 64 baseline.2 Register accesses are not only faster, but they also consume significantly less dynamic power than complex load and store operations. ... "

www.intel.com

Introducing Intel® Advanced Performance Extensions (Intel® APX)

These extensions expand the x86 instruction set with access to registers and features that improve performance.

If Intel chucks some dubious baggage at the same time (e.g., booting some 1990s-vintage OS), there is little reason for x86 to 'die off' in a decade.

https://www.tomshardware.com/news/intels-new-avx10-brings-avx-512-capabilities-to-e-cores

If they don't overplay this as a wedge to try to claw share from AMD, this could all be helpful. (They should have been talking with AMD about this so that they don't 'fork' the x86 market too much. Too much battling inside the pie will likely shrink the pie.)

Sounds a lot like they are just trying to paste some of what makes ARM/POWER efficient while retaining their VLIS. I am skeptical of whether it will be a major gain.

Sounds a lot like they are just trying to paste some of what makes ARM/POWER efficient while retaining their VLIS.

Not really. More so, it is just exposing the underlying microarchitecture they (and AMD) already have at the low levels anyway. (Even a lowly low-end 'Atom' implementation has more than just 16 actual registers.) All they are doing is taking some of the fleader-farb of 1970-80's vintage jargon out of the conversation between the compilers and the real underlying microarchitecture. If the register pressure isn't all that high, compilers shouldn't be using most of the old funky modes anyway. One of the primary reasons compilers still try to engage them is that register allocation taps out and they have to start tap-dancing to the oldies.

5-10 year old binaries that haven't been recompiled will run just as slow after these changes as they do now.

I am skeptical of whether it will be a major gain.

It doesn't have to be a huge gain. They just have to make progress and get more folks to run more source code through newer compilers over time (as opposed to the oldest binaries possible and/or the oldest compiler settings possible).

VLIW went overboard in trying to push too much optimization into the static compilation stage. x86 is a bit too far in bed with "dynamic, in-context rewrites will save the day". Some stuff should be written differently in the first place, and you need both static and dynamic approaches. I've said before that x86 is more constipated than 'complex'.

P.S. It looks a lot like the issues that Jim Keller has been talking about. Since he spent time at both AMD (Zen) and Intel (some designs coming in a couple of years), it won't be surprising if the uplift comes when future designs focus on a narrower subset of the instruction set.

Every time Intel has tried to kill x86 in the past, it's failed. The most notable example was when they tried to make the move to 64-bit with Itanium and got absolutely killed by AMD64 (now called x86-64). In fact, Intel pays AMD royalties to this day because all of the 64-bit extensions are AMD's code instead of Intel's. I think at this stage, Intel is just going to keep grafting things onto x86 as long as possible, because any major shift in platform will kill what momentum they have.

Maybe, but don't underestimate people's optimism or stupidity.

Look at Microsoft and Windows 8. They tried to move Windows to the future with a modern touchy GUI, it completely failed, and, if anything, it set into motion (or at least accelerated) a whole set of trends which are likely to make Windows and its native APIs increasingly irrelevant in the future. Isn't Intel capable of making the same mistake, i.e. i) not understanding why people choose their product in the first place, and ii) assuming they can engage in Apple-style transitions where they take their userbase along from thing A to B and then pretend A never existed?

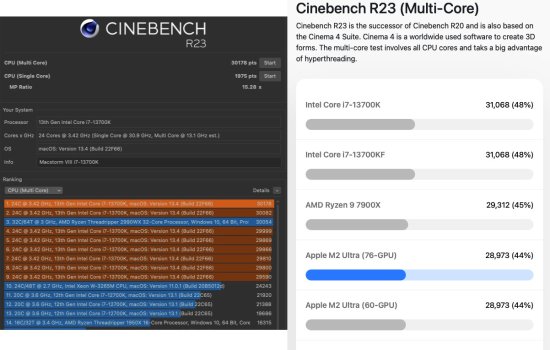

There is just one thing nobody here is asking themselves. If Apple's current best Ultra chip, with their vertical integration of both software and hardware, can barely beat Intel's high-end gaming laptop chips and can't touch Intel's high-end desktop gaming chips, what makes us think Qualcomm or anyone else can actually make it here? Whatever they come up with will be slower than Apple's take. Battery life? Both Intel and AMD have battery life covered as well.

Any Intel or AMD part aimed at gaming or content creation is going to have a significantly higher TDP than Apple's SoCs. For example, the i7-13620H has a minimum wattage of 35W, with the base being 45W and the max being 115W. Unless you have a massive laptop (17" or larger) with a battery taking up most of the interior, battery life won't be anywhere near what Apple Silicon gets. The Ryzen 7 7745HX has a base TDP of 55W, but is configurable between 45W and 75W. So while the AMD part does lead to better battery life than a comparable Intel CPU, it still lags behind Apple's solutions.

Intel is starting to run into the laws of physics with their processor designs. In fact, the main reason they adopted a big.LITTLE-style design mixing P and E cores was that simply throwing cores at the processors started to have diminishing returns in terms of power consumption and heat generation. The E core push seems to be a way for Intel to say they're adding even more cores while slowing down the rate at which wattage requirements increase. Likewise, Intel's reliance on older processes means they still have to push higher base wattages in order to make sure the entire CPU gets the power it needs (the aforementioned Intel part is on the "Intel 7" node, which is kinda equivalent to 7nm in some areas and 10nm in others, IIRC).
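For a rough sense of what the wattage figures quoted above mean for battery life, here is a minimal back-of-the-envelope sketch. It assumes runtime is simply battery capacity divided by average draw, and it treats TDP as if it were sustained power draw, which it is not in practice. The 99 Wh battery and the 10 W rest-of-system draw are made-up illustration values, not measurements, and `runtime_hours` is just a hypothetical helper name.

```python
# Back-of-the-envelope runtime estimate: hours = battery capacity (Wh) / average draw (W).
# CPU wattages are the TDP figures quoted in the post above; the battery size and
# the rest-of-system draw are ASSUMED values for illustration only.

def runtime_hours(battery_wh: float, cpu_watts: float,
                  rest_of_system_watts: float = 10.0) -> float:
    """Estimate runtime in hours under a constant load."""
    total_watts = cpu_watts + rest_of_system_watts
    if total_watts <= 0:
        raise ValueError("total power draw must be positive")
    return battery_wh / total_watts


BATTERY_WH = 99.0  # assumed battery capacity

QUOTED_CPU_WATTS = {
    "i7-13620H minimum": 35.0,
    "i7-13620H base": 45.0,
    "i7-13620H max": 115.0,
    "Ryzen 7 7745HX low config": 45.0,
    "Ryzen 7 7745HX base": 55.0,
    "Ryzen 7 7745HX high config": 75.0,
}

if __name__ == "__main__":
    for label, watts in QUOTED_CPU_WATTS.items():
        hours = runtime_hours(BATTERY_WH, watts)
        print(f"{label} ({watts:.0f} W): about {hours:.1f} h")
```

To compare against an Apple Silicon machine, plug in whatever sustained draw you actually measure on one; no Apple figure is hard-coded here because none is given in the thread.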

We can only HOPE ¯\_(ツ)_/¯

still loving mine. Forza Horizon 5 on my bootcamp'd iMac is a better racer than any of the iPhone games I play on my Apple Silicon macs
