**NVIDIA GeForce GTX880M Mac Edition ROM**

**NVIDIA GeForce GTX870M Mac Edition ROM**

**NVIDIA GeForce GTX860M Mac Edition ROM**

Genuine Native Boot Screen & Brightness Control


The following are the UGA-equipped ROMs I put together for:

NVIDIA GeForce GTX 880M

N15E-GX-A2, MXM-B (3.0)

8GB VRAM

NVIDIA GeForce GTX 870M

N15E-GT-A2, MXM-B (3.0)

3GB VRAM

NVIDIA GeForce GTX 860M

N15P-GX-A1, MXM-B (3.0)

2GB VRAM

The GTX880M is the first card with 8 GB of VRAM to run on our machines! I now have as much VRAM as system RAM. I'm happy to report that macOS sees all of the VRAM. The card boosts fully, even from base clock speeds. When I bought this card, the rivets were very long and held too much of the GPU away from the heatsink surface. Before, it thermal throttled because it sensed an overheating condition. When I removed the rivets and used screws to mount it instead, I got a much tighter seal, and the card boosted properly.

Tested on a 2011 iMac running High Sierra 10.13.6. Please feel free to test on other macOS versions; I will update this post with successes/failures as needed.

- These ROMs do not require a third-party bootloader such as OpenCore.

- They do require the base brightness-stepping modification to AppleIntelPanelA / ApplePanels / F10Ta007.

- Framebuffer depth issues remain for now; they can be fixed temporarily by putting the machine to sleep.

As before, these ROMs should restore:

⦁ Genuine native brightness control

⦁ A true grey early-boot screen (stage 1 and 2 progress bar)

⦁ Compatibility with the stock macOS bootloader

**Update 9/4/2020**

Please note that 870M_6GB_UGA.rom is experimental and is still in testing, for those who are interested and have the card.

"Insanely great!"

-Steve Jobs

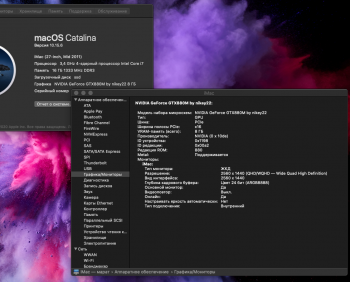

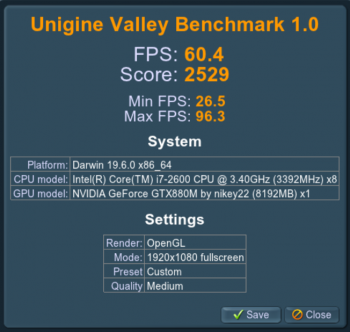


[/ QUOTE]

Thank you very much for your work!!! I wanted to share my impressions: on High Sierra the framebuffer problem was noticeable, while on Catalina there are fewer such problems and CS:GO runs on ultra; in Sierra there were problems and everything froze! I'm very much looking forward to a fix for the framebuffer problem, since it still exists; Windows 7 also had problems. I haven't installed Windows 10 yet, so perhaps everything works much better there :) I apologize for the translation :)