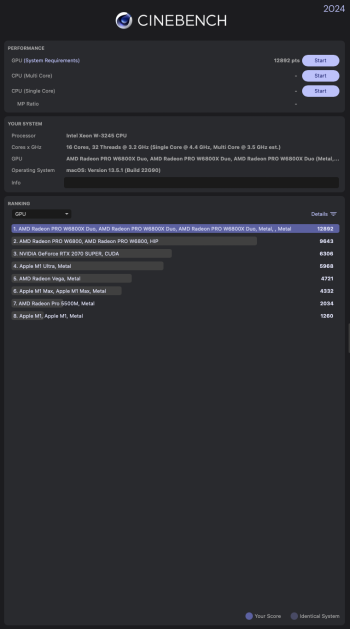

An M2 Ultra is a fine workstation, on par with a 7950 CPU ($600) and a 7900 XT GPU ($1,000), but at an insane price. If you do CPU rendering using Karma, V-Ray, etc., it performs just slightly worse than that consumer-level CPU. If you do GPU rendering, however, you are at best at the level of a 2080 Ti from 2018. In fact, even on a Mac, a single 6800 XT performs similarly to the Ultra. So the old 2019 Mac Pro with two to four of these cards obviously destroys the Ultras outright. And that, my friend, is the problem.

GPU rendering is just going to be slow until we get dedicated raytracing hardware. Having had a play with Octane and Redshift, I'd say it's usable, but not great. I haven't really found a GPU renderer that is compelling for FX kinds of things compared to Karma CPU (Karma XPU looks promising, but it looks like it's going to be Nvidia-only for the foreseeable future). My general feeling is that it's going to be a few generations before the GPU is particularly compelling for rendering (I reckon M3 will be a good start, but I still expect it to be a while).

M2-wise, in terms of sims and general performance, it's been interesting and a bit swings and roundabouts. I've been doing some Vellum fluid stuff lately, and it's using around 40GB of GPU memory; I'd have to buy a pretty expensive Nvidia card to get that much. Sure, I could do it on the CPU, but then it's very slow (15 minutes on the GPU equates to over an hour on the CPU). I'd expect something similar for minimal-solve Pyro simulations (although the GPU/CPU difference is much smaller there than for Vellum fluids/grains, which are admittedly a bit of an edge case).

I've been benchmarking a few Nvidia cards and found them pretty underwhelming. They weren't the latest gen, but I was expecting a lot more from them given the amount of hype. These were mainly OpenCL tests, so it could just be Nvidia's OpenCL driver being terrible, but it put me off splashing out on a 3090 or 4090. Obviously all these tests are Houdini-specific, so YMMV, but it did dampen my enthusiasm a fair bit.

Speaking of crusty hardware:

The only 26-core Xeons I am aware of are older Xeon Golds, which are server CPUs optimized for multi-socket configurations. They were very poor workstation CPUs even at the time of their release, and cheaper than actual workstation-optimized CPUs. So I wouldn't take this kind of comparison as a bragging point.

Fair point; didn't know that. I think we've still got a few of them at work, and they are not great (and that's being generous).

It was more of a tongue-in-cheek poke at the sentiment that's been floating around the forums lately that Apple Silicon (AS) is incapable of any 3D work (see pretty much any thread on the new Mac Pro). Which, TBH, I've been finding very silly.
