Ray tracing, from a consumer point of view, is not that interesting if you ask me. I would think most folks with AMD and Nvidia GPUs do not really use RT for gaming if they value high frame rates. And I don't think many people buy the highest-end GPUs just for gaming.

Apple is likely targeting RT at professionals rendering movies, especially large scenes with tons of render assets. Having hundreds of GB of memory available to the GPU is really good for that kind of application.

An M3 Pro/Max with HW RT (if it comes with the M3) will likely not perform exceptionally well in games, so RT will likely go unused there.

Ray tracing may be important for AR, to properly "ground" artificial objects in the real world. In earlier years Apple showed demos of how, if you don't include things like shadows, it's hard to see exactly where an artificial AR object is supposed to be in space; it may look like it is floating a foot above the ground.
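To make the shadow point concrete, here is a rough sketch of the kind of test a ray tracer runs to decide whether a spot on the floor is shadowed by a virtual object. It is only an illustration under simple assumptions (one point light, one sphere standing in for the AR object, a flat floor at y = 0); the function names are hypothetical and this is not how ARKit or Metal expose it.

```python
import math

def segment_hits_sphere(origin, direction, center, radius):
    """True if origin + t*direction hits the sphere for some t in (0, 1)."""
    # Vector from the sphere center to the ray origin.
    oc = [o - c for o, c in zip(origin, center)]
    # Coefficients of the quadratic a*t^2 + b*t + c = 0.
    a = sum(d * d for d in direction)
    b = 2.0 * sum(o * d for o, d in zip(oc, direction))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return False  # the line misses the sphere entirely
    root = math.sqrt(disc)
    t_near = (-b - root) / (2.0 * a)
    t_far = (-b + root) / (2.0 * a)
    # The hit interval must overlap the segment between point and light.
    return t_far > 1e-6 and t_near < 1.0

def in_shadow(ground_point, light_pos, center, radius):
    """Cast a shadow ray from the ground point toward the light."""
    # Unnormalized direction, so t = 1 is exactly at the light.
    direction = [l - g for l, g in zip(light_pos, ground_point)]
    return segment_hits_sphere(ground_point, direction, center, radius)

# Hypothetical scene: light overhead, virtual ball resting on the floor.
light = (0.0, 10.0, 0.0)
ball_center = (0.0, 0.5, 0.0)
ball_radius = 0.5

under_ball = in_shadow((0.2, 0.0, 0.0), light, ball_center, ball_radius)
far_away = in_shadow((2.0, 0.0, 0.0), light, ball_center, ball_radius)
```

Darkening the floor pixels where this test succeeds is what ties the object to the ground; move the ball up and the shadow stays on the floor while the ball visibly separates from it, which is the depth cue that is missing when shadows are left out.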

So the value provided by RT hardware would appear to be:

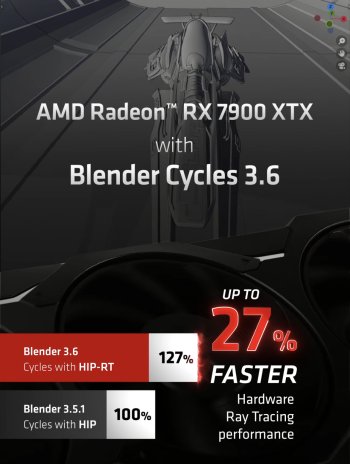

- (definite) making high-end Apple hardware at least somewhat competitive and relevant to people running a lot of Blender-type apps.

And presumably the cheaper it becomes (in performance terms), the more adding ray-tracing flair will move from being the specialty of a few professionals to something present in more mainstream apps like Illustrator and Photoshop, maybe even video editing. (In these uses it pairs well with AI hardware, which can extract a good-enough 3D model from an image or video, and then apply ray tracing to that.)

- (definite, but mostly for boasting purposes) making consumer games prettier

- (definite, and perhaps very important, who knows?) making AR feel more natural, less uncanny valley. This is mainly of value for Vision Pro, but hell, OF COURSE Apple are going to add hardware to the SoCs that benefits Vision Pro, and if every other product can also get some extra benefit, that's just a nice bonus.

(Which raises the issue of how much else on the A17 that Apple did not mention is mainly there for Vision Pro; and whether getting that right was, in fact, the single highest priority of the A17 team...)

- (very tentative...) the hardware required for ray tracing MAY possibly be useful for other GPU tasks (I've suggested this in the context of walking large pointer-based data structures) in a way that's of value to tasks apparently totally unrelated to RT. This may be present on day one; it may be a goal Apple is aware of, but was unable to fit into this year's design; or it may be a crazy idea that will never make sense!