Is the dGPU dead with Apple Silicon, meaning eGPUs are dead too?

- Thread starter toke lahti

iPad Pro supports 5K external monitors.

Only mirroring, or a real dual-screen desktop?

I guess Apple can leave the iMac Pro and Mac Pro on Intel for many years to come.

Even though they say otherwise now, maybe they will ditch Thunderbolt too; USB4 can handle most of what's needed.

They currently only mirror, but I'm pretty sure it's a software thing; the hardware is certainly powerful enough to do it.

Apple aren't leaving the iMac Pro and Mac Pro as Intel machines past 2022. They announced a two-year transition period, and they are on the countdown right now.

iPad Pro supports 5K external monitors.

Plural? Not concurrently. It's far, far closer to a 5K display adjusting to the iPad Pro, not the other way around.

"... You can use the LG UltraFine 5K Display at a resolution of 3840 x 2160 at 60Hz on these devices:

...

iPad Pro ... "

Use the LG UltraFine 5K Display with your Mac or iPad - Apple Support

Learn about the LG UltraFine 5K Display and how to use it with your Mac or iPad.

A 5K display is 5120 x 2880. From the iPad Pro's perspective, it is more in the zone of "lights up some pixels" (the screen isn't completely dark, so it's "supported") than "drives a 5K monitor". The Mini 2014 supports an even lower resolution.
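To put numbers on that, here's a quick sketch comparing the 3840 x 2160 mode from Apple's support page with the panel's native 5120 x 2880, using only the resolutions quoted above (scaling and refresh rate are ignored).

```python
# Compare the LG UltraFine 5K's native pixel count with the
# 3840 x 2160 mode Apple's support page lists for the iPad Pro.

def pixels(width: int, height: int) -> int:
    """Total number of pixels in a width x height mode."""
    return width * height

native_5k = pixels(5120, 2880)  # native panel resolution
ipad_mode = pixels(3840, 2160)  # mode listed for the iPad Pro

ratio = ipad_mode / native_5k
print(f"5K native: {native_5k:,} pixels")
print(f"iPad mode: {ipad_mode:,} pixels")
print(f"iPad mode covers {ratio:.2%} of the native pixel count")
```

In other words, the iPad Pro is feeding a bit over half the pixels the panel actually has.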

Apple had something driving a single 6K display during the WWDC demos. That isn't a huge hurdle if you don't also have to drive an embedded screen (the 2016 MacBook Pros are on the 5K supported list, as is the 2018 MacBook Air).
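A rough back-of-the-envelope sketch of why a single 5K or 6K stream is a real hurdle for the display hardware. Assumptions (not from the thread): uncompressed video, blanking intervals ignored, 24 bits per pixel for 5K, 30 bits per pixel (10-bit colour) for a 6016 x 3384 "6K" panel such as the Pro Display XDR, and 17.28 Gbit/s as the usable payload of one four-lane DisplayPort 1.2 (HBR2) link.

```python
# Back-of-the-envelope video bandwidth, ignoring blanking and compression.
# One DP 1.2 HBR2 link: 4 lanes x 5.4 Gbit/s, minus 8b/10b coding overhead.
DP12_HBR2_PAYLOAD_GBPS = 4 * 5.4 * 8 / 10

def raw_gbps(width: int, height: int, hz: int, bits_per_pixel: int) -> float:
    """Uncompressed active-pixel bandwidth in Gbit/s."""
    return width * height * hz * bits_per_pixel / 1e9

modes = {
    "5K @ 60 Hz, 8-bit": raw_gbps(5120, 2880, 60, 24),
    "6K @ 60 Hz, 10-bit": raw_gbps(6016, 3384, 60, 30),  # assumed 6K panel
}

for name, gbps in modes.items():
    links = gbps / DP12_HBR2_PAYLOAD_GBPS
    print(f"{name}: {gbps:.1f} Gbit/s (~{links:.2f}x one DP 1.2 HBR2 link)")
```

The takeaway is only that a native 5K/6K stream exceeds what one older DisplayPort link carries, so the display engine and I/O have to be built for it; it isn't just a matter of GPU compute.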

Apple hasn't demonstrated "leading edge" support for external monitors here. That's why the position that Apple is "done" with every third-party dGPU from now on is highly dubious.

They currently only mirror, but I'm pretty sure it's a software thing; the hardware is certainly powerful enough to do it.

The display subsystem is a fixed-function unit. Just pointing at the GPU's computational cores isn't going to drive more displays at all. More capable display units soak up substantial space on a die, so there's a pretty good chance that hardware isn't there, in order to keep the die smaller.

Apple aren't leaving the iMac Pro and Mac Pro as Intel machines past 2022. They announced a two-year transition period, and they are on the countdown right now.

Even with a two-year window, getting from where Apple is now to where the Radeon Pro Vega II is would be a humongous stretch, let alone to where AMD and Nvidia are going to be in 2022 with that class's successors. There is substantial software and hardware they are short on. The two-year gap is more likely to give them a chance to catch up on the software that will probably be required than on the hardware. Much better hardware is going to be out there (just not with an Apple logo on it).

I agree; I don't think there's any way the Mac Pro doesn't have discrete graphics from Nvidia or AMD. As far as I'm concerned, Apple only needs to catch Intel.

As mentioned in a certain video, which brings a tincture of gaming to AS Macs: no support for dGPUs == no more eGPUs?

Really?

How sure can we be of this?

"Hardly no iOS users are using eGPU with their devices."

I think the big mystery here is how Apple will (or won't) handle expandable graphics on the Mac Pro. It's quite possible that whatever they do there can be ported into a Thunderbolt 3/4/USB4 enclosure. For all we know, eGPUs for Intel Macs might still end up being compatible with Apple Silicon Macs (albeit with the latter utilizing them entirely differently). I'm not sure how likely it is, but as others have said, Apple put a lot of work into eGPU support, and having that effectively dead-ended a year and a half after unveiling it seems odd (especially since Apple would've been planning this Apple Silicon transition while releasing Intel Mac eGPU support).

I would like to point out that "ARM Macs not having dedicated GPUs" is still just a rumor, and is based on shaky logic.

Let's apply some critical thinking here:

1. Integrated GPUs exist for current Apple processors because there's no reason to fit a dedicated GPU on an iPhone or iPad. For small devices such as them it makes sense to have the whole system on a single chip.

2. Making a HEDT-class processor with a HEDT-class GPU on one chip would be prohibitively expensive and lower the performance ceiling of both the CPU and GPU. Not really an option with the "Pro"-level machines. And it would be much easier, technically and financially, to have separate dedicated dies for an Apple CPU and GPU.

3. Dedicated GPUs are used in higher-end "Pro" Apple products such as the 27-inch iMac, the iMac Pro, the 16-inch MacBook Pro, and the Mac Pro. Unless Apple's willing to compromise the performance of all these products, a dedicated GPU is necessary.

No, Apple pretty much outright said that all SoCs will have an integrated GPU. While that doesn't necessarily preclude a dGPU on the higher end, the way they said it heavily implies that we're likely not getting (at least traditional) dGPUs. We might see Afterburner-esque cards that add specialty co-processors to help the SoC's integrated GPU with graphical tasks it needs augmentation for (like ray tracing or real-time render assistance, just to throw out off-the-top-of-my-head examples). But traditional dGPUs are not coming along for the ride here. Luckily, it seems like they really won't need to, so long as developers actually optimize their apps for Metal.

I like Max Tech, but I think he’s wrong.

Killing off dGPUs isn’t going to happen anytime soon, no matter how efficient an integrated Apple GPU is. It will never have the thermal design power or die space to compete with separate GPUs, or offer a wider range of features such as larger memory capacities with HBM.

But performance will be increased a lot for those that currently rely on intel integrated GPU.

I’d guess we will see dGPUs offered in the lineups we have now. I.e. MBP 16 will have a separate GPU option.

As to whether there will be a separate Apple GPU, I’m less sure, but I would think it unlikely. It’s a lot of silicon fabbing to dedicate to something that’s only used in a minority of Macs. Without the shared CPU/GPU memory advantage, I’m not sure they’d be much better than AMD's offerings.
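To put a rough number on the shared-memory point: with a discrete GPU, data the CPU prepares typically has to cross the PCIe bus before the GPU can use it, a step a unified-memory design can skip. This sketch assumes a PCIe 3.0 x16 link at its theoretical peak (16 lanes at 8 GT/s with 128b/130b encoding) and an arbitrary 1 GB buffer; real transfers are slower and often overlap other work, so treat it as a best case, not a benchmark.

```python
# Theoretical peak of a PCIe 3.0 x16 link: 16 lanes x 8 GT/s, 128b/130b encoding.
LANES = 16
GT_PER_S = 8e9
ENCODING = 128 / 130
PCIE3_X16_GB_S = LANES * GT_PER_S * ENCODING / 8 / 1e9  # bits/s -> bytes/s -> GB/s

def copy_time_ms(size_gb: float, bandwidth_gb_s: float = PCIE3_X16_GB_S) -> float:
    """Best-case time to move size_gb over the link, in milliseconds."""
    return size_gb / bandwidth_gb_s * 1000

print(f"PCIe 3.0 x16 peak: {PCIE3_X16_GB_S:.2f} GB/s")
print(f"Copying a 1 GB buffer: at least {copy_time_ms(1.0):.1f} ms")
# With unified memory, CPU and GPU share one physical pool, so this copy can be avoided.
```

For comparison, a 60 fps frame budget is about 16.7 ms, so bulk copies like this have to be scheduled carefully on a dGPU system.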

Comparing the AMD dGPU in the Intel 16" MacBook Pro and the iGPU in the Apple Silicon SoC that's likely to appear in the Apple Silicon 16" MacBook Pro replacement is an Apples to Oranges comparison. Those GPUs do not process or render graphics in the same way at all, and there are enough WWDC 2020 videos/articles detailing that if you don't believe me. These are much more efficient iGPUs than Intel's ever were, and they're going to be able to do the kind of work you'd see on an AMD dGPU-based Mac. For those not optimizing for these new GPUs, yes, it will appear as though AMD's offerings are better; the trick is to optimize for Metal. But if developers do that (and the important ones will), it'll still be an upgrade as far as the end result goes.
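For readers wondering what "not the same way at all" means: Apple's GPUs are tile-based deferred renderers (TBDR), while AMD's desktop GPUs are conventionally described as immediate-mode renderers. A core step on a tile-based GPU is binning: before shading, each triangle is assigned to the small screen tiles it touches, so each tile can later be rendered out of fast on-chip memory. The toy sketch below shows only that binning step; the 32-pixel tile size and the bounding-box test are simplifying assumptions, not a description of Apple's actual hardware.

```python
# Toy tile binning: assign each triangle to every screen tile its
# axis-aligned bounding box overlaps (a conservative approximation).
from collections import defaultdict

TILE = 32  # assumed tile size in pixels (illustrative only)

Point = tuple[float, float]
Triangle = tuple[Point, Point, Point]

def bin_triangles(tris: list[Triangle], width: int, height: int) -> dict[tuple[int, int], list[int]]:
    """Map (tile_x, tile_y) -> indices of triangles that may cover that tile."""
    tiles_x = (width + TILE - 1) // TILE
    tiles_y = (height + TILE - 1) // TILE
    bins: dict[tuple[int, int], list[int]] = defaultdict(list)
    for i, tri in enumerate(tris):
        xs = [p[0] for p in tri]
        ys = [p[1] for p in tri]
        # Convert the bounding box to tile coordinates, clamped to the screen.
        x0 = max(0, int(min(xs)) // TILE)
        x1 = min(tiles_x - 1, int(max(xs)) // TILE)
        y0 = max(0, int(min(ys)) // TILE)
        y1 = min(tiles_y - 1, int(max(ys)) // TILE)
        for ty in range(y0, y1 + 1):
            for tx in range(x0, x1 + 1):
                bins[(tx, ty)].append(i)
    return dict(bins)

if __name__ == "__main__":
    tris = [((0, 0), (10, 0), (0, 10)), ((40, 40), (70, 40), (40, 70))]
    print(bin_triangles(tris, 64, 64))
```

Once every triangle is binned, the GPU can shade one tile at a time entirely on-chip, which is a big part of why Metal code tuned for TBDR can behave so differently from code written for an immediate-mode dGPU.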

Don’t they already make their own GPUs? All the MPX module GPUs for the Mac Pro seem to be bespoke.

Apple made the cards; AMD still made the GPUs. Apple did modify the reference designs heavily; but it's still an AMD GPU under the hood.

I posted my thoughts about this a few weeks back. Just going to copy it here:

I felt reasonably certain that the recency of Apple’s initial rollout of eGPU support was an indication that it would not be phased out just two years later, and became even more confident with Apple’s reiterated support for Thunderbolt v. USB4 earlier this week.

There have also been a number of recent articles about Apple’s ongoing development of VR/AR headsets. https://www.theverge.com/2020/6/19/...-external-hub-jony-ive-bloomberg-go-read-this

Maybe there will be an additional widget to provide the necessary GPU horsepower, maybe not, but Apple obviously sees VR/AR as a big item on the horizon of computing.

Fair to say that not very many people are using VR headsets today, and most of those who are, are doing it for gaming. How much Mac-native VR is going on? Mac VR gaming? Not a lot...pretty niche.

Now go look at the Apple eGPU support page: https://support.apple.com/en-us/HT208544

First sentence of the article mentions VR. Interesting for such a niche use case to feature so prominently. And what are all the wonderful things an eGPU is good for with an Apple computer?

(1) making applications run faster. A very mainstream reason to use an eGPU.

(2) Adding additional monitors and displays. Another super common reason to use an eGPU.

(3) Use a virtual reality headset.

...

I think the eGPU page is a dead giveaway that Apple has something planned for VR, and an acknowledgement that they need a way to provide the necessary graphical capability to MacBook and iMac purchasers that don’t have that internal GPU capability.

Thank you for coming to my TED talk.

I agree that Apple wouldn't have put the work into eGPU support (concurrently with planning the Apple Silicon Mac transition) only for it to be applicable ONLY to Intel Macs. However, I think there's a ton about this that simply isn't clear yet (and won't be clear until Apple releases their first Apple Silicon Macs and the slew of documentation to follow them).

In a pro workstation, one big CPU/GPU chip is bad all around.

First, people who really need a lot of CPU power will be stuck paying for GPU power to drive four screens at 8K.

Or maybe people who really need to drive a lot of screens or need a lot of GPU power will not get it due to heat/chip/RAM limits, maybe being forced to use USB-based video docks.
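For a sense of scale, here's a rough sketch of the raw display load in that example, four 8K (7680 x 4320) screens. The 60 Hz refresh rate and 4 bytes per pixel are assumptions, not something from the post, and this only counts scan-out, not rendering.

```python
# Raw scan-out load for four 8K displays (assumed 60 Hz, 8-bit RGBA).
WIDTH, HEIGHT = 7680, 4320   # 8K UHD
DISPLAYS = 4
HZ = 60                      # assumed refresh rate
BYTES_PER_PIXEL = 4          # assumed 8-bit RGBA framebuffer

pixels_per_display = WIDTH * HEIGHT
framebuffer_mb = DISPLAYS * pixels_per_display * BYTES_PER_PIXEL / 1e6
pixels_per_second = DISPLAYS * pixels_per_display * HZ

print(f"One frame across all displays: {framebuffer_mb:.1f} MB")
print(f"Pixels scanned out per second: {pixels_per_second / 1e9:.2f} billion")
```

That memory traffic and display-engine hardware has to exist whether or not the buyer wanted it, which is the cost-sharing concern raised above.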

I'm not sure what data you have to make such a claim. Plus, it's likely that they will expand the Afterburner family to include cards that handle all sorts of extended/expanded video processing and post-processing effects, in the same way that the T2 has taken much of that load off Apple's GPUs. Hell, on the new 27" iMac, Apple uses a variant of Intel processors that don't come with Intel IGPs at all (because everything the Intel IGP actually did stellar work on, separate from the AMD GPU, is performed even better by the T2). It's very possible that we'll see additional graphics-assistant co-processor offerings from Apple to offset whatever they don't put into a workstation chip. That being said, there's a ton that we don't know about whatever iPad-Pro-topping power they'll put into this new family of SoCs designed specifically for the Mac. Apple wouldn't be doing this transition if it could only result in better lower-end Macs (Mac mini, MacBook Air, 13" MacBook Pro, 21.5" iMac).

I think this is right, and that folks are vastly overestimating how easy it will be for Apple to reach the performance found in the top available GPUs in the iMac, MacBook Pro and Mac Pro. Do we really think Apple is going to develop an in-house Radeon VII equivalent to sell 10,000 units per year in a future Mac Pro refresh?

It also aligns with Apple’s recent support for eGPUs, which was only rolled out 2 years ago. Apple are going to sell the super skinny computers they’ve always wanted, and the eGPU will be just one more classic Apple dongle-based solution.

We’ll find out in short order, I suppose.

Again, I agree with sentiments suggesting that Apple didn't roll out eGPU support only to limit it to three years' worth of systems before it and a year and a half's worth of systems that followed between said rollout and the final round of Intel based Macs (especially on the low-end). That said, I think you're underestimating what they'll be able to do with their GPUs and software optimized for their frameworks.

Apple has been the one writing drivers for AMD chipsets on the Mac for many years now, and goes as far as writing the firmware as well.

That is the reason why Apple doesn’t use Nvidia parts today. Nvidia wouldn’t allow Apple to go as deep into these things.

Again, do you have sources for this? Would love to see them.

Hardware -> Apple can win here;

Marketing Budget -> Apple can win here;

Games (Developers) -> Get the first two right and this one should take care of itself.

That argument is flawed, and evidence of that is present in the Apple TV's lack of gaming titles (considering it is certainly equipped with console-level graphics), as well as the Mac's current struggles to get ports of popular PC/Xbox/PS4 titles. Hell, Catalina's Culling(tm) resulted in Mac gaming being effectively cut in half going into this transition, so if it wasn't already unappealing for developers to release AAA titles for the Mac, it's about to get worse with the move away from the x86 architecture and the kinds of GPUs Intel Macs have had (despite the design of Apple Silicon GPUs being way more efficient). Game devs just aren't embracing the Mac or the Apple TV.

Well,

- the 2006-07 mini had an iGPU,
- the 2009 mini had a 9400M,
- the 2010 mini had a 320M,
- the 2011 mini had an optional 6630M.

The 9400M and the 320M were both iGPUs that also doubled as the logic board's chipset. They shared VRAM with the system just like the Intel iGPUs did. The only difference is that, relative to Intel iGPUs and the lower-end dGPUs present in the 15" and 17" MacBook Pros of the time, they didn't suck as much.

As for the 6630M, yes, it technically was a dGPU. It was an incredibly weak one that was subject to frequent failure (much like most of the ATI Radeon HD 5xxx and AMD Radeon HD 6xxx/M series in Macs of that era).

Again, do you have sources for this? Would love to see them.

I love your persistence, but my source is an Nvidia employee who is a great friend. I’ve been fortunate enough to have friends all over the industry.

I prefer to keep them, so I can’t give much more than the fact that it’s Nvidia that shut the door on Apple.

AMD themselves also confirmed to me back in 2018 they are in no way involved with the macOS drivers. Apple are doing all the work. Same applies to Intel drivers.

I still don't expect to see AMD GPU support on Apple Silicon Macs. Developer Beta 5 again has no ARM version of the AMD drivers. I guess Apple will offer their own dedicated graphics cards in the future once the first ARM based Mac Pro is released.

Yeah, they'll go their own way in terms of GPUs. No surprise, with the number of GPU dudes they are hiring.

That argument is flawed, and evidence of that is present in the Apple TV's lack of gaming titles (considering it is certainly equipped with console-level graphics), as well as the Mac's current struggles to get ports of popular PC/Xbox/PS4 titles.

You're assuming the only part of console hardware that matters is the GPU. AppleTV doesn't have the controller it needs yet. It also doesn't have the marketing. Apple doesn't push it as a TV box all that hard (plus the whole naming clash with the TV streaming service is an issue here), let alone as a games console.

There's a chicken/egg thing going on here. You can't push it as a console without some great titles, and Angry Birds isn't going to cut it. Nor is some pay-to-win Command & Conquer clone.

Apple really ought to commission/subsidise some titles/ports if they want this to work. Microsoft dropped a pile of cash on Halo to kickstart Xbox. I'm sure there were a few other titles they pushed too. They went out and rounded up games devs; Apple is leaving out treats and hoping they wander in of their own accord.

If they don't want to drop billions buying AAA titles, then a sound strategy might be to resurrect some older popular franchises. I'm sure there are a few cult classics that wouldn't cost all that much but were always good enough to have been more popular with a bigger marketing budget.

One nice little move might be to integrate a fleet of games with an Apple Music feed. Grand Theft Auto playing Apple Radio or your favourite Apple Music playlist in game? A new version of Homeworld with a soundtrack by Two Steps from Hell?

How about an all new Call of Duty map where all the billboards are for Apple services and the computers in the background are all iMacs? Better yet a future-based version where soldiers have an advanced military version of Siri. Apple needs to get their 'different thinkers' on this.

You're assuming the only part of console hardware that matters is the GPU. AppleTV doesn't have the controller it needs yet. It also doesn't have the marketing. Apple doesn't push it as a TV box all that hard (plus the whole naming clash with the TV streaming service is an issue here), let alone as a games console.

Apple has three different controllers available on their website right now for the AppleTV: a wireless Xbox controller, a wireless PlayStation DualShock 4 controller, and a wireless SteelSeries Nimbus+ controller...

And I really don't see any "naming clash", the AppleTV has been the AppleTV since it was introduced, AppleTV+ is just an optional service addition to the AppleTV...

Apple really ought to commission/subsidise some titles/ports if they want this to work. Microsoft dropped a pile of cash on Halo to kickstart Xbox. I'm sure there were a few other titles they pushed too. They went out and rounded up games devs; Apple is leaving out treats and hoping they wander in of their own accord.

Man, I hope they realise this quickly. It's sad to see every non-iPhone App Store basically be a disappointment because they generally refuse to make the accommodations necessary to make them more worth it for developers (not even taking into account their cut, which is whole other conversation). And the tvOS App Store is the best example of this.

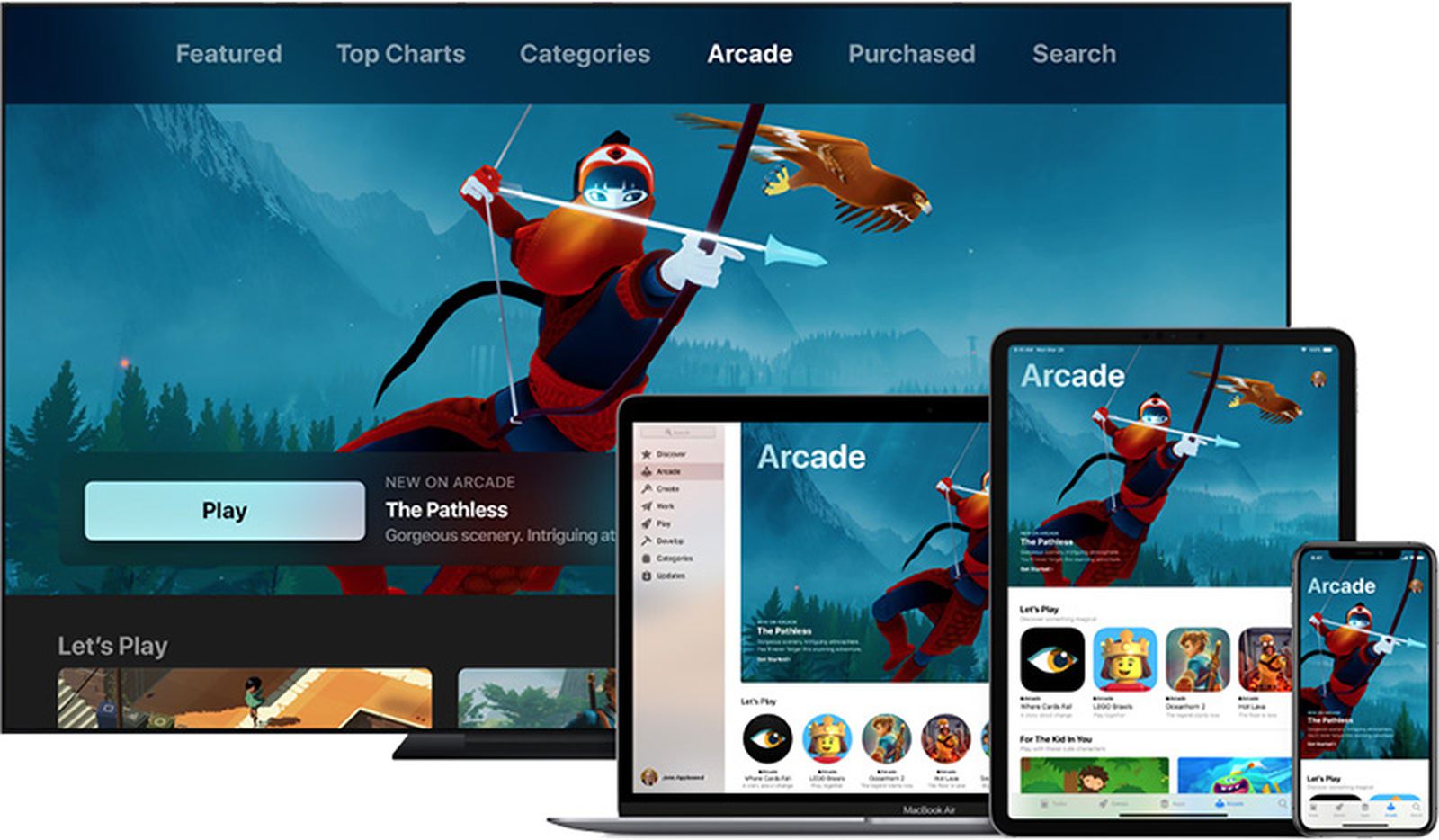

Edit: it does seem like they kind of realise this with Arcade, but with that recent report about them shifting focus to more 'engaging' games I'm less hopeful.

Apple has three different controllers available on their website right now for the AppleTV: a wireless Xbox controller, a wireless PlayStation DualShock 4 controller, and a wireless SteelSeries Nimbus+ controller...

But how many people do you imagine know that you can even play "proper" games on AppleTV? Let alone that there are controllers available? Most people don't even know AppleTV exists because Apple doesn't advertise it.

It seems like making the best gaming controller ever is something Apple could probably have a good stab at. Maybe they could make it compatible with Xbox and PS but add extra functionality for Apple device gaming.

And I really don't see any "naming clash", the AppleTV has been the AppleTV since it was introduced, AppleTV+ is just an optional service addition to the AppleTV...

You say "Xbox" or "PlayStation" to any fool in the street and chances are they know what you mean, and at least roughly what it is and does. Say "AppleTV" and is the same true? Will any one of them mention gaming? Or even that it's a box you plug into the TV?

Strangely, I expect that if you lined it up next to an Xbox and a PS, people would never believe it could come close to their performance (even the older models) due to its size.

Apple really ought to commission/subsidise some titles/ports if they want this to work. Microsoft dropped a pile of cash on Halo to kickstart Xbox. I'm sure there were a few other titles they pushed too. They went out and rounded up games devs; Apple is leaving out treats and hoping they wander in of their own accord.

Apple didn't drop a pile of cash?

Apple reportedly spending $500 million to fund development of 100+ games for its Apple Arcade subscription service - 9to5Mac

Who knew, Apple has deep pockets? The company is reportedly spending billions of dollars a year on Apple TV+ original...

9to5mac.com

Apple Arcade Games Reportedly Permitted on Xbox, PlayStation, and Nintendo Switch After Brief Exclusivity

Apple is spending hundreds of millions of dollars to secure new games for its forthcoming Apple Arcade subscription service, with its total budget...

Doesn't have a proactive marketing strategy?

Apple Cancels Some Arcade Games in Strategy Shift To Keep Subscribers

Apple Inc. has shifted the strategy of its Apple Arcade gaming service, canceling contracts for some games in development while seeking other titles that it believes will better retain subscribers.

Apple Canceling Some Apple Arcade Game Contracts to Focus on Hit Games That Will Draw Subscribers

Apple is shifting its Apple Arcade strategy and canceling contracts for some games while pursuing titles that it thinks will help it better retain...

They wouldn't have to shift strategy if they had no strategy.

Apple isn't interested in the GPU being the primary, sole tech-spec selling point of a product. They need good to above-average GPUs. They probably have little interest in the flashiest, tech-porn, eyeball-grabbing GPU. They're not going to go toe-to-toe with PlayStation or Xbox on grunt power. This is more about entertaining, thoughtful gameplay, and about it being portable across the Apple ecosystem.

That is good enough to be a business they can continuously invest production seed money into.

If Apple bumps the next AppleTV to an A12Z (or A12X), then the AppleTV will do less than a PS5 or Xbox Series X, but it will also probably cost hundreds of dollars less. If you get less while paying less, that isn't necessarily a problem.

Apple didn't drop a pile of cash?

They need a catalogue hence lots of games is a good idea but they need some big ones and once they have a couple they need to tell everyone about it. Shifting strategy to retain subscribers by tweaking your catalogue isn't really marketing is it?

You're assuming the only part of console hardware that matters is the GPU. AppleTV doesn't have the controller it needs yet. It also doesn't have the marketing. Apple doesn't push it as a TV box all that hard (plus the whole naming clash with the TV streaming service is an issue here), let alone as a games console.

I'm actually not assuming that the only part of the console hardware that matters is the GPU. That's not at all what I'm saying. You read the completely wrong thing into what I was saying.

They have performant enough hardware to compete with consoles today. They support using existing industry standard controllers on ALL of their platforms (excluding watchOS for obvious reasons). These are controllers that have already been adopted by gamers and developers alike. Saying that Apple's only drawback here is a killer controller is completely missing the point.

Apple's problem is software. Developers need to develop Apple's way in order to make games sing on an Apple TV, let alone an Apple Silicon Mac. Developers begrudgingly do this on the iPhone because it makes up such a large percentage of the mobile market, and they do it for the iPad because the iPad is ~98% of the tablet market. But there's no such incentive to do this on the Apple TV, and now that Apple is effectively leaving the common processor architecture (x86) and making GPUs that don't operate the way GPUs do on PCs, Intel Macs, and consoles, there's not much incentive to bring AAA gaming to the Mac, especially when Mac and PC users alike keep bashing the idea of gaming on the Mac. Plus, at least OpenGL was an industry standard, unlike Metal, which is Apple proprietary. These things matter to the developers that would make the games. Not the controller.

Apple just needs to invest time and resources in the game engines that AAA games use, to rewrite them in Metal. This is 100% possible. If Apple wants to do it, they will.

Metal is the most beautiful and performant graphics API ever created, all platforms confounded.

I'm not saying that Metal isn't worth coding for. I'm saying that Metal is different enough that it's hard to justify a developer already making Windows, Xbox, and PlayStation versions of a game also making a macOS/tvOS variant. Whether or not it's a beautiful or performant graphics API is irrelevant if the inconvenience to developers outweighs the profit they'll make from a Mac/Apple TV port of a AAA title. It also REALLY doesn't help that Apple is waging war against the gatekeeper of one of the most common game engines in use today, but that's a whole 'nother topic.

Epic is waging war against one of the largest app ecosystems, but that's a whole 'nother topic.

Apple didn't start the war.

Epic started the current fight. But they started it due to Apple's (and Google's) in-app purchase fee structure which, if you are a developer, is not insubstantial.

Anyway, who started it and why is beside the point. Apple kicking the Unreal Engine off pretty much every platform they have in response to that fight will cost them in the gaming arena, and they can't really afford to be losing battles there. It will further the notion that anyone with high-end needs should look to Windows instead of a Mac.

I'm not saying that Metal isn't worth coding for. I'm saying that Metal is different enough that it's hard to justify a developer already making Windows, Xbox, and PlayStation versions of a game also making a macOS/tvOS variant.

I think you are overestimating the challenge. Only a few games nowadays use GPU APIs directly; most use engines such as Unity that take care of all the details for you. If you don’t want to use an engine, targeting different APIs in the same game is not that big of a challenge, assuming the developer is competent. And even then you can just use Vulkan and link with MoltenVK for native Metal support. Also, WebGPU is coming, and there will be implementations for desktop.

Not to mention that the majority of Mac games already run on Metal, even ones with custom engines.
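The point about engines hiding the graphics API can be sketched as a thin backend interface: game code talks to one abstract renderer, and a per-platform backend translates the calls. Everything here is hypothetical (`Renderer`, `MetalBackend`, `VulkanBackend`, `draw_frame` and `make_renderer` are illustrative names, and the backends only record calls instead of touching Metal or Vulkan); real engines such as Unity, or MoltenVK for running Vulkan on top of Metal, do far more.

```python
from abc import ABC, abstractmethod

class Renderer(ABC):
    """Hypothetical engine-facing interface; game code only sees this."""

    def __init__(self) -> None:
        self.log: list[str] = []  # records calls so the sketch runs without a GPU

    @abstractmethod
    def draw_mesh(self, mesh_id: str) -> None: ...

    @abstractmethod
    def present(self) -> None: ...

class MetalBackend(Renderer):
    """Stand-in for a Metal backend on Apple platforms."""
    def draw_mesh(self, mesh_id: str) -> None:
        self.log.append(f"metal: encode draw for {mesh_id}")

    def present(self) -> None:
        self.log.append("metal: present drawable")

class VulkanBackend(Renderer):
    """Stand-in for a Vulkan backend (which could also run on Macs via MoltenVK)."""
    def draw_mesh(self, mesh_id: str) -> None:
        self.log.append(f"vulkan: record draw for {mesh_id}")

    def present(self) -> None:
        self.log.append("vulkan: queue present")

def draw_frame(renderer: Renderer, meshes: list[str]) -> None:
    """Platform-independent game code: identical for every backend."""
    for mesh in meshes:
        renderer.draw_mesh(mesh)
    renderer.present()

def make_renderer(platform: str) -> Renderer:
    """Pick a backend per platform; this mapping is illustrative only."""
    if platform in ("macos", "ios", "tvos"):
        return MetalBackend()
    return VulkanBackend()

if __name__ == "__main__":
    for platform in ("macos", "windows"):
        r = make_renderer(platform)
        draw_frame(r, ["ship", "asteroid"])
        print(platform, r.log)
```

The design choice is simply that only the backend classes know which API they target, so adding a Metal path means writing one more backend rather than touching the game logic.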

Apple kicking the Unreal Engine off pretty much every platform they have in response to that fight will cost them in the gaming arena, and they can't really afford to be losing battles there. It will further the notion that anyone with high-end needs should look to Windows instead of a Mac.

It will further the notion that Epic is an unreliable partner who wouldn’t hesitate for a second to sacrifice their customers in order to gain publicity for their private war. Anyway, loss for Epic, win for Unity and others. There is a lot of money to be made on Apple platforms as Apple users are much more likely to buy stuff.

As to high-end gaming purposes, Apple has no presence whatsoever. There aren't enough Macs with fast GPUs out there. Apple Silicon might or might not change that, but staying with Intel definitely won’t.

Who started it is the entire point. The other point is that Epic is whinging about the 30% based on who the 30% is going to, not about the 30% itself. Apple had no choice but to enforce its own App Store rules.

You are saying Apple will be hurt in the long run because it enforced its own App Store rules now? I don't think so.

High-end pro users will keep using their Macintoshes for everything non-gaming-related. This Epic vs Apple/Google fight does nothing to harm them. Also, people who seriously want to game are right now looking into high-end gaming PCs or gaming consoles. It's only the low-end (though quite large) market that uses iOS devices for gaming, and gaming often isn't the primary reason the iOS device was purchased.

High-tier gaming is really a niche market anyway. The much bigger market is mid-tier gaming, as in Series X, PS5 and Switch. The Macintosh or iOS never had that market anyway; the three major consoles did and still do.

I don’t think so, but my point is not a technical one.

Apple says the transition will be complete in two years, so that must include the newly redesigned Mac Pro.

As part of that redesign, Apple made a huge effort to build a custom GPU module. Did they do that only to use the Mac Pro expansion module for a couple of years? It would have made more sense to use regular GPUs then.
