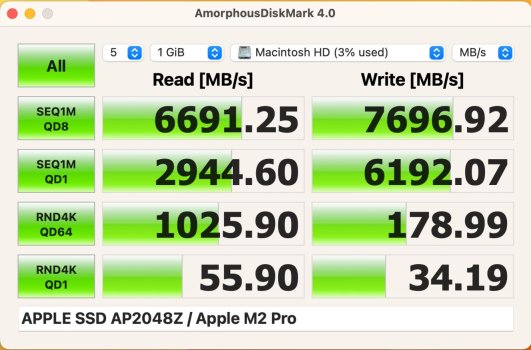

Yeah, those are really awesome speeds, to be honest. That's reassuring, since I'm leaning toward the largest one (8TB). How come the speeds increase slightly as the capacity gets larger? What's the technical reason for it? Just curious.

There are numerous reasons, and it's more complicated than this, but to be a bit reductive, two big factors are cache and spare area. We don't know the specifics of how Apple's unique combination of bare flash chips and SoC-based controller is built, but with SSDs in general, a smaller portion of the drive typically acts as a fast write cache. That cache may be faster, more expensive flash (perhaps SLC or MLC cells), or, quite commonly, ordinary cells run in a faster one-bit-per-cell "SLC mode". The bulk of the drive is cheaper, slower flash (perhaps TLC or QLC cells). The drive writes to the fast cache first, but during especially large transfers, once the cache fills up, it has to fall back to writing directly to the slower cells, and this often causes a big drop-off in performance. Since the cache is usually sized in proportion to the drive's total capacity, larger drives have a larger fast area and can sustain top speed for longer before that drop-off kicks in.
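To make the cache effect concrete, here's a rough Python sketch. It assumes, purely hypothetically, that the fast cache is 5% of capacity, that it starts empty, and that writes land at 6 GB/s while the cache has room and 1.5 GB/s once it's full. None of these are Apple's real numbers; the point is only the shape of the effect.

```python
# Toy model of an SLC-style write cache. All numbers are hypothetical
# placeholders, not Apple's real figures.


def transfer_time_s(transfer_gb: float, capacity_gb: float,
                    cache_fraction: float = 0.05,
                    fast_gbps: float = 6.0, slow_gbps: float = 1.5) -> float:
    """Seconds to write `transfer_gb`, assuming the cache starts empty and is
    sized as a fixed fraction of the drive's total capacity."""
    cache_gb = capacity_gb * cache_fraction
    fast_part = min(transfer_gb, cache_gb)   # portion absorbed by the fast cache
    slow_part = transfer_gb - fast_part      # portion that spills to slower cells
    return fast_part / fast_gbps + slow_part / slow_gbps


def average_speed_gbps(transfer_gb: float, capacity_gb: float) -> float:
    """Average throughput over the whole transfer."""
    return transfer_gb / transfer_time_s(transfer_gb, capacity_gb)


if __name__ == "__main__":
    for capacity_gb in (1000, 2000, 4000, 8000):
        speed = average_speed_gbps(500, capacity_gb)
        print(f"{capacity_gb // 1000} TB drive, 500 GB copy: {speed:.2f} GB/s average")
```

With a 500 GB copy, the modelled average speed climbs as capacity grows, because the bigger drives absorb more of the transfer before spilling over to the slow cells. Real drives also move data out of the cache in the background when idle, which this model ignores.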

Another reason is spare area. Since drive manufacturers know that some flash cells will become unusable through wear over the drive's lifespan, they set aside "spare area": extra flash that is not counted in the advertised capacity. As cells wear out, they are retired (sealed off, so to speak) and replaced with fresh cells from this spare area. Larger drives have more spare area, so they tend to slow down less under heavy use over time, because more cells are set aside for wear levelling.
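Here's a similarly rough sketch of why spare area scales with capacity. It assumes a hypothetical fixed 7% reserve and a hypothetical 16 MB erase block; Apple doesn't publish either figure, so the only takeaway is the proportionality.

```python
# Toy model of spare area (over-provisioning). Both constants are hypothetical
# assumptions; Apple does not publish how much spare flash its drives reserve.

SPARE_FRACTION = 0.07   # hypothetical: hidden spare flash as a share of user capacity
ERASE_BLOCK_MB = 16.0   # hypothetical erase-block size


def spare_area_gb(user_capacity_gb: float, spare_fraction: float = SPARE_FRACTION) -> float:
    """Hidden spare flash, assuming it scales linearly with advertised capacity."""
    return user_capacity_gb * spare_fraction


def replaceable_blocks(user_capacity_gb: float) -> int:
    """How many worn-out blocks could be swapped for fresh spares before the
    reserve runs out. Simplification: real controllers also use spare area as
    working room for garbage collection, not only as replacements."""
    return int(spare_area_gb(user_capacity_gb) * 1000 // ERASE_BLOCK_MB)


if __name__ == "__main__":
    for capacity_gb in (1000, 2000, 4000, 8000):
        print(f"{capacity_gb // 1000} TB: ~{spare_area_gb(capacity_gb):.0f} GB spare, "
              f"~{replaceable_blocks(capacity_gb):,} replaceable blocks")
```

Under these assumptions the 8TB model ends up with eight times the spare blocks of the 1TB model, so it can retire far more worn cells before the reserve gets tight.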

Finally, some of the same concepts that apply to HDDs apply here too. Even though SSDs are usually much faster than HDDs at random reads and writes, SSDs are still faster at sequential access than random access. So as a drive becomes mostly full (especially when writing large files), there may not be a big enough contiguous chunk of free space, and data that could in theory be written sequentially has to be split across different areas of the drive anyway. SSDs are not affected by this as badly as HDDs, but they will still perform worse than they would with plenty of free space. Also, because of the way SSDs write data, defragmenting them is not so simple, and in many cases it could actually further decrease the drive's performance by increasing wear. And if someone's drive shows 30% free, but just before that it was 99% full and they just deleted 29% of the content, that drive is still going to be almost as badly fragmented as it was when it was 99% full.
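And here's a toy illustration of that 99%-full-then-deleted scenario. It models only the logical free-space map and places a file greedily into the largest free runs first (a simple strategy picked for illustration, not how APFS actually allocates). It also ignores the SSD controller's own internal remapping of writes to physical flash, so treat it as an analogy rather than a model of Apple's drives.

```python
# Toy illustration of free-space fragmentation (logical view only).
# Real SSD controllers remap writes to physical flash internally, so this is
# an analogy, not a model of Apple's drives or of APFS's allocator.

from typing import List, Optional, Tuple

Extent = Tuple[int, int]  # (start_block, length_in_blocks)


def free_extents(used: List[bool]) -> List[Extent]:
    """Collapse a per-block used/free map into runs of contiguous free blocks."""
    extents: List[Extent] = []
    start: Optional[int] = None
    for i, is_used in enumerate(used + [True]):  # sentinel closes a trailing free run
        if not is_used and start is None:
            start = i                            # a free run begins
        elif is_used and start is not None:
            extents.append((start, i - start))   # a free run ends
            start = None
    return extents


def fragments_needed(used: List[bool], file_blocks: int) -> int:
    """Pieces a file must be split into when placed greedily into the largest
    free runs first. Returns -1 if there isn't enough free space at all."""
    remaining, pieces = file_blocks, 0
    for _, length in sorted(free_extents(used), key=lambda e: e[1], reverse=True):
        if remaining <= 0:
            break
        remaining -= length
        pieces += 1
    return pieces if remaining <= 0 else -1


if __name__ == "__main__":
    blocks = 1000
    # Drive A: 70% used, with all free space in one contiguous run at the end.
    fresh = [True] * 700 + [False] * 300
    # Drive B: was ~99% full, then scattered deletions freed ~28% more.
    churned = [not (i % 7 < 2 or i >= 990) for i in range(blocks)]
    for name, used in (("A", fresh), ("B", churned)):
        free_pct = 100 * used.count(False) / blocks
        print(f"Drive {name}: {free_pct:.0f}% free, "
              f"250-block file -> {fragments_needed(used, 250)} piece(s)")
```

Both toy drives have roughly 30% free, but the 250-block file fits in a single piece on drive A and has to be scattered across well over a hundred small runs on drive B.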

I should also note that with modern versions of macOS, unless you are very savvy, it is less apparent how much drive space is actually free, because macOS reports used (but purgeable) space as free space. So with APFS file cloning, snapshots, caches, and other background processes going on, a lot more of the drive is often in use than meets the eye.
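If you want a second opinion beyond Finder's figure, Python's standard `shutil.disk_usage` is one quick way to see what the filesystem itself reports. On macOS and Linux it goes through `statvfs`, which, as far as I know, does not count purgeable space as free on macOS, so it will often show less free space than Finder's "Available" number. Treat it as a rough check, not a definitive accounting.

```python
import shutil


def free_space_report(path: str = "/") -> dict:
    """Total/used/free space (in decimal GB) for the volume containing `path`,
    plus the free fraction. shutil.disk_usage uses statvfs on macOS/Linux."""
    usage = shutil.disk_usage(path)
    return {
        "total_gb": usage.total / 1e9,
        "used_gb": usage.used / 1e9,
        "free_gb": usage.free / 1e9,
        "free_fraction": usage.free / usage.total,
    }


if __name__ == "__main__":
    report = free_space_report("/")
    print(f"Total: {report['total_gb']:.1f} GB")
    print(f"Free (per statvfs): {report['free_gb']:.1f} GB "
          f"({report['free_fraction']:.1%})")
```

If this number comes out noticeably lower than what Finder shows, the gap is likely purgeable data such as caches and local snapshots that macOS counts as available.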