Yes, the PS5 has around the same TFLOPS as this and a little more than 400GB/s of bandwidth... so yes, it's basically PS5 level.

Given that both models significantly improved the cooling system over their predecessors (the 14" should have around 50-60W of sustained cooling capacity), I'd expect the CPU to run pretty much unrestricted on both. The GPU is where thermals matter much more.

But then again, M1 Max is more of a GPU with an integrated CPU than an SoC in the traditional sense of the word. It's literally a gaming console.
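
For reference, peak FP32 figures like these come from ALU count × 2 FLOPs per fused multiply-add × clock, and peak bandwidth from bus width × per-pin data rate. This is a minimal sketch that assumes the publicly quoted PS5 specs (36 CUs × 64 lanes at up to 2.23 GHz, 256-bit GDDR6 at 14 Gbps) and, for M1 Max, 4096 ALUs (32 cores × 128) on a 512-bit LPDDR5-6400 bus. The ~1.27 GHz M1 Max GPU clock is an assumption back-derived from Apple's 10.4 TFLOPS figure, not an official spec.

```python
def peak_tflops(alus: int, clock_ghz: float) -> float:
    """Peak FP32 TFLOPS, counting one FMA (multiply + add) as 2 FLOPs per ALU per cycle."""
    return alus * 2 * clock_ghz / 1000.0


def peak_bandwidth_gbs(bus_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width in bytes times per-pin data rate in Gbit/s."""
    return bus_bits / 8 * data_rate_gbps


# PS5: 36 CUs x 64 lanes, up to 2.23 GHz; 256-bit GDDR6 at 14 Gbps per pin
ps5_tf = peak_tflops(36 * 64, 2.23)
ps5_bw = peak_bandwidth_gbs(256, 14.0)

# M1 Max: 32 GPU cores x 128 ALUs; clock ASSUMED ~1.27 GHz (inferred from the 10.4 TF claim)
m1max_tf = peak_tflops(32 * 128, 1.27)
m1max_bw = peak_bandwidth_gbs(512, 6.4)  # LPDDR5-6400

print(f"PS5:    {ps5_tf:.2f} TFLOPS, {ps5_bw:.1f} GB/s")
print(f"M1 Max: {m1max_tf:.2f} TFLOPS, {m1max_bw:.1f} GB/s")
```

These are theoretical peaks only. Whether either chip sustains them under real power and thermal limits is exactly what the rest of the thread argues about.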

Geekbench score for M1 Max. It's great

- Thread starter Kung gu

I doubt it, to be honest. The USB-C will likely still be limited to 100W PD (less in practice). The new MagSafe is the dedicated fast-charging port.

It's on their website when you order: the 16" with 32 GPU cores comes with the 140W MagSafe brick, but if you go with the same 32 GPU cores on the 14" you only get a 96W power brick.

Or maybe it's just so Apple doesn't have to change the brick for the next generation of M2/M3 Max.

Ah sorry, I misunderstood your post. That's just like the power bricks for the old 13" vs 15". Bigger computer, bigger power brick.

The wattage is probably proportional to the battery, so that you get similar fast-charge capabilities on both machines.

So, you don't think the maxed-out 14" will draw more than 96W under load?

I mean, the CPU will draw around 20-30W and the 32 GPU cores around 60W... so we're already at 90W just from those.

I hope I'm wrong, but under load the 14" may end up draining the battery rather than charging it, even while plugged in.
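
The worry is simple arithmetic: if the whole machine draws more than the adapter can deliver, the battery makes up the difference. This is a rough sketch using the CPU/GPU figures from the post; the 15W for the rest of the system (display, SSD, memory, etc.) and the 90% adapter-to-system efficiency are hypothetical placeholders, not measured values.

```python
def battery_flow_w(adapter_w: float, load_w: float, efficiency: float = 0.9) -> float:
    """Positive: watts left over to charge the battery; negative: watts drained from it.

    `efficiency` is a HYPOTHETICAL fraction of adapter power that reaches the system.
    """
    return adapter_w * efficiency - load_w


cpu_w, gpu_w = 30.0, 60.0   # upper-end figures from the post
rest_of_system_w = 15.0     # HYPOTHETICAL: display, SSD, RAM, fans, ...
load = cpu_w + gpu_w + rest_of_system_w

for adapter in (96.0, 140.0):
    flow = battery_flow_w(adapter, load)
    state = "charging" if flow > 0 else "draining"
    print(f"{adapter:.0f}W adapter, {load:.0f}W load -> {flow:+.1f} W ({state})")
```

Whether the 14" actually drains depends on the real sustained draw, which is the open question here.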

My guess is around 60W tops.

I doubt that M1 Max will be able to show its full performance in the 14" chassis...

I’m not sure whether the neural and media engines are included in the M1 Pro/Max CPU power consumption shown by Apple. The M1 CPU is also able to consume more power than the 15W shown by Apple, suggesting they’re not included in the figure. The energy efficiency comparison is misleading if it doesn’t include all components of the chip.

It's not misleading if they only compare it to CPU/GPU power consumption on other platforms. If they did full system power, the advantage would be even more in Apple's favor.

The NPU etc. power consumption is quite low to begin with.

From the presentation graphs it seems the Max is a 30W CPU plus a 55W GPU, so 85W for those two bits alone.

With the display, SSD and other Max elements, I can see why the 16 inch Max gets a 140W charger.

The 14 inch Max with the same specs only gets a 96W charger. That suggests the GPU isn't going to be running at a sustained 55W.
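
The same reasoning can be turned around: subtract the CPU figure and an allowance for everything else from the adapter rating to see what is left for the GPU. This sketch assumes the 30W CPU and 55W GPU figures read off the presentation graphs, plus a hypothetical 15W for display, SSD and the rest; it ignores conversion losses and the battery's ability to cover short peaks.

```python
def gpu_headroom_w(adapter_w: float, cpu_w: float, other_w: float) -> float:
    """Watts left for the GPU if the adapter alone must cover a sustained load."""
    return max(0.0, adapter_w - cpu_w - other_w)


CPU_W = 30.0       # from the presentation graphs, per the post
GPU_PEAK_W = 55.0  # from the presentation graphs, per the post
OTHER_W = 15.0     # HYPOTHETICAL allowance: display, SSD, memory, I/O

for name, adapter in (("14-inch", 96.0), ("16-inch", 140.0)):
    room = gpu_headroom_w(adapter, CPU_W, OTHER_W)
    verdict = "enough" if room >= GPU_PEAK_W else "not enough"
    print(f"{name}: {room:.0f} W for the GPU ({verdict} for a sustained {GPU_PEAK_W:.0f} W)")
```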

Buy a new charger?

Well, these components also use power and contribute to the chip’s performance. So it would make sense to include the power consumption when comparing to other chips.

It appears Apple now shows the peak performance without including the total power needed to achieve it. Then they compare it to the performance and power consumption of other chips, which don’t have these additional neural and media engines and thus use no additional power to achieve the shown performance.
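
The objection is about the denominator. If a workload uses the neural or media engines but only CPU/GPU power is counted, the perf-per-watt figure comes out higher than a whole-SoC measurement would. This sketch uses entirely hypothetical numbers and only shows the direction of the effect.

```python
def perf_per_watt(score: float, *power_components_w: float) -> float:
    """Benchmark score divided by the sum of whichever power components are counted."""
    return score / sum(power_components_w)


score = 1000.0     # HYPOTHETICAL benchmark score
cpu_gpu_w = 40.0   # HYPOTHETICAL CPU+GPU power during the run
engines_w = 4.0    # HYPOTHETICAL neural/media engine power during the run

reported = perf_per_watt(score, cpu_gpu_w)              # CPU/GPU only
whole_soc = perf_per_watt(score, cpu_gpu_w, engines_w)  # everything on the chip
overstated_pct = (reported / whole_soc - 1) * 100
print(f"CPU/GPU only: {reported:.1f} pts/W, whole SoC: {whole_soc:.1f} pts/W "
      f"({overstated_pct:.0f}% overstated)")
```

If the extra engines draw very little power, as others in the thread argue, the gap shrinks accordingly.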

So you want them to compare the whole system vs. other chips only?

I think SoC vs. SoC is a fair comparison.

I think the 16" mini-LED display consumes less power than a 15" 4K 120Hz OLED display... so Apple is actually losing out by not comparing whole system to whole system.

I’m talking about the SoC/chip, not other components. The neural and media engines ARE part of the SoC AND contribute to its performance, so yes, I think they should include their power consumption when comparing to other chips.

I understand what you mean now, sorry. The neural engine is counted in the CPU power consumption, and the media engine in the GPU cores' power consumption.

No problem. However, I doubt that they’re included in the figure, since the M1 SoC is also able to use more power than the CPU plus GPU power consumption shown by Apple.

What they report is likely total SoC power when running these tasks. And anyhow, NPU and the hardware encoders consume so little power that they are basically negligible.

A user is saying and i quoteWhat they report is likely total SoC power when running these tasks. And anyhow, NPU and the hardware encoders consume so little power that they are basically negligible.

"

My point is that the article is 99% wrong and the MacBooks actual GPU performance is nowhere near the consoles performance.

Maximum theoretical TFlops doesn’t mean you can hit those number if the GPU in the Mac is power and thermal starved.

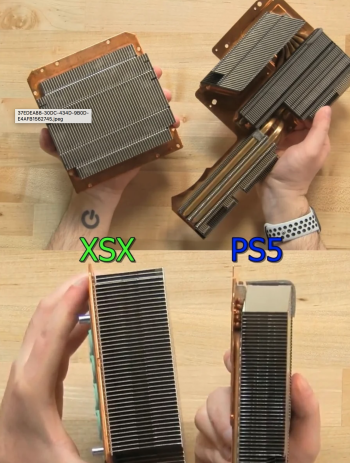

For the consoles to hit their max 10.3TF performance they actually use massive heatsinks and consume 200W+. Let that sink in for moment, and now imagine Apple’s 60W 10.4TF false marketing…"

In your opinion, is this false or totally true?

If I’m not wrong, a lot of those 200W are consumed by the VRAM. I’m very sure Apple knows what they are doing. The M1 Max doesn't have a 512-bit data bus with 400GB/s just for fun.

I’m sure AnandTech will publish an in-depth analysis soon. We’ll know soon enough.

Ah yes, the "I don't understand what is happening so they must be lying" reaction. Well, M1 also hits 2.6 TFLOPS with only 10 watts (I verified it myself), so Apple's claims are reasonable and realistic.
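
Putting the thread's own numbers side by side makes the disagreement concrete, since efficiency here is just throughput divided by power. This sketch uses the figures as quoted: 10.3 TF at 200W+ for the console (whole-console power), 10.4 TF at ~60W claimed for M1 Max, and 2.6 TF at ~10W measured for M1 by this poster. They are not controlled, like-for-like measurements.

```python
def gflops_per_watt(tflops: float, watts: float) -> float:
    """Throughput efficiency in GFLOPS per watt."""
    return tflops * 1000.0 / watts


# Figures as quoted in this thread; not controlled, like-for-like measurements.
claims = {
    "Console (whole system, 200W+)": (10.3, 200.0),
    "M1 Max (Apple claim, ~60W)": (10.4, 60.0),
    "M1 (poster's measurement, ~10W)": (2.6, 10.0),
}

for name, (tf, w) in claims.items():
    print(f"{name}: {gflops_per_watt(tf, w):.0f} GFLOPS/W")
```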

To be honest, I understand why the commenter might be confused. After all, it does sound very unlikely that Apple has such a big perf/watt advantage, but they actually do. There are a few key factors:

- Apple does not have to rely on power-hungry RAM to feed their GPU (they use a high-tech multichannel low-power RAM that is much more expensive but uses somewhere around 10x less power than GDDR6 for comparable bandwidth)

- Apple GPUs are very streamlined devices: their SIMD processors are likely simpler than what Nvidia or AMD use, and hence more energy-efficient. They lack many tricks that other GPUs have, like double-rate FP16; their support for control-flow divergence is very simple and efficient; and their scheduling hardware is likely simpler too, since they don't have to invoke very small shading kernels, etc.

- Apple GPUs have their roots in mobile phones. They have been developed to consume as little power as possible (while still being full-featured GPUs), so they probably use every trick in the book to lower their power consumption. Nvidia and AMD have neither Apple's expertise nor architectures focused on the lowest possible power consumption. Their primary market is still desktop GPUs, and their design choices reflect that.

- Apple has a process advantage that gives them that extra bit of power efficiency (but this is by far not enough to explain their lead)
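
The RAM point can be framed as energy per bit. Memory-interface power is roughly bits moved per second times energy per bit, so at equal bandwidth the power scales directly with the per-bit cost. This sketch takes the post's "~10x less power" ratio at face value; the 7 pJ/bit GDDR6 value is a hypothetical placeholder, not a datasheet figure.

```python
def interface_power_w(bandwidth_gbs: float, pj_per_bit: float) -> float:
    """Approximate memory-interface power: (bytes/s * 8 bits/byte) * joules per bit."""
    bits_per_second = bandwidth_gbs * 1e9 * 8
    return bits_per_second * pj_per_bit * 1e-12


BANDWIDTH_GBS = 400.0                     # roughly the M1 Max figure discussed in the thread
GDDR6_PJ_PER_BIT = 7.0                    # HYPOTHETICAL placeholder value
LPDDR_PJ_PER_BIT = GDDR6_PJ_PER_BIT / 10  # the post's ~10x claim, taken at face value

gddr6 = interface_power_w(BANDWIDTH_GBS, GDDR6_PJ_PER_BIT)
lpddr = interface_power_w(BANDWIDTH_GBS, LPDDR_PJ_PER_BIT)
print(f"GDDR6-like: {gddr6:.1f} W, LPDDR-like: {lpddr:.1f} W at {BANDWIDTH_GBS:.0f} GB/s")
```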

Basically, this is what I have been repeating for the last two years: Apple currently has the most power-efficient GPU IP in the industry, by a large margin. We have had their entry-level hardware for a while now and we have benchmarks; it was actually very clear what G13 can do. I think it's quite funny that some people are surprised. Maybe Nvidia can catch up in perf/watt in two years. Who knows.

P.S. You are free to point that user to this post.

P.P.S. Of course, the simple fact is that Apple can do all this because their tech is very, very expensive. Apple Silicon is basically power-efficient console tech for the desktop market. Or you can view it as a downsized custom supercomputer. Not even the RTX 3090 has a 512-bit RAM bus, because it is too expensive. And M1 Max is also a huge die, larger than Nvidia's GA104 or the largest Xeons. These large Mac chips are not really a threat to the regular PC market with its cheaper components; they are simply too expensive for a normal user.

"Basic physics… You switch more transistors in your GPU you pull more wattages and can hit higher TF numbers for both FP16/FP32. This is independent from memory power consumption (which is already very low for GDDR6) or SIMD arch.

- What do you mean with "Apple has a process advantage" and about which processes are you talking about exactly?

- Both AMD and NVIDIA have more experience than Apple when it comes to developing high performance GPU architectures and their power consumptions are tied to their maximum performance and node sizes. They are designed to deliver maximum FP32 performance in the industry, including superior ray-tracing performance (which Apple lacks) and GPU accelerate Tensor cores for ML applications (DLSS for example), INT4/8 operation and Mesh Shading. And all these features are actually accessible to developers, while Apple is not capable in any of these techniques, yet…"

"But explain to me how their GPUs are more efficient? How do they magically pull more FP32 performance? Are they magically switching less transistors, but have more TFlops? Pleas don’t confuse GPU performance with ARM64 vs X86 efficiency."

I don't know how to point him here... and I don't know if he'd really want to.

Basic physics… You switch more transistors in your GPU you pull more wattages and can hit higher TF numbers for both FP16/FP32. This is independent from memory power consumption (which is already very low for GDDR6) or SIMD arch.

Baseless conjecture. Chip design matters too. Again, M1 has 1024 shader cores operating at 1.3GHz at 10W, and it hits exactly the advertised 2.6 TFLOPS. As I said before, I have verified it myself using a long sequence of MADD operations. AMD and Nvidia need 2-3x the power to reach the same MADD throughput.

Bottom line: Apple's shader ALUs are simply more efficient than AMD or Nvidia shader ALUs.
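
The "basic physics" argument leaves out voltage. CMOS dynamic power scales roughly as P ≈ αCV²f, and a lower clock allows a lower supply voltage. So a design that reaches the same throughput with more ALUs at a lower clock can use less power even though it switches more transistors. This sketch of the wide-and-slow trade-off uses arbitrary capacitance units and two hypothetical voltages chosen only to show the scaling; they are not Apple, AMD or Nvidia figures.

```python
def dynamic_power(alus: int, clock_ghz: float, volts: float,
                  cap_per_alu: float = 1.0, activity: float = 1.0) -> float:
    """Relative dynamic power ~ activity * C * V^2 * f, with C proportional to ALU count.

    cap_per_alu and activity are arbitrary units; only ratios are meaningful.
    """
    return activity * (alus * cap_per_alu) * volts ** 2 * clock_ghz


def throughput(alus: int, clock_ghz: float) -> float:
    """Relative FMA throughput: ALUs x clock."""
    return alus * clock_ghz


# Same throughput two ways (voltages are HYPOTHETICAL):
narrow_fast = (1024, 2.0, 1.00)  # fewer ALUs, high clock, high voltage
wide_slow = (2048, 1.0, 0.75)    # twice the ALUs, half the clock, lower voltage

for name, (n, f, v) in (("narrow/fast", narrow_fast), ("wide/slow", wide_slow)):
    print(f"{name}: throughput {throughput(n, f):.0f}, relative power {dynamic_power(n, f, v):.0f}")
```

Static (leakage) power and die area push back the other way, so wide-and-slow costs silicon, which ties into the point about large, expensive dies.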

- What do you mean with "Apple has a process advantage" and about which processes are you talking about exactly?

Apple chips are built on TSMC 5nm, AMD uses TSMC 7nm and Nvidia Samsung 8nm, if I remember correctly.

- Both AMD and NVIDIA have more experience than Apple when it comes to developing high performance GPU architectures and their power consumptions are tied to their maximum performance and node sizes.

Yes, they have more experience building high-performance GPUs. They have much less experience building low-power GPUs. Apple has spent a decade building a very power-efficient GPU core; now they simply scaled it up to a mid-sized GPU.

They are designed to deliver maximum FP32 performance in the industry, including superior ray-tracing performance (which Apple lacks) and GPU accelerate Tensor cores for ML applications (DLSS for example), INT4/8 operation and Mesh Shading. And all these features are actually accessible to developers, while Apple is not capable in any of these techniques, yet…"

Ok, sure. And Apple offers actually usable sparse textures, programmable blending, tile shaders (persistent GPU cache between multiple shader invocations), shading determinism, advanced GPU-driven pipelines, advanced GPU SIMD shift operations and a developer-friendly API (with a shading language that supports data and function pointers, typed bindings, C++ templates, dynamic linking, etc.). I didn't realize this was an e-peen measuring contest. This is silly.

Personally though, I love how that person is offended that crappy Apple of all people dared to build a good GPU. Some folks 😁

The Intel 16” uses a 96W brick, feeding a 45W TDP CPU, and a 50W TGP GPU. But the CPU on my i7 can regularly sit closer to 60-65W when loaded down. Yet, in practice, I don’t really wind up pulling down enough power to discharge the battery while plugged in. If anything, the M1 Max is better suited for the 96W power supply than the 16” MBP was. M1 Max’s GPU has a similar “TGP” to the Radeon chips used previously, and a CPU that caps out at 30W, rather than ~65W.

The expected power consumption should be lower too, if Apple’s battery-life figures for the 16” version are to be believed, since the battery can't get any larger. But also keep in mind that loads tend to be uneven. Getting a perfect 100% load across the GPU and CPU is not very common. So hitting that perfect storm of 30W on the CPU and 55W on the GPU will not be something you do often, if at all, depending on what apps you use.

The main reason the 16” now comes with a 140W brick is fast charge. Getting a 50% charge in 30 minutes on a 100Wh battery is basically impossible on a 96W brick, even if the device was turned off. You have to account for some losses in the charge.
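
The fast-charge claim is easy to check: 50% of a 100Wh battery in 30 minutes means an average of 100W going into the cells before any losses. This sketch uses a hypothetical 90% charging efficiency as a round number; the optional system load term covers a machine that is running while it charges.

```python
def required_adapter_w(battery_wh: float, fraction: float, minutes: float,
                       efficiency: float = 0.9, system_load_w: float = 0.0) -> float:
    """Adapter power needed to add `fraction` of `battery_wh` in `minutes`.

    `efficiency` (HYPOTHETICAL default) covers conversion and charging losses;
    `system_load_w` is whatever the running machine draws at the same time.
    """
    energy_wh = battery_wh * fraction
    charge_power_w = energy_wh / (minutes / 60.0)  # average power into the battery
    return charge_power_w / efficiency + system_load_w


need = required_adapter_w(100.0, 0.5, 30.0)
verdict = "within" if need <= 96.0 else "beyond"
print(f"~{need:.0f} W needed with the machine off ({verdict} a 96 W brick)")
```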
