"Next year this SoC will be in the next iMac... so for the first time, Apple should say that their SoC is slower than or equal to the top-of-the-line (2-year-old) GPU that it replaces. Until now Apple was like 2x faster, 800 faster, etc. than the device that the new one replaces."

That will never happen. And the M1 Max GPU is already faster than the 5700 XT. If anything, they might put a 64-core GPU into the iMac and even surpass the 3090.


Geekbench score for M1 Max. It's great

- Thread starter Kung gu


"So there have been no 14-inch base 8-core benchmarks yet? I'm curious how that machine compares to the M1."

Nope, I doubt Apple sent out any 8-core test review units. The performance will still be enough for most people though. I'm expecting a multi-core Geekbench score of 9,500-10,000.

Nope, I doubt Apple sent out any 8-core test review units. The performance will still be enough for most people though. I'm expecting a multi-core Geekbench score of 9,500-10,000.

I know it will be enough for me, I'm more looking to see if it's big enough of a jump over the M1 as I may just get an M1 Air.

How do these 10-core benchmarks compare to the M1?

A user is saying, and I quote:

"My point is that the article is 99% wrong and the MacBook's actual GPU performance is nowhere near the consoles' performance.

Maximum theoretical TFLOPS doesn't mean you can hit those numbers if the GPU in the Mac is power and thermal starved.

For the consoles to hit their max 10.3 TF performance they actually use massive heatsinks and consume 200W+. Let that sink in for a moment, and now imagine Apple's 60W 10.4 TF false marketing…"

In your opinion, is this false or totally true?

At best he is imprecise.

TFLOPS is calculated using the following formula:

TFLOPS = Cores x clock speed (Hz) x Floating Point Operations per clock cycle / 10^12

Only the clock speed might vary.

You have to use the clock speed which the GPU can obtain. You can't say if the GPU had unlimited power the clock speed could be higher and use that theoretical number.

So if the M1 GPUs could achieve X clock speed with no power and thermal constraints but only achieve 0.7X in practise, you use 0.7X to calculate TFLOPS.

Thus his argument doesn't really make sense.
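To make the formula above concrete, here is a minimal Python sketch. The function name `tflops` and every number in it (4096 ALUs, a 1.0 GHz unconstrained clock, 2 floating point operations per clock, a 0.7x sustained clock) are made-up placeholders for illustration, not measured figures for the M1 Max or any console.

```python
def tflops(cores: int, clock_hz: float, flops_per_clock: int) -> float:
    """TFLOPS = cores x clock speed (Hz) x FLOPs per clock cycle / 10^12."""
    return cores * clock_hz * flops_per_clock / 1e12


# Hypothetical GPU: every value below is an illustrative assumption.
CORES = 4096                 # number of ALUs ("cores" in the formula)
FLOPS_PER_CLOCK = 2          # e.g. one fused multiply-add per cycle
UNCONSTRAINED_CLOCK = 1.0e9  # clock the chip could reach with no limits
ACHIEVED_CLOCK = 0.7 * UNCONSTRAINED_CLOCK  # clock it actually reaches

# The rated figure has to use the clock the GPU can actually obtain.
wishful = tflops(CORES, UNCONSTRAINED_CLOCK, FLOPS_PER_CLOCK)
rated = tflops(CORES, ACHIEVED_CLOCK, FLOPS_PER_CLOCK)

print(f"with the unobtainable clock: {wishful:.2f} TFLOPS")
print(f"with the achieved clock:     {rated:.2f} TFLOPS")
```

Only the clock term changes between the two calls, which is the point of the post: a TFLOPS figure computed from the achieved clock already reflects the power and thermal limits.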

"0.2 GHz more is proven wrong? Not at all. Since you are not able to provide any proof that ARM can go beyond 3.3 GHz, like 5.0, I will not respond."

The original 8086 CPU ran at 0.00477 GHz, so you were proven wrong by almost 42 8086s.

Next year this SoC will be in the next iMac... so for the first time, Apple should say that their SoC is slower than or equal to the top-of-the-line (2-year-old) GPU that it replaces. Until now Apple was like 2x faster, 800 faster, etc. than the device that the new one replaces.

Unless Apple introduces an M1 Super Max. (I can already see the video of Tim Cook breaking out of prison.)

But I would be quite happy with an M1 Max, because it won’t be replacing a 2-year-old machine. It will be replacing my 2014 5K iMac.

"I know it will be enough for me, I'm more looking to see if it's big enough of a jump over the M1 as I may just get an M1 Air.

How do these 10-core benchmarks compare to the M1?"

I'm guessing the 8-core M1 Pro will be about 30% faster than the M1 in the multicore scores. Apple said that the M1 Pro CPU is up to 70% faster than the M1. Figure that the 4 efficiency cores are about as powerful as 1 power core. So the M1 effectively has the power of 5 power cores. The 10-core M1 Pro effectively has the power of 8.5 power cores. 8.5/5 = 1.7. The 8-core M1 Pro effectively has the power of 6.5 power cores. 6.5/5 = 1.3.

"Nope, I doubt Apple sent out any 8-core test review units. The performance will still be enough for most people though. I'm expecting a multi-core Geekbench score of 9,500-10,000."

That sounds about right. The M1 gets a multicore score of about 7600. 30% faster is about 9900.
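The back-of-the-envelope estimate in the posts above can be written out as a short Python sketch. The weighting (4 efficiency cores count as 1 power core) and the M1 baseline of about 7600 come from the posts. The core splits (4+4, 8+2, 6+2) are the ones implied by the 5, 8.5 and 6.5 figures, and the helper name `power_core_equivalents` is made up for this example. It is a guess at scaling, not a benchmark.

```python
# Assumption from the post: 4 efficiency cores ~ 1 power core.
E_CORE_WEIGHT = 0.25

# Approximate M1 multi-core Geekbench score quoted in the thread.
M1_MULTICORE = 7600


def power_core_equivalents(p_cores: int, e_cores: int) -> float:
    """Express a chip as an equivalent number of power cores."""
    return p_cores + e_cores * E_CORE_WEIGHT


# (power cores, efficiency cores) implied by the 5 / 8.5 / 6.5 figures.
chips = {
    "M1": (4, 4),
    "M1 Pro 10-core": (8, 2),
    "M1 Pro 8-core": (6, 2),
}

m1_equiv = power_core_equivalents(*chips["M1"])

for name, (p, e) in chips.items():
    equiv = power_core_equivalents(p, e)
    ratio = equiv / m1_equiv
    estimate = M1_MULTICORE * ratio
    print(f"{name}: {equiv} equivalents, {ratio:.2f}x M1, ~{estimate:.0f} multi-core")
```

With these inputs the 8-core M1 Pro lands at roughly 1.3x the M1, or about 9,900, inside the 9,500-10,000 range guessed earlier in the thread. Real scores will not scale this linearly.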

Looks like the post was deleted. Someone breaking their NDA?

"I am a bit disappointed I have to say. I expected higher single-core performance than M1. The multi-core is as expected. There is little doubt that this will be the fastest laptop for a while. Maybe the upcoming Alder Lake will match the single-core performance, who knows..."

I have found single core scores to be just about meaningless. My work laptop decimates my Mac Pro in single core benchmarks but it is catastrophically slower than said Mac Pro in regular use, and I only have one non-Apple app on it.

"Are there any comparisons out yet re: Pro vs. Max regarding CPU?"

Basically the same, with a little better expected for the Max in memory-bound workloads. Both much better than the M1.

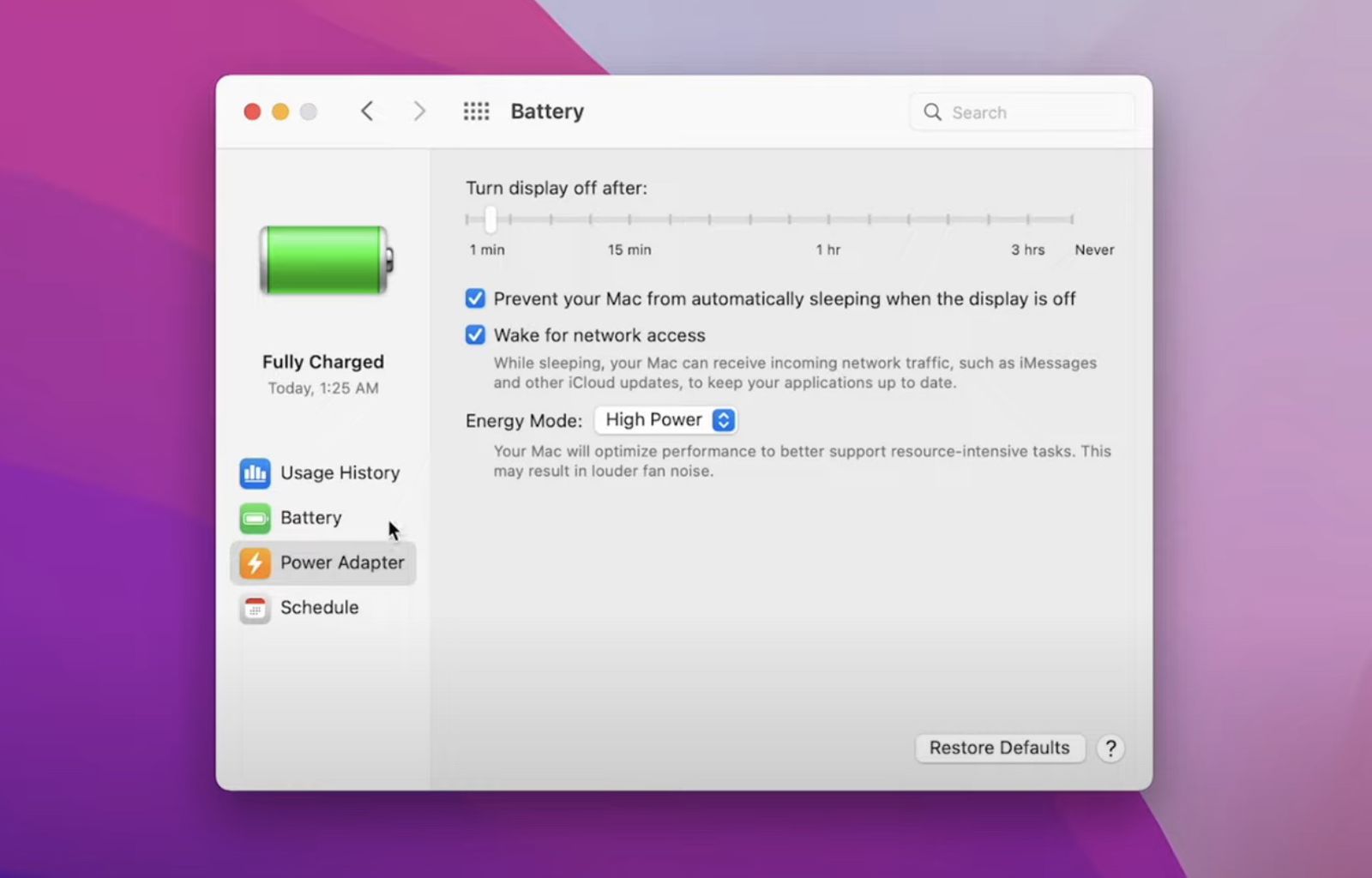

Anyone seen some test with performance mode on and off?

MacBook Pro Reviews Provide First Look at High Power Mode

The first 14-inch and 16-inch MacBook Pro reviews are now out and there are a few notable tidbits that are worth highlighting. Brian Tong's review provides a first look at High Power Mode, a new feature that is exclusive to 16-inch MacBook Pro models configured with an M1 Max chip. High Power...
