

Geekbench score for the Intel i9-13900K is out and it’s not looking pretty for Apple …

- Thread starter Retskrad


> There's healthy competition between AMD and Intel, but it's about being fastest overall, not most efficient. What would be interesting from both is a chip with just a bunch of efficiency cores. CPUs from 2014 onward are actually fine for what I do, so just having a bunch of super-efficient cores would be fine. I don't care if things finish a few hundred milliseconds faster.

I wonder if they would release an Atom CPU with just Gracemont cores. They haven't yet (which is interesting).

I had the opportunity to buy a 2017 iMac Pro for $1,800 earlier this year. It had the 28-core Xeon processor. I think the high-core-count Xeons run at relatively low frequencies, which may be more efficient. It would definitely be interesting to run a bunch of Gracemont cores for my workload. I can actually run everything I need off an M1 mini with 16 GB, but the problem is the number of monitors it can support. I run four, which isn't directly supported.
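
As a rough illustration of why many low-clocked cores can win on efficiency, here is a toy model built on the textbook CMOS dynamic-power relation P ≈ C·V²·f. It is a sketch under loud assumptions: supply voltage is taken to scale linearly with frequency (so per-core power goes roughly as f³), leakage is ignored, the workload is assumed to parallelize perfectly, and the core counts and clocks are hypothetical rather than figures for any real Xeon or Gracemont part.

```python
# Toy model of the "many slow cores vs. few fast cores" trade-off.
# ASSUMPTIONS (illustrative only): dynamic power per core ~ C * V**2 * f,
# with V proportional to f and C normalized to 1, so power ~ f**3.
# Leakage is ignored and the workload is assumed to scale perfectly across cores.


def relative_power(cores: int, freq: float) -> float:
    """Total dynamic power (arbitrary units) for `cores` cores at relative clock `freq`."""
    return cores * freq ** 3


def relative_throughput(cores: int, freq: float) -> float:
    """Work per unit time for a perfectly parallel workload (arbitrary units)."""
    return cores * freq


# Hypothetical configurations: (core count, clock relative to the fast chip).
configs = {"few fast cores": (4, 1.0), "many slow cores": (8, 0.5)}

for name, (cores, freq) in configs.items():
    perf = relative_throughput(cores, freq)
    power = relative_power(cores, freq)
    print(f"{name}: throughput={perf:.2f}, power={power:.2f}, perf/W={perf / power:.2f}")
```

Under these assumptions both configurations do the same work, but the wider, slower chip uses a quarter of the power. Real voltage/frequency curves flatten out at low clocks and many workloads don't scale perfectly, so real-world gains are smaller, which is why "may be more efficient" is the right hedge.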

> Ultra is a workstation-level CPU. I'd put it in the same category as Threadrippers and Xeons. Looking strictly at the product segmentation, the M2 Pro should compete with Intel's i7/i9, and it does so quite successfully in the laptop space. On desktop, not so much.

Not necessarily on the Ultra being workstation class, unless they issued a statement I haven't seen. I would think whatever they come up with for the Mac Pro will be considered workstation class. I consider the Ultra to be i9 level and a replacement for the 27” iMac.

It doesn’t matter what you consider it. It’s clearly a workstation-class computer. It will not be as powerful as the upcoming Pro, but if you’re a Mac user for work, the Studio is very clearly a workstation-class computer for its intended use cases (mostly video/photo editing; it’s not a data science machine, but it is also shockingly capable for specific workflows in that field).

> There's healthy competition between AMD and Intel, but it's about being fastest overall, not most efficient.

What’s weird is that this race to the top is actively preventing them from delivering the performant mobile solutions that will be the big sellers now and in the future.

> I can actually run everything I need off an M1 mini with 16 GB, but the problem is the number of monitors it can support. I run four, which isn't directly supported.

Hopefully the rumored M2 Pro Mac mini fills that void (3-4 monitor support). I wonder if that means a redesign as well, since the current one has just two Thunderbolt ports plus an HDMI port.

> What’s weird is that this race to the top is actively preventing them from delivering the performant mobile solutions that will be the big sellers now and in the future.

I've noticed that. The base frequencies of their desktop chips tell you that they prioritize performance over power consumption.

> Hopefully the rumored M2 Pro Mac mini fills that void (3-4 monitor support). I wonder if that means a redesign as well, since the current one has just two Thunderbolt ports plus an HDMI port.

The iMac Pro does support four external monitors, I think. I would prefer that they just stick with the current design, as it should have enough cooling for an M* Pro, especially a binned one.

> It doesn’t matter what you consider it. It’s clearly a workstation class computer.

Has Apple said it is? That’s all that matters here. It doesn’t have ECC RAM, which, according to the last rumors I checked, Apple is looking to incorporate in the Mac Pro.

It doesn’t matter what you or I think it is. If I “think” an i9 is workstation class and you don’t, we have Intel’s word to settle who is right. I have not seen any statement from Apple that it’s workstation class. It’s just two Max chips put together, and nothing in the underlying architecture beyond 2x Max would classify it as a workstation CPU. It’s fast, yes. I have one. But I wouldn’t consider it workstation class, just as I wouldn’t call an i9 a workstation CPU.


I don't follow. So something is only a workstation for you if the manufacturer defines it as a "workstation"? Wouldn't it make more sense to construct product classes by comparing capabilities rather than relying on arbitrary labels used by marketing departments? Besides, there is no clear definition of what exactly constitutes a "workstation"; it's a relatively vague concept used to describe machines that exhibit certain characteristics. I believe that the Ultra firmly fits into that category.

That does bring up the question of the utility of ECC RAM. Does Apple's hardware/software/workflow really need it?

I think it's better to ask the opposite: is there a reason for non-ECC memory to exist? ECC chips are fundamentally no more expensive than non-ECC chips, but manufacturers have chosen to market them as an expensive server/workstation feature.

I once had a Mac with subtle memory issues. They never occurred in normal use, only after hours or days of heavy use. Sometimes the computer would crash, but it was more common to simply have a bit flip in a cached file. That would cause the affected file to appear corrupted until I rebooted the computer. Because the errors were so small and rare, ECC would have protected against them.
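
A single flipped bit like the one described here is exactly what single-error-correct, double-error-detect (SECDED) ECC is designed to handle. The sketch below is a minimal extended Hamming code in pure Python, the textbook construction that yields 72-bit words for 64 data bits. It is illustrative only: real memory controllers do this in hardware and often use related codes such as Hsiao codes, and `encode`/`decode` are hypothetical helper names, not any library's API.

```python
from functools import reduce
from operator import xor


def _hamming_parity_bits(k: int) -> int:
    """Smallest r with 2**r >= k + r + 1, enough to locate any single flipped bit."""
    r = 0
    while 2 ** r < k + r + 1:
        r += 1
    return r


def _syndrome(code: list[int]) -> int:
    """XOR of the 1-based positions of all set bits; 0 for a valid Hamming word."""
    return reduce(xor, (pos for pos in range(1, len(code)) if code[pos]), 0)


def encode(data: list[int]) -> list[int]:
    """SECDED encode: bit 0 is overall parity, powers of two are Hamming parity bits."""
    n = len(data) + _hamming_parity_bits(len(data))
    code = [0] * (n + 1)
    bits = iter(data)
    for pos in range(1, n + 1):
        if pos & (pos - 1):              # not a power of two -> data position
            code[pos] = next(bits)
    syn = _syndrome(code)
    for i in range(n.bit_length()):      # set parity bits so the syndrome becomes 0
        code[1 << i] = (syn >> i) & 1
    code[0] = sum(code[1:]) % 2          # overall parity enables double-error detection
    return code


def decode(code: list[int]) -> tuple[str, list[int]]:
    """Return (status, data) where status is 'ok', 'corrected' or 'uncorrectable'."""
    code = list(code)                    # work on a copy
    n = len(code) - 1
    syn = _syndrome(code)
    odd = sum(code) % 2                  # 1 if an odd number of bits flipped
    if syn == 0 and odd == 0:
        status = "ok"
    elif odd == 1 and syn <= n:          # assume exactly one flip; syn == 0 means bit 0
        code[syn] ^= 1
        status = "corrected"
    else:                                # two flips (or an impossible syndrome): detect only
        return "uncorrectable", []
    data = [code[pos] for pos in range(1, n + 1) if pos & (pos - 1)]
    return status, data


word = [1, 0, 1, 1, 0, 0, 1, 0] * 8      # 64 arbitrary data bits
cw = encode(word)
print(len(cw))                           # 72: 64 data + 7 Hamming + 1 overall parity

one_flip = list(cw)
one_flip[13] ^= 1
print(decode(one_flip)[0], decode(one_flip)[1] == word)   # corrected True

two_flips = list(cw)
two_flips[5] ^= 1
two_flips[40] ^= 1
print(decode(two_flips)[0])              # uncorrectable
```

A single flip anywhere in the 72-bit word is repaired transparently, while two flips are reported rather than silently returning bad data.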

I don’t think it is as big a selling point as one might hope. AMD has supported ECC RAM on all Zen processors, but it doesn’t seem to be a popular option.


But your point does stand.

> I don't follow. So something is only a workstation for you if the manufacturer defines it as a "workstation"?

Well, why isn’t an i9 workstation class but a low-end Xeon is? Saying the M1 Max is not workstation class but gluing two together is doesn’t seem right. Would 10 i3 processors be a workstation, then? Is it just core counts?

Essentially, yes: manufacturers make both consumer-class and workstation-class CPUs. If I say I want a workstation Intel CPU, you say Xeon.

And I was comparing capabilities, which is why I DON'T think that just sticking two M1 Max chips together equals a workstation CPU. Workstation capabilities typically include ECC, among other things.

Let me just ask: What makes it a workstation to YOU? Why is a base M1 not a workstation, but you think the M1 Ultra is? Or better yet, as I mentioned above, if you don't think the M1 Max is workstation level but putting two of them together is, why?

Perhaps it's just my age, but in the olden days Xeons and workstation-specific CPUs and GPUs were better suited for "workstation"-level work: better geared toward 24/7 operation, with ECC memory, more specialized workflows, and access to more RAM and more memory channels (I recently got a server with 4TB of RAM; at the time, Xeon was the only thing that allowed that much RAM, and I'm not sure if that has changed). Workstation GPUs have historically been bad at gaming (I had a $5,000 Quadro that got beaten by a $500 GTX in gaming).

This is why I base it on capabilities. Even if Apple said it was a workstation, I would still question it. I was just curious why some people consider it a workstation-level processor. If "powerful computer" is now the metric for classifying workstations, that is highly subjective. Someone might consider their i3 a "workstation" since it is powerful for them.


> ECC chips are fundamentally no more expensive than non-ECC chips, but manufacturers have chosen to market them as an expensive server/workstation feature.

You are very confused if you think ECC has zero cost. For 64-bit-wide DDR1 through DDR4 DIMMs, the ECC syndrome is 8 bits, so ECC DIMMs must be 72 bits wide. That means you have to pay for 1.125x the nominal memory capacity of the DIMM; a 16GB ECC DIMM actually has 18GB worth of DRAM chips on it.

These ratios actually got worse with DDR5 and various LPDDR JEDEC standards, where channel width is reduced to 32 or even 16 bits. With 32-bit words, you still need an 8-bit syndrome to achieve standard single error correct / double error detect (SECDED) ECC, so now you're paying for 1.25x capacity.
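
The overhead arithmetic can be checked against the Hamming bound: SECDED on k data bits needs the smallest r with 2^r ≥ k + r + 1, plus one overall parity bit. This minimal sketch computes only that theoretical minimum. For 64-bit words it gives the 8 bits mentioned above; for 32-bit words it gives 7, so the 8 bits (1.25x) quoted for DDR5 reflect how ECC modules are actually built, with each 32-bit subchannel widened to 40 bits, rather than a hard coding-theory limit.

```python
def secded_check_bits(k: int) -> int:
    """Minimum SECDED check bits for k data bits: Hamming bits r plus one overall parity bit."""
    r = 0
    while 2 ** r < k + r + 1:   # Hamming bound for single-error correction
        r += 1
    return r + 1


for width in (64, 32, 16):
    check = secded_check_bits(width)
    print(f"{width}-bit word: {check} check bits minimum -> {(width + check) / width:.3f}x capacity")
```

Either way the trend the post describes holds: the narrower the word, the larger the fraction of capacity spent on check bits.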

Try popping that puppy in a laptop and we might be talking about third-degree burns. Sure, faster is always better when it comes to processors, but relatively few users are really exploiting that additional speed. Gamers are probably more dependent on single-core speed and GPU performance. Folks editing and rendering a lot of high-resolution video and audio benefit too, but that is also pretty GPU dependent. If you're running office apps, checking email, surfing the web, streaming video, listening to music, etc., differences in processor speed are largely moot. I do statistics. For 99%+ of what I do, I'm the bottleneck. Once in a while I need to do something like fit a fairly complex generalized linear model and compute bootstrapped confidence intervals: each model has to iterate to find a solution, which can be a bit slow, and then you may need to run it thousands of times to get the bootstrapped intervals. In those cases I may need to take a break. Everything else I do is virtually instantaneous, and it makes almost no difference what kind of processor I'm using. Again, I realize there are folks who do things that really exploit both CPU and GPU speed. But for most of the things most of us do most of the time, we'd be hard pressed to tell the difference between any fairly recent CPUs.
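
The bootstrap step described here is the kind of job where core count actually matters: every replicate is independent, so the work grows with the number of resamples. Below is a minimal percentile-bootstrap sketch. To keep it self-contained, the sample mean stands in for the GLM fit (an assumption for illustration), the data values are made up, and `bootstrap_ci` is a hypothetical helper rather than a function from any statistics package.

```python
import random
import statistics


def bootstrap_ci(sample, stat=statistics.fmean, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for `stat`.

    Each replicate resamples the data with replacement and recomputes the
    statistic; with a GLM, `stat` would refit the model, which is the slow part.
    """
    rng = random.Random(seed)
    estimates = sorted(
        stat(rng.choices(sample, k=len(sample)))  # one resample, one refit
        for _ in range(n_boot)
    )
    lower = estimates[int(n_boot * alpha / 2)]
    upper = estimates[int(n_boot * (1 - alpha / 2)) - 1]
    return lower, upper


data = [2.1, 3.4, 1.9, 4.2, 3.3, 2.8, 3.9, 2.5]   # made-up example values
print(bootstrap_ci(data))
```

Because the replicates don't depend on each other, they could be spread across cores (for example with `concurrent.futures.ProcessPoolExecutor`), which is where a chip with lots of efficient cores would pay off.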

> The i9-13900K chip will be out later this year and we now have Geekbench results: single-core 2133 and multi-core 23701.
>
> In comparison, the M2 in the new MacBook Pro scored 1919 in single-core and 8929 in multi-core.
>
> Sure, Apple is much better at performance per watt than Intel, but it’s not a good look to fall behind in single-core performance. Most day-to-day tasks are single-core.

I think this is a bad look for Intel, not Apple. Apple and Intel will continue to swap places for fastest SC speed. [The M3, which rumors say is going to use the M2's microarchitecture on an N3 process and should be released in late 2022 or early 2023, should be ~10%–15% faster than the M2 => GB SC ~2100–2200.] The difference is that you're comparing the SC speed of Intel's fastest desktop chip with the SC speed available to *every* Apple product, even its entry-level laptops.
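
Putting the quoted scores side by side makes the gap concrete. This short sketch only does arithmetic on the numbers given in this thread (reported results, not independently verified) and checks the ~10-15% M3 projection mentioned in this post.

```python
# Geekbench scores as quoted in this thread (not independently verified).
i9_13900k = {"single": 2133, "multi": 23701}
m2 = {"single": 1919, "multi": 8929}

for kind in ("single", "multi"):
    ratio = i9_13900k[kind] / m2[kind]
    print(f"{kind}-core: i9-13900K is {ratio:.2f}x the M2")

# The post's projection: a hypothetical M3 ~10-15% faster than the M2 in single-core.
projected = [round(m2["single"] * uplift) for uplift in (1.10, 1.15)]
print(f"projected M3 single-core: ~{projected[0]}-{projected[1]}")
```

The single-core gap works out to about 11%, and the projected M3 range straddles the i9-13900K's 2133. The roughly 2.65x multi-core gap compares a 24-core desktop chip with an 8-core laptop chip.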


> With 32-bit words, you still need an 8-bit syndrome to achieve standard single error correct / double error detect (SECDED) ECC, so now you're paying for 1.25x capacity.

That 25% overhead is less than $2/GB. That's for all intents and purposes zero cost, except maybe if you are trying to buy a $200 laptop.
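
The "$2/GB" figure rests on simple arithmetic: the extra cost per usable GB is the DRAM price per GB times the ECC overhead. This sketch makes that explicit; the prices are hypothetical placeholders rather than market data, and the helper names are made up for illustration.

```python
def ecc_extra_cost_per_gb(dram_price_per_gb: float, overhead: float = 0.25) -> float:
    """Extra DRAM cost per usable GB when ECC adds `overhead` capacity (0.25 means 1.25x)."""
    return dram_price_per_gb * overhead


def max_price_for_budget(extra_per_gb: float, overhead: float = 0.25) -> float:
    """Highest DRAM price per GB at which the ECC overhead stays within `extra_per_gb`."""
    return extra_per_gb / overhead


# "Less than $2/GB" at 25% overhead implies DRAM priced below $8/GB.
print(max_price_for_budget(2.0))
# A hypothetical $10/GB price (placeholder, not market data) would mean $2.50/GB extra.
print(ecc_extra_cost_per_gb(10.0))
# DDR4-style 72-bit ECC (12.5% overhead) at the same hypothetical price.
print(ecc_extra_cost_per_gb(10.0, overhead=0.125))
```

Whether the claim holds therefore depends entirely on the real per-GB price of the DRAM in question.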

> Apple upended the chip industry with the M1, but AMD and Intel came back swinging, and it seems like Apple now needs to pull another rabbit out of the hat with the M3.

I doubt this i9-13900K will be Intel's base, cheap chip! It can't be compared to the M1 or M2!

> The M3, which rumors say is going to use the M2's microarchitecture on an N3 process and should be released in late 2022 or early 2023, should be ~10%–15% faster than the M2 => GB SC ~2100–2200.

The M3 will be based on the A17, not the M2 architecture, if it comes out in late 2023.

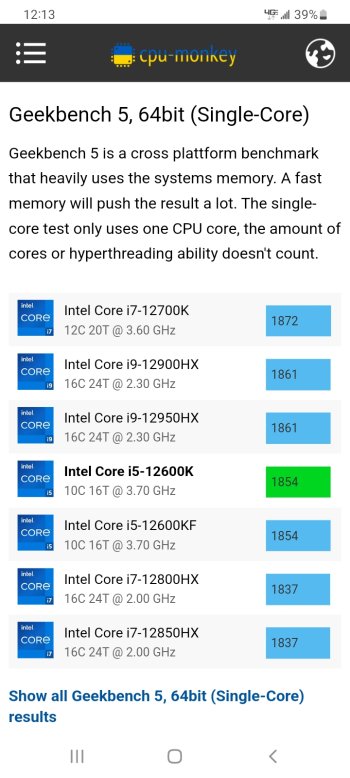

Every single Intel CPU beats the M1 in single-core on Geekbench. The i5, i7, and i9 all score higher than the M1.


> That 25% overhead is less than $2/GB. That's for all intents and purposes zero cost, except maybe if you are trying to buy a $200 laptop.

How did you come up with LPDDR5-6400 being less than $2/GB? I’m seeing more than 5x that for just the ICs.

> The M3 will be based on the A17, not the M2 architecture, if it comes out in late 2023.

The M1 is an A14 derivative, and the M2 appears to be an A15 derivative. Thus, if the standard nomenclature continues, the M3 would be based on the A16, not the A17. However, as I mentioned, current rumors are that the M3 will instead consist of the M2's microarchitecture on an N3 process. I don't know how reliable those rumors are.

> Every single Intel CPU beats the M1 in single-core on Geekbench. The i5, i7, and i9 all score higher than the M1.

I was responding to the OP's post about the SC and MC speeds of the M2, not the M1. I even quoted the OP's post in my post, so that should have been clear.
