Got a tip for us?

Let us know

Become a MacRumors Supporter for $50/year with no ads, ability to filter front page stories, and private forums.

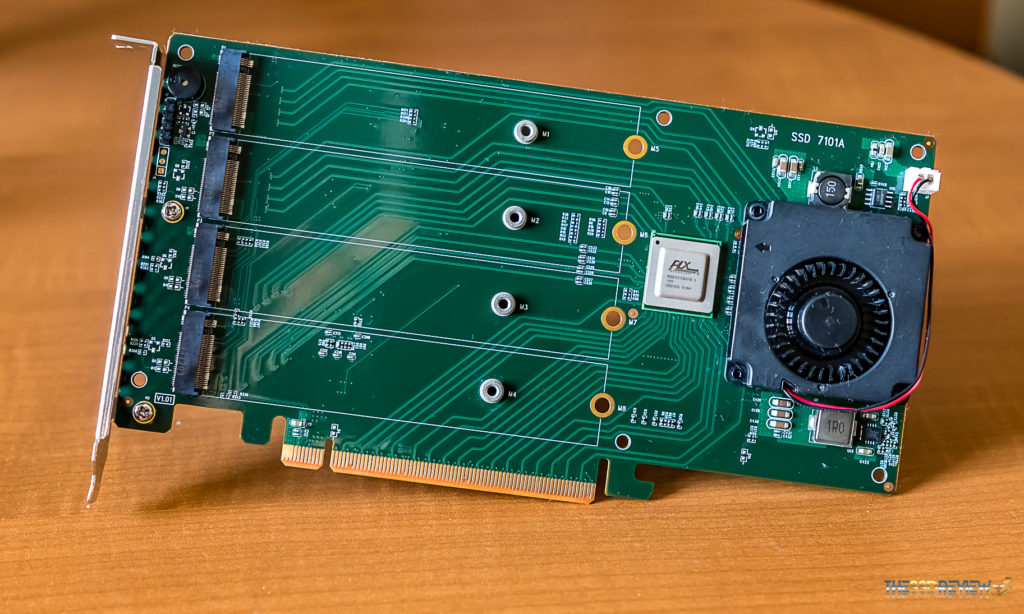

Highpoint 7101A - PCIe 3.0 SSD performance for the cMP

- Thread starter handheldgames


Is there a way to access the Mojave beta to get this firmware? Is it only available to developers at this point?

You can download the Mojave Public Beta.

https://www.macrumors.com/how-to/install-macos-mojave-public-beta/

Any opinions on populating the HighPoint 7101A with four Intel 600p series NVMe M.2 cards? They are QLC (4 bits/cell) but have a big SLC cache, and they cost about half as much per GB as the Samsung 970 EVO/Pro.

Speed-wise, Intel claims 1800 MB/s read and 1500 MB/s write, which is a lot less than the Samsung 970 EVO/Pro!

I looked at the benchmarks for the 760 a third time. In most respects, the drive is a poor performer. Two of these drives in a RAID 0 would have a difficult time matching the performance of Samsung's products from last year.

Maybe ask in the NVIDIA thread at the top of the page? This thread is about an M.2 PCIe carrier card... but don't let me stop anyone who wants to take this thread off topic.

Whoops

Posted to wrong thread


The M.2 format has SATA, AHCI and NVMe blades.

SATA M.2 blades have to be installed on a SATA M.2 PCIe card; this card has a SATA chip to communicate with the SATA blade.

AHCI and NVMe M.2 blades have to be installed on an M.2 PCIe converter card, which is just a form-factor converter.

=============================================================

Old thread, but just to clarify (from bitter experience):

1. SATA M.2 blades: these are often referred to as "B" type in their description.

2. AHCI and NVMe M.2 blades: these are usually referred to as "M" type in their description.

e.g. My Samsung 970 EVO, which I boot High Sierra 10.13.6 from, has an "M" type connector.

Don't be confused if you see TWO M.2 sockets on an M.2 PCIe adapter... if you want to boot from an AHCI or NVMe blade to get those incredible speeds, the blade must be "M" type.


Besides design and price, what practical differences are there between the Highpoint 7101A and the Amfeltec gen 3 x16?

I expect them to have similar performance and noise (similar small fan). The Amfeltec is lower profile but slightly longer (too long to fit in half-length PCIe slots unless you move the fan). The Amfeltec uses a PEX 8732 (32 lanes, 8 ports). The Highpoint's PEX 8747 has more lanes, but I guess having fewer ports makes it less expensive? All that's required for both cards is 32 lanes and 5 ports.

The PEX chips have some other minor differences. The 8747 only allows port widths of x8 or x16 (another reason it's less expensive?). I guess the Highpoint is using x8 ports for the NVMe devices, but NVMe devices are only x4, so it doesn't matter. The 8747 also lacks other features (multi-host, etc.) that are not needed for this application (more reasons it's less expensive?). The 8747 may also have a lower maximum packet latency (100 ns vs 106 ns).
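To make the lane arithmetic above concrete, here is a minimal Python sketch that adds up the lanes and ports a four-SSD carrier card needs. The switch figures (PEX 8732: 32 lanes, 8 ports; PEX 8747: 48 lanes, 5 ports) are the ones quoted in this thread; the x8 downstream ports for the 8747 are the guess from the paragraph above, not a confirmed Highpoint layout.

```python
# Lane/port budget for a PCIe switch carrier card: one x16 upstream port
# to the host slot plus one downstream port per M.2 SSD.
# Switch limits are the figures quoted in this thread.
SWITCHES = {
    "PEX 8732": {"lanes": 32, "ports": 8},
    "PEX 8747": {"lanes": 48, "ports": 5},
}

def budget(upstream_width, downstream_widths):
    """Return (lanes, ports) used by one upstream and N downstream ports."""
    lanes = upstream_width + sum(downstream_widths)
    ports = 1 + len(downstream_widths)
    return lanes, ports

def fits(switch, upstream_width, downstream_widths):
    """True if the port layout stays within the switch's lane and port limits."""
    lanes, ports = budget(upstream_width, downstream_widths)
    spec = SWITCHES[switch]
    return lanes <= spec["lanes"] and ports <= spec["ports"]

# Four x4 SSDs: 16 + 4*4 lanes, 5 ports.
print(budget(16, [4, 4, 4, 4]))
# Assumed 8747 layout: each x4 SSD on an x8 port, 16 + 4*8 lanes.
print(budget(16, [8, 8, 8, 8]))
print(fits("PEX 8732", 16, [4, 4, 4, 4]))
print(fits("PEX 8747", 16, [8, 8, 8, 8]))
```

Under that assumption the 8747's 48 lanes are used exactly (16 + 4 × 8), while the 8732 needs only 32 of its lanes and 5 of its 8 ports.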

I tested the Amfeltec with four 1 TB Samsung 960 Pros in a MacPro3,1 and a Hackintosh, using link speeds of 2.5 GT/s, 5 GT/s and 8 GT/s and slot widths of x8 and x16. The MacPro3,1 reaches up to 5226 MB/s at 5 GT/s. The Hackintosh reaches 6315 MB/s at 5 GT/s and 11141 MB/s at 8 GT/s.
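One rough way to read these results is to compare them with the theoretical bandwidth of each link. The sketch below assumes the best results were measured at x16 (the post does not say which width produced them) and counts only line encoding, ignoring PCIe packet overhead, so 100% is unreachable in practice.

```python
# Theoretical one-direction PCIe link bandwidth in MB/s (10^6 bytes/s).
# 2.5 and 5 GT/s use 8b/10b encoding; 8 GT/s uses 128b/130b.
# Protocol/packet overhead is ignored.
def link_mb_per_s(gt_per_s, width):
    efficiency = 128 / 130 if gt_per_s >= 8 else 8 / 10
    bits_per_s = gt_per_s * 1e9 * efficiency * width
    return bits_per_s / 8 / 1e6

# Results reported above; x16 width is an assumption.
measured = [
    ("MacPro3,1, 5 GT/s x16", 5.0, 16, 5226),
    ("Hackintosh, 5 GT/s x16", 5.0, 16, 6315),
    ("Hackintosh, 8 GT/s x16", 8.0, 16, 11141),
]

for label, speed, width, mb_s in measured:
    limit = link_mb_per_s(speed, width)
    print(f"{label}: {mb_s} of {limit:.0f} MB/s ({mb_s / limit:.0%})")
```

On these assumptions the Hackintosh gets roughly 79% of a 5 GT/s x16 link and roughly 71% of an 8 GT/s x16 link.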

The method to change the link speed (created back in October 2017) is also useful for other PCIe gen 3 cards (usually not graphics cards) that incorrectly boot into 2.5 GT/s mode, such as the GC-TITAN RIDGE (Thunderbolt 3 add-in card).
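The October 2017 method itself is not reproduced here. As background only, this sketch decodes the standard PCIe link fields such a method deals with: Link Status (current speed in bits 3:0, negotiated width in bits 9:4), Link Control 2 (Target Link Speed in bits 3:0) and the Retrain Link bit (bit 5) of Link Control. It does not touch hardware; the register values in the example are made up, and reading or writing the real registers (e.g. with setpci) is left out.

```python
# Speed codes used by Link Status and Link Control 2,
# assuming the port supports every speed up to 8 GT/s.
SPEED_CODES = {1: 2.5, 2: 5.0, 3: 8.0}   # code -> GT/s
RETRAIN_LINK = 1 << 5                    # Link Control bit 5: retrain the link

def decode_link_status(value):
    """Return (GT/s, lane width) from a 16-bit Link Status value."""
    speed = SPEED_CODES.get(value & 0xF)   # bits 3:0, None if unknown
    width = (value >> 4) & 0x3F            # bits 9:4
    return speed, width

def set_target_speed(link_control2, gt_per_s):
    """Return Link Control 2 with Target Link Speed (bits 3:0) replaced."""
    code = next(c for c, s in SPEED_CODES.items() if s == gt_per_s)
    return (link_control2 & 0xFFF0) | code

# Hypothetical readings: a card that came up at 2.5 GT/s x16,
# and one running at 8 GT/s x4.
print(decode_link_status(0x0101))            # (2.5, 16)
print(decode_link_status(0x0043))            # (8.0, 4)
print(hex(set_target_speed(0x0001, 8.0)))    # 0x3
print(hex(0x0040 | RETRAIN_LINK))            # 0x60: hypothetical Link Control with retrain set
```

In general terms, the target speed is raised in Link Control 2 and a retrain is then requested through Link Control bit 5 on the port above the card (root or switch downstream port); whether the link actually comes up faster can be confirmed by decoding Link Status again.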

On a single-SSD basis, a carrier board that links each SSD at PCIe 3.0 x4 (8 GT/s) is about twice as fast as one that links at PCIe 2.0 x4 (5 GT/s): it takes the bandwidth of a PCIe 2.0 x8 connection and assigns it to a PCIe 3.0 x4 SSD.

The latest Mac Pro firmware fixes issues with the Highpoint initializing at PCIe 2.0 x16. Full speed is possible without the need for setpci.

With that said:

1) Amfeltec M.2 2x x4 PCIe 3.0/8 GT/s @ x8: max throughput with 1 SSD at a time is 3200 MB/s; 3200 MB/s with 2 SSDs.

2) Amfeltec M.2 4x x4 PCIe 2.0/5 GT/s @ x16: max throughput with 1 SSD at a time is 1500 MB/s; 6000 MB/s with 4 SSDs.

3) Highpoint M.2 4x x4 PCIe 3.0/8 GT/s @ x16: max throughput with 1 SSD at a time is 3200 MB/s; 6200+ MB/s with 4 SSDs, although 2 SSDs already use up the bandwidth.
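The three options above can be summarized with a simple best-case model: combined throughput grows with each SSD until the card's connection to the slot is saturated. This is a hypothetical sketch; the per-SSD and slot-side caps are the figures from the list, not new measurements.

```python
# Best-case model: n SSDs add up until the slot-side cap is reached.
def aggregate_mb_s(n_ssds, per_ssd, slot_cap):
    """Combined MB/s of n SSDs behind one carrier card (idealized)."""
    return min(n_ssds * per_ssd, slot_cap)

CARDS = {
    # card: (single-SSD MB/s, slot-side cap MB/s), both from the list above
    "1) Amfeltec gen 3 @ x8": (3200, 3200),
    "2) Amfeltec gen 2 @ x16": (1500, 6000),
    "3) Highpoint 7101A @ x16": (3200, 6200),
}

for card, (per_ssd, cap) in CARDS.items():
    curve = [aggregate_mb_s(n, per_ssd, cap) for n in range(1, 5)]
    print(card, curve)
```

With these inputs the model reproduces the list: option 1 stays at 3200 MB/s however many SSDs are added, option 2 scales to 6000 MB/s with four, and option 3 tops out once two SSDs are running. The hard `min()` is a simplification; real curves bend more gradually near the cap.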

It comes down to price and features. If you want something cheap, the PCIe M.2 boards from QNAP may work. I almost bought one before I found a $275 deal on the Highpoint. From what I remember, the Amfeltec 3.0 has a similar price point to the Highpoint.

The Aplicata-Quad-NVMe-PCIe-Adapter is another option: a four-port M.2 adapter with an x8 interface. However, at $449.00 it costs more than the Highpoint and has half the bandwidth.


As an Amazon Associate, MacRumors earns a commission from qualifying purchases made through links in this post.

I agree: 8.0 GT/s × 128b/130b ≈ 2 × 5.0 GT/s × 8b/10b.
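Working that formula through per lane (line encoding only, protocol overhead ignored) shows why a gen 3 x4 SSD link carries roughly what a gen 2 x8 connection does. A small Python check:

```python
# Per-lane payload rate after line encoding, in Gb/s.
GEN2_LANE = 5.0 * 8 / 10      # 5 GT/s with 8b/10b
GEN3_LANE = 8.0 * 128 / 130   # 8 GT/s with 128b/130b

ratio = GEN3_LANE / GEN2_LANE
gen3_x4 = 4 * GEN3_LANE
gen2_x8 = 8 * GEN2_LANE

print(f"per-lane ratio gen3/gen2: {ratio:.3f}")
print(f"gen3 x4: {gen3_x4:.1f} Gb/s, gen2 x8: {gen2_x8:.1f} Gb/s")
```

The ratio comes out just under 2 (about 1.97), and a gen 3 x4 link (about 31.5 Gb/s) lands just below a gen 2 x8 link (32 Gb/s).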

Regarding 1) (Amfeltec 2x x4 gen 3 @ x8): two SSDs do not increase speed because the 5 GT/s x8 of the slot is the same as the 8 GT/s x4 of one NVMe SSD. If your slots are x16, be sure to get the x16 version of the Amfeltec gen 3.

Regarding 2) (Amfeltec 4x x4 PCIe 2.0 @ x16): here the SSDs are gen 2 (is there such a thing?), or you are using a gen 2 Amfeltec instead of the gen 3 from the first example.

Regarding 3) (Highpoint @ x16): it might take a little over 2 SSDs to use the entire bandwidth of the 5.0 GT/s x16 slot. In my Hackintosh test, I got an extra 224 MB/s by adding a third SSD (up from 6091 MB/s to 6315 MB/s).

I'm using the "x4 PCI Express to M.2 PCIe SSD Adapter" from StarTech for my MacPro3,1 Thunderbolt 3 tests. No switch is necessary because Thunderbolt 3 controllers provide PCIe 3.0 links. No heatsink is necessary because the Thunderbolt PCIe enclosures have fans.


Some additional insight...

Speed comes with a price: fan noise. The expression "silence is golden" clearly does not apply to the 7101A. My Mac Pro used to be silent with the Amfeltec Squid; not any more.

Whilst the small fan on the card does a great job of cooling the SSD, the noise it makes pushing air through a low-profile, channeled heatsink cover could make some users cringe.

View attachment 769749

Amfeltec Squid for comparison

View attachment 769750

Perhaps I'm missing something, but with 4 sticks, the Amfeltec Squid seems like it's faster and quieter, and now with the latest Mojave bootrom, is also bootable. Is there any reason to go for the Highpoint?


The Squid is costlier than the SSD7101A. The Squid is a double-faced card and will get you in trouble with some GPUs; the RX-580 is one.

Edit: removed incorrect info.


That's super useful. But if you don't have the double-face issue, and you are going for max capacity/max speed (and you don't mind paying more), it seems like the Squid gets you there faster and quieter...

If you are going to use 4 blades at the same time and have a slim GPU, the Squid is better than the SSD7101A; in any other situation, the SSD7101A wins.

Both cards have the same PLX switch, so throughput is similar. The Squid is more mature; with time, throughput and support will be the same.


I may easily be misunderstanding big parts of the thread, but it seems to get full performance out of the 7101, you need to run some scripts and modify kexts or other things, whereas the squid just gets to those high speeds with no modifications of the OS.


Not any longer, if you are on Boot ROM 138 or later.

Here, you are comparing the PCIe 2.0 Amfeltec (no fan), instead of the PCIe 3.0 Amfeltec (with fan), to the PCIe 3.0 Highpoint.

The Squid is double-faced, but the PCB is offset, so there should be no interference unless your M.2 drives have overly tall heatsinks. Is there a post describing this problem with the RX-580?

I haven't seen any evidence that one is better than the other (I am referring to the gen 3 Amfeltec). Are you referring to the gen 2 or gen 3 Amfeltec?

It's a bad combo, since the Sapphire RX-580 is more than 2.0 slots wide; see post #23 here.

I personally don't have either of these cards, but I installed both Squids and the SSD7101A for friends and clients. When I buy one, it will be the SSD7101A. I hate myself for missing the June/July Amazon promo.

The form factor of the SSD7101A is, IMHO, better than the one used by the Squid. If I'm not wrong, the PLX switch used by the SSD7101A is newer too. The Squid is more mature and has better driver support on Windows/Linux, but given time, the SSD7101A will get there.

Nowhere in that thread is the Amfeltec mentioned, but the HighPoint 7101A is at #11. It seems that it's the RX580 that is exceeding PCIe specs, not the Amfeltec or Highpoint.

The SSD7101A is simpler, having all the M.2 slots on the same side of the board.

The Amfeltec uses both sides of the board to make it low profile, which is a benefit to some (low-profile PCs). The offset and double-sided PCB may add expense. The differing orientation of the M.2 slots on each side makes non-NVMe applications more complex (for example, when attempting to use NVMe-to-PCIe cables or adapters).

What drivers? The PLX chip is handled by the standard PCIe drivers, isn't it? There aren't any other addressable components on the cards that could be affected by drivers, are there? What does a driver do that the OS does not?


My answer was about the GPU ZombiePhysicist has, not the PCIe NVMe cards. With an MP5,1, any GPU wider than 2.0 slots will be in trouble with the Squid, and a little less so with the SSD7101A.


Again, we are talking specifically MP5,1 here.


You can use the standard macOS drivers, or install the manager and drivers for 10.12/10.13. Take a look here: http://highpoint-tech.com/USA_new/series-ssd7101a-1-download.htm

You can use the manager to select RAID mode/type, mount/unmount, etc. Hot plug is not supported with the HighPoint macOS drivers.


It could just be a software RAID. The kext matches the PCIe class code of NVMe devices. Does it operate with any set of NVMe devices or just the ones on the Highpoint card? If it were a hardware RAID, wouldn't the individual NVMe devices be hidden? It might be interesting to try this driver with the Amfeltec installed. I would like to see an IORegistryExplorer.app save file for this configuration, or at least a screenshot, to see how the driver relates to the devices.
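To illustrate the class-code point: a driver that matches on the PCI class code alone would attach to every NVMe controller, whichever carrier card it sits on. This is a hedged sketch; the value/mask pair imitates the style of an IOKit `IOPCIClassMatch` entry but is an assumption, not taken from the HighPoint kext, and the device register values are hypothetical.

```python
# PCI config offset 0x08 holds the revision ID (bits 7:0) and the
# class code (bits 31:8). NVMe = base 0x01 (mass storage),
# subclass 0x08 (non-volatile memory), programming interface 0x02.
NVME_CLASS = 0x010802

def class_code(reg_0x08):
    """Extract the 24-bit class code from the class/revision register."""
    return (reg_0x08 >> 8) & 0xFFFFFF

def matches(reg_0x08, value=0x01080200, mask=0xFFFFFF00):
    """Value/mask match that ignores the revision byte (assumed style)."""
    return (reg_0x08 & mask) == (value & mask)

# Hypothetical devices: two NVMe SSDs on different carriers, one GPU.
devices = {
    "SSD behind Highpoint switch": 0x01080200,
    "SSD behind Amfeltec switch": 0x01080201,
    "GPU (display controller)": 0x03000000,
}

for name, reg in devices.items():
    print(name, hex(class_code(reg)), matches(reg))
```

Both SSDs match and the GPU does not, so a class-code match by itself says nothing about which card an SSD is on.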

The 3.0 Squid was not available for my tests.

The double-sided Squid barely fits next to a 2.0-slot-wide card.

Adding heatsinks to the Squid makes it even wider. The lack of space around the double-sided card defeats any airflow and interferes with the cards on both sides of the Squid.

I had the Amfeltec Squid 2.0, and based on its inferior (or absent) SSD cooling and its space-cramping design, which is focused on half-height server installations, I honestly would never recommend the product for a Mac Pro. The width issues, the lack of cooling and the lack of space for heatsinks are areas where the Highpoint is superior to the Squid. While I would like to send money to Canada, from a hardware design perspective I'm troubled by the Amfeltec design.

I’m glad you are having good success with the Amfeltec product. I tried to like the product, but the negatives outweigh the positives.


I just wanted to make sure the two (or three) cards were not mischaracterized. You've done an excellent job of pointing out the physical differences. I don't expect to see significant performance differences between similar-generation cards (disregarding throttling that may occur with inadequate cooling). I still wonder about the Highpoint RAID controller marketing, though: is there an actual RAID controller?


I've only tested Highpoint's initial macOS driver release. In that version, the webdriver setup for the RAID controller did not function on the 2009 cMP. While the Highpoint NVMe driver worked and successfully booted macOS, its 4K and large-file performance was inferior to Apple's NVMe drivers for single-drive systems.

While there are users out there who have reported using Highpoint's recent drivers for multi-disk RAID systems, that's not me. I would appreciate a reply from anyone with experience with this.

While the following links are geared towards the PC/Windows implementation of the SSD7101-a, they establish a level of expectation for the product and the supplied driver.

Tom's Hardware Review

TheSSDReview.com Coverage

Introduction

Stumbling onto an Amazon Warehouse deal, I picked up the HighPoint 7101A NVMe PCIe adapter, equipped with a Mac Pro-friendly Broadcom PEX 8747 PCIe switch, at a great price. While Highpoint's acknowledgement of Mac Pro compatibility was sketchy at best, I ordered one to go along with a recently acquired Samsung 970 Pro NVMe boot drive.

Some Images

PEX 8747

48-Lane, 5-Port PCI Express Gen 3 (8 GT/s) Switch, 27 x 27mm FCBGA

The ExpressLane™ PEX 8747 is a 48-lane, 5-port, PCIe Gen 3 switch device

Technical Specification Document link

On to the results

Let the numbers speak for themselves. I'm VERY impressed with the near-PCIe 3.0 performance on an '09 cMP with the Highpoint 7101A PCIe SSD adapter and the Samsung 970 Pro. Yes... read performance has almost doubled, from 1500 MB/s to 3000 MB/s. Writes are up 50%.

Taking a look under the hood: the 7101A links to each PCIe SSD at PCIe 3.0 x4 (8.0 GT/s) and to the Mac Pro over the slot's x16 PCIe 2.0 connection, which leaves enough headroom for a single SSD to run at close to PCIe 3.0 speeds.
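A path-bottleneck view of why the switch helps a single SSD: a transfer crosses the SSD-to-card link and the card-to-slot link in series, and the slower one sets the ceiling. This sketch assumes a plain x4 M.2 adapter in a cMP PCIe 2.0 slot for comparison and counts only line encoding, not protocol overhead.

```python
def link_mb_s(gt_per_s, lanes):
    """Theoretical one-way MB/s of a PCIe link after line encoding."""
    efficiency = 128 / 130 if gt_per_s >= 8 else 8 / 10
    return gt_per_s * 1000 * efficiency * lanes / 8

# Links a transfer to one SSD crosses, in series (assumed layouts).
PATHS = {
    # plain adapter: the SSD itself links to the 2.0 slot at 5 GT/s x4
    "plain x4 adapter": [link_mb_s(5.0, 4)],
    # 7101A: SSD to switch at 8 GT/s x4, switch to slot at 5 GT/s x16
    "7101A with switch": [link_mb_s(8.0, 4), link_mb_s(5.0, 16)],
}

for name, links in PATHS.items():
    print(f"{name}: ceiling about {min(links):.0f} MB/s")
```

The ceilings come out at about 2000 MB/s and about 3938 MB/s. The measured 1500 MB/s and 3000 MB/s sit below them, as expected once protocol overhead and the SSD's own limits are counted.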

If there is a Holy Grail of PCIe SSD adapters for the cMP, this may be the one.

View attachment 767207

970 Pro in a standard M.2 x4 adapter with a heatsink.

View attachment 767208

970 Pro in the 7101A.

View attachment 767209

Whilst the 7101A usually costs $400, a limited quantity can be found at enthusiast pricing on Amazon for $275.

It’s clear the HighPoint 7101A brings a new level of PCIe NVMe / AHCI SATA Express performance to the 4,1/5,1 that’s unattainable with any other controller.

I was able to tear down the Amfeltec Squid 2.0 x4 this morning, removing the 3 SM951s and their heatsinks, which are set up as a software RAID. Moving them across to the 7101A brought some interesting results to light:

Addressing a single SSD at a time, the Highpoint 7101A is MUCH FASTER than the Amfeltec Squid, allowing near-PCIe 3.0 speeds from our aging Mac Pro.

Using a software RAID 0 set, the Highpoint 7101A is about 25% SLOWER than the Amfeltec Squid. Perhaps I need to reformat the array with a different block size.
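On the block-size question: in RAID 0 the set is split into fixed-size stripes dealt out round-robin across the member disks, so the stripe (block) size decides how many SSDs a single request keeps busy. A generic sketch, assuming textbook round-robin striping; it is not a description of how macOS's software RAID lays out data, and the 64 KiB request size is arbitrary.

```python
# Textbook RAID 0 mapping; stripe_size is the set's "block size".
def locate(offset, stripe_size, n_disks):
    """Map a byte offset in the set to (disk index, offset on that disk)."""
    stripe = offset // stripe_size
    disk = stripe % n_disks
    disk_offset = (stripe // n_disks) * stripe_size + offset % stripe_size
    return disk, disk_offset

def disks_touched(offset, length, stripe_size, n_disks):
    """How many member disks one request of `length` bytes hits."""
    first = offset // stripe_size
    last = (offset + length - 1) // stripe_size
    return min(last - first + 1, n_disks)

KiB = 1024
# One 64 KiB request on a 3-disk set at different stripe sizes.
for stripe in (16 * KiB, 32 * KiB, 128 * KiB):
    n = disks_touched(0, 64 * KiB, stripe, 3)
    print(f"{stripe // KiB} KiB stripe -> {n} disk(s)")
```

Small stripes spread each request over more SSDs (good for large sequential transfers), while large stripes keep small requests on one SSD; which setting suits these benchmarks would have to be measured.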

Let's start with Blackmagic Disk Speed Test:

Highpoint 7101A 3xSM951

View attachment 767333

Highpoint 7101A 2x SM951 (FASTER THAN 3x SM951 in the 7101A)

View attachment 767369

Amfeltec Squid - 3xSM951

View attachment 767336

AmorphousDiskMark (FREE, GO GET THIS ONE)

Highpoint 7101A 3xSM951

View attachment 767337

Highpoint 7101A 2xSM951

View attachment 767371

Amfeltec Squid - 3xSM951

View attachment 767340

QuickBench is another great test to show bandwidth. Let's look first at the 4K to 1M tests:

Highpoint 7101A 3x SM951

View attachment 767343

Highpoint 7101A 2x SM951

Amfeltec Squid - 3xSM951

View attachment 767344

Let's look at some larger file transfers.

Highpoint 7101A 3xSM951

Amfeltec Squid - 3xSM951

Hello, thank you for sharing. I'm planning to buy one like this. I saw their official website provides a driver for macOS; is that necessary? And did you boot the system from an NVMe drive on this card? Thank you.
