James, my man, this is MacRumors. “Negativity” is the default for many. There’s pricing resentment, would-be design savants know what Apple should design, and almost any new product is derided as a failure before release. (Mixed-reality headsets are already near-universally hated, and they won’t even arrive before summer!! Laughs.) I’ve read Marxist claims on these forums about how exploitative Apple is, and silly propaganda that Apple is supposed to produce products for the masses. (Thankfully, they do not.) Siri, as you note, cannot compete with ChatGPT. AI is dangerous, exciting, inspiring, and a threat to many jobs. BRING IT!!

Lots of negativity in here. I find ChatGPT to be pretty cool, and unlike the internet-based AI attempts of the last decade, it seems people haven’t managed to immediately turn it into a racist fascist.

It’s a hell of a lot better at answering complicated questions than Siri is.

How to Access ChatGPT From Your Mac's Menu Bar

- Thread starter MacRumors

I program for a living and immediately found it useful as a rubber duck, or for finding the one stupid thing I missed in a function. Don’t dump huge chunks of your code into it, however; that could be a security risk. Just sayin’. I love it, but it won’t replace me any time soon, as it is stupid and recreates a lot of s&^t code you could copy and paste from another source. It doesn’t replace true understanding. You have to have skill and brains to make it work. So fear not, fellow programmers.

I’m betting your skepticism is premature. Yes, in the short term AI may not be an immediate threat. But people all over the net are posting examples of AI-produced, limited coding solutions in professional settings. There is little doubt that when ChatGPT 4 arrives, it will accelerate the current momentum. I’m going out on a limb here and suggest that at least 25% of all coding currently written by humans will be done by AI in less than a decade. (Maybe much less than a decade, and at a much higher rate!) Let’s meet back here on March 5, 2028, at the halfway point and see what’s up!

Dannys, my man. One of the great myths on this forum is that members are pro-tech. Most are fearful of any new product or tech. Few embrace new technology or systems. They are, in a word, “irrelevant,” and will be swept away by the millions in the near term. (I’m thinking less than 20 years.) Commentary here will then be without all the drama and fear, and the world will be a better place. Laughs!

Bizarrely negative responses in here from a supposedly tech-oriented crowd.

How are you even going to quantify that? This is impossible to prove or disprove.

Unless your claim is “at least 25% of software developers lose their jobs in less than a decade”?

Yes, you’re correct: I have no data. It’s a gut call, and therefore meaningless unless it comes true. I have read several prognosticators suggest higher numbers than 25%. That said, I’m glad to see coding now shifting to AI. Most coders in my experience are not creative. I remember the title of an InformationWeek cover from the early 2000s: “The ‘Just Say No’ Culture of IT.” It was a spot-on description of what I experienced at two Fortune 50 companies I worked at during that period. We accelerated the move to the cloud for efficiency, in part due to the intransigence of IT workers! No matter when it arrives, I’ll be glad to see the exit of human coders.

Just a few examples of playing around with it.

-Asked it to invent a cocktail for me which could be from a James Bond movie.

-Asked it to give me five different ways to say "I want to pay by card" in Spanish for my next trip.

-Told it what I had in my fridge and asked what I could cook with it.

-Asked it to pretend there were five more ancient Wonders of the World: what could they have been?

-Asked why my basil plants never last for long.

-Asked it to pretend to be a dog and to write me a poem about bones.

-Asked it which current singer could replace Freddie Mercury if Queen wanted to perform again.

-Asked it to write me a short story for my little daughter about an elephant and his mouse friends.

-Asked it to help me write a nice card for my friends' new home.

-Asked it to help me spice up some recipes I always make.

-Asked for some suggestions for my next Vespa scooter trip, no more than 4 hours. Got some nice ideas, asked for more details on one of the suggestions, and got really great ideas.

-Asked how to build a bridge from just six sheets of paper: how to fold them and how to lay them so the bridge can hold a cup of water.

-Asked it to invent some physics experiments for the kids using just household items.

Some of it was just for fun, some of it was really useful!

Please, SBLue: your fun tone, your attitude, and your embrace of this exciting new tool seem soooo out of place in this forum. Are you feeling OK? Laughs!!!

Just because people don't lap up everything that companies ever release and herald it as the next coming of Techno-Christ doesn't mean we're all a bunch of pessimistic ******s. There are blatantly obvious problems with the current slew of AI tools and the companies releasing them, and to shut your eyes and pretend they don't exist is dumb. These are complex topics. These tools could make things a lot worse for a lot of people, even if they do improve life for others. It's not the craziest idea to approach threads like this with a bit of nuance in your opinions.

Yes, you’re correct: I have no data. It’s a gut call, and therefore meaningless unless it comes true.

It's meaningless even if it does "come true", because it'll still be impossible to prove or disprove.

My eyes are wide open. I was amused by your Techno-Christ characterization. Yes, these tools could make things worse for a lot of people. They’ll have to deal with it. You work on the “blatant and obvious problems”; I’m running with the possibilities. An exciting, cruel, and empowering future is coming! Many will be washed away. Others will thrive. Bring it!!

Actually, AI will have a far greater impact on civilization than Christ did!!! And we’ll all be better off!

That's a pretty callous approach to human suffering, but not unsurprising from the AI-enthusiast crowd.

Well, you can totally avoid giving it confidential data, just as you would when dealing with a contractor, an assistant, tech support, or anything else that doesn’t need the confidential data… My point was more of an example: imagine that someone asks you to collate “all the cities mentioned in a YouTube video, ordered and labeled by population.” It can and will excel at that.

You’re giving away possibly confidential data by doing that.

Not a good idea if you live in an authoritarian country.

Yesterday somebody posted their ChatGPT Python code checker on Reddit.

Programmers downvoted and criticized the hell out of it: “Where do you store the data we upload to your system?”

No answers given.

You do not share commercially sensitive data with these things.

Have some of you not learned from the last 20 years of data controversies? Just because some company says they respect your data doesn’t mean they really do, especially when it comes to machine learning and scraping data from everywhere.

Just one tiny example, if you’ve got time to spare; it’s the shortest one I have found, less than 5 minutes. I’m just trying to see if there’s a middle ground over here between “it’s useless” and “it’s the second coming of Christ”:

But I totally understand that not everybody wants to use different tools, especially experimental ones, when each minute is precious.

It’s just that I believe saying “it doesn’t work” is different from saying “I don’t feel like using it”. I don’t use Windows 95% of the time and even avoid it, but that’s because I prefer something else that works better for me; for all practical purposes, Windows does the job too.

Sorry, a longer post, I'm playing catch-up.

Overall, I think ChatGPT is decent for what it can do, but it's not supposed to be able to do everything, and a lot of the negativity appears to come from people not actually using it for what it's designed for.

I see a lot of people very proud of tricking it into proving it's not a human being, even though when asked for a personal opinion it tells you it's not a person, so why do people think it's trying to trick them?

It tells you up front that it's not great at math; just ask it (or read the intro). Proving it's not great at math isn't that big a win.

Of course, for anything important you always need to double- or triple-check its facts, but you really need to do that with Wikipedia and even proper medical information sites; there's a reason why you should usually get a second opinion from a human medical doctor when you're dealing with something serious.

Is it as smart as a 5-year-old? I guess, if the 5-year-old speaks multiple languages fluently, can translate between them, and can write high school and university exams and maintain a pretty solid "B" in most subjects except math (though it got a "B" in astrophysics). It has a better knowledge base than most of the people I know, on almost any topic. So if you want to chat with someone polite and well-spoken who knows more about almost anything than most people you know, why not?

Does it get the timeline wrong because it doesn't know Avatar 2 is out? Yes. This isn't a "gotcha"; its dataset stops at 2021, so when you ask the question, knowing that basic fact, you can expect timeline-related questions to be answered as if it were 2021.

"Stress-testing" it and proving it's inadequate at something doesn't make it useless; that's like stress-testing a dozen cars and trucks by driving them into walls and saying you're never going to use them because they can be driven into walls, by choice or by accident, tossing aside all the things they do really, really well if you operate them safely.

I retired in June after working in a small DTP department with an open-office design. During the day we'd often chat about various topics while we waited on our computers, and in the last few years, when we were down to a half-dozen people, chatting with them proved entertaining and educational. I worked for a translation company, and probably worked with people from almost every country in the world at one time or another. Our conversations ranged far and wide, and not everyone pretended to know everything, so there'd be honest discussions and cross-learning.

After the tests I've done so far, I view ChatGPT as an intelligent, well-versed, well-spoken co-worker. I find I can ask it for general information on almost any topic and get a clear, condensed answer; if it's good enough, I can ask it to elaborate or tell me where to get more detailed information. I asked it which planets or moons in the Solar System would be best for humans to terraform, and it gave me a very solid answer, adding the general methods that might be used to terraform each, and so on.

Do I want to use it to replace human interaction? Heck no, but that's not what it's for, really. Still, I'd rather chat with this than most of the random folk on the internet.

I haven't seen it do anything I'd consider any worse than what I've seen thousands of people on the internet do, and overall I think it's behaved better.

I doubt I'd ever ask it to operate anything in my home, but to me, that's not what I'd want it for.

I'd love to have it running on an iPhone or iPad next to my computer while I'm working on personal projects, with a 3D animated avatar, using Whisper technology and artificially generated voices to allow me to talk to it while I work, possibly with different versions and "personalities" depending on the day of the week or what I was doing.

This is a solid take on what I think about it too, put concisely into words (I actually suck at that).

First time hearing about “Whisper”; search results mention it’s for “hearing”… so that would be for the AI system to hear you out, a voice AI to speak back to you, ChatGPT for the script and, heck, toss in InvokeAI/Midjourney/etc. for picturing ideas from those scripts back to you too.

…and RadioGPT for music during the coffee and lunch breaks 😅

Writing some of these down to expand on ideas of what/how to ask it; I found them quite creative prompts to throw at it.

Thanks for the share.

Whisper is OpenAI's speech-to-text software. The model is open source, and at least one Mac user has written a nifty (and cheap) transcription app that so far seems to work very well for me. So far I've only tested it on some of my 3D animations and a couple of MP3 songs, and I've been surprised at how well it did.

Introducing Whisper

We’ve trained and are open-sourcing a neural net called Whisper that approaches human level robustness and accuracy on English speech recognition.

openai.com

ChatGPT doesn't "know" anything. It's basically a more complicated version of autocomplete, just stringing together words that are statistically likely to follow each other.
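As a rough illustration of the "statistically likely to follow each other" idea, here is a toy bigram autocomplete in Python. It is only an analogy, not how ChatGPT works internally (ChatGPT uses a large neural network, not a word-pair lookup table), and the tiny corpus and the function names `train_bigrams` and `autocomplete` are made up for this sketch.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, how often each following word appears (toy model)."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def autocomplete(counts, start, length=5):
    """Greedily append the most frequent follower of the last word."""
    output = [start]
    for _ in range(length):
        followers = counts.get(output[-1])
        if not followers:
            break  # no statistics for this word, so stop early
        # most_common(1) returns [(word, count)]; ties go to the first word seen
        output.append(followers.most_common(1)[0][0])
    return " ".join(output)


# Hypothetical toy corpus, purely for illustration
corpus = (
    "the cat sat on the mat . the cat ate the fish . "
    "the dog sat on the rug ."
)
model = train_bigrams(corpus)
print(autocomplete(model, "the"))
```

On this tiny corpus the sketch prints "the cat sat on the cat": each word is a statistically likely follower of the one before it, yet the sentence as a whole is nonsense.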

So this long, crazy, almost psychotic rant is just a form of sophisticated autocomplete? Just some words thrown together from a database according to some clever statistics?

I think in this case one could make the argument that maybe humans also have a large database in their brain, where they throw together words according to their many years of "training".

That's Bing AI, not ChatGPT. I'm not familiar with how that works, or why it behaves that way.

But it's true we don't actually know exactly how the human brain generates language. I don't think organic brains quite have a database the same way a computer program does, but our knowledge and memories are built up from countless interactions with our environment. I don't think we will be able to get computers to replicate human thought process, mainly because we don't know exactly how it works, so how can we replicate it? AI is a different process from the human brain, but one that is now able to produce output close to human language -- that is, a different way to achieve the same/similar result.

Used it once; it probably gets it right 80% of the time. But just like Siri, pushing it beyond 80% yields ever-diminishing returns on significant effort.

And I've got to feed it with my data. People are probably feeding it with all kinds of private information in the vain hope of some genius answer (that never comes).

Bing AI is heavily based on ChatGPT. They are, for all intents and purposes, the same thing; Bing AI just has access to the wider internet and more up-to-date information (even though it often gets hung up on outdated info).

That's a pretty callous approach to human suffering, but not unsurprising from the AI-enthusiast crowd.

But this happens with EVERY advancement humans have made since the Industrial Revolution. Every 10 years you can find a development that renders certain skill sets either useless or undesirable. Every single tool, invention, and step forward has done that to some humans somewhere; you have to adapt, and it opens up new jobs and opportunities. Should we do away with the internet now because it has largely rendered the high street and shopping null and void, meaning retail jobs are drying up? I don't know why I've offered one example when you could offer millions from the last 120 years.

Bing AI is based on Chat GPT 3.5, not the latest public version, and as such is less "ready for primetime".

The streaming show "Nothing, Forever" ran live for three months without a single content issue (other than the quality of the comedy) until GPT-3 was down for a bit and the producers decided to let it use an earlier version, which resulted in some offensive dialogue and the series being suspended.

Nothing, Forever - Wikipedia

Bing is using an earlier version of Chat GPT, but it's also important to consider implementation; my impression is that Bing has nowhere near the safety guardrails that Chat GPT 4 has.

I believe the dataset for Chat GPT runs about 600 GB; if I were going to use it daily, I think my implementation (if eventually possible) would be to download the full dataset onto a 2-terabyte SSD with all the guardrails in place and let it exist apart from the internet, updating the dataset periodically. I could be wrong, but I think Bing, still in the testing phase, is being influenced by people "stress testing" it.

Exactly. I've been using my computers for over 40 years, and I've seen very, very little that computers can do that humans can't. Computers just do it much, much faster. I used to tell clients that the computer doesn't do the work, I do. The computer just helps me make my mistakes much more quickly, but it also helps me catch and fix them more quickly, and at the end of the day I have better-quality work and more of it.

I think that's one of the things people need to understand about technology like Chat GPT: if you want it to write your paper for you or do your job, and assume it'll do it perfectly, you're using it wrong and will no doubt be let down; but if you use it to *help* you write your paper or *help* you do your job, keeping in mind its limitations, you'll probably be very happy.

One of the first things I printed on my new computer in 1984 was a mantra I'd read: "This poor computer can't do what I want it to, only what I tell it to." I think that's mostly still true.

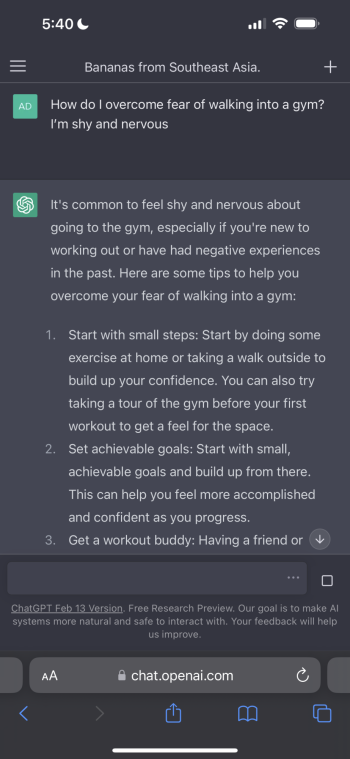

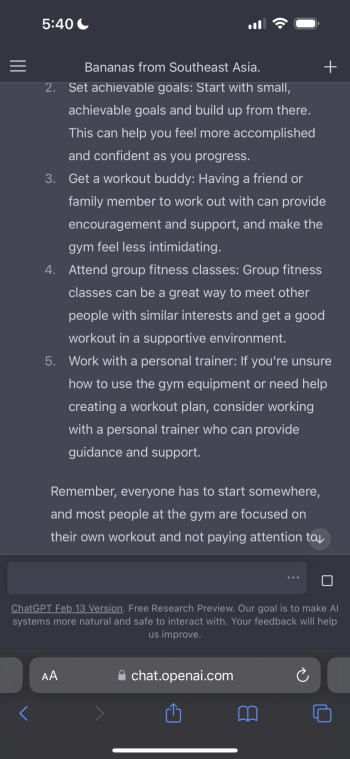

I was actually impressed that it provided advice in list form. Can't wait to see what the future holds. I can see it becoming a tool for bouncing ideas and questions back and forth.
