Sure, but that's just what I'm saying: modularity has its own costs, and that's one reason Apple might choose not to go with a modular RAM system. They still might, but they might not. Because again, it'll likely have to feed the GPU too.

I think just filling up all the slots is going to be easier than dealing with this lol.

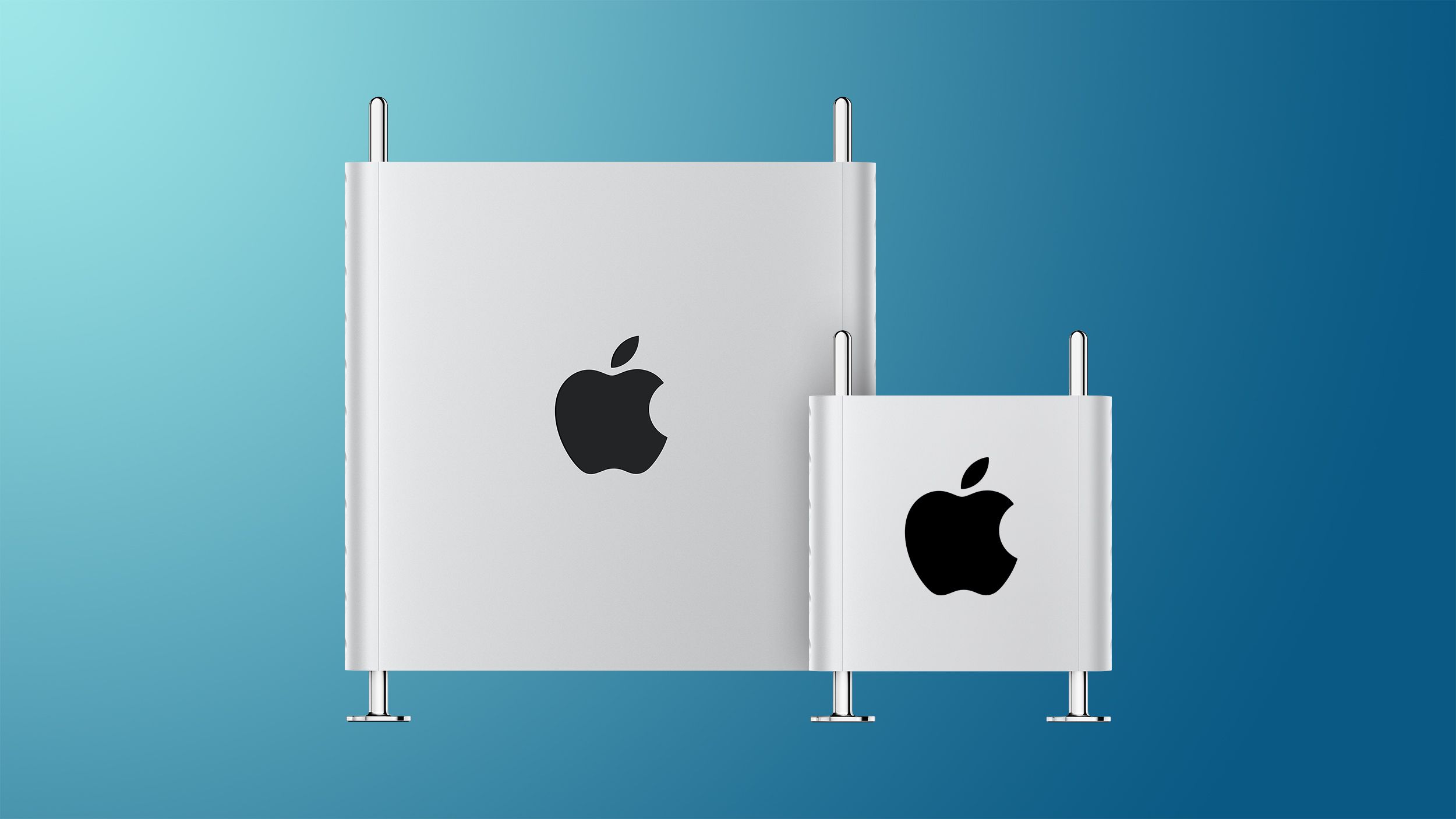

I think Apple might have had an Ice Lake Mac Pro planned initially, but it may never see the light of day.

The AS "Mac Pro" could end up being an entirely new system, and Apple might as well drop the Mac Pro name. They can just settle for a maximum memory configuration of either 256GB or 512GB (if they manage to double the density next year) on 4x Jade-C.

For Ice Lake, in reference to our earlier discussions about Intel and leaked names being a pretty good indication of what's coming down the pipe: generally, when a product code name has shown up in macOS/Xcode, that product has been released relatively soon after.

This is from just earlier this year:

2022 Mac Pro Rumored to Use Intel's Ice Lake Xeon W-3300 Chips

A new Mac Pro that's coming in 2022 is set to use Intel's Ice Lake Xeon W-3300 workstation chips, according to an Intel leaker that WCCFtech says has offered reliable information on Intel Xeon chips in the past. Intel's W-3300 Ice Lake CPUs are set to launch in the near future, and there have...

In the initial transition video, Tim Cook referenced releasing new Intel hardware, an Intel leaker said there will be one, and Xcode recently gained a reference to it. Yes, Apple could in theory change its mind, but that's pretty strong evidence that an Ice Lake Mac Pro is coming. Historically, that confluence of evidence was pretty much a guarantee of a product.